diff --git a/.github/actions/setup-sonar-tools/action.yml b/.github/actions/setup-sonar-tools/action.yml

index d791c120bf..154824c70a 100644

--- a/.github/actions/setup-sonar-tools/action.yml

+++ b/.github/actions/setup-sonar-tools/action.yml

@@ -16,7 +16,8 @@ runs:

- name: Install sonarcloud tools

run: |

- sudo apt-get install nodejs curl unzip \

+ sudo apt-get update \

+ && sudo apt-get install -y nodejs curl unzip \

&& curl --create-dirs -sSLo $HOME/.sonar/sonar-scanner.zip \

https://binaries.sonarsource.com/Distribution/sonar-scanner-cli/sonar-scanner-cli-$SONAR_SCANNER_VERSION-linux.zip \

&& unzip -o $HOME/.sonar/sonar-scanner.zip -d $HOME/.sonar/ \

diff --git a/documentation/ExampleJax.ipynb b/documentation/ExampleJax.ipynb

index 1899305b67..c9fbb589e5 100644

--- a/documentation/ExampleJax.ipynb

+++ b/documentation/ExampleJax.ipynb

@@ -46,10 +46,10 @@

"output_type": "stream",

"text": [

"Cloning into 'tmp/benchmark-models'...\n",

- "remote: Enumerating objects: 336, done.\u001b[K\n",

- "remote: Counting objects: 100% (336/336), done.\u001b[K\n",

- "remote: Compressing objects: 100% (285/285), done.\u001b[K\n",

- "remote: Total 336 (delta 88), reused 216 (delta 39), pack-reused 0\u001b[K\n",

+ "remote: Enumerating objects: 336, done.\u001B[K\n",

+ "remote: Counting objects: 100% (336/336), done.\u001B[K\n",

+ "remote: Compressing objects: 100% (285/285), done.\u001B[K\n",

+ "remote: Total 336 (delta 88), reused 216 (delta 39), pack-reused 0\u001B[K\n",

"Receiving objects: 100% (336/336), 2.11 MiB | 7.48 MiB/s, done.\n",

"Resolving deltas: 100% (88/88), done.\n"

]

@@ -557,8 +557,7 @@

"clang -Wno-unused-result -Wsign-compare -Wunreachable-code -fno-common -dynamic -DNDEBUG -g -fwrapv -O3 -Wall -isysroot /Library/Developer/CommandLineTools/SDKs/MacOSX13.sdk -I/Users/fabian/Documents/projects/AMICI/documentation/amici_models/Boehm_JProteomeRes2014 -I/Users/fabian/Documents/projects/AMICI/python/sdist/amici/include -I/Users/fabian/Documents/projects/AMICI/python/sdist/amici/ThirdParty/gsl -I/Users/fabian/Documents/projects/AMICI/python/sdist/amici/ThirdParty/sundials/include -I/Users/fabian/Documents/projects/AMICI/python/sdist/amici/ThirdParty/SuiteSparse/include -I/opt/homebrew/Cellar/hdf5/1.12.2_2/include -I/Users/fabian/Documents/projects/AMICI/build/venv/include -I/opt/homebrew/opt/python@3.10/Frameworks/Python.framework/Versions/3.10/include/python3.10 -c swig/Boehm_JProteomeRes2014_wrap.cpp -o build/temp.macosx-13-arm64-cpython-310/swig/Boehm_JProteomeRes2014_wrap.o -std=c++14\n",

"clang -Wno-unused-result -Wsign-compare -Wunreachable-code -fno-common -dynamic -DNDEBUG -g -fwrapv -O3 -Wall -isysroot /Library/Developer/CommandLineTools/SDKs/MacOSX13.sdk -I/Users/fabian/Documents/projects/AMICI/documentation/amici_models/Boehm_JProteomeRes2014 -I/Users/fabian/Documents/projects/AMICI/python/sdist/amici/include -I/Users/fabian/Documents/projects/AMICI/python/sdist/amici/ThirdParty/gsl -I/Users/fabian/Documents/projects/AMICI/python/sdist/amici/ThirdParty/sundials/include -I/Users/fabian/Documents/projects/AMICI/python/sdist/amici/ThirdParty/SuiteSparse/include -I/opt/homebrew/Cellar/hdf5/1.12.2_2/include -I/Users/fabian/Documents/projects/AMICI/build/venv/include -I/opt/homebrew/opt/python@3.10/Frameworks/Python.framework/Versions/3.10/include/python3.10 -c wrapfunctions.cpp -o build/temp.macosx-13-arm64-cpython-310/wrapfunctions.o -std=c++14\n",

"clang++ -bundle -undefined dynamic_lookup -isysroot /Library/Developer/CommandLineTools/SDKs/MacOSX13.sdk build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_Jy.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dJydsigma.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dJydy.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dJydy_colptrs.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dJydy_rowvals.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dsigmaydp.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dwdp.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dwdp_colptrs.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dwdp_rowvals.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dwdw.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dwdw_colptrs.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dwdw_rowvals.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dwdx.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dwdx_colptrs.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dwdx_rowvals.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dxdotdw.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dxdotdw_colptrs.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dxdotdw_rowvals.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_dydx.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_sigmay.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_sx0_fixedParameters.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_w.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_x0.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_x0_fixedParameters.o 
build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_x_rdata.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_x_solver.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_xdot.o build/temp.macosx-13-arm64-cpython-310/Boehm_JProteomeRes2014_y.o build/temp.macosx-13-arm64-cpython-310/swig/Boehm_JProteomeRes2014_wrap.o build/temp.macosx-13-arm64-cpython-310/wrapfunctions.o -L/opt/homebrew/Cellar/hdf5/1.12.2_2/lib -L/Users/fabian/Documents/projects/AMICI/python/sdist/amici/libs -lamici -lsundials -lsuitesparse -lcblas -lhdf5_hl_cpp -lhdf5_hl -lhdf5_cpp -lhdf5 -o /Users/fabian/Documents/projects/AMICI/documentation/amici_models/Boehm_JProteomeRes2014/Boehm_JProteomeRes2014/_Boehm_JProteomeRes2014.cpython-310-darwin.so\n",

- "ld: warning: -undefined dynamic_lookup may not work with chained fixups\n",

- "\n"

+ "ld: warning: -undefined dynamic_lookup may not work with chained fixups\n"

]

},

{

@@ -571,7 +570,7 @@

}

],

"source": [

- "from amici.petab_import import import_petab_problem\n",

+ "from amici.petab.petab_import import import_petab_problem\n",

"\n",

"amici_model = import_petab_problem(petab_problem, force_compile=True)"

]

@@ -589,7 +588,7 @@

"id": "e2ef051a",

"metadata": {},

"source": [

- "For full jax support, we would have to implement a new [primitive](https://jax.readthedocs.io/en/latest/notebooks/How_JAX_primitives_work.html), which would require quite a bit of engineering, and in the end wouldn't add much benefit since AMICI can't run on GPUs. Instead will interface AMICI using the experimental jax module [`host_callback`](https://jax.readthedocs.io/en/latest/jax.experimental.host_callback.html). "

+ "For full jax support, we would have to implement a new [primitive](https://jax.readthedocs.io/en/latest/notebooks/How_JAX_primitives_work.html), which would require quite a bit of engineering, and in the end wouldn't add much benefit since AMICI can't run on GPUs. Instead, we will interface AMICI using the experimental jax module [`host_callback`](https://jax.readthedocs.io/en/latest/jax.experimental.host_callback.html). "

]

},

{

@@ -607,7 +606,7 @@

"metadata": {},

"outputs": [],

"source": [

- "from amici.petab_objective import simulate_petab\n",

+ "from amici.petab.simulations import simulate_petab\n",

"import amici\n",

"\n",

"amici_solver = amici_model.getSolver()\n",

@@ -655,7 +654,7 @@

"id": "98e819bd",

"metadata": {},

"source": [

- "Now we can finally define the JAX function that runs amici simulation using the host callback. We add a `custom_jvp` decorater so that we can define a custom jacobian vector product function in the next step. More details about custom jacobian vector product functions can be found in the [JAX documentation](https://jax.readthedocs.io/en/latest/notebooks/Custom_derivative_rules_for_Python_code.html)"

+ "Now we can finally define the JAX function that runs the AMICI simulation using the host callback. We add a `custom_jvp` decorator so that we can define a custom Jacobian-vector product function in the next step. More details about custom Jacobian-vector product functions can be found in the [JAX documentation](https://jax.readthedocs.io/en/latest/notebooks/Custom_derivative_rules_for_Python_code.html)."

]

},

{

@@ -937,7 +936,7 @@

"metadata": {},

"source": [

"We see quite some differences in the gradient calculation. The primary reason is that running JAX in default configuration will use float32 precision for the parameters that are passed to AMICI, which uses float64, and the derivative of the parameter transformation \n",

- "As AMICI simulations that run on the CPU are the most expensive operation, there is barely any tradeoff for using float32 vs float64 in JAX. Therefore we configure JAX to use float64 instead and rerun simulations."

+ "As AMICI simulations that run on the CPU are the most expensive operation, there is barely any tradeoff for using float32 vs float64 in JAX. Therefore, we configure JAX to use float64 instead and rerun simulations."

]

},

{

diff --git a/documentation/GettingStarted.ipynb b/documentation/GettingStarted.ipynb

index 91fb9cb12c..1bacf00bef 100644

--- a/documentation/GettingStarted.ipynb

+++ b/documentation/GettingStarted.ipynb

@@ -14,7 +14,7 @@

"metadata": {},

"source": [

"## Model Compilation\n",

- "Before simulations can be run, the model must be imported and compiled. In this process, AMICI performs all symbolic manipulations that later enable scalable simulations and efficient sensitivity computation. The first step towards model compilation is the creation of an [SbmlImporter](https://amici.readthedocs.io/en/latest/generated/amici.sbml_import.SbmlImporter.html) instance, which requires an SBML Document that specifies the model using the [Systems Biology Markup Language (SBML)](http://sbml.org/Main_Page). \n",

+ "Before simulations can be run, the model must be imported and compiled. In this process, AMICI performs all symbolic manipulations that later enable scalable simulations and efficient sensitivity computation. The first step towards model compilation is the creation of an [SbmlImporter](https://amici.readthedocs.io/en/latest/generated/amici.sbml_import.SbmlImporter.html) instance, which requires an SBML Document that specifies the model using the [Systems Biology Markup Language (SBML)](https://sbml.org/). \n",

"\n",

"For the purpose of this tutorial, we will use `model_steadystate_scaled.xml`, which is contained in the same directory as this notebook."

]

@@ -113,7 +113,7 @@

"cell_type": "markdown",

"metadata": {},

"source": [

- "Model simulations can be executed using the [amici.runAmiciSimulations](https://amici.readthedocs.io/en/latest/generated/amici.html#amici.runAmiciSimulation) routine. By default the model does not not contain any timepoints for which the model is to be simulated. Here we define a simulation timecourse with two timepoints at `0` and `1` and then run the simulation."

+ "Model simulations can be executed using the [amici.runAmiciSimulation](https://amici.readthedocs.io/en/latest/generated/amici.html#amici.runAmiciSimulation) routine. By default, the model does not contain any timepoints for which the model is to be simulated. Here we define a simulation timecourse with two timepoints at `0` and `1` and then run the simulation."

]

},

{

diff --git a/documentation/python_modules.rst b/documentation/python_modules.rst

index 5481865a7d..237a0a021f 100644

--- a/documentation/python_modules.rst

+++ b/documentation/python_modules.rst

@@ -11,6 +11,14 @@ AMICI Python API

amici.sbml_import

amici.pysb_import

amici.bngl_import

+ amici.petab

+ amici.petab.import_helpers

+ amici.petab.parameter_mapping

+ amici.petab.petab_import

+ amici.petab.pysb_import

+ amici.petab.sbml_import

+ amici.petab.simulations

+ amici.petab.simulator

amici.petab_import

amici.petab_import_pysb

amici.petab_objective
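The hunks in this PR move the PEtab helpers into the new `amici.petab` subpackage. In the notebooks, `amici.petab_import` becomes `amici.petab.petab_import` and `amici.petab_objective` becomes `amici.petab.simulations`. The following is a minimal sketch for applying the same rename to other scripts. It assumes that only these two renames apply. The helper name `migrate_imports` and the `MODULE_RENAMES` table are hypothetical, and the table would need extending if other modules moved as well.

```python
import re

# Renames observed in this diff; anything beyond these two is an assumption
# and would have to be added explicitly.
MODULE_RENAMES = {
    "amici.petab_import": "amici.petab.petab_import",
    "amici.petab_objective": "amici.petab.simulations",
}

# Match an old module path only as a whole dotted name: not preceded by a
# word character or dot, and not followed by a word character. This leaves
# e.g. "amici.petab_import_pysb" and already-migrated paths untouched.
_OLD_MODULE = re.compile(
    r"(?<![\w.])("
    + "|".join(re.escape(old) for old in MODULE_RENAMES)
    + r")(?!\w)"
)


def migrate_imports(source: str) -> str:
    """Return `source` with old amici PEtab module paths replaced."""
    return _OLD_MODULE.sub(lambda m: MODULE_RENAMES[m.group(1)], source)


if __name__ == "__main__":
    old = "from amici.petab_objective import simulate_petab, RDATAS, EDATAS"
    # prints: from amici.petab.simulations import simulate_petab, RDATAS, EDATAS
    print(migrate_imports(old))
```

The pattern deliberately refuses to match `amici.petab_import_pysb`, which still appears in the module list above and is not renamed by this diff.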

diff --git a/python/examples/example_constant_species/ExampleEquilibrationLogic.ipynb b/python/examples/example_constant_species/ExampleEquilibrationLogic.ipynb

index 5f66ea4db9..eb343dca09 100644

--- a/python/examples/example_constant_species/ExampleEquilibrationLogic.ipynb

+++ b/python/examples/example_constant_species/ExampleEquilibrationLogic.ipynb

@@ -62,7 +62,7 @@

"from IPython.display import Image\n",

"\n",

"fig = Image(\n",

- " filename=(\"../../../documentation/gfx/steadystate_solver_workflow.png\")\n",

+ " filename=\"../../../documentation/gfx/steadystate_solver_workflow.png\"\n",

")\n",

"fig"

]

@@ -97,12 +97,9 @@

],

"source": [

"import libsbml\n",

- "import importlib\n",

"import amici\n",

"import os\n",

- "import sys\n",

"import numpy as np\n",

- "import matplotlib.pyplot as plt\n",

"\n",

"# SBML model we want to import\n",

"sbml_file = \"model_constant_species.xml\"\n",

@@ -397,7 +394,7 @@

" * `-5`: Error: The model was simulated past the timepoint `t=1e100` without finding a steady state. Therefore, it is likely that the model has not steady state for the given parameter vector.\n",

"\n",

"Here, only the second entry of `posteq_status` contains a positive integer: The first run of Newton's method failed due to a Jacobian, which oculd not be factorized, but the second run (simulation) contains the entry 1 (success). The third entry is 0, thus Newton's method was not launched for a second time.\n",

- "More information can be found in`posteq_numsteps`: Also here, only the second entry contains a positive integer, which is smaller than the maximum number of steps taken (<1000). Hence steady state was reached via simulation, which corresponds to the simulated time written to `posteq_time`.\n",

+ "More information can be found in `posteq_numsteps`: Here, too, only the second entry contains a positive integer, which is smaller than the maximum number of steps taken (<1000). Hence, steady state was reached via simulation, which corresponds to the simulated time written to `posteq_time`.\n",

"\n",

"We want to demonstrate a complete failure if inferring the steady state by reducing the number of integration steps to a lower value:"

]

@@ -951,7 +948,7 @@

}

],

"source": [

- "# Singluar Jacobian, use simulation\n",

+ "# Singular Jacobian, use simulation\n",

"model.setSteadyStateSensitivityMode(\n",

" amici.SteadyStateSensitivityMode.integrateIfNewtonFails\n",

")\n",

@@ -1207,7 +1204,7 @@

}

],

"source": [

- "# Non-singular Jacobian, use simulaiton\n",

+ "# Non-singular Jacobian, use simulation\n",

"model_reduced.setSteadyStateSensitivityMode(\n",

" amici.SteadyStateSensitivityMode.integrateIfNewtonFails\n",

")\n",
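The equilibration notebook describes the steady-state status vector (for example `posteq_status`) as three entries for Newton's method, simulation, and Newton's method again. In that vector, 1 means success, 0 means the stage was not run, and negative values are errors such as `-5`. Below is a minimal sketch that reports which stage reached the steady state. It assumes the status is available as a plain sequence of three integers. The helper name `steady_state_stage` is hypothetical and not part of AMICI.

```python
from typing import Optional, Sequence

# Stage order as described in the notebook text.
STAGES = ("Newton #1", "simulation", "Newton #2")


def steady_state_stage(status: Sequence[int]) -> Optional[str]:
    """Return the name of the stage that reached steady state, or None.

    Assumes the convention from the notebook: 1 = success, 0 = stage not
    run, negative = failure (e.g. -5: simulated past t=1e100).
    """
    if len(status) != len(STAGES):
        raise ValueError(f"expected {len(STAGES)} entries, got {len(status)}")
    for stage, code in zip(STAGES, status):
        if code == 1:
            return stage
    # No stage reported success: steady state was not found.
    return None


if __name__ == "__main__":
    # First Newton run failed (negative code), simulation succeeded,
    # second Newton run was not launched.
    print(steady_state_stage([-3, 1, 0]))  # prints: simulation
```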

diff --git a/python/examples/example_errors.ipynb b/python/examples/example_errors.ipynb

index 5e07803d96..2b35964d8b 100644

--- a/python/examples/example_errors.ipynb

+++ b/python/examples/example_errors.ipynb

@@ -19,15 +19,16 @@

"source": [

"%matplotlib inline\n",

"import os\n",

+ "from contextlib import suppress\n",

+ "from pathlib import Path\n",

+ "\n",

+ "import matplotlib.pyplot as plt\n",

+ "import numpy as np\n",

+ "\n",

"import amici\n",

- "from amici.petab_import import import_petab_problem\n",

- "from amici.petab_objective import simulate_petab, RDATAS, EDATAS\n",

+ "from amici.petab.petab_import import import_petab_problem\n",

+ "from amici.petab.simulations import simulate_petab, RDATAS, EDATAS\n",

"from amici.plotting import plot_state_trajectories, plot_jacobian\n",

- "import petab\n",

- "import numpy as np\n",

- "import matplotlib.pyplot as plt\n",

- "from pathlib import Path\n",

- "from contextlib import suppress\n",

"\n",

"try:\n",

" import benchmark_models_petab\n",

@@ -153,7 +154,7 @@

" [amici.simulation_status_to_str(rdata.status) for rdata in res[RDATAS]],\n",

")\n",

"assert all(rdata.status == amici.AMICI_SUCCESS for rdata in res[RDATAS])\n",

- "print(\"Simulations finished succesfully.\")\n",

+ "print(\"Simulations finished successfully.\")\n",

"print()\n",

"\n",

"\n",

@@ -174,7 +175,7 @@

" [amici.simulation_status_to_str(rdata.status) for rdata in res[RDATAS]],\n",

")\n",

"assert all(rdata.status == amici.AMICI_SUCCESS for rdata in res[RDATAS])\n",

- "print(\"Simulations finished succesfully.\")"

+ "print(\"Simulations finished successfully.\")"

]

},

{

@@ -339,7 +340,7 @@

"**What happened?**\n",

"\n",

"AMICI failed to integrate the forward problem. The problem occurred for only one simulation condition, `condition_step_00_3`. The issue occurred at $t = 429.232$, where the error test failed.\n",

- "This means, the solver is unable to take a step of non-zero size without violating the choosen error tolerances."

+ "This means that the solver is unable to take a step of non-zero size without violating the chosen error tolerances."

]

},

{

@@ -400,7 +401,7 @@

" [amici.simulation_status_to_str(rdata.status) for rdata in res[RDATAS]],\n",

")\n",

"assert all(rdata.status == amici.AMICI_SUCCESS for rdata in res[RDATAS])\n",

- "print(\"Simulations finished succesfully.\")"

+ "print(\"Simulations finished successfully.\")"

]

},

{

@@ -457,7 +458,7 @@

"source": [

"**What happened?**\n",

"\n",

- "The simulation failed because the initial step-size after an event or heaviside function was too small. The error occured during simulation of condition `model1_data1` after successful preequilibration (`model1_data2`)."

+ "The simulation failed because the initial step-size after an event or heaviside function was too small. The error occurred during simulation of condition `model1_data1` after successful preequilibration (`model1_data2`)."

]

},

{

@@ -646,7 +647,7 @@

"id": "62d82971",

"metadata": {},

"source": [

- "Considering that `n_par` occurrs as exponent, it's magnitude looks pretty high.\n",

+ "Considering that `n_par` occurs as an exponent, its magnitude looks pretty high.\n",

"This term is very likely causing the problem - let's check:"

]

},

@@ -909,7 +910,7 @@

"source": [

"**What happened?**\n",

"\n",

- "All given experimental conditions require pre-equilibration, i.e., finding a steady state. AMICI first tries to find a steady state using the Newton solver, if that fails, it tries simulating until steady state, if that also failes, it tries the Newton solver from the end of the simulation. In this case, all three failed. Neither Newton's method nor simulation yielded a steady state satisfying the required tolerances.\n",

+ "All given experimental conditions require pre-equilibration, i.e., finding a steady state. AMICI first tries to find a steady state using the Newton solver; if that fails, it tries simulating until steady state; if that also fails, it tries the Newton solver from the end of the simulation. In this case, all three failed. Neither Newton's method nor simulation yielded a steady state satisfying the required tolerances.\n",

"\n",

"This can also be seen in `ReturnDataView.preeq_status` (the three statuses corresponds to Newton \\#1, Simulation, Newton \\#2):"

]

diff --git a/python/examples/example_jax/ExampleJax.ipynb b/python/examples/example_jax/ExampleJax.ipynb

index efda5b458e..225bd13667 100644

--- a/python/examples/example_jax/ExampleJax.ipynb

+++ b/python/examples/example_jax/ExampleJax.ipynb

@@ -262,7 +262,7 @@

"metadata": {},

"outputs": [],

"source": [

- "from amici.petab_import import import_petab_problem\n",

+ "from amici.petab.petab_import import import_petab_problem\n",

"\n",

"amici_model = import_petab_problem(\n",

" petab_problem, force_compile=True, verbose=False\n",

@@ -276,7 +276,7 @@

"source": [

"# JAX implementation\n",

"\n",

- "For full jax support, we would have to implement a new [primitive](https://jax.readthedocs.io/en/latest/notebooks/How_JAX_primitives_work.html), which would require quite a bit of engineering, and in the end wouldn't add much benefit since AMICI can't run on GPUs. Instead will interface AMICI using the experimental jax module [host_callback](https://jax.readthedocs.io/en/latest/jax.experimental.host_callback.html)."

+ "For full jax support, we would have to implement a new [primitive](https://jax.readthedocs.io/en/latest/notebooks/How_JAX_primitives_work.html), which would require quite a bit of engineering, and in the end wouldn't add much benefit since AMICI can't run on GPUs. Instead, we will interface AMICI using the experimental jax module [host_callback](https://jax.readthedocs.io/en/latest/jax.experimental.host_callback.html)."

]

},

{

@@ -294,7 +294,7 @@

"metadata": {},

"outputs": [],

"source": [

- "from amici.petab_objective import simulate_petab\n",

+ "from amici.petab.simulations import simulate_petab\n",

"import amici\n",

"\n",

"amici_solver = amici_model.getSolver()\n",

@@ -341,7 +341,7 @@

"id": "98e819bd",

"metadata": {},

"source": [

- "Now we can finally define the JAX function that runs amici simulation using the host callback. We add a `custom_jvp` decorater so that we can define a custom jacobian vector product function in the next step. More details about custom jacobian vector product functions can be found in the [JAX documentation](https://jax.readthedocs.io/en/latest/notebooks/Custom_derivative_rules_for_Python_code.html)"

+ "Now we can finally define the JAX function that runs the AMICI simulation using the host callback. We add a `custom_jvp` decorator so that we can define a custom Jacobian-vector product function in the next step. More details about custom Jacobian-vector product functions can be found in the [JAX documentation](https://jax.readthedocs.io/en/latest/notebooks/Custom_derivative_rules_for_Python_code.html)."

]

},

{

diff --git a/python/examples/example_large_models/example_performance_optimization.ipynb b/python/examples/example_large_models/example_performance_optimization.ipynb

index 31a9fc1729..82ca8d9dbb 100644

--- a/python/examples/example_large_models/example_performance_optimization.ipynb

+++ b/python/examples/example_large_models/example_performance_optimization.ipynb

@@ -9,9 +9,9 @@

"\n",

"**Objective:** Give some hints to speed up import and simulation of larger models\n",

"\n",

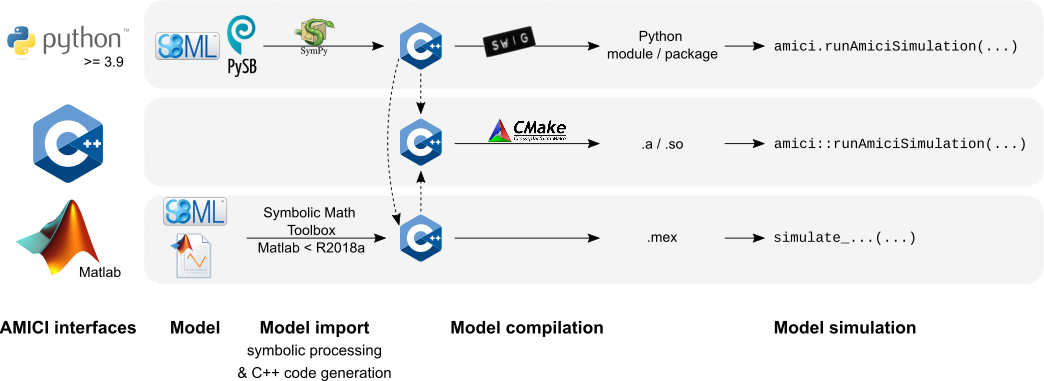

- "This notebook gives some hints that may help to speed up import and simulation of (mostly) larger models. While some of these settings may also yield slight performance improvements for smaller models, other settings may make things slower. The impact may be highly model-dependent (number of states, number of parameters, rate expressions) or system-dependent and it's worthile doing some benchmarking.\n",

+ "This notebook gives some hints that may help to speed up import and simulation of (mostly) larger models. While some of these settings may also yield slight performance improvements for smaller models, other settings may make things slower. The impact may be highly model-dependent (number of states, number of parameters, rate expressions) or system-dependent, and it's worthwhile doing some benchmarking.\n",

"\n",

- "To simulate models in AMICI, a model specified in a high-level format needs to be imported first, as shown in the following figure. This rougly involves the following steps:\n",

+ "To simulate models in AMICI, a model specified in a high-level format needs to be imported first, as shown in the following figure. This roughly involves the following steps:\n",

"\n",

"1. Generating the ODEs\n",

"2. Computing derivatives\n",

@@ -21,7 +21,7 @@

"\n",

"\n",

"\n",

- "There are various options to speed up individual steps of this process. Generally, faster import comes with slower simulation and vice versa. During parameter estimation, a model is often imported only once, and then millions of simulations are run. Therefore, faster simulation will easily compensate for slower import (one-off cost). In other cases, many models may to have to be imported, but only few simulations will be executed. In this case, faster import may bee more relevant.\n",

+ "There are various options to speed up individual steps of this process. Generally, faster import comes with slower simulation and vice versa. During parameter estimation, a model is often imported only once, and then millions of simulations are run. Therefore, faster simulation will easily compensate for slower import (one-off cost). In other cases, many models may have to be imported, but only a few simulations will be executed. In this case, faster import may be more relevant.\n",

"\n",

"In the following, we will present various settings that (may) influence import and simulation time. We will follow the order of steps outlined above.\n",

"\n",

@@ -35,7 +35,7 @@

"metadata": {},

"outputs": [],

"source": [

- "from IPython.core.pylabtools import figsize, getfigs\n",

+ "from IPython.core.pylabtools import figsize\n",

"import matplotlib.pyplot as plt\n",

"import pandas as pd\n",

"\n",

@@ -78,7 +78,7 @@

"See also the following section for the case that no sensitivities are required at all.\n",

"\n",

"\n",

- "#### Not generating sensivitiy code\n",

+ "#### Not generating sensitivity code\n",

"\n",

"If only forward simulations of a model are required, a modest import speedup can be obtained from not generating sensitivity code. This can be enabled via the `generate_sensitivity_code` argument of [amici.sbml_import.SbmlImporter.sbml2amici](https://amici.readthedocs.io/en/latest/generated/amici.sbml_import.SbmlImporter.html#amici.sbml_import.SbmlImporter.sbml2amici) or [amici.pysb_import.pysb2amici](https://amici.readthedocs.io/en/latest/generated/amici.pysb_import.html?highlight=pysb2amici#amici.pysb_import.pysb2amici).\n",

"\n",

@@ -160,7 +160,7 @@

"source": [

"#### Parallelization\n",

"\n",

- "For large models or complex model expressions, symbolic computation of the derivatives can be quite time consuming. This can be parallelized by setting the environment variable `AMICI_IMPORT_NPROCS` to the number of parallel processes that should be used. The impact strongly depends on the model. Note that setting this value too high may have a negative performance impact (benchmark!).\n",

+ "For large models or complex model expressions, symbolic computation of the derivatives can be quite time-consuming. This can be parallelized by setting the environment variable `AMICI_IMPORT_NPROCS` to the number of parallel processes that should be used. The impact strongly depends on the model. Note that setting this value too high may have a negative performance impact (benchmark!).\n",

"\n",

"Impact for a large and a tiny model:"

]

@@ -241,7 +241,7 @@

"\n",

"Simplification of model expressions can be disabled by passing `simplify=None` to [amici.sbml_import.SbmlImporter.sbml2amici](https://amici.readthedocs.io/en/latest/generated/amici.sbml_import.SbmlImporter.html#amici.sbml_import.SbmlImporter.sbml2amici) or [amici.pysb_import.pysb2amici](https://amici.readthedocs.io/en/latest/generated/amici.pysb_import.html?highlight=pysb2amici#amici.pysb_import.pysb2amici).\n",

"\n",

- "Depending on the given model, different simplification schemes may be cheaper or more beneficial than the default. SymPy's simplifcation functions are [well documentated](https://docs.sympy.org/latest/modules/simplify/simplify.html)."

+ "Depending on the given model, different simplification schemes may be cheaper or more beneficial than the default. SymPy's simplification functions are [well documented](https://docs.sympy.org/latest/modules/simplify/simplify.html)."

]

},

{

@@ -384,11 +384,11 @@

"source": [

"#### Compiler flags\n",

"\n",

- "For most compilers, different machine code optimizations can be enabled/disabled by the `-O0`, `-O1`, `-O2`, `-O3` flags, where a higher number enables more optimizations. For fastet simulation, `-O3` should be used. However, these optimizations come at the cost of increased compile times. If models grow very large, some optimizations (especially with `g++`, see above) become prohibitively slow. In this case, a lower optimization level may be necessary to be able to compile models at all.\n",

+ "For most compilers, different machine code optimizations can be enabled/disabled by the `-O0`, `-O1`, `-O2`, `-O3` flags, where a higher number enables more optimizations. For faster simulation, `-O3` should be used. However, these optimizations come at the cost of increased compile times. If models grow very large, some optimizations (especially with `g++`, see above) become prohibitively slow. In this case, a lower optimization level may be necessary to be able to compile models at all.\n",

"\n",

- "Another potential performance gain can be obtained from using CPU-specific instructions using `-march=native`. The disadvantage is, that the compiled model extension will only run on CPUs supporting the same instruction set. This may be become problematic when attempting to use an AMICI model on a machine other than on which it was compiled (e.g. on hetergenous compute clusters).\n",

+ "Another potential performance gain can be obtained by enabling CPU-specific instructions via `-march=native`. The disadvantage is that the compiled model extension will only run on CPUs supporting the same instruction set. This may become problematic when attempting to use an AMICI model on a machine other than the one on which it was compiled (e.g., on heterogeneous compute clusters).\n",

"\n",

- "These compiler flags should be set for both, AMICI installation installation and model compilation. \n",

+ "These compiler flags should be set for both AMICI installation and model compilation.\n",

"\n",

"For AMICI installation, e.g.,\n",

"```bash\n",

@@ -475,7 +475,7 @@

"source": [

"#### Using some optimized BLAS\n",

"\n",

- "You might have access to some custom [BLAS](https://en.wikipedia.org/wiki/Basic_Linear_Algebra_Subprograms) optimized for your hardware which might speed up your simulations somewhat. We are not aware of any systematic evaluation and cannot make any recomendation. You pass the respective compiler and linker flags via the environment variables `BLAS_CFLAGS` and `BLAS_LIBS`, respectively."

+ "You might have access to some custom [BLAS](https://en.wikipedia.org/wiki/Basic_Linear_Algebra_Subprograms) optimized for your hardware, which might speed up your simulations somewhat. We are not aware of any systematic evaluation and cannot make any recommendation. You can pass the respective compiler and linker flags via the environment variables `BLAS_CFLAGS` and `BLAS_LIBS`, respectively."

]

},

{

@@ -487,7 +487,7 @@

"\n",

"A major determinant of simulation time for a given model is the required accuracy and the selected solvers. This has been evaluated, for example, in https://doi.org/10.1038/s41598-021-82196-2 and is not covered further here. \n",

"\n",

- "### Adjoint *vs.* forward sensivities\n",

+ "### Adjoint *vs.* forward sensitivities\n",

"\n",

"If only the objective function gradient is required, adjoint sensitivity analysis are often preferable over forward sensitivity analysis. As a rule of thumb, adjoint sensitivity analysis seems to outperform forward sensitivity analysis for models with more than 20 parameters:\n",

"\n",
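The performance notebook gives a rule of thumb: if only the objective function gradient is needed, adjoint sensitivity analysis tends to outperform forward sensitivity analysis for models with more than about 20 parameters. Below is a minimal sketch encoding that heuristic. The threshold is the notebook's rule of thumb, not a guarantee, so benchmarking a given model is still advisable. The function name is hypothetical. It returns plain strings so it runs without AMICI installed; in practice the result would be mapped to `amici.SensitivityMethod.forward` or `amici.SensitivityMethod.adjoint`.

```python
# Rule-of-thumb threshold from the notebook text; benchmark for your model.
ADJOINT_PARAMETER_THRESHOLD = 20


def suggest_sensitivity_method(
    n_parameters: int, need_full_sensitivities: bool = False
) -> str:
    """Suggest "forward" or "adjoint" sensitivity analysis.

    Adjoint analysis only provides the objective function gradient, so
    "forward" is returned whenever state/observable sensitivities are needed.
    """
    if n_parameters < 0:
        raise ValueError("n_parameters must be non-negative")
    if need_full_sensitivities:
        return "forward"
    if n_parameters > ADJOINT_PARAMETER_THRESHOLD:
        return "adjoint"
    return "forward"


if __name__ == "__main__":
    for n in (5, 20, 21, 100):
        print(n, suggest_sensitivity_method(n))
```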

diff --git a/python/examples/example_petab/petab.ipynb b/python/examples/example_petab/petab.ipynb

index 689d793f56..3e8c523829 100644

--- a/python/examples/example_petab/petab.ipynb

+++ b/python/examples/example_petab/petab.ipynb

@@ -15,8 +15,8 @@

"metadata": {},

"outputs": [],

"source": [

- "from amici.petab_import import import_petab_problem\n",

- "from amici.petab_objective import simulate_petab\n",

+ "from amici.petab.petab_import import import_petab_problem\n",

+ "from amici.petab.simulations import simulate_petab\n",

"import petab\n",

"\n",

"import os"

@@ -39,10 +39,10 @@

"output_type": "stream",

"text": [

"Cloning into 'tmp/benchmark-models'...\n",

- "remote: Enumerating objects: 142, done.\u001b[K\n",

- "remote: Counting objects: 100% (142/142), done.\u001b[K\n",

- "remote: Compressing objects: 100% (122/122), done.\u001b[K\n",

- "remote: Total 142 (delta 41), reused 104 (delta 18), pack-reused 0\u001b[K\n",

+ "remote: Enumerating objects: 142, done.\u001B[K\n",

+ "remote: Counting objects: 100% (142/142), done.\u001B[K\n",

+ "remote: Compressing objects: 100% (122/122), done.\u001B[K\n",

+ "remote: Total 142 (delta 41), reused 104 (delta 18), pack-reused 0\u001B[K\n",

"Receiving objects: 100% (142/142), 648.29 KiB | 1.23 MiB/s, done.\n",

"Resolving deltas: 100% (41/41), done.\n"

]

@@ -270,8 +270,7 @@

"cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++\n",

"gcc -pthread -B /home/yannik/anaconda3/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -I/home/yannik/amici/python/examples/amici_models/Boehm_JProteomeRes2014 -I/home/yannik/amici/python/sdist/amici/include -I/home/yannik/amici/python/sdist/amici/ThirdParty/gsl -I/home/yannik/amici/python/sdist/amici/ThirdParty/sundials/include -I/home/yannik/amici/python/sdist/amici/ThirdParty/SuiteSparse/include -I/usr/include/hdf5/serial -I/home/yannik/anaconda3/include/python3.7m -c Boehm_JProteomeRes2014_dxdotdp_explicit_rowvals.cpp -o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdp_explicit_rowvals.o -std=c++14\n",

"cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++\n",

- "g++ -pthread -shared -B /home/yannik/anaconda3/compiler_compat -L/home/yannik/anaconda3/lib -Wl,-rpath=/home/yannik/anaconda3/lib -Wl,--no-as-needed -Wl,--sysroot=/ build/temp.linux-x86_64-3.7/swig/Boehm_JProteomeRes2014_wrap.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdw.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_total_cl.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_x_rdata.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdp_implicit_colptrs.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dsigmaydp.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_y.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dydp.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_w.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JSparseB_rowvals.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdw_rowvals.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dwdx_rowvals.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_x0.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dwdx.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dJydy_colptrs.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JSparseB.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JSparseB_colptrs.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdp_explicit_colptrs.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_sx0_fixedParameters.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JSparse_rowvals.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdp_explicit.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dJydy.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dwdp_colptrs.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_x0_fixedParameters.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdw_colptrs.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dJydsigmay.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdp_implicit_rowvals.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dwdp.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_sx0.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JB.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dwdx_colptrs.o build/temp.linux-x86_64-3.7/wrapfunctions.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_x_solver.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JSparse.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_xdot.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dJydy_rowvals.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dwdp_rowvals.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JSparse_colptrs.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_J.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dydx.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JDiag.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_Jy.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_sigmay.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdp_explicit_rowvals.o -L/usr/lib/x86_64-linux-gnu/hdf5/serial -L/home/yannik/amici/python/sdist/amici/libs -lamici -lsundials -lsuitesparse -lcblas -lhdf5_hl_cpp -lhdf5_hl -lhdf5_cpp -lhdf5 -o /home/yannik/amici/python/examples/amici_models/Boehm_JProteomeRes2014/Boehm_JProteomeRes2014/_Boehm_JProteomeRes2014.cpython-37m-x86_64-linux-gnu.so\n",

- "\n"

+ "g++ -pthread -shared -B /home/yannik/anaconda3/compiler_compat -L/home/yannik/anaconda3/lib -Wl,-rpath=/home/yannik/anaconda3/lib -Wl,--no-as-needed -Wl,--sysroot=/ build/temp.linux-x86_64-3.7/swig/Boehm_JProteomeRes2014_wrap.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdw.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_total_cl.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_x_rdata.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdp_implicit_colptrs.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dsigmaydp.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_y.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dydp.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_w.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JSparseB_rowvals.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdw_rowvals.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dwdx_rowvals.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_x0.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dwdx.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dJydy_colptrs.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JSparseB.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JSparseB_colptrs.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdp_explicit_colptrs.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_sx0_fixedParameters.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JSparse_rowvals.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdp_explicit.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dJydy.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dwdp_colptrs.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_x0_fixedParameters.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdw_colptrs.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dJydsigmay.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdp_implicit_rowvals.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dwdp.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_sx0.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JB.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dwdx_colptrs.o build/temp.linux-x86_64-3.7/wrapfunctions.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_x_solver.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JSparse.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_xdot.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dJydy_rowvals.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dwdp_rowvals.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JSparse_colptrs.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_J.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dydx.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_JDiag.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_Jy.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_sigmay.o build/temp.linux-x86_64-3.7/Boehm_JProteomeRes2014_dxdotdp_explicit_rowvals.o -L/usr/lib/x86_64-linux-gnu/hdf5/serial -L/home/yannik/amici/python/sdist/amici/libs -lamici -lsundials -lsuitesparse -lcblas -lhdf5_hl_cpp -lhdf5_hl -lhdf5_cpp -lhdf5 -o /home/yannik/amici/python/examples/amici_models/Boehm_JProteomeRes2014/Boehm_JProteomeRes2014/_Boehm_JProteomeRes2014.cpython-37m-x86_64-linux-gnu.so\n"

]

}

],

diff --git a/python/examples/example_presimulation/ExampleExperimentalConditions.ipynb b/python/examples/example_presimulation/ExampleExperimentalConditions.ipynb

index 63fbc7a4ff..83f23273af 100644

--- a/python/examples/example_presimulation/ExampleExperimentalConditions.ipynb

+++ b/python/examples/example_presimulation/ExampleExperimentalConditions.ipynb

@@ -21,16 +21,12 @@

"# Directory to which the generated model code is written\n",

"model_output_dir = model_name\n",

"\n",

+ "from pprint import pprint\n",

+ "\n",

"import libsbml\n",

- "import amici\n",

- "import amici.plotting\n",

- "import os\n",

- "import sys\n",

- "import importlib\n",

"import numpy as np\n",

- "import pandas as pd\n",

- "import matplotlib.pyplot as plt\n",

- "from pprint import pprint"

+ "\n",

+ "import amici.plotting"

]

},

{

@@ -143,7 +139,7 @@

"cell_type": "markdown",

"metadata": {},

"source": [

- "For this example we want specify the initial drug and kinase concentrations as experimental conditions. Accordingly we specify them as `fixedParameters`. The meaning of `fixedParameters` is defined in the [Glossary](https://amici.readthedocs.io/en/latest/glossary.html#term-fixed-parameters), which we display here for convenience."

+ "For this example we want to specify the initial drug and kinase concentrations as experimental conditions. Accordingly, we specify them as `fixedParameters`. The meaning of `fixedParameters` is defined in the [Glossary](https://amici.readthedocs.io/en/latest/glossary.html#term-fixed-parameters), which we display here for convenience."

]

},

{

@@ -369,7 +365,7 @@

"cell_type": "markdown",

"metadata": {},

"source": [

- "The resulting trajectory is definitely not what one may expect. The problem is that the `DRUG_0` and `KIN_0` set initial conditions for species in the model. By default these initial conditions are only applied at the very beginning of the simulation, i.e., before the preequilibration. Accordingly, the `fixedParameters` that we specified do not have any effect. To fix this, we need to set the `reinitializeFixedParameterInitialStates` attribue to `True`, to spefify that AMICI reinitializes all states that have `fixedParameter`-dependent initial states."

+ "The resulting trajectory is definitely not what one may expect. The problem is that the `DRUG_0` and `KIN_0` set initial conditions for species in the model. By default, these initial conditions are only applied at the very beginning of the simulation, i.e., before the preequilibration. Accordingly, the `fixedParameters` that we specified do not have any effect. To fix this, we need to set the `reinitializeFixedParameterInitialStates` attribute to `True`, to specify that AMICI reinitializes all states that have `fixedParameter`-dependent initial states."

]

},

{

diff --git a/python/examples/example_splines/ExampleSplines.ipynb b/python/examples/example_splines/ExampleSplines.ipynb

index d376ba91e5..0c237c6e6d 100644

--- a/python/examples/example_splines/ExampleSplines.ipynb

+++ b/python/examples/example_splines/ExampleSplines.ipynb

@@ -24,21 +24,20 @@

},

"outputs": [],

"source": [

- "import sys\n",

"import os\n",

- "import libsbml\n",

- "import amici\n",

- "\n",

- "import numpy as np\n",

- "import sympy as sp\n",

- "\n",

- "from shutil import rmtree\n",

+ "import sys\n",

"from importlib import import_module\n",

- "from uuid import uuid1\n",

+ "from shutil import rmtree\n",

"from tempfile import TemporaryDirectory\n",

+ "from uuid import uuid1\n",

+ "\n",

"import matplotlib as mpl\n",

+ "import numpy as np\n",

+ "import sympy as sp\n",

"from matplotlib import pyplot as plt\n",

"\n",

+ "import amici\n",

+ "\n",

"# Choose build directory\n",

"BUILD_PATH = None # temporary folder\n",

"# BUILD_PATH = 'build' # specified folder for debugging\n",

@@ -458,8 +457,7 @@

"\t\t\t2\n",

"\t\t\n",

"\t\n",

- "\n",

- "\n"

+ "\n"

]

}

],

@@ -1137,7 +1135,6 @@

"outputs": [],

"source": [

"import pandas as pd\n",

- "import seaborn as sns\n",

"import tempfile\n",

"import time"

]

@@ -1179,7 +1176,7 @@

"metadata": {},

"outputs": [],

"source": [

- "# If running as a Github action, just do the minimal amount of work required to check whether the code is working\n",

+ "# If running as a GitHub action, just do the minimal amount of work required to check whether the code is working\n",

"if os.getenv(\"GITHUB_ACTIONS\") is not None:\n",

" nruns = 1\n",

" num_nodes = [4]\n",

diff --git a/python/examples/example_splines_swameye/ExampleSplinesSwameye2003.ipynb b/python/examples/example_splines_swameye/ExampleSplinesSwameye2003.ipynb

index 8e3ee6db10..2a3c113bc7 100644

--- a/python/examples/example_splines_swameye/ExampleSplinesSwameye2003.ipynb

+++ b/python/examples/example_splines_swameye/ExampleSplinesSwameye2003.ipynb

@@ -47,23 +47,19 @@

},

"outputs": [],

"source": [

- "import os\n",

- "import math\n",

- "import logging\n",

- "import contextlib\n",

- "import multiprocessing\n",

"import copy\n",

+ "import logging\n",

+ "import os\n",

"\n",

+ "import libsbml\n",

"import numpy as np\n",

- "import sympy as sp\n",

"import pandas as pd\n",

+ "import petab\n",

+ "import pypesto.petab\n",

+ "import sympy as sp\n",

"from matplotlib import pyplot as plt\n",

"\n",

- "import libsbml\n",

- "import amici\n",

- "import petab\n",

- "import pypesto\n",

- "import pypesto.petab"

+ "import amici"

]

},

{

@@ -103,7 +99,7 @@

},

"outputs": [],

"source": [

- "# If running as a Github action, just do the minimal amount of work required to check whether the code is working\n",

+ "# If running as a GitHub action, just do the minimal amount of work required to check whether the code is working\n",

"if os.getenv(\"GITHUB_ACTIONS\") is not None:\n",

" n_starts = 15\n",

" pypesto_optimizer = pypesto.optimize.FidesOptimizer(\n",

diff --git a/python/examples/example_steadystate/ExampleSteadystate.ipynb b/python/examples/example_steadystate/ExampleSteadystate.ipynb

index b57ed522aa..d9f6ae635d 100644

--- a/python/examples/example_steadystate/ExampleSteadystate.ipynb

+++ b/python/examples/example_steadystate/ExampleSteadystate.ipynb

@@ -129,7 +129,7 @@

"cell_type": "markdown",

"metadata": {},

"source": [

- "In this example, we want to specify fixed parameters, observables and a $\\sigma$ parameter. Unfortunately, the latter two are not part of the [SBML standard](http://sbml.org/). However, they can be provided to `amici.SbmlImporter.sbml2amici` as demonstrated in the following."

+ "In this example, we want to specify fixed parameters, observables and a $\\sigma$ parameter. Unfortunately, the latter two are not part of the [SBML standard](https://sbml.org/). However, they can be provided to `amici.SbmlImporter.sbml2amici` as demonstrated in the following."

]

},

{

diff --git a/python/sdist/amici/__main__.py b/python/sdist/amici/__main__.py

index 165f5d9516..bf179cf871 100644

--- a/python/sdist/amici/__main__.py

+++ b/python/sdist/amici/__main__.py

@@ -1,6 +1,5 @@

"""Package-level entrypoint"""

-import os

import sys

from . import __version__, compiledWithOpenMP, has_clibs, hdf5_enabled

diff --git a/python/sdist/amici/parameter_mapping.py b/python/sdist/amici/parameter_mapping.py

index dd961f43c1..e27b210c46 100644

--- a/python/sdist/amici/parameter_mapping.py

+++ b/python/sdist/amici/parameter_mapping.py

@@ -1,4 +1,6 @@

# some extra imports for backward-compatibility

+import warnings

+

from .petab.conditions import ( # noqa # pylint: disable=unused-import

fill_in_parameters,

fill_in_parameters_for_condition,

@@ -15,3 +17,8 @@

unscale_parameter,

unscale_parameters_dict,

)

+

+warnings.warn(

+ "Importing amici.parameter_mapping is deprecated. Use `amici.petab.parameter_mapping` instead.",

+ DeprecationWarning,

+)
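The compatibility module above re-exports the moved helpers and emits a `DeprecationWarning` at import time. The following is a minimal, self-contained sketch of that module-level deprecation pattern; the module name `legacy_mapping`, its single helper, and the replacement name `new_mapping` are hypothetical stand-ins, not part of AMICI. Because Python caches imported modules in `sys.modules`, the module body (and therefore the warning) runs only on the first import. `DeprecationWarning` is also ignored by default unless triggered from `__main__`, so the sketch enables it explicitly while recording.

```python
import importlib
import sys
import tempfile
import textwrap
import warnings
from pathlib import Path

# Hypothetical legacy module: re-exports a helper and warns when imported,
# mirroring the structure of the compatibility shim above.
SHIM_SOURCE = textwrap.dedent(
    '''
    import warnings

    def unscale_parameter(value):
        return value

    warnings.warn(
        "Importing legacy_mapping is deprecated. Use `new_mapping` instead.",
        DeprecationWarning,
    )
    '''
)


def import_and_collect_warnings(module_name: str) -> list[str]:
    """Import `module_name` and return messages of DeprecationWarnings raised."""
    with warnings.catch_warnings(record=True) as caught:
        # DeprecationWarning is hidden by default outside __main__; show it here
        warnings.simplefilter("always", DeprecationWarning)
        importlib.import_module(module_name)
    return [
        str(w.message) for w in caught if issubclass(w.category, DeprecationWarning)
    ]


with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "legacy_mapping.py").write_text(SHIM_SOURCE)
    sys.path.insert(0, tmp)
    importlib.invalidate_caches()
    try:
        first = import_and_collect_warnings("legacy_mapping")
        # The second import is served from sys.modules, so the body does not re-run
        second = import_and_collect_warnings("legacy_mapping")
    finally:
        sys.path.remove(tmp)
        sys.modules.pop("legacy_mapping", None)

print(first)
print(second)
```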

diff --git a/python/sdist/amici/petab/cli/import_petab.py b/python/sdist/amici/petab/cli/import_petab.py

index dfa2eb0aca..db600b0590 100644

--- a/python/sdist/amici/petab/cli/import_petab.py

+++ b/python/sdist/amici/petab/cli/import_petab.py

@@ -1,7 +1,8 @@

import argparse

import petab

-from amici.petab_import import import_model

+

+from ..petab_import import import_model_sbml

def _parse_cli_args():

@@ -142,7 +143,7 @@ def _main():

if args.flatten:

petab.flatten_timepoint_specific_output_overrides(pp)

- import_model(

+ import_model_sbml(

model_name=args.model_name,

sbml_model=pp.sbml_model,

condition_table=pp.condition_df,

diff --git a/python/sdist/amici/petab/import_helpers.py b/python/sdist/amici/petab/import_helpers.py

new file mode 100644

index 0000000000..3caf951ace

--- /dev/null

+++ b/python/sdist/amici/petab/import_helpers.py

@@ -0,0 +1,272 @@

+"""General helper functions for PEtab import.

+

+Functions for PEtab import that are independent of the model format.

+"""

+import importlib

+import logging

+import os

+import re

+from pathlib import Path

+from typing import Union

+

+import amici

+import pandas as pd

+import petab

+import sympy as sp

+from petab.C import (

+ CONDITION_NAME,

+ ESTIMATE,

+ NOISE_DISTRIBUTION,

+ NOISE_FORMULA,

+ OBSERVABLE_FORMULA,

+ OBSERVABLE_NAME,

+ OBSERVABLE_TRANSFORMATION,

+)

+from petab.parameters import get_valid_parameters_for_parameter_table

+from sympy.abc import _clash

+

+logger = logging.getLogger(__name__)

+

+

+def get_observation_model(

+ observable_df: pd.DataFrame,

+) -> tuple[

+ dict[str, dict[str, str]], dict[str, str], dict[str, Union[str, float]]

+]:

+ """

+ Get observables, sigmas, and noise distributions from the PEtab

+ observables table in a format suitable for

+ :meth:`amici.sbml_import.SbmlImporter.sbml2amici`.

+

+ :param observable_df:

+ PEtab observables table

+

+ :return:

+ Tuple of dicts with observables, noise distributions, and sigmas.

+ """

+ if observable_df is None:

+ return {}, {}, {}

+

+ observables = {}

+ sigmas = {}

+

+ nan_pat = r"^[nN]a[nN]$"

+ for _, observable in observable_df.iterrows():

+ oid = str(observable.name)

+ # need to sanitize due to https://github.com/PEtab-dev/PEtab/issues/447

+ name = re.sub(nan_pat, "", str(observable.get(OBSERVABLE_NAME, "")))

+ formula_obs = re.sub(nan_pat, "", str(observable[OBSERVABLE_FORMULA]))

+ formula_noise = re.sub(nan_pat, "", str(observable[NOISE_FORMULA]))

+ observables[oid] = {"name": name, "formula": formula_obs}

+ sigmas[oid] = formula_noise

+

+ # PEtab does not currently allow observables in noiseFormula and AMICI

+ # cannot handle states in sigma expressions. Therefore, where possible,

+ # replace species occurring in error model definition by observableIds.

+ replacements = {

+ sp.sympify(observable["formula"], locals=_clash): sp.Symbol(

+ observable_id

+ )

+ for observable_id, observable in observables.items()

+ }

+ for observable_id, formula in sigmas.items():

+ repl = sp.sympify(formula, locals=_clash).subs(replacements)

+ sigmas[observable_id] = str(repl)

+

+ noise_distrs = petab_noise_distributions_to_amici(observable_df)

+

+ return observables, noise_distrs, sigmas

+

+

+def petab_noise_distributions_to_amici(

+ observable_df: pd.DataFrame,

+) -> dict[str, str]:

+ """

+ Map noise distribution identifiers from the PEtab format to the AMICI

+ format.

+

+ :param observable_df:

+ PEtab observable table

+

+ :return:

+ dictionary of observable_id => AMICI noise-distributions

+ """

+ amici_distrs = {}

+ for _, observable in observable_df.iterrows():

+ amici_val = ""

+

+ if (

+ OBSERVABLE_TRANSFORMATION in observable

+ and isinstance(observable[OBSERVABLE_TRANSFORMATION], str)

+ and observable[OBSERVABLE_TRANSFORMATION]

+ ):

+ amici_val += observable[OBSERVABLE_TRANSFORMATION] + "-"

+

+ if (

+ NOISE_DISTRIBUTION in observable

+ and isinstance(observable[NOISE_DISTRIBUTION], str)

+ and observable[NOISE_DISTRIBUTION]

+ ):

+ amici_val += observable[NOISE_DISTRIBUTION]

+ else:

+ amici_val += "normal"

+ amici_distrs[observable.name] = amici_val

+

+ return amici_distrs

+

+

+def petab_scale_to_amici_scale(scale_str: str) -> int:

+ """Convert PEtab parameter scaling string to AMICI scaling integer"""

+

+ if scale_str == petab.LIN:

+ return amici.ParameterScaling_none

+ if scale_str == petab.LOG:

+ return amici.ParameterScaling_ln

+ if scale_str == petab.LOG10:

+ return amici.ParameterScaling_log10

+

+ raise ValueError(f"Invalid parameter scale {scale_str}")

+

+

+def _create_model_name(folder: Union[str, Path]) -> str:

+ """

+ Create a name for the model.

+ Just re-use the last part of the folder.

+ """

+ return os.path.split(os.path.normpath(folder))[-1]

+

+

+def _can_import_model(

+ model_name: str, model_output_dir: Union[str, Path]

+) -> bool:

+ """

+ Check whether a module of that name can already be imported.

+ """

+ # try to import (in particular checks version)

+ try:

+ with amici.add_path(model_output_dir):

+ model_module = importlib.import_module(model_name)

+ except ModuleNotFoundError:

+ return False

+

+ # no need to (re-)compile

+ return hasattr(model_module, "getModel")

+

+

+def get_fixed_parameters(

+ petab_problem: petab.Problem,

+ non_estimated_parameters_as_constants=True,

+) -> list[str]:

+ """

+ Determine, set and return fixed model parameters.

+

+ Non-estimated parameters and parameters specified in the condition table

+ are turned into constants (unless they are overridden).

+ Only global SBML parameters are considered. Local parameters are ignored.

+

+ :param petab_problem:

+ The PEtab problem instance

+

+ :param non_estimated_parameters_as_constants:

+ Whether parameters marked as non-estimated in PEtab should be

+ considered constant in AMICI. Setting this to ``True`` will reduce

+ model size and simulation times. If sensitivities with respect to those

+ parameters are required, this should be set to ``False``.

+

+ :return:

+ list of IDs of parameters which are to be considered constant.

+ """

+ # if we have a parameter table, all parameters that are allowed to be

+ # listed in the parameter table, but are not marked as estimated, can be

+ # turned into AMICI constants

+ # due to legacy API, we might not always have a parameter table, though

+ fixed_parameters = set()

+ if petab_problem.parameter_df is not None:

+ all_parameters = get_valid_parameters_for_parameter_table(

+ model=petab_problem.model,

+ condition_df=petab_problem.condition_df,

+ observable_df=petab_problem.observable_df

+ if petab_problem.observable_df is not None

+ else pd.DataFrame(columns=petab.OBSERVABLE_DF_REQUIRED_COLS),

+ measurement_df=petab_problem.measurement_df

+ if petab_problem.measurement_df is not None

+ else pd.DataFrame(columns=petab.MEASUREMENT_DF_REQUIRED_COLS),

+ )

+ if non_estimated_parameters_as_constants:

+ estimated_parameters = petab_problem.parameter_df.index.values[

+ petab_problem.parameter_df[ESTIMATE] == 1

+ ]

+ else:

+ # don't treat parameter table parameters as constants

+ estimated_parameters = petab_problem.parameter_df.index.values

+ fixed_parameters = set(all_parameters) - set(estimated_parameters)

+

+ # Column names are model parameter IDs, compartment IDs or species IDs.

+ # Of these, all parameters except for any overridden ones should be made

+ # constant.

+ # (Overridden ones could potentially still be made constant, but keeping

+ # them as non-constant parameters might increase model reusability.)

+

+ # handle parameters in condition table

+ condition_df = petab_problem.condition_df

+ if condition_df is not None:

+ logger.debug(f"Condition table: {condition_df.shape}")

+

+ # add non-overridden parameters (skip `object`-type columns, which hold parametric overrides)

+ fixed_parameters.update(

+ p

+ for p in condition_df.columns

+ # get rid of conditionName column

+ if p != CONDITION_NAME

+ # there is no parametric override

+ # TODO: could check if the final overriding parameter is estimated

+ # or not, but for now, we skip the parameter if there is any kind

+ # of overriding

+ if condition_df[p].dtype != "O"

+ # p is a parameter

+ and not petab_problem.model.is_state_variable(p)

+ )

+

+ # Ensure mentioned parameters exist in the model. Remove additional ones

+ # from list

+ for fixed_parameter in fixed_parameters.copy():

+ # check global parameters

+ if not petab_problem.model.has_entity_with_id(fixed_parameter):

+ # TODO: could still exist as an output parameter?

+ logger.warning(

+ f"Column '{fixed_parameter}' used in condition "

+ "table but no entity with the corresponding ID "

+ "exists. Ignoring."

+ )

+ fixed_parameters.remove(fixed_parameter)

+

+ return list(sorted(fixed_parameters))

+

+

+def check_model(

+ amici_model: amici.Model,

+ petab_problem: petab.Problem,

+) -> None:

+ """Check that the model is consistent with the PEtab problem."""

+ if petab_problem.parameter_df is None:

+ return

+

+ amici_ids_free = set(amici_model.getParameterIds())

+ amici_ids = amici_ids_free | set(amici_model.getFixedParameterIds())

+

+ petab_ids_free = set(

+ petab_problem.parameter_df.loc[

+ petab_problem.parameter_df[ESTIMATE] == 1

+ ].index

+ )

+

+ amici_ids_free_required = petab_ids_free.intersection(amici_ids)

+

+ if not amici_ids_free_required.issubset(amici_ids_free):

+ raise ValueError(

+ "The available AMICI model does not support estimating the "

+ "following parameters. Please recompile the model and ensure "

+ "that these parameters are not treated as constants. Deleting "

+ "the current model might also resolve this. Parameters: "

+ f"{amici_ids_free_required.difference(amici_ids_free)}"

+ )

diff --git a/python/sdist/amici/petab/petab_import.py b/python/sdist/amici/petab/petab_import.py

new file mode 100644

index 0000000000..b9cbb1a433

--- /dev/null

+++ b/python/sdist/amici/petab/petab_import.py

@@ -0,0 +1,150 @@

+"""

+PEtab Import

+------------

+Import a model in the :mod:`petab` (https://github.com/PEtab-dev/PEtab) format

+into AMICI.

+"""

+

+import logging

+import os

+import shutil

+from pathlib import Path

+from typing import Union

+

+import amici

+import petab

+from petab.models import MODEL_TYPE_PYSB, MODEL_TYPE_SBML

+

+from ..logging import get_logger

+from .import_helpers import _can_import_model, _create_model_name, check_model

+from .sbml_import import import_model_sbml

+

+try:

+ from .pysb_import import import_model_pysb

+except ModuleNotFoundError:

+ # pysb not available

+ import_model_pysb = None

+

+logger = get_logger(__name__, logging.WARNING)

+

+

+def import_petab_problem(

+ petab_problem: petab.Problem,

+ model_output_dir: Union[str, Path, None] = None,

+ model_name: str = None,

+ force_compile: bool = False,

+ non_estimated_parameters_as_constants=True,

+ **kwargs,

+) -> "amici.Model":

+ """

+ Import model from petab problem.

+

+ :param petab_problem:

+ A petab problem containing all relevant information on the model.

+

+ :param model_output_dir:

+ Directory to write the model code to. Will be created if it doesn't

+ exist. Defaults to current directory.

+

+ :param model_name:

+ Name of the generated model. If a model file name was provided,

+ this defaults to the file name without extension, otherwise

+ the model ID will be used.

+

+ :param force_compile:

+ Whether to compile the model even if the target folder is not empty,

+ or the model exists already.

+

+ :param non_estimated_parameters_as_constants:

+ Whether parameters marked as non-estimated in PEtab should be

+ considered constant in AMICI. Setting this to ``True`` will reduce

+ model size and simulation times. If sensitivities with respect to those

+ parameters are required, this should be set to ``False``.

+

+ :param kwargs:

+ Additional keyword arguments to be passed to

+ :meth:`amici.sbml_import.SbmlImporter.sbml2amici`.

+

+ :return:

+ The imported model.

+ """

+ if petab_problem.model.type_id not in (MODEL_TYPE_SBML, MODEL_TYPE_PYSB):

+ raise NotImplementedError(

+ "Unsupported model type " + petab_problem.model.type_id

+ )

+

+ if petab_problem.mapping_df is not None:

+ # It's partially supported. Remove at your own risk...

+ raise NotImplementedError(

+ "PEtab v2.0.0 mapping tables are not yet supported."

+ )

+

+ model_name = model_name or petab_problem.model.model_id

+

+ if petab_problem.model.type_id == MODEL_TYPE_PYSB and model_name is None:

+ model_name = petab_problem.pysb_model.name

+ elif model_name is None and model_output_dir:

+ model_name = _create_model_name(model_output_dir)

+

+ # generate folder and model name if necessary

+ if model_output_dir is None:

+ if petab_problem.model.type_id == MODEL_TYPE_PYSB:

+ raise ValueError("Parameter `model_output_dir` is required.")

+

+ from .sbml_import import _create_model_output_dir_name

+

+ model_output_dir = _create_model_output_dir_name(

+ petab_problem.sbml_model, model_name

+ )

+ else:

+ model_output_dir = os.path.abspath(model_output_dir)

+

+ # create folder

+ if not os.path.exists(model_output_dir):

+ os.makedirs(model_output_dir)

+

+ # check if compilation necessary

+ if force_compile or not _can_import_model(model_name, model_output_dir):

+ # check if folder exists

+ if os.listdir(model_output_dir) and not force_compile:

+ raise ValueError(

+ f"Cannot compile to {model_output_dir}: not empty. "

+ "Please assign a different target or set `force_compile`."

+ )

+

+ # remove folder if exists

+ if os.path.exists(model_output_dir):

+ shutil.rmtree(model_output_dir)

+

+ logger.info(f"Compiling model {model_name} to {model_output_dir}.")

+ # compile the model

+ if petab_problem.model.type_id == MODEL_TYPE_PYSB:

+ import_model_pysb(

+ petab_problem,

+ model_name=model_name,

+ model_output_dir=model_output_dir,

+ **kwargs,

+ )

+ else:

+ import_model_sbml(

+ petab_problem=petab_problem,

+ model_name=model_name,

+ model_output_dir=model_output_dir,

+ non_estimated_parameters_as_constants=non_estimated_parameters_as_constants,

+ **kwargs,

+ )

+

+ # import model

+ model_module = amici.import_model_module(model_name, model_output_dir)

+ model = model_module.getModel()

+ check_model(amici_model=model, petab_problem=petab_problem)

+

+ logger.info(

+ f"Successfully loaded model {model_name} from {model_output_dir}."

+ )

+

+ return model

+

+

+# for backwards compatibility

+import_model = import_model_sbml
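The recompilation logic in `import_petab_problem` boils down to three rules: reuse an importable model unless `force_compile` is set, refuse to write into a non-empty directory without `force_compile`, and otherwise clear the directory before generating code. A minimal sketch of just those checks follows, assuming a boolean `can_import` in place of `_can_import_model`; `prepare_output_dir` is a hypothetical name, and no model is actually generated or compiled. Unlike the original, which only removes the folder, the sketch recreates it empty so the outcome is easy to inspect.

```python
import os
import shutil
import tempfile


def prepare_output_dir(output_dir: str, can_import: bool, force_compile: bool) -> bool:
    """Return True if a model would have to be (re)generated in `output_dir`.

    Hypothetical sketch of the checks in import_petab_problem; `can_import`
    stands in for the result of `_can_import_model`.
    """
    # create the target folder if it does not exist yet
    os.makedirs(output_dir, exist_ok=True)

    # an importable model exists and recompilation was not requested: reuse it
    if can_import and not force_compile:
        return False

    # never silently overwrite files that are already there
    if os.listdir(output_dir) and not force_compile:
        raise ValueError(
            f"Cannot compile to {output_dir}: not empty. "
            "Please assign a different target or set `force_compile`."
        )

    # start from a clean, empty directory before code generation
    shutil.rmtree(output_dir)
    os.makedirs(output_dir)
    return True


with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "model")
    # 1) fresh, empty directory: compilation needed
    fresh = prepare_output_dir(target, can_import=False, force_compile=False)
    # 2) leftover file and no importable model: refused
    with open(os.path.join(target, "stale.txt"), "w"):
        pass
    try:
        prepare_output_dir(target, can_import=False, force_compile=False)
        refused = False
    except ValueError:
        refused = True
    # 3) importable model present: reused as-is
    reused = prepare_output_dir(target, can_import=True, force_compile=False)
    # 4) force_compile wipes the directory regardless
    forced = prepare_output_dir(target, can_import=True, force_compile=True)
    leftover = os.listdir(target)

print(fresh, refused, reused, forced, leftover)
```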

diff --git a/python/sdist/amici/petab/pysb_import.py b/python/sdist/amici/petab/pysb_import.py

new file mode 100644

index 0000000000..51f53037af

--- /dev/null

+++ b/python/sdist/amici/petab/pysb_import.py

@@ -0,0 +1,274 @@

+"""

+PySB-PEtab Import

+-----------------

+Import a model in the PySB-adapted :mod:`petab`

+(https://github.com/PEtab-dev/PEtab) format into AMICI.

+"""

+

+import logging

+import re

+from pathlib import Path

+from typing import Optional, Union

+

+import petab

+import pysb

+import pysb.bng

+import sympy as sp

+from petab.C import CONDITION_NAME, NOISE_FORMULA, OBSERVABLE_FORMULA

+from petab.models.pysb_model import PySBModel

+

+from ..logging import get_logger, log_execution_time, set_log_level

+from . import PREEQ_INDICATOR_ID

+from .import_helpers import (

+ get_fixed_parameters,

+ petab_noise_distributions_to_amici,

+)

+from .util import get_states_in_condition_table

+

+logger = get_logger(__name__, logging.WARNING)

+

+

+def _add_observation_model(

+ pysb_model: pysb.Model, petab_problem: petab.Problem

+):

+ """Extend PySB model by observation model as defined in the PEtab

+ observables table"""

+

+ # add any required output parameters

+ local_syms = {

+ sp.Symbol.__str__(comp): comp

+ for comp in pysb_model.components

+ if isinstance(comp, sp.Symbol)

+ }

+ for formula in [

+ *petab_problem.observable_df[OBSERVABLE_FORMULA],

+ *petab_problem.observable_df[NOISE_FORMULA],

+ ]:

+ sym = sp.sympify(formula, locals=local_syms)

+ for s in sym.free_symbols:

+ if not isinstance(s, pysb.Component):

+ p = pysb.Parameter(str(s), 1.0)

+ pysb_model.add_component(p)

+ local_syms[sp.Symbol.__str__(p)] = p

+

+ # add observables and sigmas to pysb model

+ for observable_id, observable_formula, noise_formula in zip(

+ petab_problem.observable_df.index,

+ petab_problem.observable_df[OBSERVABLE_FORMULA],

+ petab_problem.observable_df[NOISE_FORMULA],

+ ):

+ obs_symbol = sp.sympify(observable_formula, locals=local_syms)

+ if observable_id in pysb_model.expressions.keys():

+ obs_expr = pysb_model.expressions[observable_id]

+ else:

+ obs_expr = pysb.Expression(observable_id, obs_symbol)

+ pysb_model.add_component(obs_expr)

+ local_syms[observable_id] = obs_expr

+

+ sigma_id = f"{observable_id}_sigma"

+ sigma_symbol = sp.sympify(noise_formula, locals=local_syms)

+ sigma_expr = pysb.Expression(sigma_id, sigma_symbol)

+ pysb_model.add_component(sigma_expr)

+ local_syms[sigma_id] = sigma_expr

+

+

+def _add_initialization_variables(

+ pysb_model: pysb.Model, petab_problem: petab.Problem

+):

+ """Add initialization variables to the PySB model to support initial

+ conditions specified in the PEtab condition table.

+

+ To parameterize initial states, we currently need initial assignments.

+ If they occur in the condition table, we create new parameters

+ initial_${speciesID}_preeq and initial_${speciesID}_sim. Feels dirty

+ and should be changed (see also #924).

+ """

+

+ initial_states = get_states_in_condition_table(petab_problem)

+ fixed_parameters = []

+ if initial_states:

+ # add preequilibration indicator variable

+ # NOTE: this would only be required if we actually have

+ # preequilibration, but we add it anyway; it can be optimized out later.

+ if PREEQ_INDICATOR_ID in [c.name for c in pysb_model.components]:

+ raise AssertionError(

+ "Model already has a component with ID "

+ f"{PREEQ_INDICATOR_ID}. Cannot handle "

+ "species and compartments in the condition table."

+ )

+ preeq_indicator = pysb.Parameter(PREEQ_INDICATOR_ID)

+ pysb_model.add_component(preeq_indicator)

+ # Can only reset parameters after preequilibration if they are fixed.

+ fixed_parameters.append(PREEQ_INDICATOR_ID)

+ logger.debug(

+ "Adding preequilibration indicator constant "

+ f"{PREEQ_INDICATOR_ID}"

+ )

+ logger.debug(f"Adding initial assignments for {initial_states.keys()}")

+

+ for assignee_id in initial_states:

+ init_par_id_preeq = f"initial_{assignee_id}_preeq"

+ init_par_id_sim = f"initial_{assignee_id}_sim"

+ for init_par_id in [init_par_id_preeq, init_par_id_sim]:

+ if init_par_id in [c.name for c in pysb_model.components]:

+ raise ValueError(

+ "Cannot create parameter for initial assignment "

+ f"for {assignee_id} because an entity named "

+ f"{init_par_id} exists already in the model."

+ )

+ p = pysb.Parameter(init_par_id)

+ pysb_model.add_component(p)

+

+ species_idx = int(re.match(r"__s(\d+)$", assignee_id)[1])

+ # use original model here since that's what was used to generate

+ # the ids in initial_states

+ species_pattern = petab_problem.model.model.species[species_idx]

+

+ # species pattern comes from the _original_ model, but we only want

+ # to modify pysb_model, so we have to reconstitute the pattern using

+ # pysb_model

+ for c in pysb_model.components:

+ globals()[c.name] = c

+ species_pattern = pysb.as_complex_pattern(eval(str(species_pattern)))

+

+ from pysb.pattern import match_complex_pattern

+

+ formula = pysb.Expression(

+ f"initial_{assignee_id}_formula",

+ preeq_indicator * pysb_model.parameters[init_par_id_preeq]

+ + (1 - preeq_indicator) * pysb_model.parameters[init_par_id_sim],

+ )

+ pysb_model.add_component(formula)

+

+ for initial in pysb_model.initials:

+ if match_complex_pattern(

+ initial.pattern, species_pattern, exact=True

+ ):

+ logger.debug(

+ "The PySB model has an initial defined for species "

+ f"{assignee_id}, but this species also has an initial "

+ "value defined in the PEtab condition table. The PySB "

+ "initial will be overwritten to handle "

+ "preequilibration and initial values specified by the "

+ "PEtab problem."

+ )

+ initial.value = formula

+ break

+ else:

+ # No initial in the pysb model, so add one

+ init = pysb.Initial(species_pattern, formula)

+ pysb_model.add_component(init)

+

+ return fixed_parameters

+

+

+@log_execution_time("Importing PEtab model", logger)

+def import_model_pysb(

+ petab_problem: petab.Problem,

+ model_output_dir: Optional[Union[str, Path]] = None,

+ verbose: Optional[Union[bool, int]] = True,

+ model_name: Optional[str] = None,

+ **kwargs,

+) -> None:

+ """

+ Create AMICI model from PySB-PEtab problem

+

+ :param petab_problem:

+ PySB PEtab problem

+

+ :param model_output_dir:

+ Directory to write the model code to. Will be created if it doesn't

+ exist. Defaults to current directory.

+

+ :param verbose:

+ Print/log extra information.

+

+ :param model_name:

+ Name of the generated model module

+

+ :param kwargs:

+ Additional keyword arguments to be passed to

+ :func:`amici.pysb_import.pysb2amici`.

+ """

+ set_log_level(logger, verbose)

+

+ logger.info("Importing model ...")

+

+ if not isinstance(petab_problem.model, PySBModel):

+ raise ValueError("Not a PySB model")

+

+ # need to create a copy here as we don't want to modify the original

+ pysb.SelfExporter.cleanup()

+ og_export = pysb.SelfExporter.do_export

+ pysb.SelfExporter.do_export = False

+ pysb_model = pysb.Model(

+ base=petab_problem.model.model,

+ name=petab_problem.model.model_id,

+ )

+

+ _add_observation_model(pysb_model, petab_problem)

+ # generate species for the _original_ model

+ pysb.bng.generate_equations(petab_problem.model.model)

+ fixed_parameters = _add_initialization_variables(pysb_model, petab_problem)

+ pysb.SelfExporter.do_export = og_export

+

+ # check condition table for supported features, important to use pysb_model

+ # here, as we want to also cover output parameters

+ model_parameters = [p.name for p in pysb_model.parameters]

+ condition_species_parameters = get_states_in_condition_table(

+ petab_problem, return_patterns=True

+ )

+ for x in petab_problem.condition_df.columns:

+ if x == CONDITION_NAME:

+ continue

+

+ x = petab.mapping.resolve_mapping(petab_problem.mapping_df, x)

+

+ # parameters

+ if x in model_parameters:

+ continue

+

+ # species/pattern

+ if x in condition_species_parameters:

+ continue

+

+ raise NotImplementedError(

+ "For PySB PEtab import, only model parameters and species, but "

+ "not compartments, are allowed in the condition table. Offending "

+ f"column: {x}"

+ )

+

+ constant_parameters = (

+ get_fixed_parameters(petab_problem) + fixed_parameters

+ )

+

+ if petab_problem.observable_df is None:

+ observables = None

+ sigmas = None

+ noise_distrs = None

+ else:

+ observables = [

+ expr.name

+ for expr in pysb_model.expressions

+ if expr.name in petab_problem.observable_df.index

+ ]

+

+ sigmas = {obs_id: f"{obs_id}_sigma" for obs_id in observables}

+

+ noise_distrs = petab_noise_distributions_to_amici(

+ petab_problem.observable_df

+ )

+

+ from amici.pysb_import import pysb2amici

+

+ pysb2amici(

+ model=pysb_model,

+ output_dir=model_output_dir,

+ model_name=model_name,

+ verbose=verbose,

+ observables=observables,

+ sigmas=sigmas,

+ constant_parameters=constant_parameters,

+ noise_distributions=noise_distrs,

+ **kwargs,

+ )
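In `_add_initialization_variables`, each species that appears in the condition table gets two parameters, `initial_<id>_preeq` and `initial_<id>_sim`, and its initial value becomes the expression `preeq_indicator * initial_<id>_preeq + (1 - preeq_indicator) * initial_<id>_sim`. The plain-Python sketch below evaluates that switch numerically and extracts the species index from an ID such as `__s3` using the same regular expression as the importer. The helper names `species_index` and `initial_value` are hypothetical and are not part of AMICI or PySB; unlike the importer, `species_index` raises a `ValueError` for IDs that do not match.

```python
import re


def species_index(assignee_id: str) -> int:
    """Return the numeric index of a PySB species ID such as "__s3".

    Same pattern as the importer; unlike the importer, non-matching IDs
    raise a ValueError instead of failing on a None match.
    """
    match = re.match(r"__s(\d+)$", assignee_id)
    if match is None:
        raise ValueError(f"not a PySB species ID: {assignee_id!r}")
    return int(match[1])


def initial_value(
    preeq_indicator: float, initial_preeq: float, initial_sim: float
) -> float:
    """Numeric stand-in for the symbolic initial_<id>_formula expression.

    An indicator of 1 selects the preequilibration value, 0 selects the
    value for the main simulation.
    """
    return preeq_indicator * initial_preeq + (1 - preeq_indicator) * initial_sim


if __name__ == "__main__":
    print(species_index("__s3"))          # 3
    print(initial_value(1.0, 10.0, 2.5))  # 10.0
    print(initial_value(0.0, 10.0, 2.5))  # 2.5
```

Because the indicator is added to the fixed (constant) parameters, it can be reset between preequilibration and the main simulation, which is what makes this single expression serve both phases.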
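The loop over `petab_problem.condition_df.columns` in `import_model_pysb` accepts a column only if, after resolving it through the PEtab mapping table, it names a model parameter or a species; anything else (in practice, a compartment) raises `NotImplementedError`. The sketch below restates that rule with plain Python containers. It assumes the mapping table can be reduced to a `dict` from PEtab IDs to model IDs, standing in for `petab.mapping.resolve_mapping`, and that the accepted species are given as a set of identifiers; the function name `check_condition_columns` is hypothetical.

```python
from typing import Collection, Iterable, Mapping

# Column name PEtab reserves for human-readable condition names.
CONDITION_NAME = "conditionName"


def check_condition_columns(
    columns: Iterable[str],
    mapping: Mapping[str, str],
    model_parameters: Collection[str],
    species_ids: Collection[str],
) -> None:
    """Raise NotImplementedError for condition-table columns that are
    neither model parameters nor species (e.g. compartments)."""
    for column in columns:
        if column == CONDITION_NAME:
            continue
        # Assumed stand-in for petab.mapping.resolve_mapping: IDs that are
        # not in the mapping table resolve to themselves.
        resolved = mapping.get(column, column)
        if resolved in model_parameters or resolved in species_ids:
            continue
        raise NotImplementedError(
            "For PySB PEtab import, only model parameters and species, but "
            "not compartments, are allowed in the condition table. "
            f"Offending column: {resolved}"
        )


if __name__ == "__main__":
    # Passes: a name column, a parameter, and a species mapped via "A0".
    check_condition_columns(
        ["conditionName", "k1", "A0"], {"A0": "__s0"}, {"k1"}, {"__s0"}
    )
    try:
        check_condition_columns(["cell"], {}, {"k1"}, {"__s0"})
    except NotImplementedError as err:
        print(err)
```

As in the importer, the parameter list should come from the extended `pysb_model` rather than the original model, so that output parameters introduced by `_add_observation_model` are also accepted.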

diff --git a/python/sdist/amici/petab/sbml_import.py b/python/sdist/amici/petab/sbml_import.py

new file mode 100644

index 0000000000..6388d6f8b0

--- /dev/null

+++ b/python/sdist/amici/petab/sbml_import.py

@@ -0,0 +1,553 @@

+import logging

+import math

+import os

+import tempfile

+from itertools import chain

+from pathlib import Path

+from typing import Optional, Union

+from warnings import warn

+

+import amici

+import libsbml

+import pandas as pd

+import petab

+import sympy as sp

+from collections import OrderedDict

+from amici.logging import log_execution_time, set_log_level

+from petab.models import MODEL_TYPE_SBML

+from sympy.abc import _clash

+

+from . import PREEQ_INDICATOR_ID

+from .import_helpers import (

+ check_model,

+ get_fixed_parameters,

+ get_observation_model,

+)

+from .util import get_states_in_condition_table

+

+logger = logging.getLogger(__name__)

+

+

+@log_execution_time("Importing PEtab model", logger)