diff --git a/.github/workflows/ci.yml b/.github/workflows/ci.yml

index 54ae897..6243abf 100644

--- a/.github/workflows/ci.yml

+++ b/.github/workflows/ci.yml

@@ -21,5 +21,5 @@ jobs:

key: ${{ github.ref }}

path: .cache

- run: pip install mkdocs-material

- - run: pip install mkdocs-minify-plugin mkdocs-video mkdocs-git-committers-plugin mkdocs-git-revision-date-localized-plugin # mkdocs-git-revision-date-plugin # mkdocs-git-revision-date-localized-plugin mkdocs-git-authors-plugin

+ - run: pip install mkdocs-minify-plugin mkdocs-video mkdocs-git-committers-plugin-2 mkdocs-git-revision-date-localized-plugin # mkdocs-git-revision-date-plugin # mkdocs-git-revision-date-localized-plugin mkdocs-git-authors-plugin

- run: mkdocs gh-deploy --force

\ No newline at end of file

diff --git a/README.md b/README.md

index 24f4a7c..8bc4455 100644

--- a/README.md

+++ b/README.md

@@ -1,103 +1,29 @@

A Dynamic Points Removal Benchmark in Point Cloud Maps

---

-

-[](https://arxiv.org/abs/2307.07260)

-[](https://www.bilibili.com/video/BV1bC4y1R7h3)

-[](https://youtu.be/pCHsNKXDJQM?si=nhbAnPrbaZJEqbjx)

-[](https://hkustconnect-my.sharepoint.com/:b:/g/personal/qzhangcb_connect_ust_hk/EQvNHf9JNEtNpyPg1kkNLNABk0v1TgGyaM_OyCEVuID4RQ?e=TdWzAq)

-

-Here is a preview of the readme in codes. Task detects dynamic points in maps and removes them, enhancing the maps:

+Author: [Qingwen Zhang](http://kin-zhang.github.io) (Kin)

-

- -

+This is the README of **our wiki page**; please visit our [main branch](https://github.com/KTH-RPL/DynamicMap_Benchmark) for more information about the benchmark.

-**Folder** quick view:

+## Install

-- `methods` : contains all the methods in the benchmark

-- `scripts/py/eval`: eval the result pcd compared with ground truth, get quantitative table

-- `scripts/py/data` : pre-process data before benchmark. We also directly provided all the dataset we tested in the map. We run this benchmark offline in computer, so we will extract only pcd files from custom rosbag/other data format [KITTI, Argoverse2]

+If you want to try MkDocs locally, all you need is `Python` and a few Python packages. If you are worried about messing up your environment, you can use a [virtual env](https://docs.python.org/3/library/venv.html) or [anaconda](https://www.anaconda.com/).

-**Quick** try:

-- Teaser data on KITTI sequence 00 only 384.8MB in [Zenodo online drive](https://zenodo.org/record/10886629)

- ```bash

- wget https://zenodo.org/records/10886629/files/00.zip

- unzip 00.zip -d ${data_path, e.g. /home/kin/data}

- ```

-- Clone our repo:

- ```bash

- git clone --recurse-submodules https://github.com/KTH-RPL/DynamicMap_Benchmark.git

- ```

-- Go to methods folder, build and run through

- ```bash

- cd methods/dufomap && cmake -B build -D CMAKE_CXX_COMPILER=g++-10 && cmake --build build

- ./build/dufomap_run ${data_path, e.g. /home/kin/data/00} ${assets/config.toml}

- ```

-

-### News:

-

-Feel free to pull a request if you want to add more methods or datasets. Welcome! We will try our best to update methods and datasets in this benchmark. Please give us a star 🌟 and cite our work 📖 if you find this useful for your research. Thanks!

-

-- **2024/04/29** [BeautyMap](https://arxiv.org/abs/2405.07283) is accepted by RA-L'24. Updated benchmark: BeautyMap and DeFlow submodule instruction in the benchmark. Added the first data-driven method [DeFlow](https://github.com/KTH-RPL/DeFlow/tree/feature/dynamicmap) into our benchmark. Feel free to check.

-- **2024/04/18** [DUFOMap](https://arxiv.org/abs/2403.01449) is accepted by RA-L'24. Updated benchmark: DUFOMap and dynablox submodule instruction in the benchmark. Two datasets w/o gt for demo are added in the download link. Feel free to check.

-- **2024/03/08** **Fix statements** on our ITSC'23 paper: KITTI sequences pose are also from SemanticKITTI which used SuMa. In the DUFOMap paper Section V-C, Table III, we present the dynamic removal result on different pose sources. Check discussion in [DUFOMap](https://arxiv.org/abs/2403.01449) paper if you are interested.

-- **2023/06/13** The [benchmark paper](https://arxiv.org/abs/2307.07260) Accepted by ITSC 2023 and release five methods (Octomap, Octomap w GF, ERASOR, Removert) and three datasets (01, 05, av2, semindoor) in [benchmark paper](https://arxiv.org/abs/2307.07260).

-

----

-

-- [ ] 2024/04/19: I will update a document page soon (tutorial, manual book, and new online leaderboard), and point out the commit for each paper. Since there are some minor mistakes in the first version. Stay tune with us!

-

-

-## Methods:

-

-Please check in [`methods`](methods) folder.

-

-Online (w/o prior map):

-- [x] DUFOMap (Ours 🚀): [RAL'24](https://arxiv.org/abs/2403.01449), [**Benchmark Instruction**](https://github.com/KTH-RPL/dufomap)

-- [x] Octomap w GF (Ours 🚀): [ITSC'23](https://arxiv.org/abs/2307.07260), [**Benchmark improvement ITSC 2023**](https://github.com/Kin-Zhang/octomap/tree/feat/benchmark)

-- [x] dynablox: [RAL'23 official link](https://github.com/ethz-asl/dynablox), [**Benchmark Adaptation**](https://github.com/Kin-Zhang/dynablox/tree/feature/benchmark)

-- [x] Octomap: [ICRA'10 & AR'13 official link](https://github.com/OctoMap/octomap_mapping), [**Benchmark implementation**](https://github.com/Kin-Zhang/octomap/tree/feat/benchmark)

-

-Learning-based (data-driven) (w pretrain-weights provided):

-- [x] DeFlow (Ours 🚀): [ICRA'24](https://arxiv.org/abs/2401.16122), [**Benchmark Adaptation**](https://github.com/KTH-RPL/DeFlow/tree/feature/dynamicmap)

-

-Offline (need prior map).

-- [x] BeautyMap (Ours 🚀): [RAL'24](https://arxiv.org/abs/2405.07283), [**Official Code**](https://github.com/MKJia/BeautyMap)

-- [x] ERASOR: [RAL'21 official link](https://github.com/LimHyungTae/ERASOR), [**benchmark implementation**](https://github.com/Kin-Zhang/ERASOR/tree/feat/no_ros)

-- [x] Removert: [IROS 2020 official link](https://github.com/irapkaist/removert), [**benchmark implementation**](https://github.com/Kin-Zhang/removert)

-

-Please note that we provided the comparison methods also but modified a little bit for us to run the experiments quickly, but no modified on their methods' core. Please check the LICENSE of each method in their official link before using it.

-

-You will find all methods in this benchmark under `methods` folder. So that you can easily reproduce the experiments. [Or click here to check our score screenshot directly](assets/imgs/eval_demo.png).

-

-

-Last but not least, feel free to pull request if you want to add more methods. Welcome!

-

-## Dataset & Scripts

-

-Download PCD files mentioned in paper from [Zenodo online drive](https://zenodo.org/records/10886629). Or create unified format by yourself through the [scripts we provided](scripts/README.md) for more open-data or your own dataset. Please follow the LICENSE of each dataset before using it.

-

-- [x] [Semantic-Kitti, outdoor small town](https://semantic-kitti.org/dataset.html) VLP-64

-- [x] [Argoverse2.0, outdoor US cities](https://www.argoverse.org/av2.html#lidar-link) VLP-32

-- [x] [UDI-Plane] Our own dataset, Collected by VLP-16 in a small vehicle.

-- [x] [KTH-Campuse] Our [Multi-Campus Dataset](https://mcdviral.github.io/), Collected by [Leica RTC360 3D Laser Scan](https://leica-geosystems.com/products/laser-scanners/scanners/leica-rtc360). Only 18 frames included to download for demo, please check [the official website](https://mcdviral.github.io/) for more.

-- [x] [Indoor-Floor] Our own dataset, Collected by Livox mid-360 in a quadruped robot.

-

-

-

-Welcome to contribute your dataset with ground truth to the community through pull request.

-

-### Evaluation

-

-First all the methods will output the clean map, if you are only **user on map clean task,** it's **enough**. But for evaluation, we need to extract the ground truth label from gt label based on clean map. Why we need this? Since maybe some methods downsample in their pipeline, we need to extract the gt label from the downsampled map.

-

-Check [create dataset readme part](scripts/README.md#evaluation) in the scripts folder to get more information. But you can directly download the dataset through the link we provided. Then no need to read the creation; just use the data you downloaded.

+Main package (only needed in some cases; check the issue section):

+```bash

+pip install mkdocs-material

+```

-- Visualize the result pcd files in [CloudCompare](https://www.danielgm.net/cc/) or the script to provide, one click to get all evaluation benchmarks and comparison images like paper have check in [scripts/py/eval](scripts/py/eval).

+Plugin packages:

+```bash

+pip install mkdocs-minify-plugin mkdocs-git-revision-date-localized-plugin mkdocs-git-authors-plugin mkdocs-video

+```

-- All color bar also provided in CloudCompare, here is [tutorial how we make the animation video](TODO).

+### Run

+```bash

+mkdocs serve

+```

## Acknowledgements

diff --git a/docs/data.md b/docs/data/creation.md

similarity index 63%

rename from docs/data.md

rename to docs/data/creation.md

index 2a9abeb..2f7af05 100644

--- a/docs/data.md

+++ b/docs/data/creation.md

@@ -1,73 +1,13 @@

-# Data

+# Data Creation

-In this section, we will introduce the data format we use in the benchmark, and how to prepare the data (public datasets or collected by ourselves) for the benchmark.

-

-## Format

-

-We saved all our data into PCD files, first let me introduce the [PCD file format](https://pointclouds.org/documentation/tutorials/pcd_file_format.html):

-

-The important two for us are `VIEWPOINT`, `POINTS` and `DATA`:

-

-- **VIEWPOINT** - specifies an acquisition viewpoint for the points in the dataset. This could potentially be later on used for building transforms between different coordinate systems, or for aiding with features such as surface normals, that need a consistent orientation.

-

- The viewpoint information is specified as a translation (tx ty tz) + quaternion (qw qx qy qz). The default value is:

-

- ```bash

- VIEWPOINT 0 0 0 1 0 0 0

- ```

-

-- **POINTS** - specifies the number of points in the dataset.

-

-- **DATA** - specifies the data type that the point cloud data is stored in. As of version 0.7, three data types are supported: ascii, binary, and binary_compressed. We saved as binary for faster reading and writing.

-

-### Example

-

-```

-# .PCD v0.7 - Point Cloud Data file format

-VERSION 0.7

-FIELDS x y z intensity

-SIZE 4 4 4 4

-TYPE F F F F

-COUNT 1 1 1 1

-WIDTH 125883

-HEIGHT 1

-VIEWPOINT -15.6504 17.981 -0.934952 0.882959 -0.0239536 -0.0058903 -0.468802

-POINTS 125883

-DATA binary

-```

-

-In this `004390.pcd` we have 125883 points, and the pose (sensor center) of this frame is: `-15.6504 17.981 -0.934952 0.882959 -0.0239536 -0.0058903 -0.468802`. All points are already transformed to the world frame.

-

-## Download benchmark data

-

-We already processed the data in the benchmark, you can download the data from the [following links](https://zenodo.org/records/10886629):

-

-

-| Dataset | Description | Sensor Type | Total Frame Number | Size |

-| --- | --- | --- | --- | --- |

-| KITTI sequence 00 | in a small town with few dynamics (including one pedestrian around) | VLP-64 | 141 | 384.8 MB |

-| KITTI sequence 05 | in a small town straight way, one higher car, the benchmarking paper cover image from this sequence. | VLP-64 | 321 | 864.0 MB |

-| Argoverse2 | in a big city, crowded and tall buildings (including cyclists, vehicles, people walking near the building etc. | 2 x VLP-32 | 575 | 1.3 GB |

-| KTH campus (no gt) | Collected by us (Thien-Minh) on the KTH campus. Lots of people move around on the campus. | Leica RTC360 | 18 | 256.4 MB |

-| Semi-indoor | Collected by us, running on a small 1x2 vehicle with two people walking around the platform. | VLP-16 | 960 | 620.8 MB |

-| Twofloor (no gt) | Collected by us (Bowen Yang) in a quadruped robot. A two-floor structure environment with one pedestrian around. | Livox-mid 360 | 3305 | 725.1 MB |

-

-Download command:

-```bash

-wget https://zenodo.org/api/records/10886629/files-archive.zip

-

-# or download each sequence separately

-wget https://zenodo.org/records/10886629/files/00.zip

-wget https://zenodo.org/records/10886629/files/05.zip

-wget https://zenodo.org/records/10886629/files/av2.zip

-wget https://zenodo.org/records/10886629/files/kthcampus.zip

-wget https://zenodo.org/records/10886629/files/semindoor.zip

-wget https://zenodo.org/records/10886629/files/twofloor.zip

-```

+In this section, we show how to convert public datasets (KITTI, Argoverse 2) and data we collected ourselves (rosbag) into the expected format.

+

+Still, I recommend downloading the benchmark data directly from [Zenodo](https://zenodo.org/records/10886629) instead of following this section; see the [data download and visualization](index.md#download-benchmark-data) page.
+This section is only needed if you want to **run the benchmark on more data of your own**.

## Create by yourself

-If you want to process more data, you can follow the instructions below. (

+If you want to process more data, you can follow the instructions below.

!!! Note

Feel free to skip this section if you only want to use the benchmark data.

diff --git a/docs/data/index.md b/docs/data/index.md

new file mode 100644

index 0000000..1f6120e

--- /dev/null

+++ b/docs/data/index.md

@@ -0,0 +1,122 @@

+# Data Description

+

+In this section, we will introduce the data format we use in the benchmark, and how to visualize the data easily.

+The next section, on data creation, shows how to convert your own data into this format.

+

+## Benchmark Unified Format

+

+We save all our data as **PCD files**. First, here is a short introduction to the [PCD file format](https://pointclouds.org/documentation/tutorials/pcd_file_format.html):

+

+The three fields that matter most for us are `VIEWPOINT`, `POINTS`, and `DATA`:

+

+- **VIEWPOINT** - specifies an acquisition viewpoint for the points in the dataset. This could potentially be later on used for building transforms between different coordinate systems, or for aiding with features such as surface normals, that need a consistent orientation.

+

+ The viewpoint information is specified as a translation (tx ty tz) + quaternion (qw qx qy qz). The default value is:

+

+ ```bash

+ VIEWPOINT 0 0 0 1 0 0 0

+ ```

+

+- **POINTS** - specifies the number of points in the dataset.

+

+- **DATA** - specifies the data type that the point cloud data is stored in. As of version 0.7, three data types are supported: ascii, binary, and binary_compressed. We store our data as binary for faster reading and writing.

+

+### A Header Example

+

+Here is an example header from `004390.pcd` in KITTI sequence 00:

+

+```

+# .PCD v0.7 - Point Cloud Data file format

+VERSION 0.7

+FIELDS x y z intensity

+SIZE 4 4 4 4

+TYPE F F F F

+COUNT 1 1 1 1

+WIDTH 125883

+HEIGHT 1

+VIEWPOINT -15.6504 17.981 -0.934952 0.882959 -0.0239536 -0.0058903 -0.468802

+POINTS 125883

+DATA binary

+```

+

+This `004390.pcd` contains 125883 points, and the pose (sensor center) of this frame is `-15.6504 17.981 -0.934952 0.882959 -0.0239536 -0.0058903 -0.468802`.

+

+Note that all points in the data frames are ==already transformed to the world frame==, and `VIEWPOINT` is the sensor pose.
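To make these header fields concrete, here is a minimal Python sketch that parses the header text above and turns `VIEWPOINT` into a 4x4 pose matrix. It is not part of the benchmark code: the helper names (`parse_pcd_header`, `viewpoint_to_matrix`, `world_to_sensor`) are hypothetical, it uses NumPy, and it assumes that `VIEWPOINT` is the sensor-to-world transform (translation `tx ty tz`, quaternion `qw qx qy qz`), which is how we read "VIEWPOINT is the sensor pose".

```python
import numpy as np

EXAMPLE_HEADER = """# .PCD v0.7 - Point Cloud Data file format
VERSION 0.7
FIELDS x y z intensity
SIZE 4 4 4 4
TYPE F F F F
COUNT 1 1 1 1
WIDTH 125883
HEIGHT 1
VIEWPOINT -15.6504 17.981 -0.934952 0.882959 -0.0239536 -0.0058903 -0.468802
POINTS 125883
DATA binary"""


def parse_pcd_header(text):
    """Parse PCD v0.7 header text into a dict (keyword -> list of string values)."""
    header = {}
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue  # skip blank lines and comment lines
        header[parts[0]] = parts[1:]
        if parts[0] == "DATA":
            break  # the header always ends with the DATA line
    # convert the fields discussed above into convenient Python types
    header["POINTS"] = int(header["POINTS"][0])
    header["VIEWPOINT"] = [float(v) for v in header.get("VIEWPOINT", "0 0 0 1 0 0 0".split())]
    header["DATA"] = header["DATA"][0]
    return header


def viewpoint_to_matrix(viewpoint):
    """Turn VIEWPOINT (tx ty tz qw qx qy qz) into a 4x4 sensor-to-world transform."""
    tx, ty, tz, qw, qx, qy, qz = viewpoint
    # normalize the quaternion so rounding in the header does not skew the rotation
    w, x, y, z = np.array([qw, qx, qy, qz]) / np.linalg.norm([qw, qx, qy, qz])
    T = np.eye(4)
    T[:3, :3] = [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]
    T[:3, 3] = [tx, ty, tz]
    return T


def world_to_sensor(points_world, viewpoint):
    """Express world-frame points of shape (N, 3) in the sensor frame of their scan."""
    T = viewpoint_to_matrix(viewpoint)
    R, t = T[:3, :3], T[:3, 3]
    # inverse rigid transform p_sensor = R^T (p_world - t), written for row vectors
    return (np.asarray(points_world, dtype=float) - t) @ R


header = parse_pcd_header(EXAMPLE_HEADER)
print(header["POINTS"], header["DATA"])  # 125883 binary
sensor_center = np.array([header["VIEWPOINT"][:3]])
print(world_to_sensor(sensor_center, header["VIEWPOINT"]))  # approximately [[0, 0, 0]]
```

Because the stored points are already in the world frame, you only need something like `world_to_sensor` if a method expects sensor-frame scans; the sensor center itself maps to the origin, which is a quick sanity check.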

+

+### How to read PCD files

+

+In C++, we usually use the PCL library to read PCD files. Here is a simple example:

+

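+```cpp
+#include <iostream>
+#include <pcl/io/pcd_io.h>
+#include <pcl/point_types.h>
+int main() {
+    pcl::PointCloud<pcl::PointXYZ>::Ptr pcd(new pcl::PointCloud<pcl::PointXYZ>);
+    // returns -1 on failure; VIEWPOINT is stored in pcd->sensor_origin_ and pcd->sensor_orientation_
+    if (pcl::io::loadPCDFile<pcl::PointXYZ>("data/00/004390.pcd", *pcd) == -1) {
+        std::cerr << "Failed to read data/00/004390.pcd\n";
+        return -1;
+    }
+    return 0;
+}
+```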

+

+In Python, you can use the simple PCD reader in [the benchmark code](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/scripts/py/utils/pcdpy3.py), also available as [my gist](https://gist.github.com/Kin-Zhang/bd6475bdfa0ebde56ab5c060054d5185). You don't need to read the script in detail; just use it directly.

+

+```python

+import pcdpy3 # the script I provided

+pcd_data = pcdpy3.PointCloud.from_path('data/00/004390.pcd')

+pc = pcd_data.np_data[:,:3] # shape (N, 3): N points, columns x y z
+# if the header has an intensity or rgb field, you can get it with:

+# pc_intensity = pcd_data.np_data[:,3] # shape (N,)

+# pc_rgb = pcd_data.np_data[:,3:6] # shape (N, 3)

+```
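If you convert your own data (see the data creation page), the output should follow the same layout. The sketch below writes an `(N, 4)` array of world-frame `x y z intensity` points plus the sensor pose as a binary PCD with the same header fields as the example above. It is a hypothetical helper (`write_benchmark_pcd`), not the benchmark's own writer, and it assumes little-endian float32 data, which is what typical x86 machines produce.

```python
import numpy as np


def write_benchmark_pcd(path, points, viewpoint=(0, 0, 0, 1, 0, 0, 0)):
    """Write world-frame points [x, y, z, intensity] of shape (N, 4) as a binary PCD v0.7 file.

    viewpoint: sensor pose as (tx, ty, tz, qw, qx, qy, qz), stored in the VIEWPOINT line.
    """
    points = np.asarray(points, dtype="<f4")  # little-endian float32, matching SIZE 4 / TYPE F
    if points.ndim != 2 or points.shape[1] != 4:
        raise ValueError(f"expected shape (N, 4), got {points.shape}")
    n = len(points)
    header = "\n".join([
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS x y z intensity",
        "SIZE 4 4 4 4",
        "TYPE F F F F",
        "COUNT 1 1 1 1",
        f"WIDTH {n}",
        "HEIGHT 1",  # unorganized cloud: a single row of N points
        "VIEWPOINT " + " ".join(str(float(v)) for v in viewpoint),
        f"POINTS {n}",
        "DATA binary",
    ]) + "\n"
    with open(path, "wb") as f:
        f.write(header.encode("ascii"))
        # binary section: points stored one after another, 16 bytes each, no padding
        f.write(points.tobytes())
```

For example, `write_benchmark_pcd("000000.pcd", pts, viewpoint=pose)` writes one frame; you can check it by loading it back with `pcdpy3` as in the Python snippet above, or by opening it in CloudCompare.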

+

+## Download benchmark data

+

+We have already processed the benchmark data; you can download it from the [following links](https://zenodo.org/records/10886629):

+

+

+| Dataset | Description | Sensor type | Total frames | Size |
+| --- | --- | --- | --- | --- |
+| KITTI sequence 00 | Small town with few dynamic objects (including one pedestrian) | VLP-64 | 141 | 384.8 MB |
+| KITTI sequence 05 | Straight road in a small town with one tall vehicle; the cover image of the benchmark paper comes from this sequence | VLP-64 | 321 | 864.0 MB |
+| Argoverse2 | Big, crowded city with tall buildings (including cyclists, vehicles, and people walking near the buildings) | 2 x VLP-32 | 575 | 1.3 GB |
+| KTH campus (no gt) | Collected by us (Thien-Minh) on the KTH campus, with many people moving around | Leica RTC360 | 18 | 256.4 MB |
+| Semi-indoor | Collected by us with a small 1x2 vehicle, with two people walking around the platform | VLP-16 | 960 | 620.8 MB |
+| Twofloor (no gt) | Collected by us (Bowen Yang) with a quadruped robot in a two-floor environment with one pedestrian around | Livox Mid-360 | 3305 | 725.1 MB |

+

+Download command:

+```bash

+wget https://zenodo.org/api/records/10886629/files-archive.zip

+

+# or download each sequence separately

+wget https://zenodo.org/records/10886629/files/00.zip

+wget https://zenodo.org/records/10886629/files/05.zip

+wget https://zenodo.org/records/10886629/files/av2.zip

+wget https://zenodo.org/records/10886629/files/kthcampus.zip

+wget https://zenodo.org/records/10886629/files/semindoor.zip

+wget https://zenodo.org/records/10886629/files/twofloor.zip

+```

+

+## Visualize the data

+

+We provide a simple script to visualize the benchmark data: [scripts/py/data/play_data.py](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/scripts/py/data/play_data.py). Download the data and install the requirements first.

+

+```bash

+cd scripts/py

+

+# download the data

+wget https://zenodo.org/records/10886629/files/twofloor.zip
+unzip twofloor.zip -d /home/kin/data # replace with your own data path

+

+# https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/scripts/py/requirements.txt

+pip install -r requirements.txt

+```

+

+Run it:

+```bash

+python data/play_data.py --data_folder /home/kin/data/twofloor --speed 1 # speed 1 for normal speed, 2 for faster with 2x speed

+```

+

+A window will pop up showing the point cloud data; use the mouse to rotate, zoom in/out, and move the view. The terminal prints help information on how to start/stop playback.

+

+

+

+

+

+The axes show the sensor frame. Playback follows the sensor frame, so you can see the sensor moving around in the video.

+

diff --git a/docs/evaluation.md b/docs/evaluation/index.md

similarity index 57%

rename from docs/evaluation.md

rename to docs/evaluation/index.md

index b14a75b..4839647 100644

--- a/docs/evaluation.md

+++ b/docs/evaluation/index.md

@@ -19,9 +19,9 @@ All the methods will output the **clean map**, so we need to extract the ground

### 0. Run Methods

-Check the [`methods`](../methods) folder, there is a [README](../methods/README.md) file to guide you how to run all the methods.

+Check the [`methods`](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/methods) folder; its [README](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/methods/README.md) explains how to run all the methods.

-Or check the shell script in [`0_run_methods_all.sh`](../scripts/sh/0_run_methods_all.sh), run them with one command.

+Alternatively, use the shell script [`0_run_methods_all.sh`](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/scripts/sh/0_run_methods_all.sh) to run them all with one command.

```bash

./scripts/sh/0_run_methods_all.sh

@@ -36,14 +36,14 @@ Or check the shell script in [`0_run_methods_all.sh`](../scripts/sh/0_run_method

./export_eval_pcd /home/kin/bags/VLP16_cone_two_people octomapfg_output.pcd 0.05

```

-Or check the shell script in [`1_export_eval_pcd.sh`](../scripts/sh/1_export_eval_pcd.sh), run them with one command.

+Alternatively, use the shell script [`1_export_eval_pcd.sh`](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/scripts/sh/1_export_eval_pcd.sh) to run them all with one command.

```bash

./scripts/sh/1_export_eval_pcd.sh

```
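The export step exists because some methods downsample the map in their pipeline, so the ground-truth label of each point in their clean output has to be looked up again. Conceptually this is a nearest-neighbour label transfer. The sketch below illustrates that idea under our own assumptions; it is not the implementation of `export_eval_pcd`, we only assume (without confirming) that a distance threshold like the `0.05` argument above plays this role, and `transfer_labels` plus the `-1` "unmatched" label are hypothetical.

```python
import numpy as np


def transfer_labels(output_xyz, gt_xyz, gt_labels, max_dist=0.05, chunk=256):
    """Give each point of a method's clean map the label of its nearest ground-truth point.

    Points with no ground-truth neighbour within max_dist get the label -1.
    Brute force in chunks: fine for small clouds only; for full maps a KD-tree
    (e.g. scipy.spatial.cKDTree) would be the usual choice.
    """
    output_xyz = np.asarray(output_xyz, dtype=np.float64)
    gt_xyz = np.asarray(gt_xyz, dtype=np.float64)
    gt_labels = np.asarray(gt_labels)
    labels = np.full(len(output_xyz), -1, dtype=np.int64)
    for start in range(0, len(output_xyz), chunk):
        block = output_xyz[start:start + chunk]
        # squared distance from every point in this block to every ground-truth point
        d2 = ((block[:, None, :] - gt_xyz[None, :, :]) ** 2).sum(axis=2)
        nearest = d2.argmin(axis=1)
        close = d2[np.arange(len(block)), nearest] <= max_dist ** 2
        # labels[start:start + chunk] is a view, so this writes into labels
        labels[start:start + chunk][close] = gt_labels[nearest[close]]
    return labels


gt_xyz = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
gt_labels = np.array([0, 1])  # hypothetical convention: 0 = static, 1 = dynamic
out_xyz = np.array([[0.02, 0.0, 0.0], [3.0, 3.0, 3.0]])
print(transfer_labels(out_xyz, gt_xyz, gt_labels).tolist())  # [0, -1]
```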

### 2. Print the score

-Check the script and the only thing you need do is change the folder path to *your data folder*. And Select the methods you want to compare. Please try to open and read the [script first](py/eval/evaluate_all.py)

+Open and read the [script](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/scripts/py/eval/evaluate_all.py) first. The only things you need to change are the folder path (set it to *your data folder*) and the list of methods you want to compare.

```bash

python3 scripts/py/eval/evaluate_all.py

@@ -51,4 +51,13 @@ python3 scripts/py/eval/evaluate_all.py

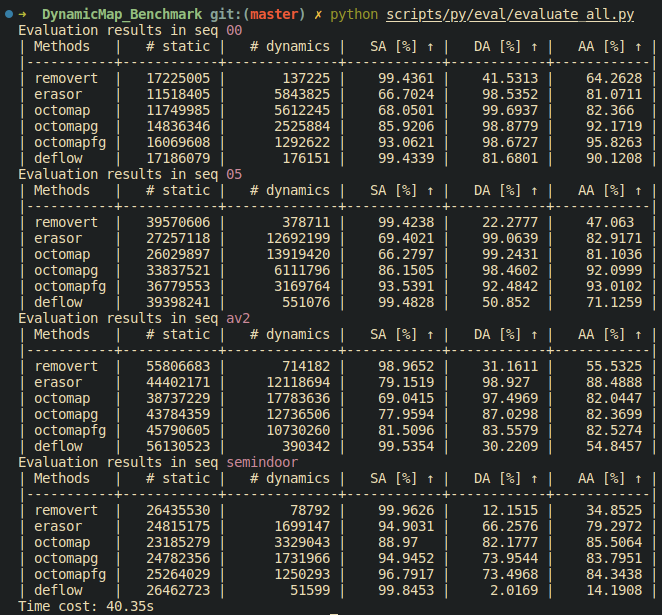

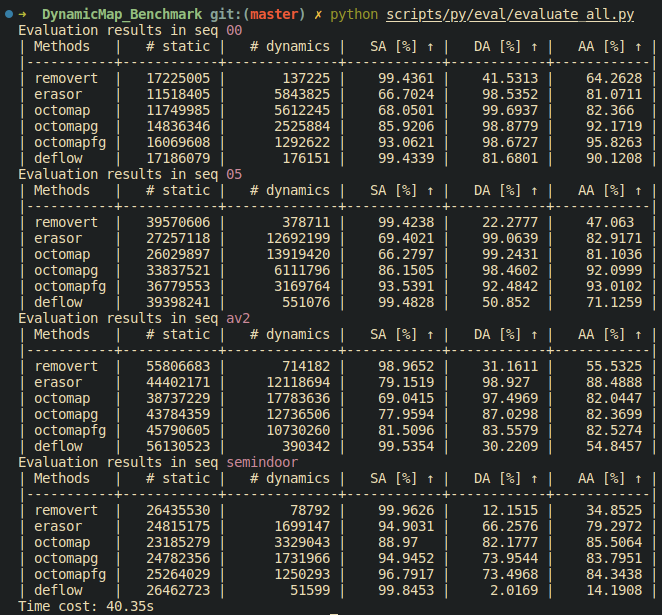

Here is the demo output:

-

\ No newline at end of file

+

+

+

+### 3. Visualize the result

+

+The Jupyter notebook [scripts/py/eval/figure_plot.ipynb](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/scripts/py/eval/figure_plot.ipynb) helps you visualize the results and produce the qualitative figures directly.

+

+

+

+

\ No newline at end of file

diff --git a/docs/index.md b/docs/index.md

index 416bdb8..c49c1e6 100644

--- a/docs/index.md

+++ b/docs/index.md

@@ -2,12 +2,15 @@

**Welcome to the Dynamic Map Benchmark Wiki Page!**

-You can always press `F` or top right search bar to search for specific topics.

+You can always press `F` or use the search bar at the top right to search for specific topics.

+

+If you find this useful, please give us [a star](https://github.com/KTH-RPL/DynamicMap_Benchmark)🌟 and [cite our work](#cite-our-papers)📖 to support us.

-

+This is the README of **our wiki page**; please visit our [main branch](https://github.com/KTH-RPL/DynamicMap_Benchmark) for more information about the benchmark.

-**Folder** quick view:

+## Install

-- `methods` : contains all the methods in the benchmark

-- `scripts/py/eval`: eval the result pcd compared with ground truth, get quantitative table

-- `scripts/py/data` : pre-process data before benchmark. We also directly provided all the dataset we tested in the map. We run this benchmark offline in computer, so we will extract only pcd files from custom rosbag/other data format [KITTI, Argoverse2]

+If you want to try MkDocs locally, all you need is `Python` and a few Python packages. If you are worried about messing up your environment, you can use a [virtual env](https://docs.python.org/3/library/venv.html) or [anaconda](https://www.anaconda.com/).

-**Quick** try:

-- Teaser data on KITTI sequence 00 only 384.8MB in [Zenodo online drive](https://zenodo.org/record/10886629)

- ```bash

- wget https://zenodo.org/records/10886629/files/00.zip

- unzip 00.zip -d ${data_path, e.g. /home/kin/data}

- ```

-- Clone our repo:

- ```bash

- git clone --recurse-submodules https://github.com/KTH-RPL/DynamicMap_Benchmark.git

- ```

-- Go to methods folder, build and run through

- ```bash

- cd methods/dufomap && cmake -B build -D CMAKE_CXX_COMPILER=g++-10 && cmake --build build

- ./build/dufomap_run ${data_path, e.g. /home/kin/data/00} ${assets/config.toml}

- ```

-

-### News:

-

-Feel free to pull a request if you want to add more methods or datasets. Welcome! We will try our best to update methods and datasets in this benchmark. Please give us a star 🌟 and cite our work 📖 if you find this useful for your research. Thanks!

-

-- **2024/04/29** [BeautyMap](https://arxiv.org/abs/2405.07283) is accepted by RA-L'24. Updated benchmark: BeautyMap and DeFlow submodule instruction in the benchmark. Added the first data-driven method [DeFlow](https://github.com/KTH-RPL/DeFlow/tree/feature/dynamicmap) into our benchmark. Feel free to check.

-- **2024/04/18** [DUFOMap](https://arxiv.org/abs/2403.01449) is accepted by RA-L'24. Updated benchmark: DUFOMap and dynablox submodule instruction in the benchmark. Two datasets w/o gt for demo are added in the download link. Feel free to check.

-- **2024/03/08** **Fix statements** on our ITSC'23 paper: KITTI sequences pose are also from SemanticKITTI which used SuMa. In the DUFOMap paper Section V-C, Table III, we present the dynamic removal result on different pose sources. Check discussion in [DUFOMap](https://arxiv.org/abs/2403.01449) paper if you are interested.

-- **2023/06/13** The [benchmark paper](https://arxiv.org/abs/2307.07260) Accepted by ITSC 2023 and release five methods (Octomap, Octomap w GF, ERASOR, Removert) and three datasets (01, 05, av2, semindoor) in [benchmark paper](https://arxiv.org/abs/2307.07260).

-

----

-

-- [ ] 2024/04/19: I will update a document page soon (tutorial, manual book, and new online leaderboard), and point out the commit for each paper. Since there are some minor mistakes in the first version. Stay tune with us!

-

-

-## Methods:

-

-Please check in [`methods`](methods) folder.

-

-Online (w/o prior map):

-- [x] DUFOMap (Ours 🚀): [RAL'24](https://arxiv.org/abs/2403.01449), [**Benchmark Instruction**](https://github.com/KTH-RPL/dufomap)

-- [x] Octomap w GF (Ours 🚀): [ITSC'23](https://arxiv.org/abs/2307.07260), [**Benchmark improvement ITSC 2023**](https://github.com/Kin-Zhang/octomap/tree/feat/benchmark)

-- [x] dynablox: [RAL'23 official link](https://github.com/ethz-asl/dynablox), [**Benchmark Adaptation**](https://github.com/Kin-Zhang/dynablox/tree/feature/benchmark)

-- [x] Octomap: [ICRA'10 & AR'13 official link](https://github.com/OctoMap/octomap_mapping), [**Benchmark implementation**](https://github.com/Kin-Zhang/octomap/tree/feat/benchmark)

-

-Learning-based (data-driven) (w pretrain-weights provided):

-- [x] DeFlow (Ours 🚀): [ICRA'24](https://arxiv.org/abs/2401.16122), [**Benchmark Adaptation**](https://github.com/KTH-RPL/DeFlow/tree/feature/dynamicmap)

-

-Offline (need prior map).

-- [x] BeautyMap (Ours 🚀): [RAL'24](https://arxiv.org/abs/2405.07283), [**Official Code**](https://github.com/MKJia/BeautyMap)

-- [x] ERASOR: [RAL'21 official link](https://github.com/LimHyungTae/ERASOR), [**benchmark implementation**](https://github.com/Kin-Zhang/ERASOR/tree/feat/no_ros)

-- [x] Removert: [IROS 2020 official link](https://github.com/irapkaist/removert), [**benchmark implementation**](https://github.com/Kin-Zhang/removert)

-

-Please note that we provided the comparison methods also but modified a little bit for us to run the experiments quickly, but no modified on their methods' core. Please check the LICENSE of each method in their official link before using it.

-

-You will find all methods in this benchmark under `methods` folder. So that you can easily reproduce the experiments. [Or click here to check our score screenshot directly](assets/imgs/eval_demo.png).

-

-

-Last but not least, feel free to pull request if you want to add more methods. Welcome!

-

-## Dataset & Scripts

-

-Download PCD files mentioned in paper from [Zenodo online drive](https://zenodo.org/records/10886629). Or create unified format by yourself through the [scripts we provided](scripts/README.md) for more open-data or your own dataset. Please follow the LICENSE of each dataset before using it.

-

-- [x] [Semantic-Kitti, outdoor small town](https://semantic-kitti.org/dataset.html) VLP-64

-- [x] [Argoverse2.0, outdoor US cities](https://www.argoverse.org/av2.html#lidar-link) VLP-32

-- [x] [UDI-Plane] Our own dataset, Collected by VLP-16 in a small vehicle.

-- [x] [KTH-Campuse] Our [Multi-Campus Dataset](https://mcdviral.github.io/), Collected by [Leica RTC360 3D Laser Scan](https://leica-geosystems.com/products/laser-scanners/scanners/leica-rtc360). Only 18 frames included to download for demo, please check [the official website](https://mcdviral.github.io/) for more.

-- [x] [Indoor-Floor] Our own dataset, Collected by Livox mid-360 in a quadruped robot.

-

-

-

-Welcome to contribute your dataset with ground truth to the community through pull request.

-

-### Evaluation

-

-First all the methods will output the clean map, if you are only **user on map clean task,** it's **enough**. But for evaluation, we need to extract the ground truth label from gt label based on clean map. Why we need this? Since maybe some methods downsample in their pipeline, we need to extract the gt label from the downsampled map.

-

-Check [create dataset readme part](scripts/README.md#evaluation) in the scripts folder to get more information. But you can directly download the dataset through the link we provided. Then no need to read the creation; just use the data you downloaded.

+Main package (only needed in some cases; check the issue section):

+```bash

+pip install mkdocs-material

+```

-- Visualize the result pcd files in [CloudCompare](https://www.danielgm.net/cc/) or the script to provide, one click to get all evaluation benchmarks and comparison images like paper have check in [scripts/py/eval](scripts/py/eval).

+Plugin packages:

+```bash

+pip install mkdocs-minify-plugin mkdocs-git-revision-date-localized-plugin mkdocs-git-authors-plugin mkdocs-video

+```

-- All color bar also provided in CloudCompare, here is [tutorial how we make the animation video](TODO).

+### Run

+```bash

+mkdocs serve

+```

## Acknowledgements

diff --git a/docs/data.md b/docs/data/creation.md

similarity index 63%

rename from docs/data.md

rename to docs/data/creation.md

index 2a9abeb..2f7af05 100644

--- a/docs/data.md

+++ b/docs/data/creation.md

@@ -1,73 +1,13 @@

-# Data

+# Data Creation

-In this section, we will introduce the data format we use in the benchmark, and how to prepare the data (public datasets or collected by ourselves) for the benchmark.

-

-## Format

-

-We saved all our data into PCD files, first let me introduce the [PCD file format](https://pointclouds.org/documentation/tutorials/pcd_file_format.html):

-

-The important two for us are `VIEWPOINT`, `POINTS` and `DATA`:

-

-- **VIEWPOINT** - specifies an acquisition viewpoint for the points in the dataset. This could potentially be later on used for building transforms between different coordinate systems, or for aiding with features such as surface normals, that need a consistent orientation.

-

- The viewpoint information is specified as a translation (tx ty tz) + quaternion (qw qx qy qz). The default value is:

-

- ```bash

- VIEWPOINT 0 0 0 1 0 0 0

- ```

-

-- **POINTS** - specifies the number of points in the dataset.

-

-- **DATA** - specifies the data type that the point cloud data is stored in. As of version 0.7, three data types are supported: ascii, binary, and binary_compressed. We saved as binary for faster reading and writing.

-

-### Example

-

-```

-# .PCD v0.7 - Point Cloud Data file format

-VERSION 0.7

-FIELDS x y z intensity

-SIZE 4 4 4 4

-TYPE F F F F

-COUNT 1 1 1 1

-WIDTH 125883

-HEIGHT 1

-VIEWPOINT -15.6504 17.981 -0.934952 0.882959 -0.0239536 -0.0058903 -0.468802

-POINTS 125883

-DATA binary

-```

-

-In this `004390.pcd` we have 125883 points, and the pose (sensor center) of this frame is: `-15.6504 17.981 -0.934952 0.882959 -0.0239536 -0.0058903 -0.468802`. All points are already transformed to the world frame.

-

-## Download benchmark data

-

-We already processed the data in the benchmark, you can download the data from the [following links](https://zenodo.org/records/10886629):

-

-

-| Dataset | Description | Sensor Type | Total Frame Number | Size |

-| --- | --- | --- | --- | --- |

-| KITTI sequence 00 | in a small town with few dynamics (including one pedestrian around) | VLP-64 | 141 | 384.8 MB |

-| KITTI sequence 05 | in a small town straight way, one higher car, the benchmarking paper cover image from this sequence. | VLP-64 | 321 | 864.0 MB |

-| Argoverse2 | in a big city, crowded and tall buildings (including cyclists, vehicles, people walking near the building etc. | 2 x VLP-32 | 575 | 1.3 GB |

-| KTH campus (no gt) | Collected by us (Thien-Minh) on the KTH campus. Lots of people move around on the campus. | Leica RTC360 | 18 | 256.4 MB |

-| Semi-indoor | Collected by us, running on a small 1x2 vehicle with two people walking around the platform. | VLP-16 | 960 | 620.8 MB |

-| Twofloor (no gt) | Collected by us (Bowen Yang) in a quadruped robot. A two-floor structure environment with one pedestrian around. | Livox-mid 360 | 3305 | 725.1 MB |

-

-Download command:

-```bash

-wget https://zenodo.org/api/records/10886629/files-archive.zip

-

-# or download each sequence separately

-wget https://zenodo.org/records/10886629/files/00.zip

-wget https://zenodo.org/records/10886629/files/05.zip

-wget https://zenodo.org/records/10886629/files/av2.zip

-wget https://zenodo.org/records/10886629/files/kthcampus.zip

-wget https://zenodo.org/records/10886629/files/semindoor.zip

-wget https://zenodo.org/records/10886629/files/twofloor.zip

-```

+In this section, we demonstrate how to convert data into the expected format, both from public datasets (KITTI, Argoverse 2) and from data we collected ourselves (rosbag).

+

+Still, I recommend downloading the benchmark data directly from the [Zenodo](https://zenodo.org/records/10886629) link and skipping this section. Go back to the [data download and visualization](index.md#download-benchmark-data) page.

+This section is only needed if you want to **run the benchmark on your own data**.

## Create by yourself

-If you want to process more data, you can follow the instructions below. (

+If you want to process more data, you can follow the instructions below.

!!! Note

Feel free to skip this section if you only want to use the benchmark data.
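
Whatever the source (KITTI, Argoverse 2, or a rosbag), this step should produce one PCD file per frame in the format described on the [data overview](index.md) page. The points must already be transformed to the world frame, and the sensor pose is stored in `VIEWPOINT` as `tx ty tz qw qx qy qz`. The sketch below writes one such frame with NumPy alone. The function name `write_benchmark_pcd` is hypothetical and is not the benchmark's own conversion script, which may differ in details. It also assumes 4-byte float fields (`x y z` with optional `intensity`).

```python
import numpy as np


def write_benchmark_pcd(path, points_world, viewpoint):
    """Write one frame as a binary PCD in the benchmark layout (hypothetical helper).

    points_world: (N, 3) x y z or (N, 4) x y z intensity, already in the world frame.
    viewpoint: sensor pose as (tx, ty, tz, qw, qx, qy, qz), stored in VIEWPOINT.
    """
    pts = np.ascontiguousarray(points_world, dtype="<f4")
    if pts.ndim != 2 or pts.shape[1] not in (3, 4):
        raise ValueError("expected an (N, 3) or (N, 4) array")
    if len(viewpoint) != 7:
        raise ValueError("viewpoint needs 7 values: tx ty tz qw qx qy qz")
    n_fields = pts.shape[1]
    fields = ["x", "y", "z", "intensity"][:n_fields]
    header = [
        "# .PCD v0.7 - Point Cloud Data file format",
        "VERSION 0.7",
        "FIELDS " + " ".join(fields),
        "SIZE " + " ".join(["4"] * n_fields),
        "TYPE " + " ".join(["F"] * n_fields),
        "COUNT " + " ".join(["1"] * n_fields),
        f"WIDTH {pts.shape[0]}",
        "HEIGHT 1",  # unorganized cloud: WIDTH equals POINTS
        "VIEWPOINT " + " ".join(str(float(v)) for v in viewpoint),
        f"POINTS {pts.shape[0]}",
        "DATA binary",
    ]
    with open(path, "wb") as f:
        f.write(("\n".join(header) + "\n").encode("ascii"))
        f.write(pts.tobytes())  # row-major: x y z [intensity] per point
```

For example, `write_benchmark_pcd("pcd/000000.pcd", points, (tx, ty, tz, qw, qx, qy, qz))` writes one frame. Here `points` and the pose values come from your own pipeline, and the file name is only illustrative.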

diff --git a/docs/data/index.md b/docs/data/index.md

new file mode 100644

index 0000000..1f6120e

--- /dev/null

+++ b/docs/data/index.md

@@ -0,0 +1,122 @@

+# Data Description

+

+In this section, we introduce the data format used in the benchmark and show how to visualize the data.

+The next section, [Data Creation](creation.md), shows how to convert your own data into this format.

+

+## Benchmark Unified Format

+

+We save all our data as **PCD files**. First, let me introduce the [PCD file format](https://pointclouds.org/documentation/tutorials/pcd_file_format.html).

+

+The three fields that matter most for us are `VIEWPOINT`, `POINTS`, and `DATA`:

+

+- **VIEWPOINT** - specifies an acquisition viewpoint for the points in the dataset. This could potentially be later on used for building transforms between different coordinate systems, or for aiding with features such as surface normals, that need a consistent orientation.

+

+ The viewpoint information is specified as a translation (tx ty tz) + quaternion (qw qx qy qz). The default value is:

+

+ ```

+ VIEWPOINT 0 0 0 1 0 0 0

+ ```

+

+- **POINTS** - specifies the number of points in the dataset.

+

+- **DATA** - specifies the data type that the point cloud data is stored in. As of version 0.7, three data types are supported: ascii, binary, and binary_compressed. We save our data as binary for faster reading and writing.

+

+### A Header Example

+

+Here is an example header from `004390.pcd` in KITTI sequence 00:

+

+```

+# .PCD v0.7 - Point Cloud Data file format

+VERSION 0.7

+FIELDS x y z intensity

+SIZE 4 4 4 4

+TYPE F F F F

+COUNT 1 1 1 1

+WIDTH 125883

+HEIGHT 1

+VIEWPOINT -15.6504 17.981 -0.934952 0.882959 -0.0239536 -0.0058903 -0.468802

+POINTS 125883

+DATA binary

+```

+

+This `004390.pcd` file contains 125883 points, and the pose (sensor center) of this frame is `-15.6504 17.981 -0.934952 0.882959 -0.0239536 -0.0058903 -0.468802`.

+

+Note that all points in every frame are ==already transformed to the world frame==, and `VIEWPOINT` stores the sensor pose.
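
Because the points are stored in the world frame, you sometimes need the sensor pose as a matrix. One use is bringing a frame back into the sensor frame. The NumPy sketch below shows one way to do this. It assumes the seven `VIEWPOINT` values are ordered `tx ty tz qw qx qy qz`, as described above. The function names `viewpoint_to_pose` and `world_to_sensor` are hypothetical and are not part of the benchmark code.

```python
import numpy as np


def viewpoint_to_pose(viewpoint):
    """Build a 4x4 sensor-to-world pose from 7 VIEWPOINT values (tx ty tz qw qx qy qz)."""
    tx, ty, tz, qw, qx, qy, qz = (float(v) for v in viewpoint)
    # Normalize defensively in case the stored quaternion is slightly off unit length.
    norm = np.sqrt(qw * qw + qx * qx + qy * qy + qz * qz)
    qw, qx, qy, qz = qw / norm, qx / norm, qy / norm, qz / norm
    rot = np.array([
        [1 - 2 * (qy * qy + qz * qz), 2 * (qx * qy - qw * qz), 2 * (qx * qz + qw * qy)],
        [2 * (qx * qy + qw * qz), 1 - 2 * (qx * qx + qz * qz), 2 * (qy * qz - qw * qx)],
        [2 * (qx * qz - qw * qy), 2 * (qy * qz + qw * qx), 1 - 2 * (qx * qx + qy * qy)],
    ])
    pose = np.eye(4)
    pose[:3, :3] = rot
    pose[:3, 3] = [tx, ty, tz]
    return pose


def world_to_sensor(points_world, pose):
    """Express world-frame points (N, 3) in the sensor frame: p_s = R^T (p_w - t)."""
    rot, trans = pose[:3, :3], pose[:3, 3]
    # Row-vector form: (p_w - t) @ R equals (R^T (p_w - t))^T for each point.
    return (points_world - trans) @ rot
```

For the header above, `viewpoint_to_pose("-15.6504 17.981 -0.934952 0.882959 -0.0239536 -0.0058903 -0.468802".split())` gives the pose of that frame. Its translation column `pose[:3, 3]` is the sensor center.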

+

+### How to read PCD files

+

+In C++, we usually use the PCL library to read PCD files. Here is a simple example:

+

+```cpp

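+#include <pcl/io/pcd_io.h>
+#include <pcl/point_types.h>
+
+// PointXYZI matches the header above (FIELDS x y z intensity)
+pcl::PointCloud<pcl::PointXYZI>::Ptr pcd(new pcl::PointCloud<pcl::PointXYZI>);
+pcl::io::loadPCDFile<pcl::PointXYZI>("data/00/004390.pcd", *pcd);
+// the VIEWPOINT pose is loaded into pcd->sensor_origin_ and pcd->sensor_orientation_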

+```

+

+In Python, we provide a simple script to read PCD files, available in [the benchmark code](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/scripts/py/utils/pcdpy3.py) or as [my gist](https://gist.github.com/Kin-Zhang/bd6475bdfa0ebde56ab5c060054d5185). You don't need to read the script in detail; just use it directly.

+

+```python

+import pcdpy3 # the script I provided

+pcd_data = pcdpy3.PointCloud.from_path('data/00/004390.pcd')

+pc = pcd_data.np_data[:,:3] # shape (N, 3), N: number of points, 3: x y z
+# if the header has an intensity or rgb field, you can get it by:
+# pc_intensity = pcd_data.np_data[:,3] # shape (N,)
+# pc_rgb = pcd_data.np_data[:,3:6] # shape (N, 3)

+```
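
If you prefer not to depend on a helper script, you can read a `DATA binary` file with NumPy alone when every field is a 4-byte float, as in the header example above. This is a minimal sketch under those assumptions: no `ascii` or `binary_compressed` data, `TYPE F`, `SIZE 4`, and `COUNT 1` for every field. It also assumes little-endian byte order, which is what PCL writes on x86 machines. The function name `read_binary_pcd` is hypothetical and is not part of the benchmark code.

```python
import numpy as np


def read_binary_pcd(path):
    """Read a PCD file with DATA binary whose fields are all 4-byte floats.

    Returns (points, header): points is an (N, num_fields) float32 array in the
    FIELDS order; header maps each header keyword to its list of string values.
    """
    header = {}
    with open(path, "rb") as f:
        # The header is ASCII text that ends with the DATA line.
        while "DATA" not in header:
            line = f.readline()
            if not line:
                raise ValueError("end of file reached before the DATA line")
            text = line.decode("ascii").strip()
            if text and not text.startswith("#"):
                key, *values = text.split()
                header[key] = values
        num_fields = len(header["FIELDS"])
        if header["DATA"] != ["binary"]:
            raise ValueError("this sketch only handles DATA binary")
        if header["TYPE"] != ["F"] * num_fields or header["SIZE"] != ["4"] * num_fields:
            raise ValueError("this sketch only handles 4-byte float fields")
        if header.get("COUNT", ["1"] * num_fields) != ["1"] * num_fields:
            raise ValueError("this sketch only handles COUNT 1 per field")
        num_points = int(header["POINTS"][0])
        # Points follow the header back to back, one record per point.
        raw = np.frombuffer(f.read(num_points * num_fields * 4), dtype="<f4")
    return raw.reshape(num_points, num_fields), header
```

For example, `points, header = read_binary_pcd("data/00/004390.pcd")` gives `points[:, :3]` as x y z. `[float(v) for v in header["VIEWPOINT"]]` gives the sensor pose.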

+

+## Download benchmark data

+

+We have already processed the benchmark data; you can download it from [Zenodo](https://zenodo.org/records/10886629):

+

+

+| Dataset | Description | Sensor Type | Total Frame Number | Size |

+| --- | --- | --- | --- | --- |

+| KITTI sequence 00 | In a small town with few dynamic objects (including one pedestrian). | HDL-64E | 141 | 384.8 MB |
+| KITTI sequence 05 | On a straight road in a small town, with one tall vehicle; the cover image of the benchmark paper comes from this sequence. | HDL-64E | 321 | 864.0 MB |
+| Argoverse2 | In a big, crowded city with tall buildings (including cyclists, vehicles, and people walking near the buildings). | 2 x VLP-32 | 575 | 1.3 GB |
+| KTH campus (no gt) | Collected by us (Thien-Minh) on the KTH campus, with many people moving around. | Leica RTC360 | 18 | 256.4 MB |
+| Semi-indoor | Collected by us on a small 1x2 vehicle, with two people walking around the platform. | VLP-16 | 960 | 620.8 MB |
+| Twofloor (no gt) | Collected by us (Bowen Yang) on a quadruped robot in a two-floor environment with one pedestrian around. | Livox-mid 360 | 3305 | 725.1 MB |

+

+Download command:

+```bash

+wget https://zenodo.org/api/records/10886629/files-archive.zip

+

+# or download each sequence separately

+wget https://zenodo.org/records/10886629/files/00.zip

+wget https://zenodo.org/records/10886629/files/05.zip

+wget https://zenodo.org/records/10886629/files/av2.zip

+wget https://zenodo.org/records/10886629/files/kthcampus.zip

+wget https://zenodo.org/records/10886629/files/semindoor.zip

+wget https://zenodo.org/records/10886629/files/twofloor.zip

+```

+

+## Visualize the data

+

+We provide a simple script to visualize the benchmark data: [scripts/py/data/play_data.py](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/scripts/py/data/play_data.py). Download the data and install the requirements first.

+

+```bash

+cd scripts/py

+

+# download the data

+wget https://zenodo.org/records/10886629/files/twofloor.zip
+unzip twofloor.zip # pass the extracted folder to --data_folder below

+

+# https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/scripts/py/requirements.txt

+pip install -r requirements.txt

+```

+

+Run it:

+```bash

+python data/play_data.py --data_folder /home/kin/data/twofloor --speed 1 # speed 1 for normal speed, 2 for faster with 2x speed

+```

+

+A window will pop up showing the point cloud data; you can use the mouse to rotate, zoom in/out, and move the view. The terminal prints help information on how to start/stop playback.

+

+

+

+

+

+The axes in the video mark the sensor frame, so you can see the sensor moving around during playback.

+

diff --git a/docs/evaluation.md b/docs/evaluation/index.md

similarity index 57%

rename from docs/evaluation.md

rename to docs/evaluation/index.md

index b14a75b..4839647 100644

--- a/docs/evaluation.md

+++ b/docs/evaluation/index.md

@@ -19,9 +19,9 @@ All the methods will output the **clean map**, so we need to extract the ground

### 0. Run Methods

-Check the [`methods`](../methods) folder, there is a [README](../methods/README.md) file to guide you how to run all the methods.

+Check the [`methods`](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/methods) folder; its [README](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/methods/README.md) explains how to run all the methods.

-Or check the shell script in [`0_run_methods_all.sh`](../scripts/sh/0_run_methods_all.sh), run them with one command.

+Alternatively, the shell script [`0_run_methods_all.sh`](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/scripts/sh/0_run_methods_all.sh) runs them all with one command.

```bash

./scripts/sh/0_run_methods_all.sh

@@ -36,14 +36,14 @@ Or check the shell script in [`0_run_methods_all.sh`](../scripts/sh/0_run_method

./export_eval_pcd /home/kin/bags/VLP16_cone_two_people octomapfg_output.pcd 0.05

```

-Or check the shell script in [`1_export_eval_pcd.sh`](../scripts/sh/1_export_eval_pcd.sh), run them with one command.

+Alternatively, the shell script [`1_export_eval_pcd.sh`](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/scripts/sh/1_export_eval_pcd.sh) runs the export for all methods with one command.

```bash

./scripts/sh/1_export_eval_pcd.sh

```

### 2. Print the score

-Check the script and the only thing you need do is change the folder path to *your data folder*. And Select the methods you want to compare. Please try to open and read the [script first](py/eval/evaluate_all.py)

+Please open and read the [script](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/scripts/py/eval/evaluate_all.py) first. The only things you need to change are the folder path (set it to *your data folder*) and the list of methods you want to compare.

```bash

python3 scripts/py/eval/evaluate_all.py

@@ -51,4 +51,13 @@ python3 scripts/py/eval/evaluate_all.py

Here is the demo output:

-

\ No newline at end of file

+

+

+

+### 3. Visualize the result

+

+The Jupyter notebook [scripts/py/eval/figure_plot.ipynb](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/scripts/py/eval/figure_plot.ipynb) helps you visualize the results and directly outputs the qualitative figures.

+

+

+

+

\ No newline at end of file

diff --git a/docs/index.md b/docs/index.md

index 416bdb8..c49c1e6 100644

--- a/docs/index.md

+++ b/docs/index.md

@@ -2,12 +2,15 @@

**Welcome to the Dynamic Map Benchmark Wiki Page!**

-You can always press `F` or top right search bar to search for specific topics.

+You can always press `F` or use the search bar at the top right to search for specific topics.

+

+If you find this useful, please give us [a star](https://github.com/KTH-RPL/DynamicMap_Benchmark)🌟 and [cite our work](#cite-our-papers)📖 to support us.

CHANGELOG:

-- 2024/06/25: Qingwen is starting to work on the wiki page.

+- **2024/08/24**: Reorganized the wiki page and added usage for all scripts in the benchmark code: [Data Overview](data/index.md) with visualization, [Method Overview](method/index.md) with a demo that outputs a clean map, and [Evaluation](evaluation/index.md) for evaluating the methods and automatically generating the figures in the paper.

+- 2024/06/25: [Qingwen](https://kin-zhang.github.io/) is starting to work on the wiki page.

- **2024/04/29** [BeautyMap](https://arxiv.org/abs/2405.07283) is accepted by RA-L'24. Updated benchmark: BeautyMap and DeFlow submodule instruction in the benchmark. Added the first data-driven method [DeFlow](https://github.com/KTH-RPL/DeFlow/tree/feature/dynamicmap) into our benchmark. Feel free to check.

- **2024/04/18** [DUFOMap](https://arxiv.org/abs/2403.01449) is accepted by RA-L'24. Updated benchmark: DUFOMap and dynablox submodule instruction in the benchmark. Two datasets w/o gt for demo are added in the download link. Feel free to check.

- 2024/03/08 **Fix statements** on our ITSC'23 paper: KITTI sequences pose are also from SemanticKITTI which used SuMa. In the DUFOMap paper Section V-C, Table III, we present the dynamic removal result on different pose sources. Check discussion in [DUFOMap](https://arxiv.org/abs/2403.01449) paper if you are interested.

@@ -30,15 +33,15 @@ These ghost points negatively affect downstream tasks and overall point cloud qu

* What kind of data format we use?

- PCD files (pose information saved in `VIEWPOINT` header). Read [Data Section](data.md)

+ PCD files (pose information saved in `VIEWPOINT` header). Read [Data Section](data/index.md)

* How to evaluate the performance of a method?

- Two python scripts. Read [Evaluation Section](evaluation.md)

+ Two python scripts. Read [Evaluation Section](evaluation/index.md)

* How to run a benchmark method on my data?

- Format your data and run the method. Read [Create data](data.md/) and [Run method](Method.md)

+ Format your data and run the method. Read [Create data](data/creation.md) and [Run method](method/index.md)

## 🎁 Methods we included

@@ -87,16 +90,6 @@ Please cite our works if you find these useful for your research:

pages={608-614},

doi={10.1109/ITSC57777.2023.10422094}

}

-@article{jia2024beautymap,

- author={Jia, Mingkai and Zhang, Qingwen and Yang, Bowen and Wu, Jin and Liu, Ming and Jensfelt, Patric},

- journal={IEEE Robotics and Automation Letters},

- title={BeautyMap: Binary-Encoded Adaptable Ground Matrix for Dynamic Points Removal in Global Maps},

- year={2024},

- volume={},

- number={},

- pages={1-8},

- doi={10.1109/LRA.2024.3402625}

-}

@article{daniel2024dufomap,

author={Duberg, Daniel and Zhang, Qingwen and Jia, Mingkai and Jensfelt, Patric},

journal={IEEE Robotics and Automation Letters},

@@ -107,4 +100,14 @@ Please cite our works if you find these useful for your research:

pages={5038-5045},

doi={10.1109/LRA.2024.3387658}

}

+@article{jia2024beautymap,

+ author={Jia, Mingkai and Zhang, Qingwen and Yang, Bowen and Wu, Jin and Liu, Ming and Jensfelt, Patric},

+ journal={IEEE Robotics and Automation Letters},

+ title={{BeautyMap}: Binary-Encoded Adaptable Ground Matrix for Dynamic Points Removal in Global Maps},

+ year={2024},

+ volume={9},

+ number={7},

+ pages={6256-6263},

+ doi={10.1109/LRA.2024.3402625}

+}

```

\ No newline at end of file

diff --git a/docs/method.md b/docs/method.md

deleted file mode 100644

index d816a27..0000000

--- a/docs/method.md

+++ /dev/null

@@ -1,104 +0,0 @@

-# Methods

-

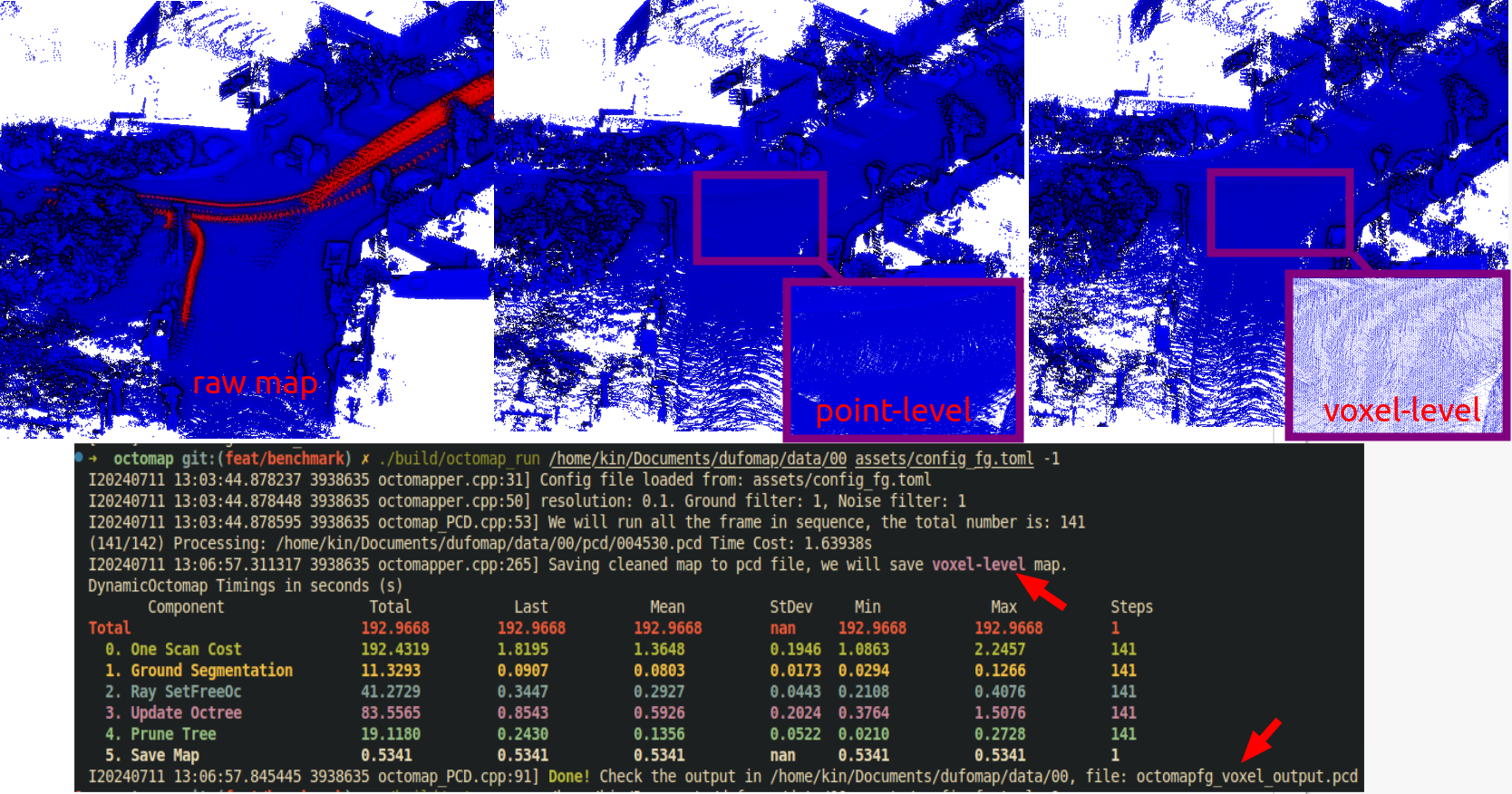

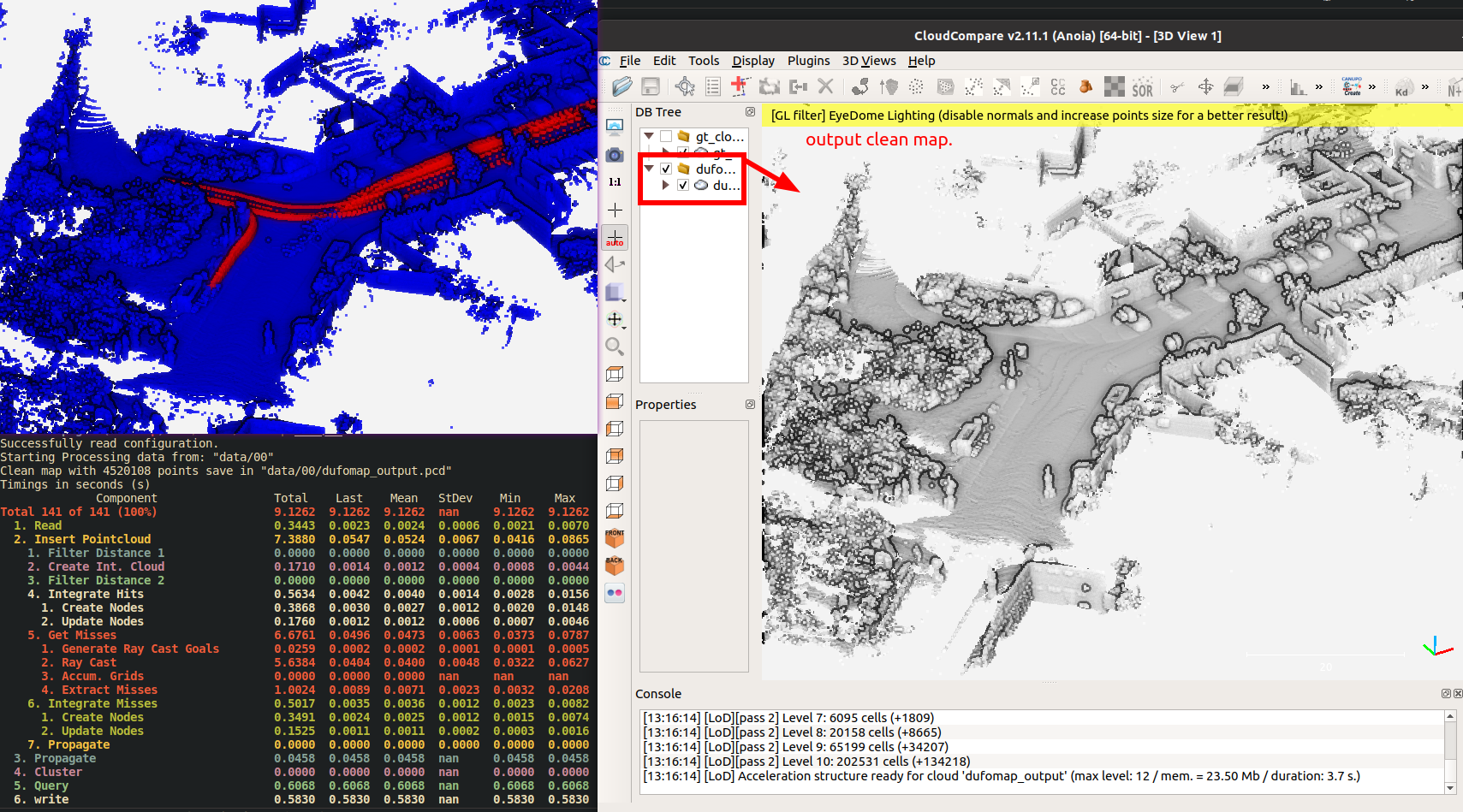

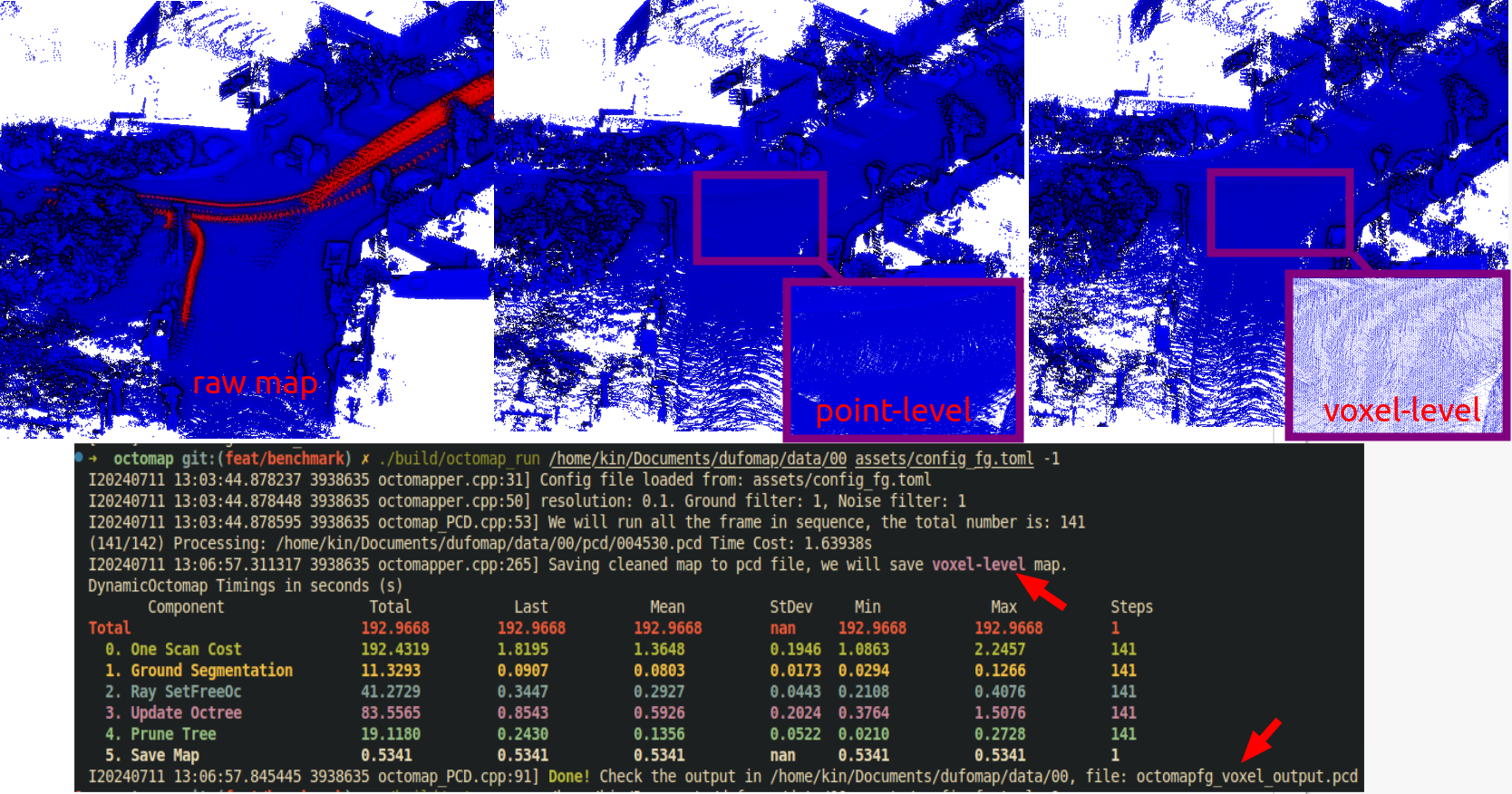

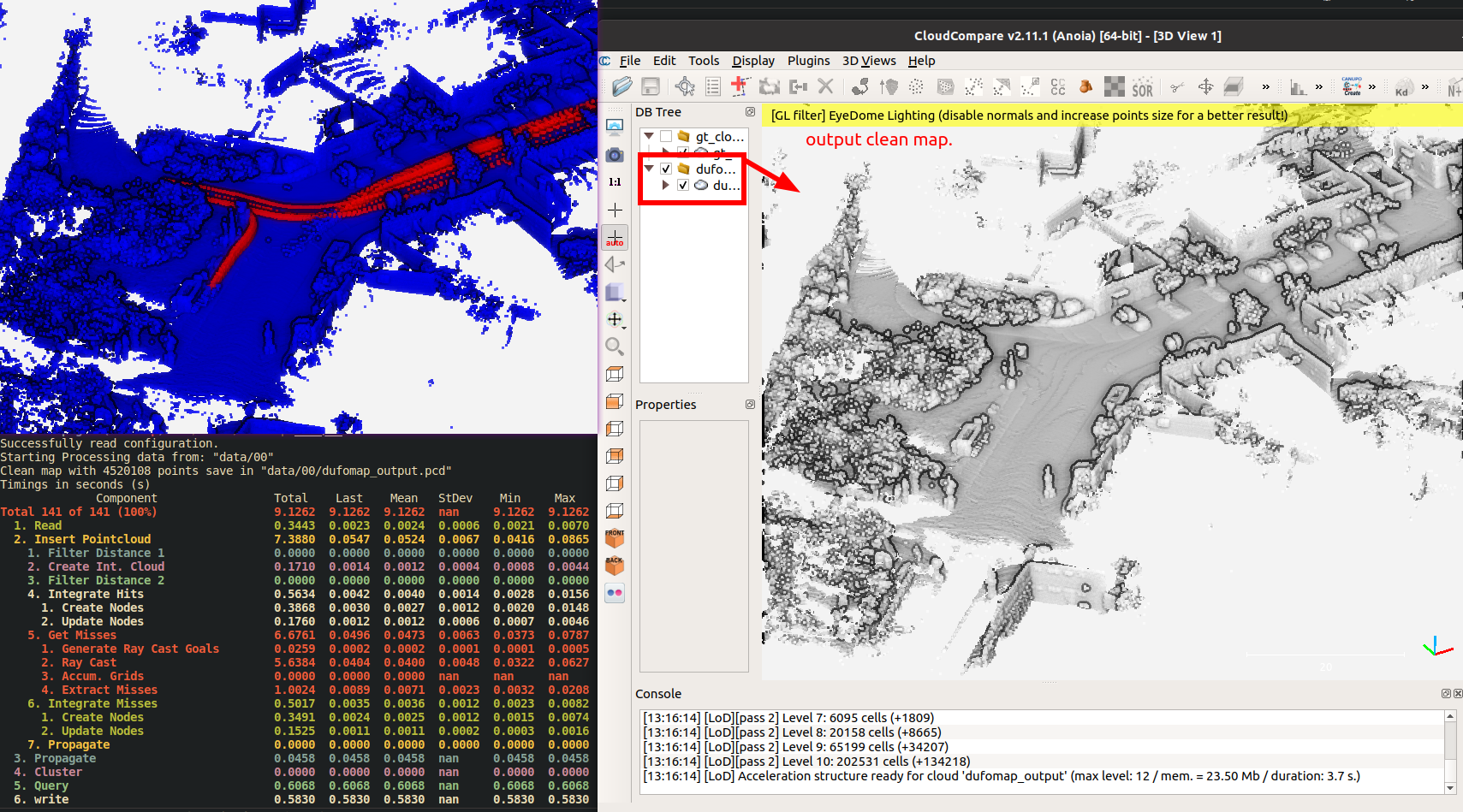

-Demo Image, results you can have after reading this README:

-

- -

-All of them have same dependencies [PCL, Glog, yaml-cpp], we will show how to install and build:

-## Install & Build

-

-Test computer and System:

-

-- Desktop setting: i9-12900KF, 64GB RAM, Swap 90GB, 1TB SSD

-- System setting: Ubuntu 20.04 [ROS noetic-full installed in system]

-

-Dependencies listed following if you want to install them manually, or you can use docker to build and run if you don't like trash your env.

-### Docker

-If you want to use docker, please check [Dockerfile](../Dockerfile) for more details. This can also be a reference for you to install the dependencies.

-```

-cd DynamicMap_Benchmark

-docker build -t zhangkin/dynamic_map .

-docker run -it --rm --name dynamicmap -v /home/kin/data:/home/kin/data zhangkin/dynamic_map /bin/zsh

-```

-- `-v` means link your data folder to docker container, so you can use your data in docker container. `-v ${your_env_path}:${container_path}`

-- If it's hard to build, you can always use `docker pull zhangkin/dynamic_map` to pull the image from docker hub.

-

-

-### PCL / OpenCV

-Normally, you will directly have PCL and OpenCV library if you installed ROS-full in your computer.

-OpenCV is required by Removert only, PCL is required by Benchmark.

-

-Reminder for ubuntu 20.04 may occur this error:

-```bash

-fatal error: opencv2/cv.h: No such file or directory

-```

-ln from opencv4 to opencv2

-```bash

-sudo ln -s /usr/include/opencv4/opencv2 /usr/include/opencv2

-```

-

-### glog gflag [for print]

-or you can install through `sudo apt install`

-```sh

-sh -c "$(wget -O- https://raw.githubusercontent.com/Kin-Zhang/Kin-Zhang/main/Dockerfiles/latest_glog_gflag.sh)"

-```

-

-### yaml-cpp [for config]

-Please set the FLAG, check this issue if you want to know more: https://github.com/jbeder/yaml-cpp/issues/682, [TOOD inside the CMakeLists.txt](https://github.com/jbeder/yaml-cpp/issues/566)

-

-If you install in Ubuntu 22.04, please check this commit: https://github.com/jbeder/yaml-cpp/commit/c86a9e424c5ee48e04e0412e9edf44f758e38fb9 which is the version could build in 22.04

-

-```sh

-cd ${Tmp_folder}

-git clone https://github.com/jbeder/yaml-cpp.git && cd yaml-cpp

-env CFLAGS='-fPIC' CXXFLAGS='-fPIC' cmake -Bbuild

-cmake --build build --config Release

-sudo cmake --build build --config Release --target install

-```

-

-### Build

-

-```bash

-cd ${methods you want}

-cmake -B build && cmake --build build

-```

-

-## RUN

-

-Check each methods config file in their own folder `config/*.yaml` or `assets/*.yaml`

-```bash

-./build/${methods_name}_run ${data_path} ${config.yaml} -1

-```

-

-For example, if you want to run octomap with GF

-

-```bash

-./build/octomap_run /home/kin/data/00 assets/config_fg.yaml -1

-```

-

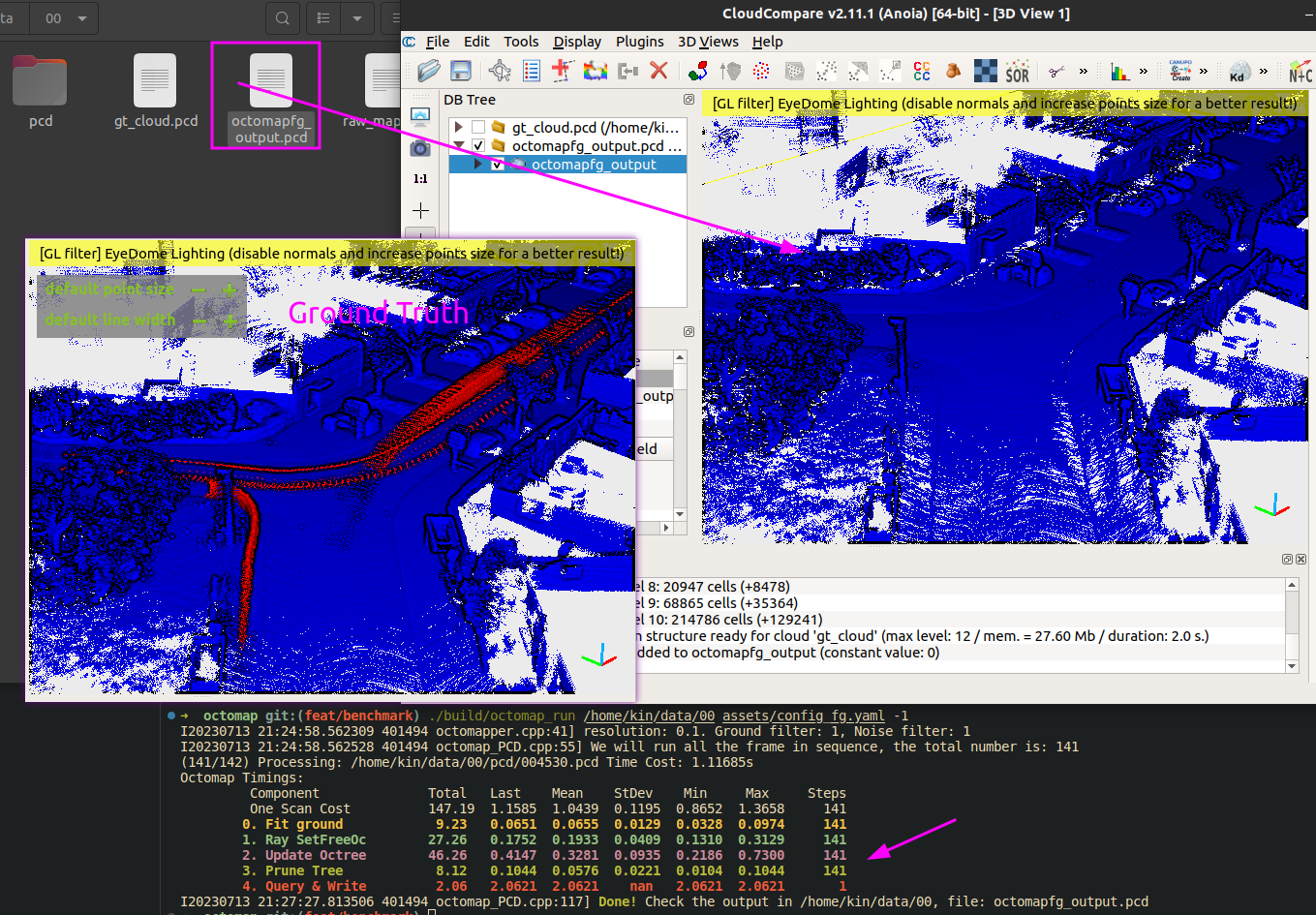

-Then you can get a time table with clean map result from Octomap w GF like top image shows. Or ERASOR on semindoor dataset:

-

-```bash

-./build/dufomap_run /home/kin/data/semindoor assets/config.toml

-```

-

-All Running commands, `-1` means all pcd files in the `pcd` folder, if you only want to run `10` frames change to `10`.

-

-```bash

-./build/octomap_run ${data_path} ${config.yaml} -1

-./build/dufomap_run ${data_path} ${config.toml}

-

-# beautymap

-python main.py --data_dir data/00 --dis_range 40 --xy_resolution 1 --h_res 0.5

-

-# deflow

-python main.py checkpoint=/home/kin/deflow_best.ckpt dataset_path=/home/kin/data/00

-```

-

-Then maybe you would like to have quantitative and qualitative result, check [scripts/py/eval](../scripts/py/eval).

-

-

\ No newline at end of file

diff --git a/docs/method/dufomap.md b/docs/method/dufomap.md

new file mode 100644

index 0000000..364d124

--- /dev/null

+++ b/docs/method/dufomap.md

@@ -0,0 +1,3 @@

+# DUFOMap

+

+Here is a quick blog post about the DUFOMap paper. I will soon update more details here.

\ No newline at end of file

diff --git a/docs/method/index.md b/docs/method/index.md

new file mode 100644

index 0000000..8459bb8

--- /dev/null

+++ b/docs/method/index.md

@@ -0,0 +1,87 @@

+# Methods

+

+In this section we will introduce how to run the methods in the benchmark.

+

+Here is a demo result you can have after reading this README:

+

+


+## Install & Build

+

+Tested computer and system:

+

+- Desktop setting: i9-12900KF, 64GB RAM, Swap 90GB, 1TB SSD

+- System setting: Ubuntu 20.04 (with ROS Noetic full installed)

+

+### Setup

+

+We show the dependencies for [our octomap](https://github.com/Kin-Zhang/octomap) as an example.

+

+```bash

+sudo apt update && sudo apt install -y libpcl-dev

+sudo apt install -y libgoogle-glog-dev libgflags-dev

+```

+

+#### Docker option

+

+If you prefer not to clutter your environment, you can use Docker to build and run everything; the image can run all methods in our benchmark.

+

+If you want to use Docker, please check the [Dockerfile](https://github.com/KTH-RPL/DynamicMap_Benchmark/blob/main/Dockerfile) for more details. It can also serve as a reference for installing the dependencies manually.

+```bash

+cd DynamicMap_Benchmark

+docker build -t zhangkin/dynamic_map .

+docker run -it --rm --name dynamicmap -v /home/kin/data:/home/kin/data zhangkin/dynamic_map /bin/zsh

+```

+- `-v` mounts your data folder into the container so you can use your data inside it: `-v ${your_env_path}:${container_path}`.

+- If building the image fails, you can pull the prebuilt image from Docker Hub with `docker pull zhangkin/dynamic_map`.

+

+### Build

+

+```bash

+git clone https://github.com/Kin-Zhang/octomap

+cd octomap

+cmake -B build && cmake --build build

+```

+

+## RUN

+

+Check each method's config file in its own folder (`config/*.yaml` or `assets/*.yaml`):

+```bash

+./build/${methods_name}_run ${data_path} ${config.yaml} -1

+```

+

+For example, to run OctoMap with GF:

+

+```bash

+./build/octomap_run /home/kin/data/00 assets/config_fg.yaml -1

+```

+

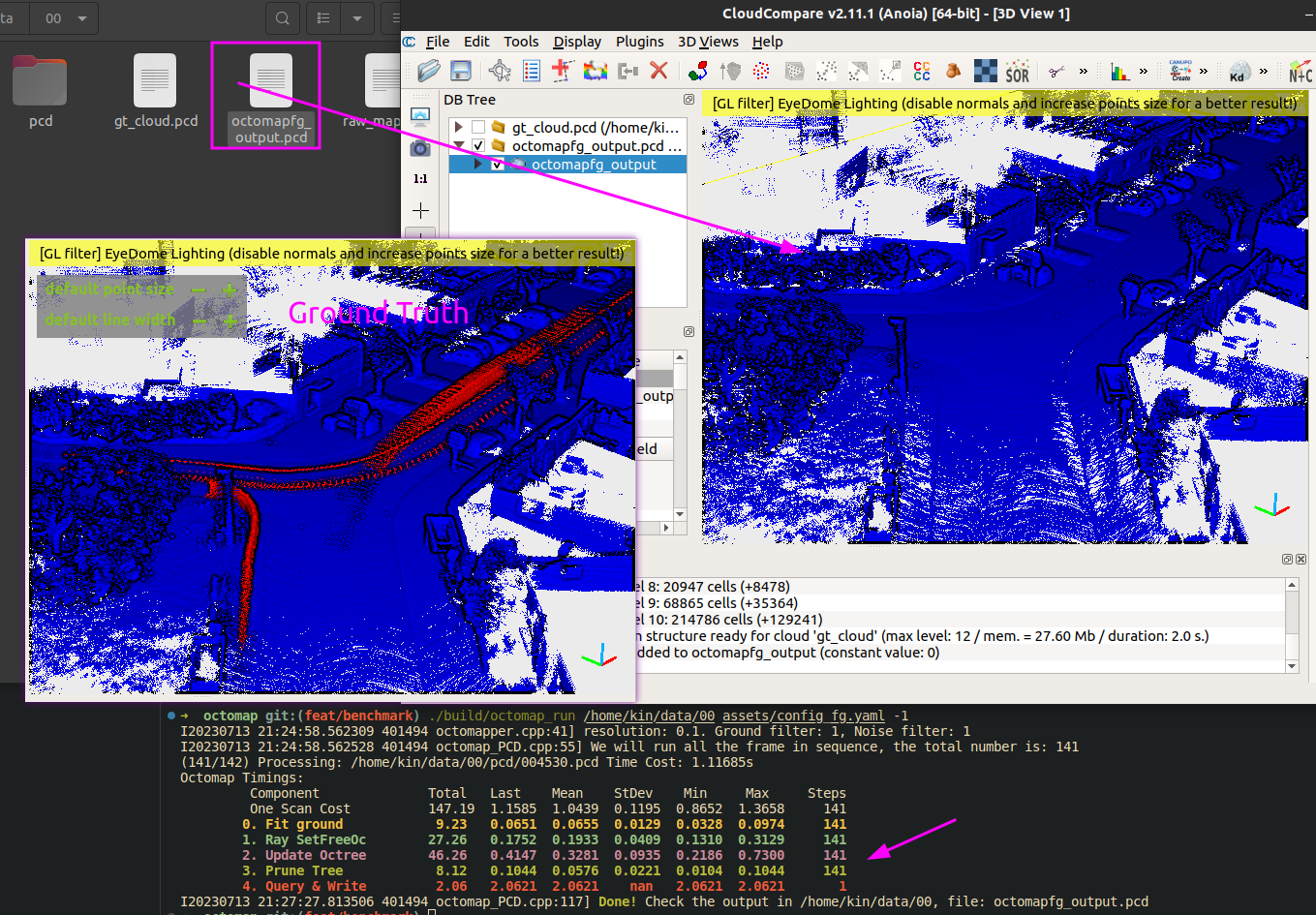

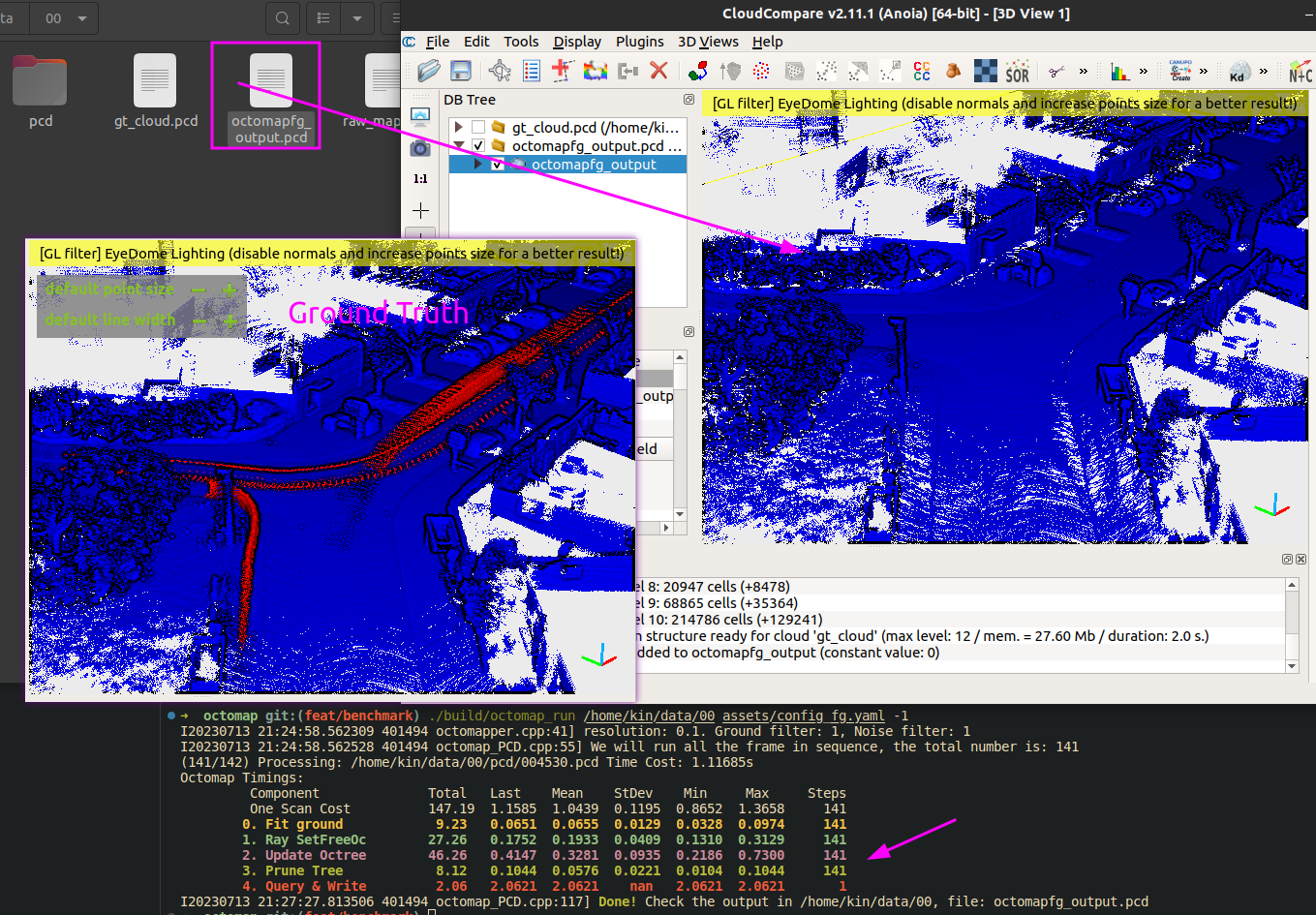

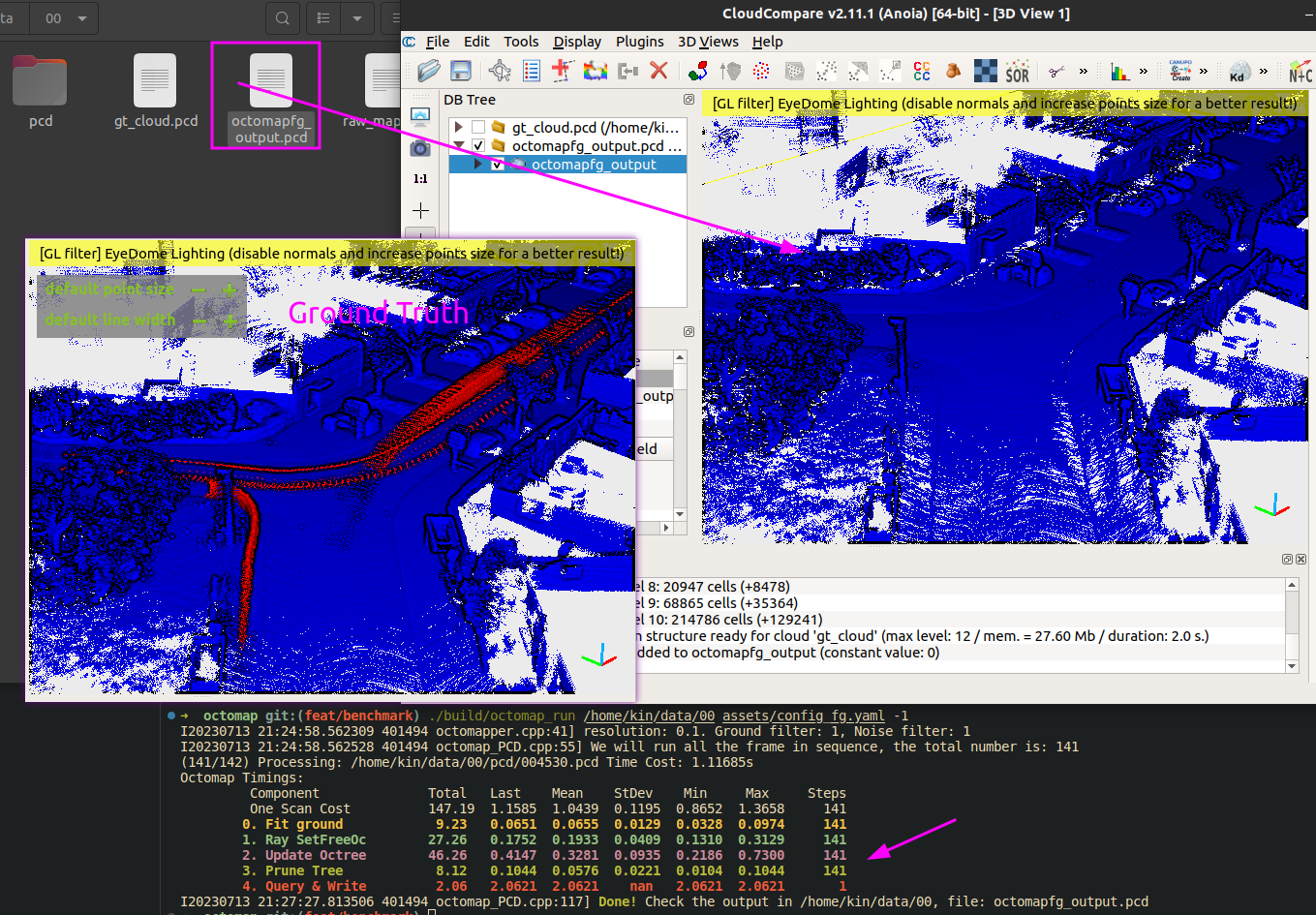

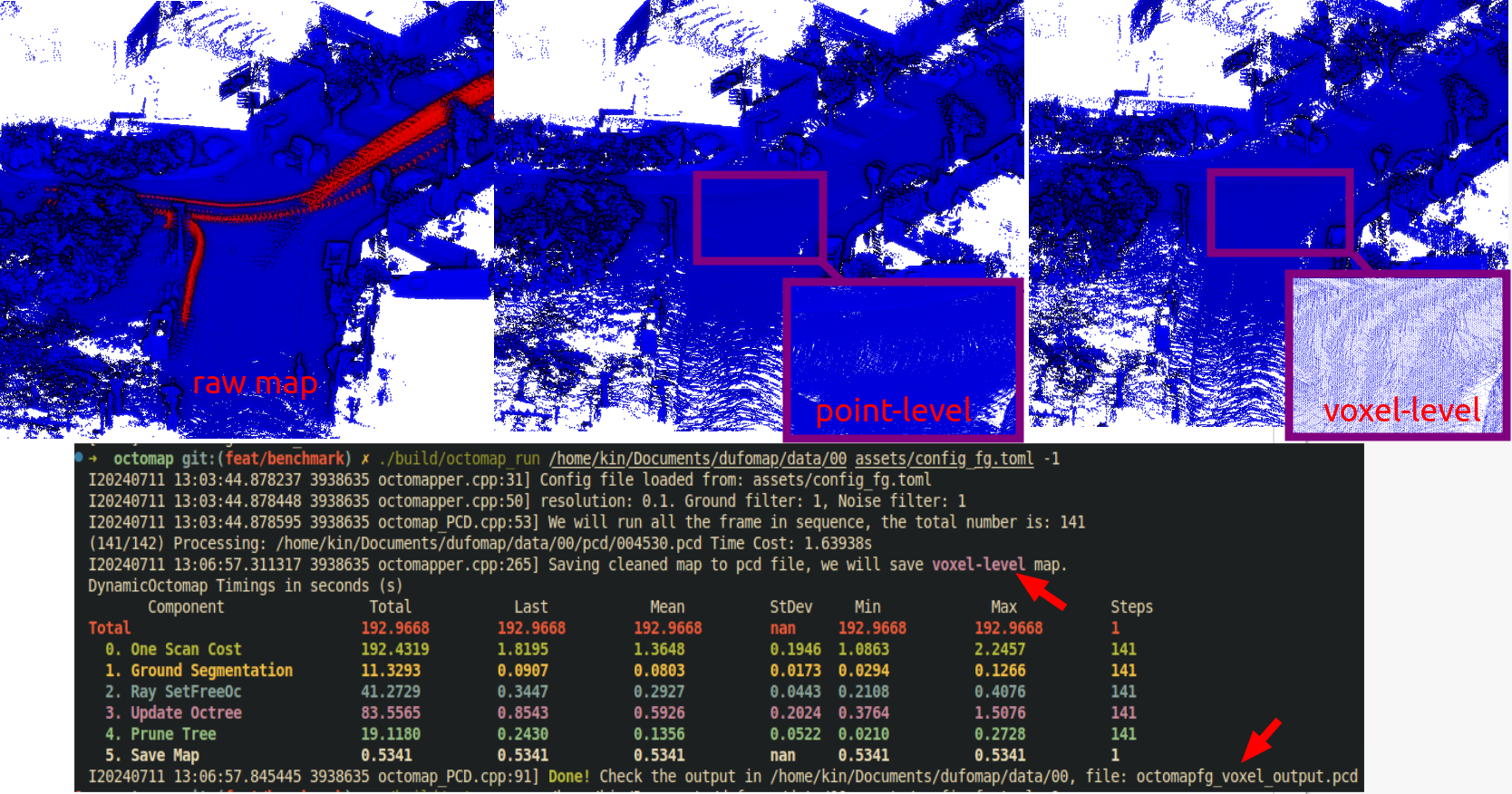

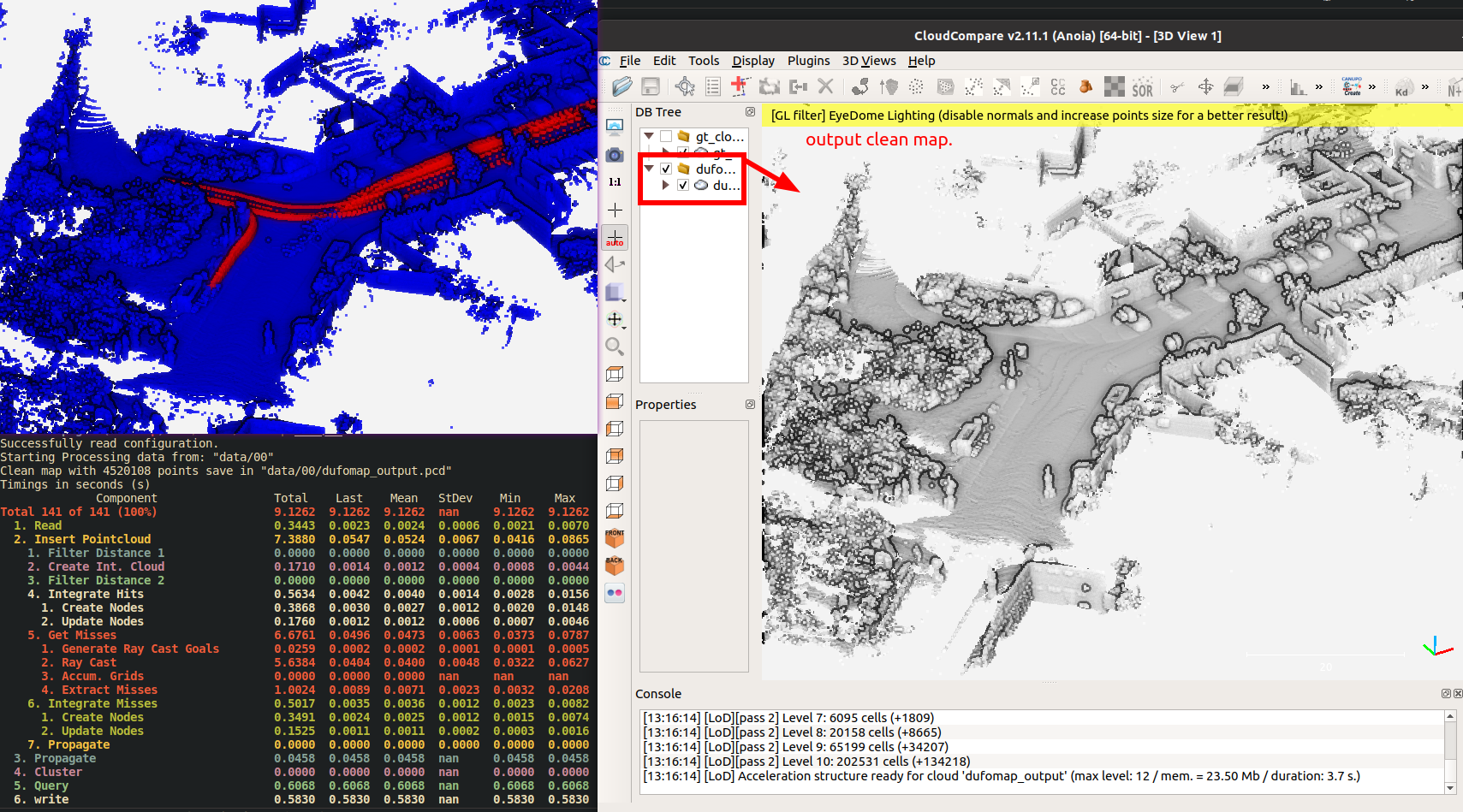

+You will then get a timing table and the clean map from OctoMap w/ GF, as shown in the top image. You can also output the voxel map by changing the config file.

+

+

+

+

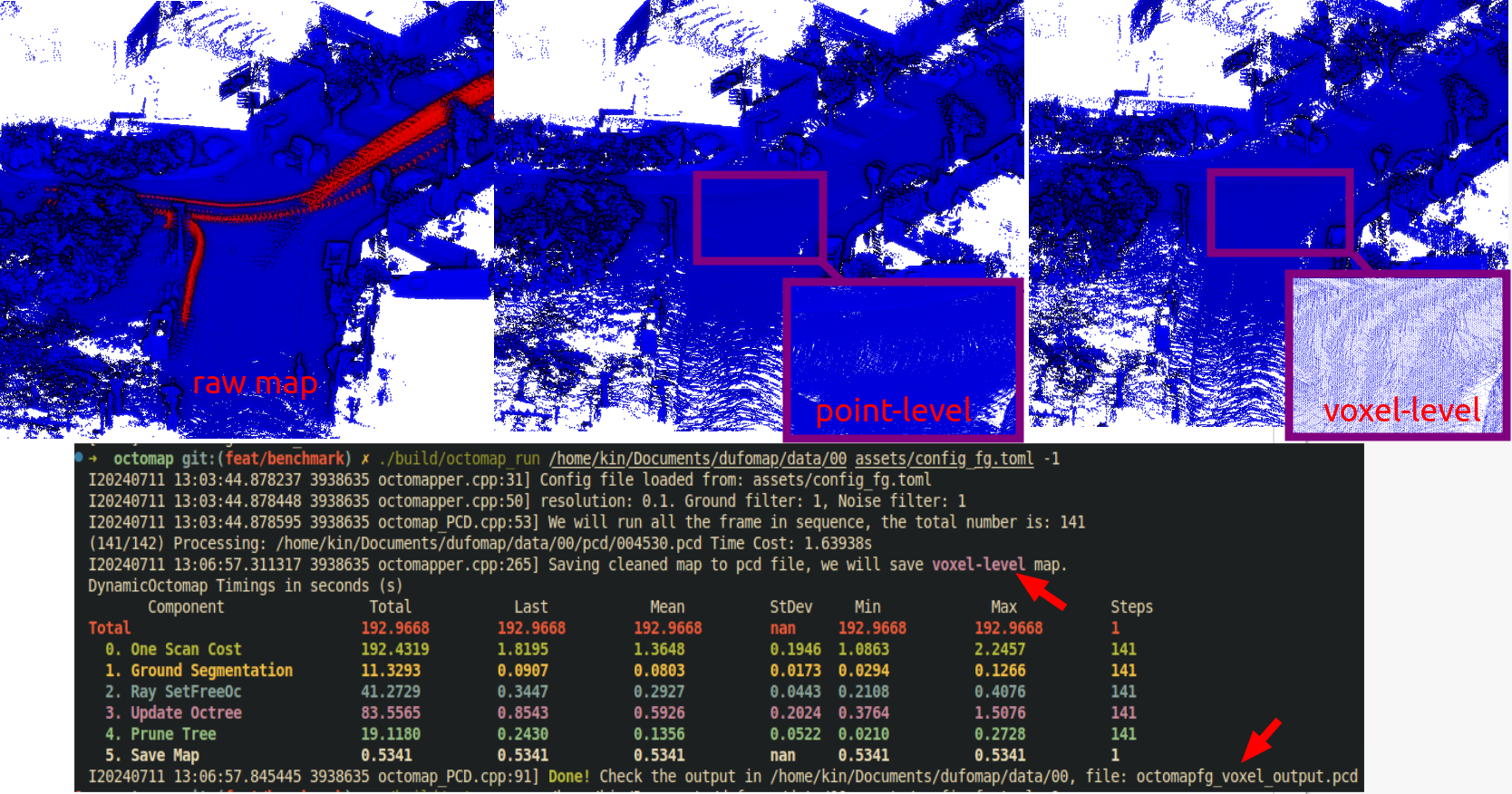

+Other methods, such as DUFOMap, can be run the same way, either from the benchmark submodule or from a standalone clone:

+

+```bash

+git clone https://github.com/Kin-Zhang/dufomap.git && cd dufomap
+cmake -B build -D CMAKE_CXX_COMPILER=g++-10 && cmake --build build
+./build/dufomap_run /home/kin/data/semindoor assets/config.toml

+```

+

+

+

+All running commands are listed below. For `octomap_run`, the last argument `-1` means all PCD files in the `pcd` folder; to run only `10` frames, change it to `10`.

+

+```bash

+./build/octomap_run ${data_path} ${config.yaml} -1

+./build/dufomap_run ${data_path} ${config.toml}

+

+# beautymap

+python main.py --data_dir data/00 --dis_range 40 --xy_resolution 1 --h_res 0.5

+

+# deflow

+python main.py checkpoint=/home/kin/deflow_best.ckpt dataset_path=/home/kin/data/00

+```
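
If you want to run the same method on several sequences, a small Python wrapper around the command pattern above can save some typing. This is a hypothetical helper and is not part of the benchmark; the benchmark's own batch script is `0_run_methods_all.sh`. It only assumes the `<binary> <data_path> <config> <num_frames>` argument order used by `octomap_run` above. `dufomap_run` takes no frame-count argument, so it would need a slightly different command.

```python
import shlex
import subprocess
from pathlib import Path


def run_on_sequences(binary, config, data_root, sequences, num_frames=-1, dry_run=False):
    """Run `<binary> <data_path> <config> <num_frames>` once per sequence folder.

    Returns the list of commands; with dry_run=True they are only printed.
    """
    commands = []
    for seq in sequences:
        cmd = [str(binary), str(Path(data_root) / seq), str(config), str(num_frames)]
        commands.append(cmd)
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)  # stop at the first failing run
    return commands
```

For example, `run_on_sequences("./build/octomap_run", "assets/config_fg.yaml", "/home/kin/data", ["00", "05"], dry_run=True)` prints the two commands without running them. This is useful to check the paths first.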

+

+For quantitative and qualitative results, check the [evaluation](../evaluation/index.md) section.

\ No newline at end of file

diff --git a/mkdocs.yml b/mkdocs.yml

index a649b7a..a289d3c 100644

--- a/mkdocs.yml

+++ b/mkdocs.yml

@@ -7,7 +7,7 @@ repo_url: https://github.com/KTH-RPL/DynamicMap_Benchmark

edit_uri: ./edit/mkdocs/docs

# Copyright

-copyright: Copyright © 2023 KTH RPL Qingwen Zhang

+copyright: Copyright © 2023 - 2024 KTH RPL Qingwen Zhang

# Configuration

theme:

@@ -67,7 +67,7 @@ theme:

plugins:

- git-committers:

repository: KTH-RPL/DynamicMap_Benchmark

- branch: main

+ branch: mkdocs

- search:

separator: '[\s\-,:!=\[\]()"`/]+|\.(?!\d)|&[lg]t;|(?!\b)(?=[A-Z][a-z])'

- minify:

@@ -145,8 +145,12 @@ markdown_extensions:

nav:

- Getting started:

- Home: index.md

- - Data: data.md

- - Method: method.md

- - Evaluation: evaluation.md

- # - A Demo: demo.md

+ - Data:

+ - Data Overview: data/index.md

+ - Data Creation: data/creation.md

+ - Method:

+ - Overview: method/index.md

+ # - DUFOMap: method/dufomap.md

+ - Evaluation:

+ - Overview: evaluation/index.md

- Project Lists: papers.md

\ No newline at end of file


-

-```bash

-./build/octomap_run ${data_path} ${config.yaml} -1

-./build/dufomap_run ${data_path} ${config.toml}

-

-# beautymap

-python main.py --data_dir data/00 --dis_range 40 --xy_resolution 1 --h_res 0.5

-

-# deflow

-python main.py checkpoint=/home/kin/deflow_best.ckpt dataset_path=/home/kin/data/00

-```

-

-Then maybe you would like to have quantitative and qualitative result, check [scripts/py/eval](../scripts/py/eval).

-

-

\ No newline at end of file
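The removed README above describes a frame-count argument for the runners. A value of `-1` runs every pcd file in the `pcd` folder, and `10` runs only ten frames. The Python sketch below is a minimal illustration of that rule. The helper names `list_pcd_frames` and `select_frames` are hypothetical and do not appear in the benchmark code. The sketch assumes that frames are stored as `<data_path>/pcd/*.pcd` and that processing follows sorted filename order, for example zero-padded indices. The actual C++ runners may order or count frames differently.

```python
from pathlib import Path


def list_pcd_frames(data_path):
    """Return the .pcd files under <data_path>/pcd, sorted by filename.

    Assumption: filenames sort in time order (e.g. zero-padded indices).
    """
    return sorted(str(p) for p in Path(data_path, "pcd").glob("*.pcd"))


def select_frames(frames, num_frames):
    """Apply the documented frame-count rule to a list of frame paths.

    -1 keeps every frame; a non-negative N keeps the first N frames after
    sorting. Any other negative value is rejected.
    """
    ordered = sorted(frames)
    if num_frames == -1:
        return ordered
    if num_frames < 0:
        raise ValueError("num_frames must be -1 or a non-negative integer")
    return ordered[:num_frames]
```

For example, `select_frames(list_pcd_frames("/home/kin/data/00"), 10)` would return the first ten sorted `.pcd` paths, which mirrors the `10` case described above.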

diff --git a/docs/method/dufomap.md b/docs/method/dufomap.md

new file mode 100644

index 0000000..364d124

--- /dev/null

+++ b/docs/method/dufomap.md

@@ -0,0 +1,3 @@

+# DUFOMap

+

+Here is a quick blog post about the DUFOMap paper. I will add more details here soon.

\ No newline at end of file

diff --git a/docs/method/index.md b/docs/method/index.md

new file mode 100644

index 0000000..8459bb8

--- /dev/null

+++ b/docs/method/index.md

@@ -0,0 +1,87 @@

+# Methods

+

+In this section, we introduce how to run the methods in the benchmark.

+

+Here is a demo result you can get after following this page:

+

+


+ +

+## Install & Build

+

+Test computer and system:

+

+- Desktop setting: i9-12900KF, 64GB RAM, Swap 90GB, 1TB SSD

+- System setting: Ubuntu 20.04 [ROS noetic-full installed in system]

+

+### Setup

+

+We show the dependencies for [our octomap](https://github.com/Kin-Zhang/octomap) as an example.

+

+```bash

+sudo apt update && sudo apt install -y libpcl-dev

+sudo apt install -y libgoogle-glog-dev libgflags-dev

+```

+

+#### Docker option

+

+You can use Docker to build and run the methods if you prefer not to clutter your environment; the Docker image is able to run all methods in our benchmark.

+