diff --git a/.github/ISSUE_TEMPLATE/custom.md b/.github/ISSUE_TEMPLATE/custom.md

new file mode 100644

index 0000000..a6d4478

--- /dev/null

+++ b/.github/ISSUE_TEMPLATE/custom.md

@@ -0,0 +1,19 @@

+---

+name: Issue template

+about: Issue template for code errors.

+title: ''

+labels: ''

+assignees: ''

+

+---

+

+请提供下述完整信息以便快速定位问题/Please provide the following information to quickly locate the problem

+

+- 系统环境/System Environment:

+- 版本号/Version:Paddle: PaddleOCR: 问题相关组件/Related components:

+- 运行指令/Command Code:

+- 完整报错/Complete Error Message:

+

+我们提供了AceIssueSolver来帮助你解答问题,你是否想要它来解答(请填写yes/no)?/We provide AceIssueSolver to help resolve issues. Do you want it to answer yours? (Please write yes/no):

+

+请尽量不要包含图片在问题中/Please try not to include images in the issue.

diff --git a/.github/pull_request_template.md b/.github/pull_request_template.md

new file mode 100644

index 0000000..ca62dac

--- /dev/null

+++ b/.github/pull_request_template.md

@@ -0,0 +1,15 @@

+### PR 类型 PR types

+

+

+### PR 变化内容类型 PR changes

+

+

+### 描述 Description

+

+

+### 提PR之前的检查 Check-list

+

+- [ ] 这个 PR 是提交到dygraph分支或者是一个cherry-pick,否则请先提交到dygraph分支。

+ This PR is pushed to the dygraph branch or cherry-picked from the dygraph branch. Otherwise, please push your changes to the dygraph branch.

+- [ ] 这个PR清楚描述了功能,帮助评审能提升效率。This PR fully describes what it does, so that reviewers can review it efficiently.

+- [ ] 这个PR已经经过本地测试。This PR is covered by existing tests or has been tested locally.

diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000..3a05fb7

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,34 @@

+# Byte-compiled / optimized / DLL files

+__pycache__/

+.ipynb_checkpoints/

+*.py[cod]

+*$py.class

+

+# C extensions

+*.so

+

+inference/

+inference_results/

+output/

+train_data/

+log/

+*.DS_Store

+*.vs

+*.user

+*~

+*.vscode

+*.idea

+

+*.log

+.clang-format

+.clang_format.hook

+

+build/

+dist/

+paddleocr.egg-info/

+/deploy/android_demo/app/OpenCV/

+/deploy/android_demo/app/PaddleLite/

+/deploy/android_demo/app/.cxx/

+/deploy/android_demo/app/cache/

+test_tipc/web/models/

+test_tipc/web/node_modules/

\ No newline at end of file

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

new file mode 100644

index 0000000..5f7fec8

--- /dev/null

+++ b/.pre-commit-config.yaml

@@ -0,0 +1,35 @@

+-   repo: https://github.com/PaddlePaddle/mirrors-yapf.git

+    sha: 0d79c0c469bab64f7229c9aca2b1186ef47f0e37

+    hooks:

+    -   id: yapf

+        files: \.py$

+-   repo: https://github.com/pre-commit/pre-commit-hooks

+    sha: a11d9314b22d8f8c7556443875b731ef05965464

+    hooks:

+    -   id: check-merge-conflict

+    -   id: check-symlinks

+    -   id: detect-private-key

+        files: (?!.*paddle)^.*$

+    -   id: end-of-file-fixer

+        files: \.md$

+    -   id: trailing-whitespace

+        files: \.md$

+-   repo: https://github.com/Lucas-C/pre-commit-hooks

+    sha: v1.0.1

+    hooks:

+    -   id: forbid-crlf

+        files: \.md$

+    -   id: remove-crlf

+        files: \.md$

+    -   id: forbid-tabs

+        files: \.md$

+    -   id: remove-tabs

+        files: \.md$

+-   repo: local

+    hooks:

+    -   id: clang-format

+        name: clang-format

+        description: Format files with ClangFormat

+        entry: bash .clang_format.hook -i

+        language: system

+        files: \.(c|cc|cxx|cpp|cu|h|hpp|hxx|cuh|proto)$

\ No newline at end of file

diff --git a/.style.yapf b/.style.yapf

new file mode 100644

index 0000000..4741fb4

--- /dev/null

+++ b/.style.yapf

@@ -0,0 +1,3 @@

+[style]

+based_on_style = pep8

+column_limit = 80

diff --git a/LICENSE b/LICENSE

new file mode 100644

index 0000000..5fe8694

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,203 @@

+Copyright (c) 2016 PaddlePaddle Authors. All Rights Reserved

+

+ Apache License

+ Version 2.0, January 2004

+ http://www.apache.org/licenses/

+

+ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+ 1. Definitions.

+

+ "License" shall mean the terms and conditions for use, reproduction,

+ and distribution as defined by Sections 1 through 9 of this document.

+

+ "Licensor" shall mean the copyright owner or entity authorized by

+ the copyright owner that is granting the License.

+

+ "Legal Entity" shall mean the union of the acting entity and all

+ other entities that control, are controlled by, or are under common

+ control with that entity. For the purposes of this definition,

+ "control" means (i) the power, direct or indirect, to cause the

+ direction or management of such entity, whether by contract or

+ otherwise, or (ii) ownership of fifty percent (50%) or more of the

+ outstanding shares, or (iii) beneficial ownership of such entity.

+

+ "You" (or "Your") shall mean an individual or Legal Entity

+ exercising permissions granted by this License.

+

+ "Source" form shall mean the preferred form for making modifications,

+ including but not limited to software source code, documentation

+ source, and configuration files.

+

+ "Object" form shall mean any form resulting from mechanical

+ transformation or translation of a Source form, including but

+ not limited to compiled object code, generated documentation,

+ and conversions to other media types.

+

+ "Work" shall mean the work of authorship, whether in Source or

+ Object form, made available under the License, as indicated by a

+ copyright notice that is included in or attached to the work

+ (an example is provided in the Appendix below).

+

+ "Derivative Works" shall mean any work, whether in Source or Object

+ form, that is based on (or derived from) the Work and for which the

+ editorial revisions, annotations, elaborations, or other modifications

+ represent, as a whole, an original work of authorship. For the purposes

+ of this License, Derivative Works shall not include works that remain

+ separable from, or merely link (or bind by name) to the interfaces of,

+ the Work and Derivative Works thereof.

+

+ "Contribution" shall mean any work of authorship, including

+ the original version of the Work and any modifications or additions

+ to that Work or Derivative Works thereof, that is intentionally

+ submitted to Licensor for inclusion in the Work by the copyright owner

+ or by an individual or Legal Entity authorized to submit on behalf of

+ the copyright owner. For the purposes of this definition, "submitted"

+ means any form of electronic, verbal, or written communication sent

+ to the Licensor or its representatives, including but not limited to

+ communication on electronic mailing lists, source code control systems,

+ and issue tracking systems that are managed by, or on behalf of, the

+ Licensor for the purpose of discussing and improving the Work, but

+ excluding communication that is conspicuously marked or otherwise

+ designated in writing by the copyright owner as "Not a Contribution."

+

+ "Contributor" shall mean Licensor and any individual or Legal Entity

+ on behalf of whom a Contribution has been received by Licensor and

+ subsequently incorporated within the Work.

+

+ 2. Grant of Copyright License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ copyright license to reproduce, prepare Derivative Works of,

+ publicly display, publicly perform, sublicense, and distribute the

+ Work and such Derivative Works in Source or Object form.

+

+ 3. Grant of Patent License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ (except as stated in this section) patent license to make, have made,

+ use, offer to sell, sell, import, and otherwise transfer the Work,

+ where such license applies only to those patent claims licensable

+ by such Contributor that are necessarily infringed by their

+ Contribution(s) alone or by combination of their Contribution(s)

+ with the Work to which such Contribution(s) was submitted. If You

+ institute patent litigation against any entity (including a

+ cross-claim or counterclaim in a lawsuit) alleging that the Work

+ or a Contribution incorporated within the Work constitutes direct

+ or contributory patent infringement, then any patent licenses

+ granted to You under this License for that Work shall terminate

+ as of the date such litigation is filed.

+

+ 4. Redistribution. You may reproduce and distribute copies of the

+ Work or Derivative Works thereof in any medium, with or without

+ modifications, and in Source or Object form, provided that You

+ meet the following conditions:

+

+ (a) You must give any other recipients of the Work or

+ Derivative Works a copy of this License; and

+

+ (b) You must cause any modified files to carry prominent notices

+ stating that You changed the files; and

+

+ (c) You must retain, in the Source form of any Derivative Works

+ that You distribute, all copyright, patent, trademark, and

+ attribution notices from the Source form of the Work,

+ excluding those notices that do not pertain to any part of

+ the Derivative Works; and

+

+ (d) If the Work includes a "NOTICE" text file as part of its

+ distribution, then any Derivative Works that You distribute must

+ include a readable copy of the attribution notices contained

+ within such NOTICE file, excluding those notices that do not

+ pertain to any part of the Derivative Works, in at least one

+ of the following places: within a NOTICE text file distributed

+ as part of the Derivative Works; within the Source form or

+ documentation, if provided along with the Derivative Works; or,

+ within a display generated by the Derivative Works, if and

+ wherever such third-party notices normally appear. The contents

+ of the NOTICE file are for informational purposes only and

+ do not modify the License. You may add Your own attribution

+ notices within Derivative Works that You distribute, alongside

+ or as an addendum to the NOTICE text from the Work, provided

+ that such additional attribution notices cannot be construed

+ as modifying the License.

+

+ You may add Your own copyright statement to Your modifications and

+ may provide additional or different license terms and conditions

+ for use, reproduction, or distribution of Your modifications, or

+ for any such Derivative Works as a whole, provided Your use,

+ reproduction, and distribution of the Work otherwise complies with

+ the conditions stated in this License.

+

+ 5. Submission of Contributions. Unless You explicitly state otherwise,

+ any Contribution intentionally submitted for inclusion in the Work

+ by You to the Licensor shall be under the terms and conditions of

+ this License, without any additional terms or conditions.

+ Notwithstanding the above, nothing herein shall supersede or modify

+ the terms of any separate license agreement you may have executed

+ with Licensor regarding such Contributions.

+

+ 6. Trademarks. This License does not grant permission to use the trade

+ names, trademarks, service marks, or product names of the Licensor,

+ except as required for reasonable and customary use in describing the

+ origin of the Work and reproducing the content of the NOTICE file.

+

+ 7. Disclaimer of Warranty. Unless required by applicable law or

+ agreed to in writing, Licensor provides the Work (and each

+ Contributor provides its Contributions) on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

+ implied, including, without limitation, any warranties or conditions

+ of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

+ PARTICULAR PURPOSE. You are solely responsible for determining the

+ appropriateness of using or redistributing the Work and assume any

+ risks associated with Your exercise of permissions under this License.

+

+ 8. Limitation of Liability. In no event and under no legal theory,

+ whether in tort (including negligence), contract, or otherwise,

+ unless required by applicable law (such as deliberate and grossly

+ negligent acts) or agreed to in writing, shall any Contributor be

+ liable to You for damages, including any direct, indirect, special,

+ incidental, or consequential damages of any character arising as a

+ result of this License or out of the use or inability to use the

+ Work (including but not limited to damages for loss of goodwill,

+ work stoppage, computer failure or malfunction, or any and all

+ other commercial damages or losses), even if such Contributor

+ has been advised of the possibility of such damages.

+

+ 9. Accepting Warranty or Additional Liability. While redistributing

+ the Work or Derivative Works thereof, You may choose to offer,

+ and charge a fee for, acceptance of support, warranty, indemnity,

+ or other liability obligations and/or rights consistent with this

+ License. However, in accepting such obligations, You may act only

+ on Your own behalf and on Your sole responsibility, not on behalf

+ of any other Contributor, and only if You agree to indemnify,

+ defend, and hold each Contributor harmless for any liability

+ incurred by, or claims asserted against, such Contributor by reason

+ of your accepting any such warranty or additional liability.

+

+ END OF TERMS AND CONDITIONS

+

+ APPENDIX: How to apply the Apache License to your work.

+

+ To apply the Apache License to your work, attach the following

+ boilerplate notice, with the fields enclosed by brackets "[]"

+ replaced with your own identifying information. (Don't include

+ the brackets!) The text should be enclosed in the appropriate

+ comment syntax for the file format. We also recommend that a

+ file or class name and description of purpose be included on the

+ same "printed page" as the copyright notice for easier

+ identification within third-party archives.

+

+ Copyright (c) 2016 PaddlePaddle Authors. All Rights Reserved.

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

diff --git a/MANIFEST.in b/MANIFEST.in

new file mode 100644

index 0000000..f821618

--- /dev/null

+++ b/MANIFEST.in

@@ -0,0 +1,10 @@

+include LICENSE

+include README.md

+

+recursive-include ppocr/utils *.*

+recursive-include ppocr/data *.py

+recursive-include ppocr/postprocess *.py

+recursive-include tools/infer *.py

+recursive-include tools __init__.py

+recursive-include ppocr/utils/e2e_utils *.py

+recursive-include ppstructure *.py

\ No newline at end of file

diff --git a/StyleText/README.md b/StyleText/README.md

new file mode 100644

index 0000000..eddedbd

--- /dev/null

+++ b/StyleText/README.md

@@ -0,0 +1,219 @@

+English | [简体中文](README_ch.md)

+

+## Style Text

+

+### Contents

+- [1. Introduction](#Introduction)

+- [2. Preparation](#Preparation)

+- [3. Quick Start](#Quick_Start)

+- [4. Applications](#Applications)

+- [5. Code Structure](#Code_structure)

+

+

+

+### Introduction

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

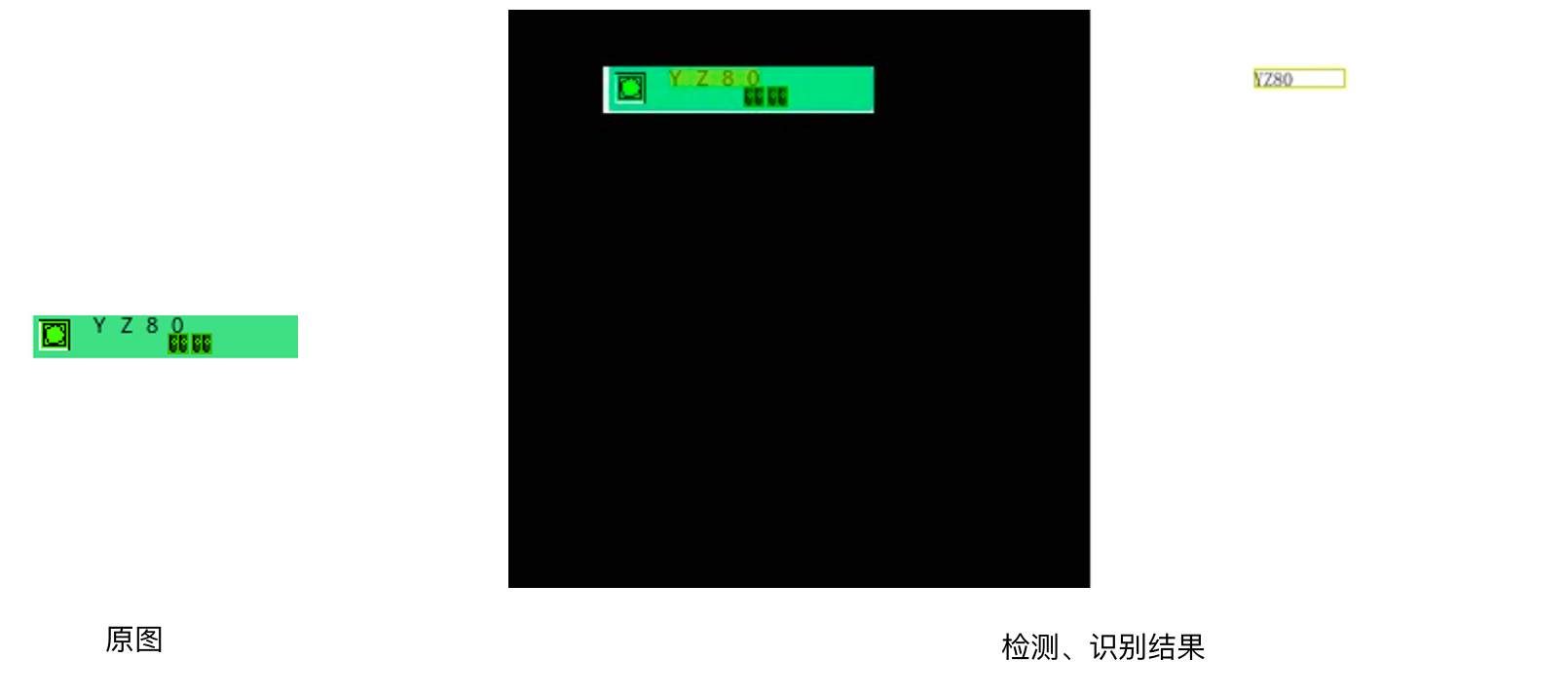

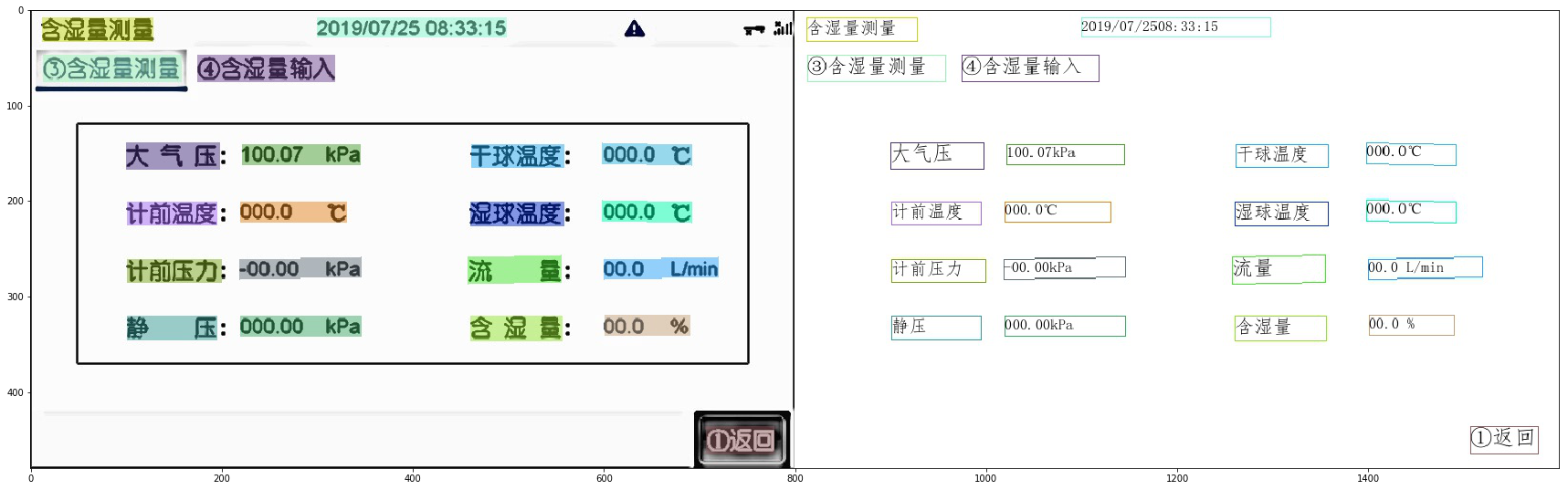

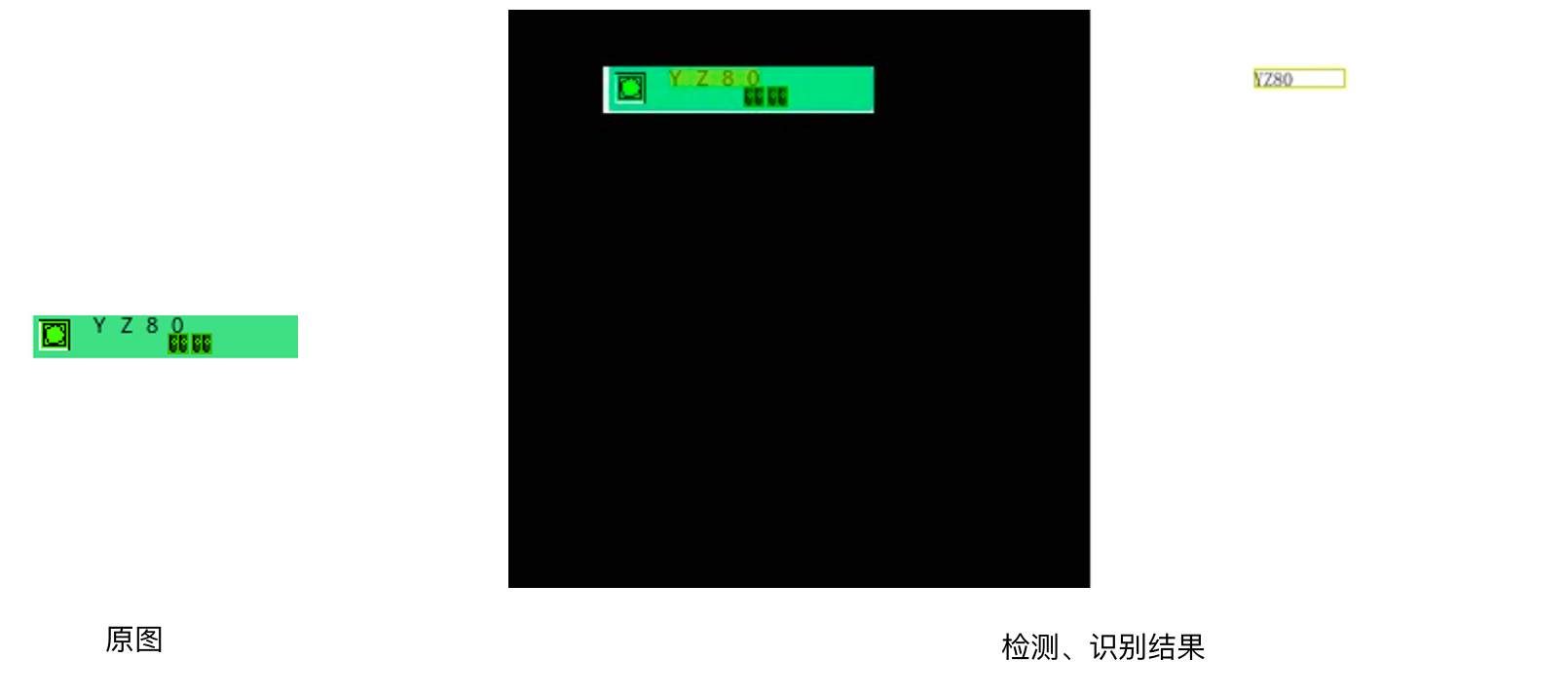

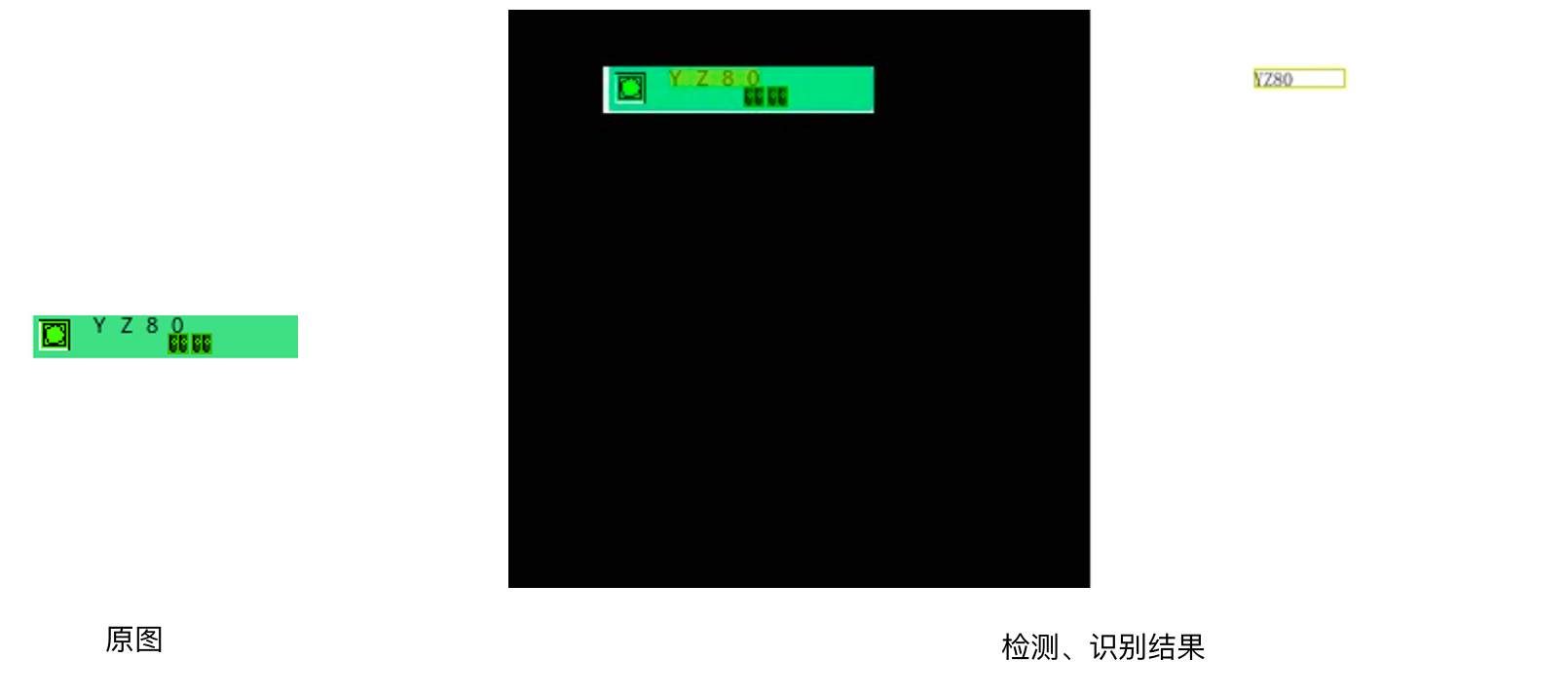

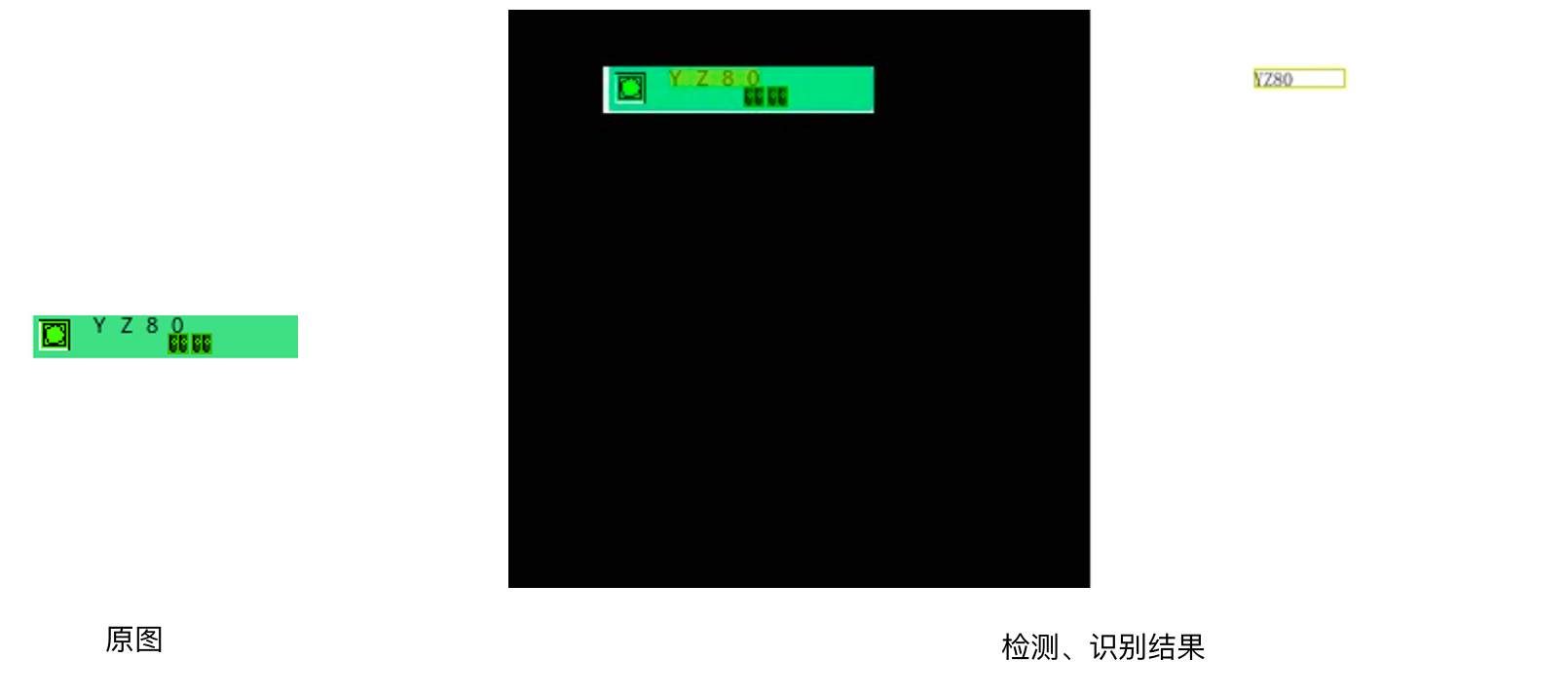

+Figure 1: PCB text detection and recognition results

+

+Note: You can claim free computing power on AI Studio to try this hands-on project online. Project link: [PCB Character Recognition Based on PP-OCRv3](https://aistudio.baidu.com/aistudio/projectdetail/4008973)

+

+# 2. Installation

+

+

+Download the PaddleOCR source code and install the dependencies.

+

+

+```bash

+# To install or update PaddleOCR, run the following steps

+git clone https://github.com/PaddlePaddle/PaddleOCR.git

+# git clone https://gitee.com/PaddlePaddle/PaddleOCR

+```

+

+

+```bash

+# Install the dependencies

+pip install -r /home/aistudio/PaddleOCR/requirements.txt

+```

+

+# 3. Data Preparation

+

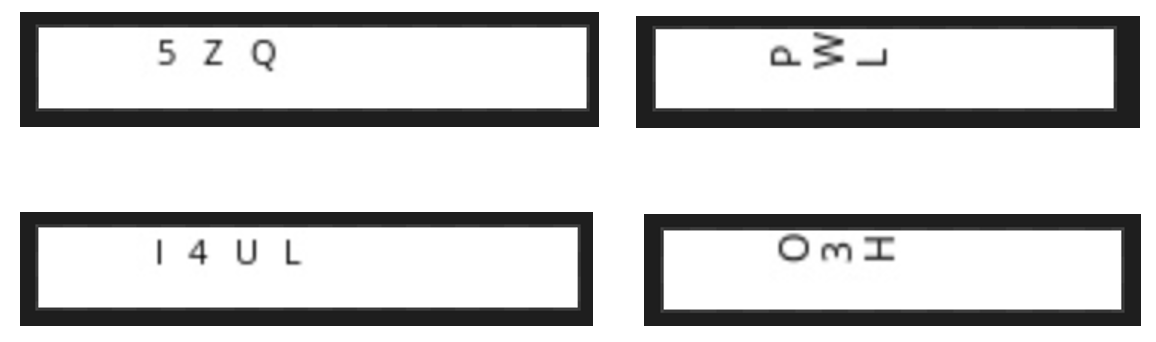

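+We use an image synthesis tool to generate PCB images like those in **Figure 2**. Each full image is only about 25 px high and 150 px wide, the text regions are about 9 px high and 45 px wide, and the images contain text in two orientations, vertical and horizontal.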

+

+

+Figure 2: Sample images from the dataset

+

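+The generated PCB dataset is not open-sourced for now, but you can generate similar data by swapping in your own backgrounds and running the following code: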

+

+```

+cd gen_data

+python3 gen.py --num_img=10

+```

+

+Parameters for image generation:

+

+```

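+num_img: number of images to generate

+font_min_size, font_max_size: minimum and maximum font size

+bg_path: directory of backgrounds for the text regions

+det_bg_path: directory of backgrounds for the full images

+fonts_path: font path

+corpus_path: corpus path

+output_dir: output directory for the generated images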

+```

+

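+Here we provide **100** generated images with identical size and text, as shown in **Figure 3**, so that you can quickly run through the experiments. Extract the dataset with the following command: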

+

+

+Figure 3: Sample from the dataset provided with this case

+

+

+```bash

+tar xf ./data/data148165/dataset.tar -C ./

+```

+

+When generating the dataset, also generate labels in the formats required for detection and recognition training:

+

+

+- **Text detection**

+

+The annotation file has the following format, with the two fields separated by '\t':

+

+```

+" image file name                    json.dumps-encoded image annotations"

+ch4_test_images/img_61.jpg [{"transcription": "MASA", "points": [[310, 104], [416, 141], [418, 216], [312, 179]]}, {...}]

+```

+

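+Before json.dumps encoding, the annotation is a list of dictionaries. In each dictionary, `points` holds the (x, y) coordinates of the four corners of the text box, ordered clockwise starting from the top-left corner, and `transcription` holds the text in that box. ***When `transcription` is "###", the box is treated as invalid and is skipped during training.***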
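To make the format concrete, here is a minimal standard-library sketch that writes one detection label line and reads it back, dropping boxes whose transcription is "###". The helpers `make_det_label_line` and `parse_det_label_line` are hypothetical illustrations, not PaddleOCR functions.

```python
import json


def make_det_label_line(image_path, boxes):
    """Build one label line: image path, a tab, then JSON-encoded boxes.

    `boxes` is a list of (transcription, points) pairs, where points are the
    four (x, y) corners ordered clockwise from the top-left corner.
    """
    annotations = [
        {"transcription": text, "points": [list(p) for p in points]}
        for text, points in boxes
    ]
    return image_path + "\t" + json.dumps(annotations, ensure_ascii=False)


def parse_det_label_line(line):
    """Split on the first tab and drop boxes marked invalid with "###"."""
    image_path, encoded = line.rstrip("\n").split("\t", 1)
    annotations = json.loads(encoded)
    valid = [a for a in annotations if a["transcription"] != "###"]
    return image_path, valid


line = make_det_label_line(
    "imgs/0000.jpg",
    [("E295", [(88, 33), (137, 33), (137, 40), (88, 40)]),
     ("###", [(0, 0), (5, 0), (5, 5), (0, 5)])],
)
path, boxes = parse_det_label_line(line)
print(path, [b["transcription"] for b in boxes])  # imgs/0000.jpg ['E295']
```

`ensure_ascii=False` keeps non-ASCII transcriptions readable in the label file instead of escaping them.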

+

+- **Text recognition**

+

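+The annotation file has the following format. In the txt file, the image path and the label must be separated by '\t' by default; any other separator will cause errors during training.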

+

+```

+" image file name                 label "

+

+train_data/rec/train/word_001.jpg 简单可依赖

+train_data/rec/train/word_002.jpg 用科技让复杂的世界更简单

+...

+```
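Because a wrong separator only surfaces as a training error, it can help to check the label file first. Below is a minimal standard-library sketch. The function `parse_rec_label_file` is hypothetical, not a PaddleOCR API. It splits each line on the first tab and fails loudly when a line has none:

```python
def parse_rec_label_file(lines):
    """Return (image_path, label) pairs, split on the first tab of each line."""
    samples = []
    for lineno, line in enumerate(lines, start=1):
        line = line.rstrip("\n")
        if not line:
            continue  # ignore blank lines
        if "\t" not in line:
            raise ValueError(f"line {lineno}: expected a tab between path and label")
        image_path, label = line.split("\t", 1)
        samples.append((image_path, label))
    return samples


lines = [
    "train_data/rec/train/word_001.jpg\t5ZQ\n",
    "train_data/rec/train/word_002.jpg\tI4UL\n",
]
for path, label in parse_rec_label_file(lines):
    print(path, label)
```

Splitting only on the first tab keeps any later tabs inside the label text.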

+

+

+# 4. Text Detection

+

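+We use the PP-OCRv3 model from [PaddleOCR](https://github.com/PaddlePaddle/PaddleOCR), PaddlePaddle's OCR development kit, for text detection and recognition. PP-OCRv3 upgrades the detection and recognition models in nine aspects in total: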

+

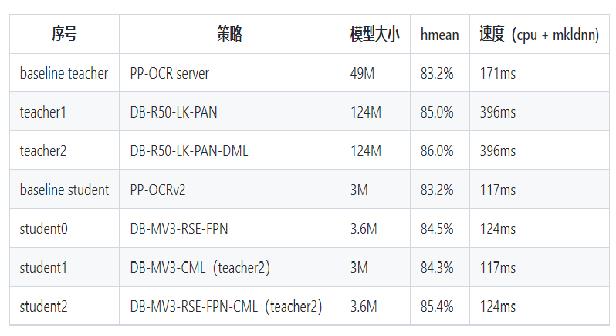

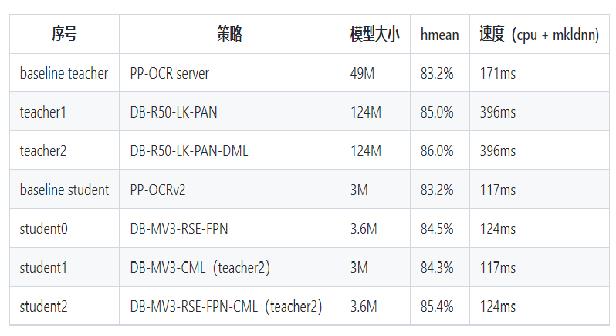

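+- The PP-OCRv3 detection model upgrades the CML (Collaborative Mutual Learning) text detection distillation strategy of PP-OCRv2, further optimizing the teacher and student models separately. For the teacher model, it introduces LK-PAN, a PAN structure with a large receptive field, and adopts the DML distillation strategy. For the student model, it introduces RSE-FPN, an FPN structure with a residual attention mechanism.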

+

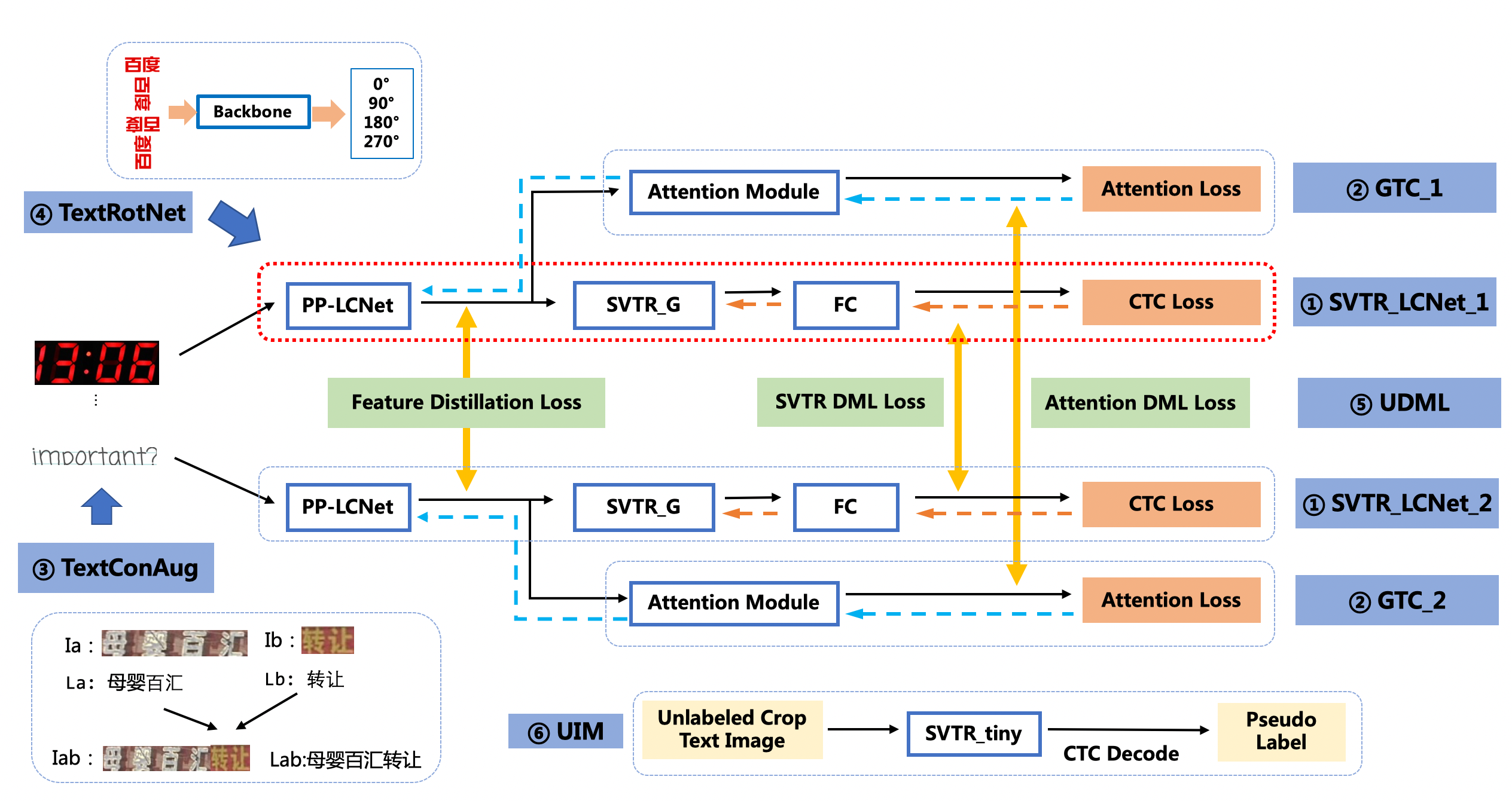

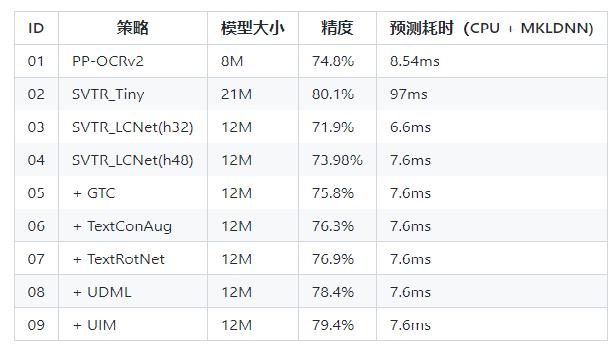

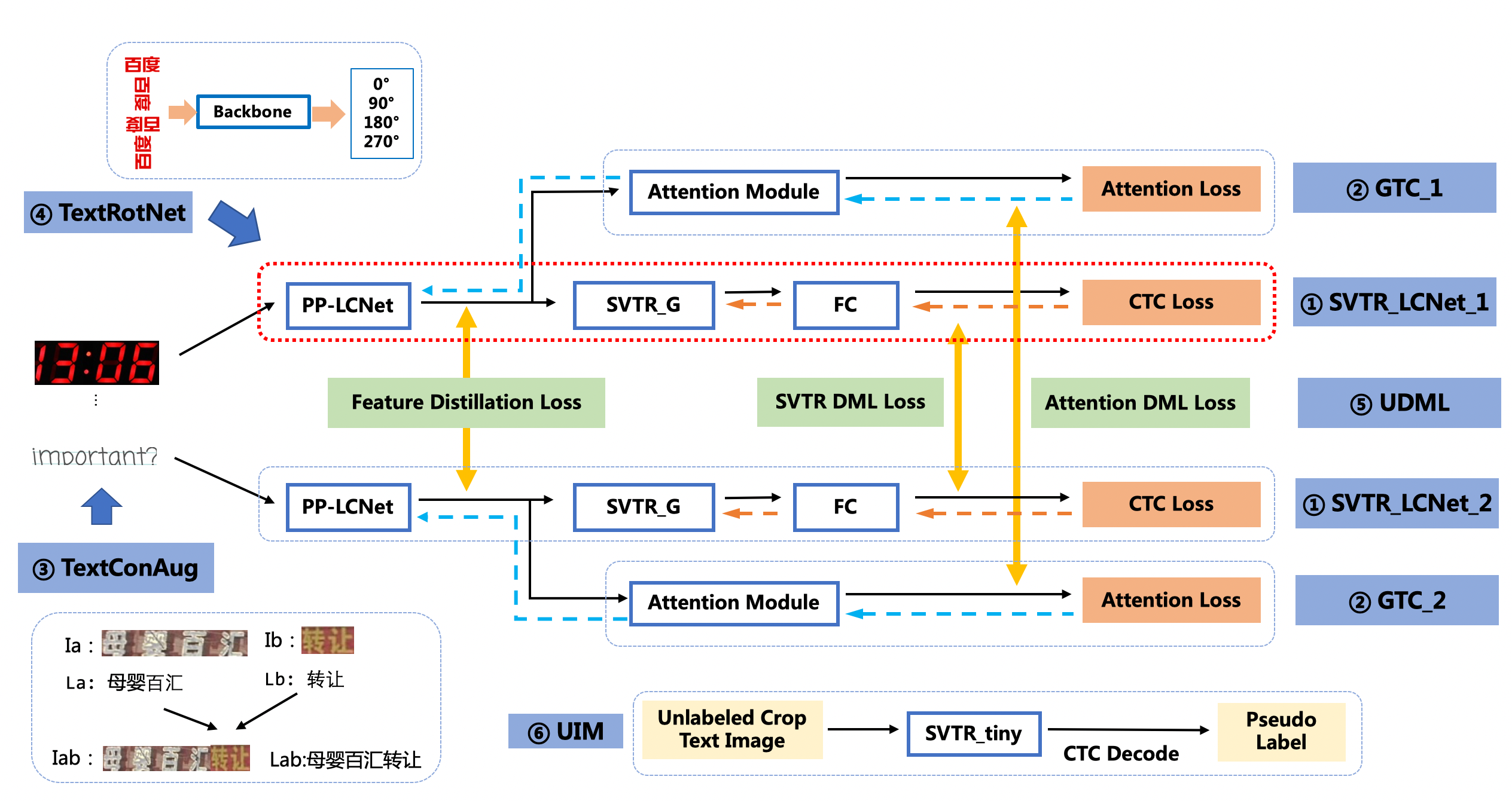

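+- The PP-OCRv3 recognition module is optimized from the SVTR text recognition algorithm. SVTR drops the RNN structure and introduces a Transformer structure to mine the context of text-line images more effectively, which improves recognition. PP-OCRv3 speeds up the model and improves accuracy in six aspects: the lightweight recognition network SVTR_LCNet, attention loss guiding CTC loss training, the TextConAug data augmentation strategy for mining textual context, the TextRotNet self-supervised pretrained model, the UDML unified mutual learning strategy, and the UIM unlabeled data mining scheme.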

+

+For more details, see the PP-OCRv3 [technical report](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/PP-OCRv3_introduction.md).

+

+

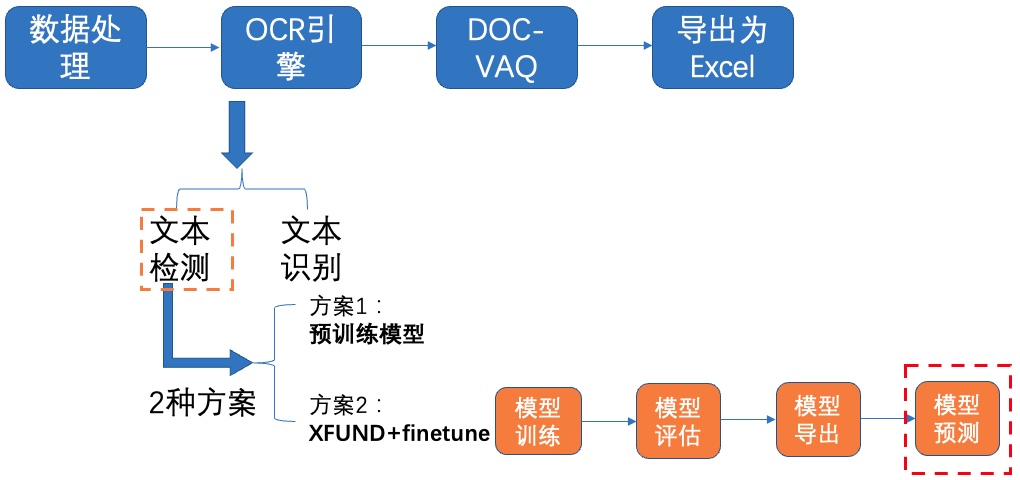

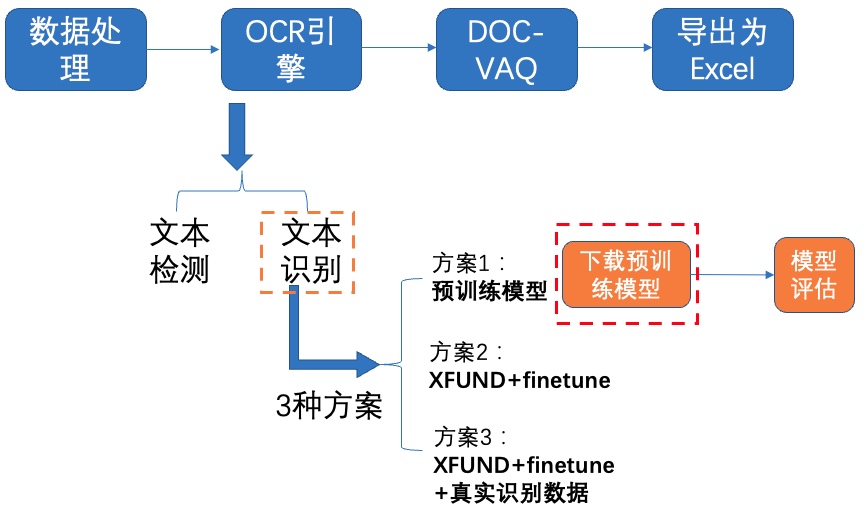

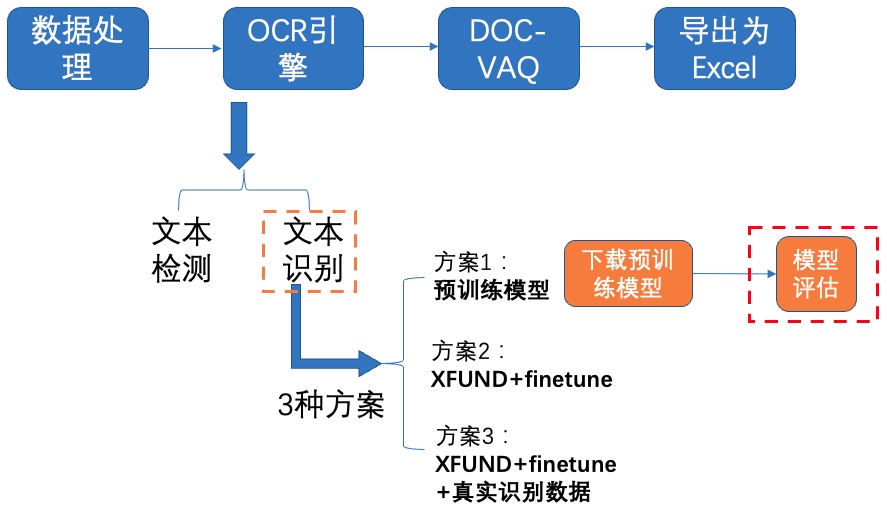

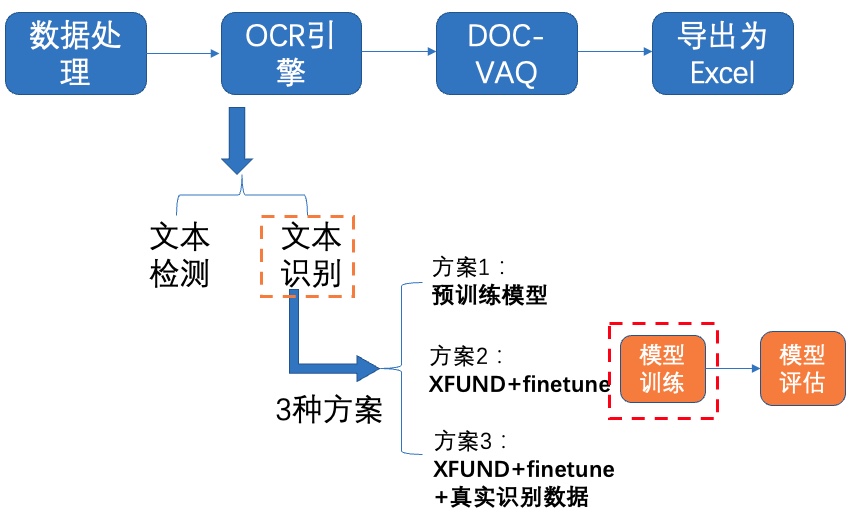

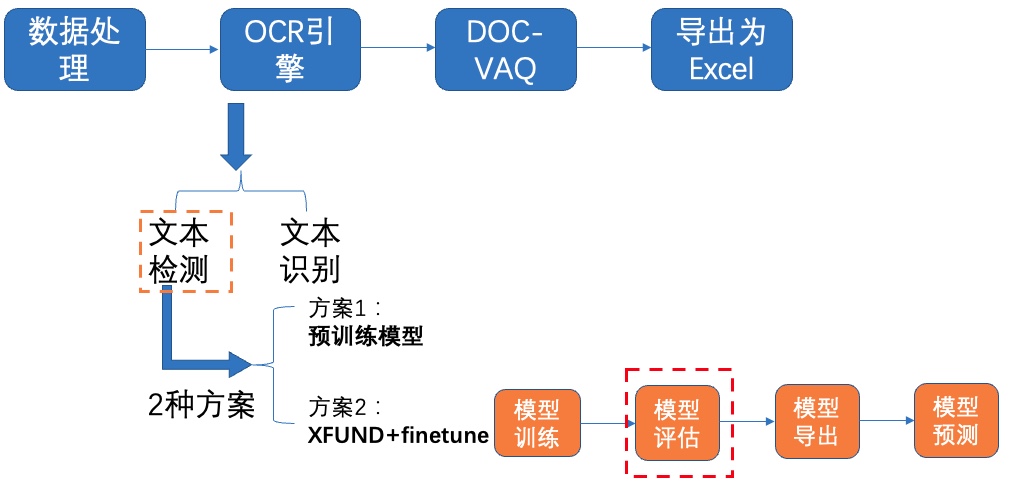

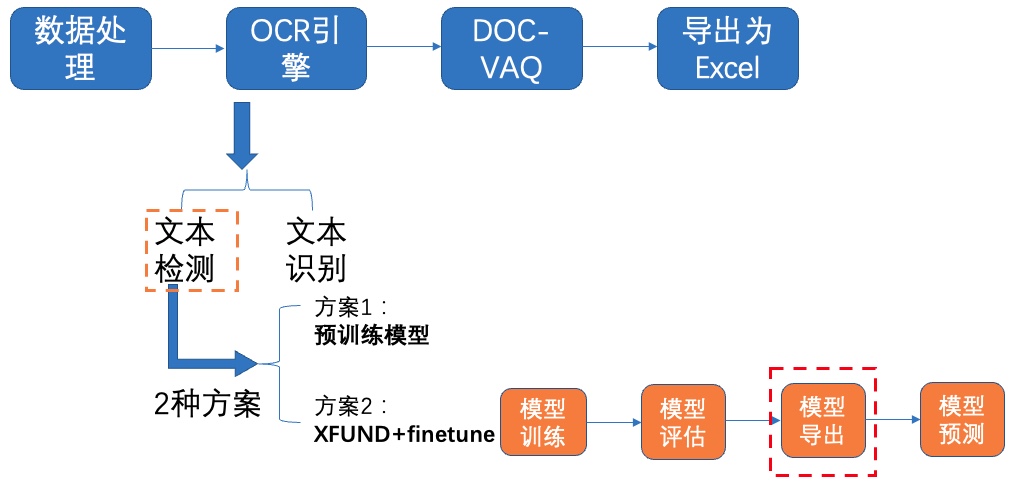

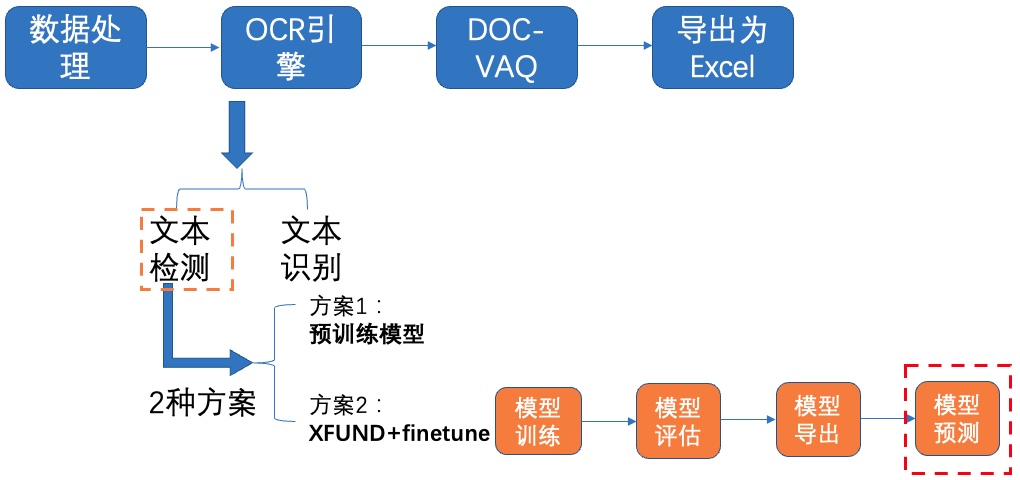

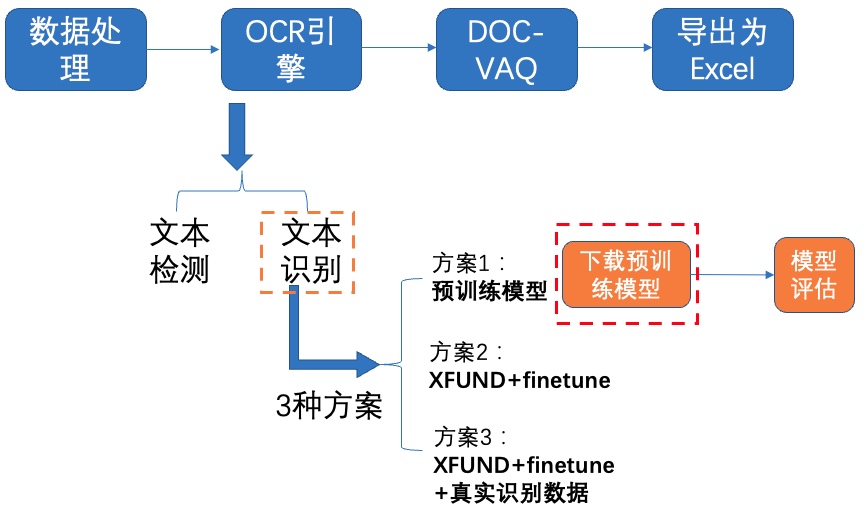

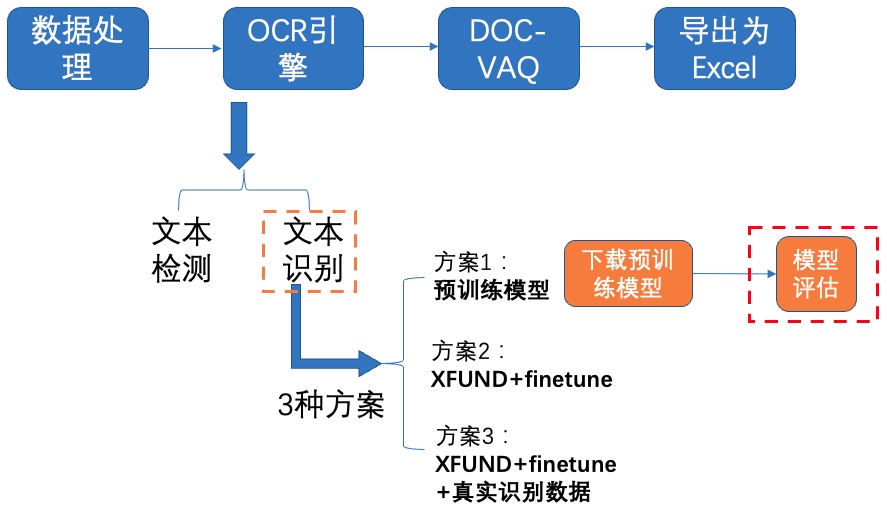

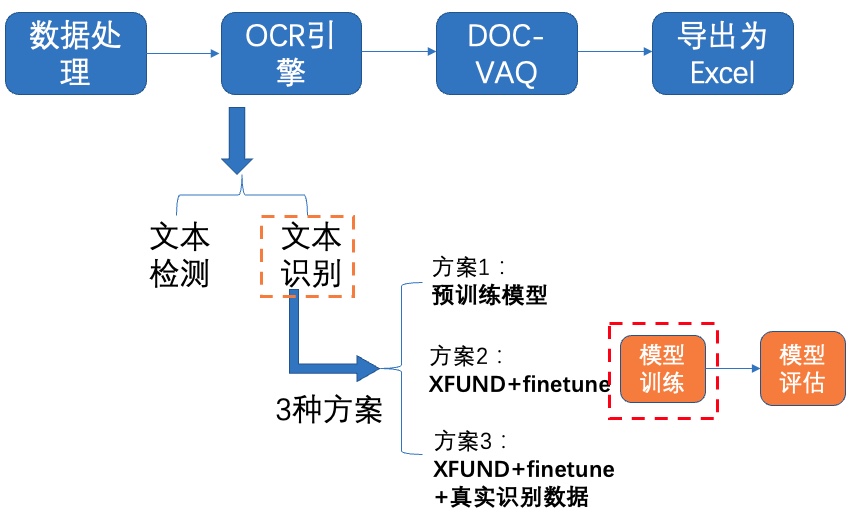

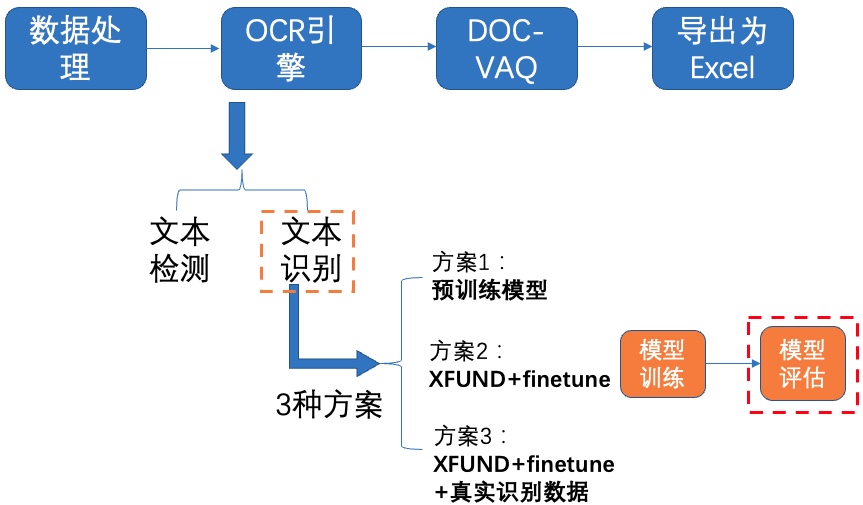

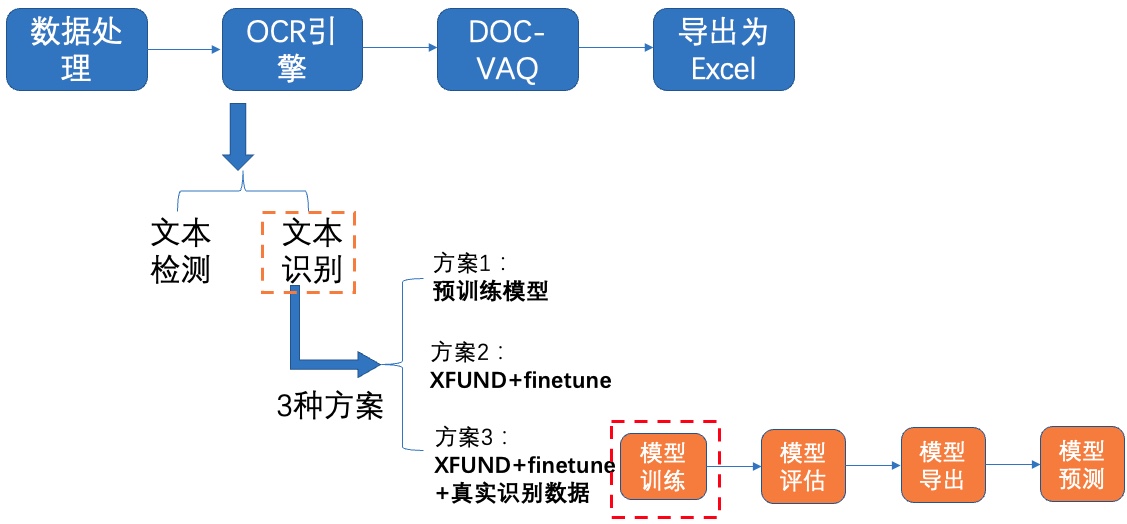

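+We train and evaluate the detection model with **three approaches**:

+- **PP-OCRv3 English ultra-lightweight detection pretrained model, direct evaluation**

+- PP-OCRv3 English ultra-lightweight detection pretrained model + **validation-set padding**, direct evaluation

+- PP-OCRv3 English ultra-lightweight detection pretrained model + **fine-tune**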

+

+## **4.1 Direct Evaluation of the Pretrained Model**

+

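+We first evaluate the pretrained model provided by PaddleOCR on the validation set. If the metrics are good enough, the pretrained model can be used as-is, with no further training.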

+

+The steps for evaluating the pretrained model directly are as follows:

+

+**1) Download the pretrained model**

+

+

+PaddleOCR provides the PP-OCR model series. Some of these models are listed in the table below:

+

+| Description | Model name | Recommended scenario | Detection model | Direction classifier | Recognition model |

+| ------------------------------------- | ----------------------- | --------------- | ------------------------------------------------------------ | ------------------------------------------------------------ | ------------------------------------------------------------ |

+| Chinese/English ultra-lightweight PP-OCRv3 model (16.2M) | ch_PP-OCRv3_xx | Mobile & server | [Inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_infer.tar) / [Trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_det_distill_train.tar) | [Inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [Trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [Inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_infer.tar) / [Trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/chinese/ch_PP-OCRv3_rec_train.tar) |

+| English ultra-lightweight PP-OCRv3 model (13.4M) | en_PP-OCRv3_xx | Mobile & server | [Inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_det_infer.tar) / [Trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_det_distill_train.tar) | [Inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [Trained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [Inference model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_rec_infer.tar) / [Trained model](https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_rec_train.tar) |

+| Chinese/English ultra-lightweight PP-OCRv2 model (13.0M) | ch_PP-OCRv2_xx | Mobile & server | [Inference model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_det_infer.tar) / [Trained model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_det_distill_train.tar) | [Inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [Pretrained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [Inference model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_rec_infer.tar) / [Trained model](https://paddleocr.bj.bcebos.com/PP-OCRv2/chinese/ch_PP-OCRv2_rec_train.tar) |

+| Chinese/English ultra-lightweight PP-OCR mobile model (9.4M) | ch_ppocr_mobile_v2.0_xx | Mobile & server | [Inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_det_infer.tar) / [Pretrained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_det_train.tar) | [Inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [Pretrained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [Inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_rec_infer.tar) / [Pretrained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_rec_pre.tar) |

+| Chinese/English general PP-OCR server model (143.4M) | ch_ppocr_server_v2.0_xx | Server | [Inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_det_infer.tar) / [Pretrained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_det_train.tar) | [Inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_infer.tar) / [Pretrained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar) | [Inference model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_rec_infer.tar) / [Pretrained model](https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_rec_pre.tar) |

+

+For more models (including multilingual ones), see [PP-OCR model downloads](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/doc/doc_ch/models_list.md).

+

+Here we use the PP-OCRv3 English ultra-lightweight detection model. Download and extract the pretrained model:

+

+

+

+

+```bash

+# To use a different model, just update the download link and the extraction command

+cd /home/aistudio/PaddleOCR

+mkdir -p pretrain_models

+cd pretrain_models

+# Download the English pretrained model

+wget https://paddleocr.bj.bcebos.com/PP-OCRv3/english/en_PP-OCRv3_det_distill_train.tar

+tar xf en_PP-OCRv3_det_distill_train.tar && rm -rf en_PP-OCRv3_det_distill_train.tar

+cd ..

+```

+

+**Model evaluation**

+

+

+First, modify the following fields in the config file `configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml`:

+```

+Eval.dataset.data_dir: directory containing the validation images, '/home/aistudio/dataset'

+Eval.dataset.label_file_list: validation label file, '/home/aistudio/dataset/det_gt_val.txt'

+Eval.dataset.transforms.DetResizeForTest: resize settings

+ limit_side_len: 48

+ limit_type: 'min'

+```
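To see why `limit_type: 'min'` with `limit_side_len: 48` matters for such small images, the sketch below reproduces the resize arithmetic as we understand `DetResizeForTest` to perform it: pick a scale ratio from the limit, then round each side to a multiple of 32. The function name `det_resize_shape` is hypothetical. Treat this as an approximation and check `ppocr/data/imaug/operators.py` for the authoritative logic.

```python
def det_resize_shape(h, w, limit_side_len=48, limit_type="min"):
    """Approximate target (height, width) for detection inference.

    Assumption: mirrors our reading of PaddleOCR's DetResizeForTest.
    """
    if limit_type == "max":
        # Shrink only when the longer side exceeds the limit.
        ratio = limit_side_len / max(h, w) if max(h, w) > limit_side_len else 1.0
    elif limit_type == "min":
        # Enlarge only when the shorter side is below the limit.
        ratio = limit_side_len / min(h, w) if min(h, w) < limit_side_len else 1.0
    else:
        raise ValueError("limit_type must be 'min' or 'max'")
    resize_h, resize_w = int(h * ratio), int(w * ratio)
    # Round both sides to a multiple of 32 (and at least 32) for the backbone.
    resize_h = max(int(round(resize_h / 32) * 32), 32)
    resize_w = max(int(round(resize_w / 32) * 32), 32)
    return resize_h, resize_w


print(det_resize_shape(16, 64))    # short side scaled 3x to 48, then rounded
print(det_resize_shape(100, 200))  # short side already >= 48: no scaling
```

For example, `det_resize_shape(16, 64)` returns `(64, 192)`. The 16 px side is scaled to 48 and then rounded to 64, because Python's `round(1.5)` is 2. With `'max'` only large images would ever be shrunk, so it is `'min'` that enlarges the roughly 25 px high PCB images before detection.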

+

+Then evaluate on the validation set with the following command:

+

+

+

+```bash

+cd /home/aistudio/PaddleOCR

+python tools/eval.py \

+ -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml \

+ -o Global.checkpoints="./pretrain_models/en_PP-OCRv3_det_distill_train/best_accuracy"

+```

+

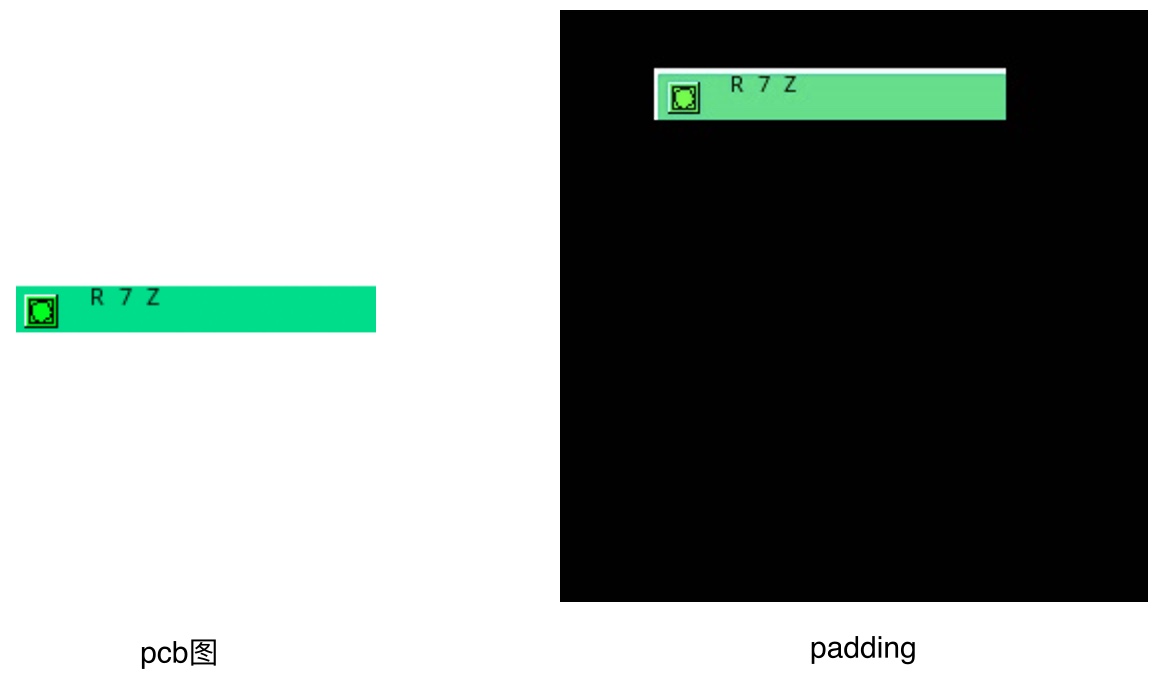

+## **4.2 Pretrained Model + Validation-Set Padding, Direct Evaluation**

+

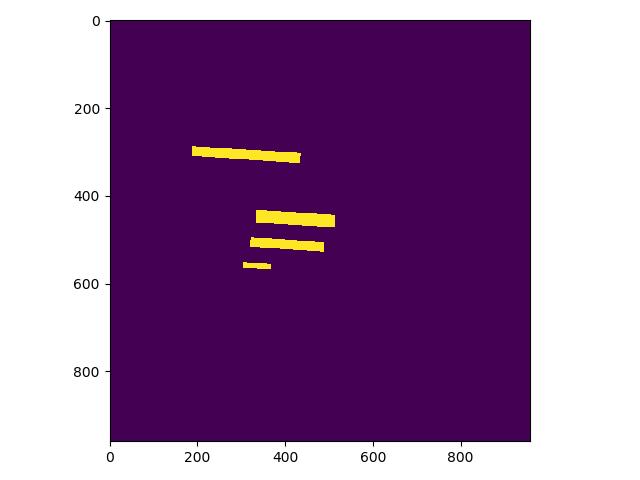

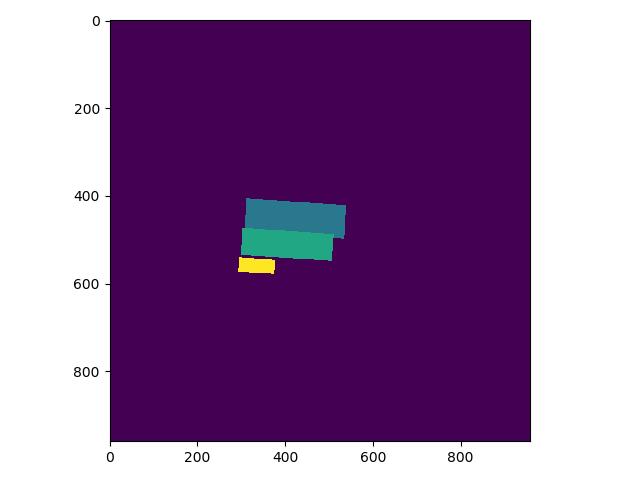

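+Because the PCB images are small (only about 25 px high and about 140 to 170 px wide), we pad the original images before running detection and evaluation. **Figure 4** compares the results before and after padding: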

+

+

+Figure 4: Comparison before and after padding

+

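+We pad all images to 300×300. Because this changes the box coordinates, the annotation file must be updated as well. The padded images are also provided in the `/home/aistudio/dataset` directory if you want to try training and evaluating on them.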
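Updating the labels after padding amounts to shifting every point by the offset at which the original image sits on the new canvas. The source does not say where the image is placed. The sketch below assumes it is centered: if the canvas were only extended to the right and bottom, the coordinates would not change at all. `pad_annotations` is a hypothetical helper.

```python
import json


def pad_annotations(img_h, img_w, annotations, target=300, center=True):
    """Shift box points after padding an img_h x img_w image to target x target.

    Assumption: the original image is pasted at the center of the canvas
    (or at the top-left corner when center=False).
    """
    if img_h > target or img_w > target:
        raise ValueError("image is larger than the padded canvas")
    off_x = (target - img_w) // 2 if center else 0
    off_y = (target - img_h) // 2 if center else 0
    shifted = []
    for ann in annotations:
        points = [[x + off_x, y + off_y] for x, y in ann["points"]]
        shifted.append({"transcription": ann["transcription"], "points": points})
    return shifted


anns = [{"transcription": "E295", "points": [[88, 13], [137, 13], [137, 20], [88, 20]]}]
print(json.dumps(pad_annotations(25, 150, anns)))
```

For a 25×150 image the offset is (75, 137), so every x grows by 75 and every y by 137.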

+

+As before, modify the following fields in `configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_cml.yml`:

+```

+Eval.dataset.data_dir: directory containing the validation images, '/home/aistudio/dataset'

+Eval.dataset.label_file_list: validation label file, '/home/aistudio/dataset/det_gt_padding_val.txt'

+Eval.dataset.transforms.DetResizeForTest: resize settings

+ limit_side_len: 1100

+ limit_type: 'min'

+```

+

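+To obtain the trained models, scan the QR code and fill out the questionnaire to join the official PaddleOCR group. There you can get download links for all vertical-scenario OCR models, the e-book *Dive into OCR* (《动手学OCR》), and other OCR learning materials 🎁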

+

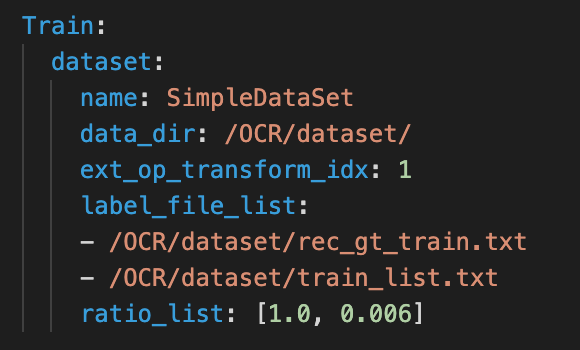

+

+

+Figure 5: Example configuration for adding public general recognition datasets

+

+

+We extract the parameters of the Student model and fine-tune them on the PCB dataset, for example with the following code:

+

+

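+```python

+import paddle

+# Load the pretrained (distillation) model

+all_params = paddle.load("./pretrain_models/ch_PP-OCRv3_rec_train/best_accuracy.pdparams")

+# Inspect the parameter names; the student weights share a common prefix

+print(all_params.keys())

+# Extract the student-model weights and strip the prefix.

+# The prefix must match the keys printed above (e.g. "Student.").

+prefix = "Student."

+s_params = {key[len(prefix):]: value for key, value in all_params.items() if key.startswith(prefix)}

+# Inspect the student-model parameter names

+print(s_params.keys())

+# Save the student weights

+paddle.save(s_params, "./pretrain_models/ch_PP-OCRv3_rec_train/student.pdparams")

+```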
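A wrong prefix silently yields an empty dict, and fine-tuning would then start without the pretrained weights. It can therefore help to factor the extraction into a small function that checks its result. This is a minimal plain-Python sketch. The function `extract_submodel_params` is hypothetical, and it works on any dict, such as the one `paddle.load` returns:

```python
def extract_submodel_params(all_params, prefix="Student."):
    """Return {name_without_prefix: value} for keys that start with prefix."""
    sub = {
        key[len(prefix):]: value
        for key, value in all_params.items()
        if key.startswith(prefix)  # only true prefixes, not substrings
    }
    if not sub:
        raise KeyError(f"no parameter names start with {prefix!r}")
    return sub


fake = {"Teacher.head.w": 1, "Student.backbone.w": 2, "Student.head.b": 3}
print(extract_submodel_params(fake))  # {'backbone.w': 2, 'head.b': 3}
```

Using `startswith` rather than `in` ensures that slicing off `len(prefix)` characters removes exactly the prefix.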

+

+After modifying the parameters, start training for **each approach** with the following command:

+

+

+

+```bash

+cd /home/aistudio/PaddleOCR/

+python3 tools/train.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml

+```

+

+

+Evaluate the trained model, updating the model path `Global.checkpoints`:

+

+

+```bash

+cd /home/aistudio/PaddleOCR/

+python3 tools/eval.py \

+ -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml \

+ -o Global.checkpoints=./output/rec_ppocr_v3/latest

+```

+

+Evaluation metrics for all approaches:

+

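+| No. | Approach | acc | Improvement | Analysis |

+| -------- | -------- | -------- | -------- | -------- |

+| 1 | PP-OCRv3 Chinese/English ultra-lightweight recognition pretrained model, direct evaluation | 46.67% | - | The provided pretrained model generalizes to some extent |

+| 2 | PP-OCRv3 Chinese/English ultra-lightweight recognition pretrained model + fine-tune | 42.02% | -4.65% (vs. 1) | With insufficient data, fine-tuning scores lower than the pretrained model (tuning the hyperparameters may help) |

+| 3 | PP-OCRv3 Chinese/English ultra-lightweight recognition pretrained model + fine-tune + public general recognition datasets | 77.00% | +30.33% (vs. 1) | When data is insufficient, consider adding public data to training |

+| 4 | PP-OCRv3 Chinese/English ultra-lightweight recognition pretrained model + fine-tune + more PCB images | 99.99% | +22.99% (vs. 3) | If more data can be collected, increasing the data volume improves accuracy |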

+

+```

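+Note: The results above were obtained by training and evaluating on 1,500 images (1,200 for training, 300 for testing), on 20,000 images, and with public general recognition datasets added. AI Studio provides only 100 images, so differences in the metrics are expected; what matters is that the strategies work and the trends are the same.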

+```
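The "Improvement" column in the table above does not always use the previous row as its baseline. This short check of the arithmetic, with values copied from the table, shows which baseline each figure corresponds to, in percentage points:

```python
# Recognition accuracy (%) per approach, copied from the table above.
acc = {1: 46.67, 2: 42.02, 3: 77.00, 4: 99.99}


def delta(new, base):
    """Percentage-point difference between two approaches, rounded to 0.01."""
    return round(acc[new] - acc[base], 2)


print(delta(2, 1))  # fine-tune vs. direct evaluation
print(delta(3, 1))  # + public datasets vs. direct evaluation
print(delta(4, 3))  # + more PCB images vs. + public datasets
```

Rounding to two decimals hides floating-point noise such as `22.989999999999995`.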

+

+# 6. Model Export

+

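+An inference model (saved with paddle.jit.save) is a frozen model, usually produced after training, that stores both the model structure and the parameters in files; it is mainly used for deployment. The model saved during training is a checkpoint, which stores only the parameters and is mainly used to resume training. Because an inference model also stores the model structure, it is faster and more flexible for deployment and inference, and is well suited to integration into real systems.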

+

+

+```bash

+# Export the detection model

+python3 tools/export_model.py \

+ -c configs/det/ch_PP-OCRv3/ch_PP-OCRv3_det_student.yml \

+ -o Global.pretrained_model="./output/ch_PP-OCR_V3_det/latest" \

+ Global.save_inference_dir="./inference_model/ch_PP-OCR_V3_det/"

+```

+

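+Because the model above was trained for only 1 epoch, we use the best trained model for prediction instead. It is stored in `/home/aistudio/best_models/`; download and extract it: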

+

+

+```bash

+cd /home/aistudio/best_models/

+wget https://paddleocr.bj.bcebos.com/fanliku/PCB/det_ppocr_v3_en_infer_PCB.tar

+tar xf /home/aistudio/best_models/det_ppocr_v3_en_infer_PCB.tar -C /home/aistudio/PaddleOCR/pretrain_models/

+```

+

+

+```bash

+# Run inference with the detection inference model

+cd /home/aistudio/PaddleOCR/

+python3 tools/infer/predict_det.py \

+ --image_dir="/home/aistudio/dataset/imgs/0000.jpg" \

+ --det_algorithm="DB" \

+ --det_model_dir="./pretrain_models/det_ppocr_v3_en_infer_PCB/" \

+ --det_limit_side_len=48 \

+ --det_limit_type='min' \

+ --det_db_unclip_ratio=2.5 \

+ --use_gpu=True

+```

+

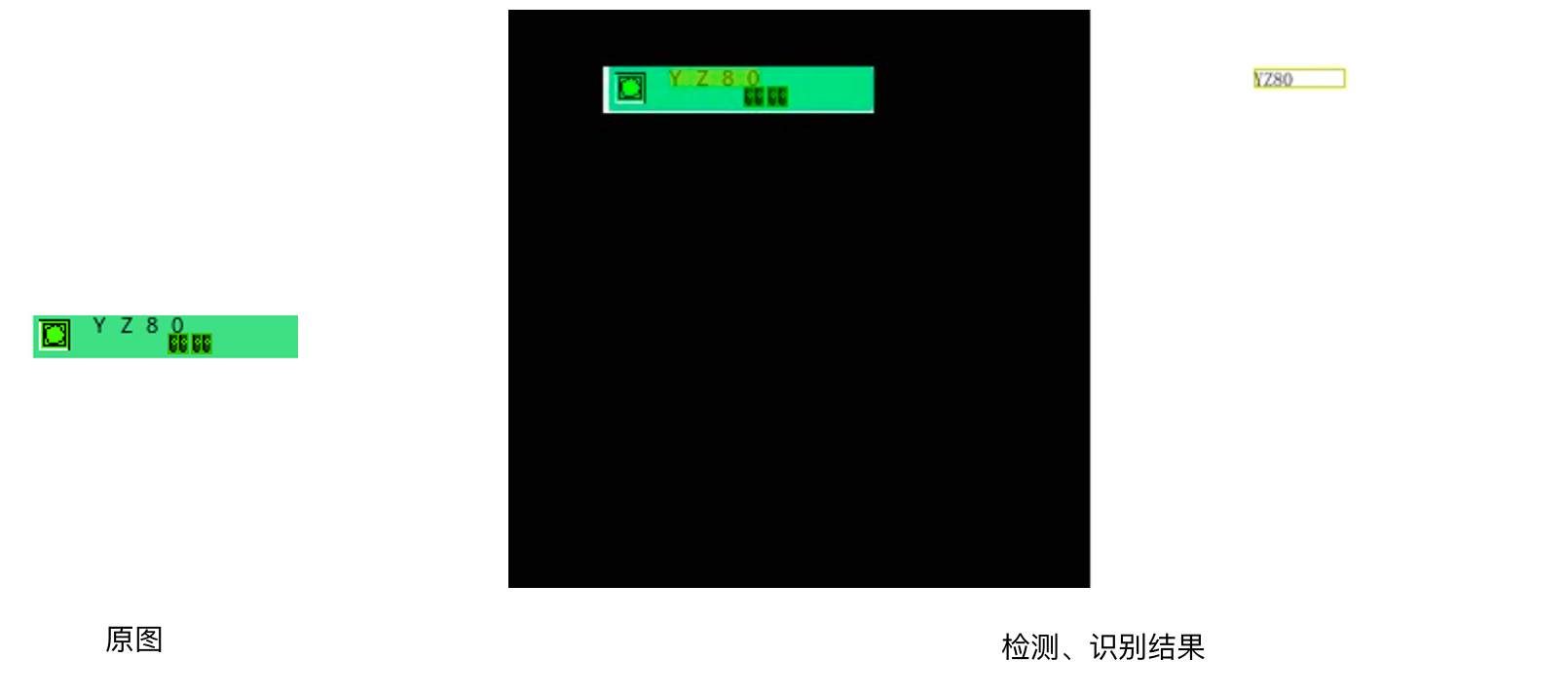

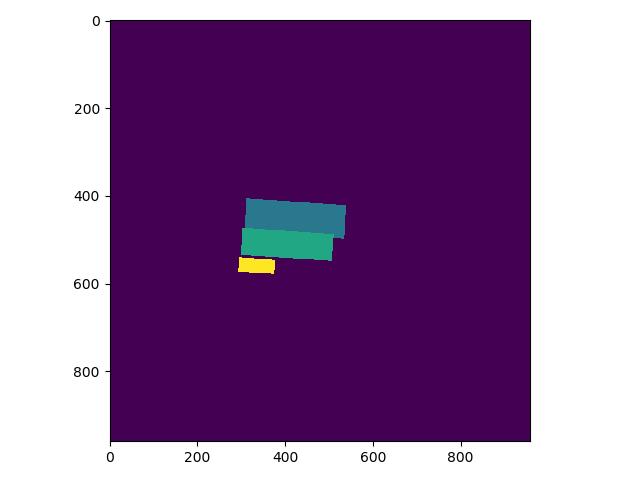

+The results are saved in the `inference_results` directory. The detection result is shown below:

+

+Figure 6: Detection results

+

+

+Similarly, export the recognition model and run inference.

+

+```bash

+# Export the recognition model

+python3 tools/export_model.py \

+ -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec.yml \

+ -o Global.pretrained_model="./output/rec_ppocr_v3/latest" \

+ Global.save_inference_dir="./inference_model/rec_ppocr_v3/"

+

+```

+

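+As with the detection model, the recognition model was trained for only 1 epoch, so we use the best trained model for prediction. It is stored in `/home/aistudio/best_models/`; download and extract it: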

+

+

+```bash

+cd /home/aistudio/best_models/

+wget https://paddleocr.bj.bcebos.com/fanliku/PCB/rec_ppocr_v3_ch_infer_PCB.tar

+tar xf /home/aistudio/best_models/rec_ppocr_v3_ch_infer_PCB.tar -C /home/aistudio/PaddleOCR/pretrain_models/

+```

+

+

+```bash

+# Run inference with the recognition inference model

+cd /home/aistudio/PaddleOCR/

+python3 tools/infer/predict_rec.py \

+ --image_dir="../test_imgs/0000_rec.jpg" \

+ --rec_model_dir="./pretrain_models/rec_ppocr_v3_ch_infer_PCB" \

+ --rec_image_shape="3, 48, 320" \

+ --use_space_char=False \

+ --use_gpu=True

+```
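`--rec_image_shape="3, 48, 320"` gives channels, height, and width. The sketch below illustrates the basic idea as we understand it. Each text crop is scaled to height 48 with its aspect ratio kept, its width is capped at 320, and narrower results are padded on the right. `rec_resized_width` is a hypothetical helper. `predict_rec.py` may widen the target for batches of long text lines, so check the script for the exact behavior.

```python
import math


def rec_resized_width(h, w, img_h=48, img_w=320):
    """Width a crop of size h x w is scaled to before right-padding to img_w.

    Assumption: aspect ratio kept, height scaled to img_h, width capped at img_w.
    """
    ratio = w / float(h)
    return min(img_w, math.ceil(img_h * ratio))


print(rec_resized_width(9, 45))  # a 9x45 PCB text crop: scaled width, then padded
print(rec_resized_width(9, 90))  # a longer crop hits the cap and is squeezed
```

A 9×45 crop (aspect ratio 5) becomes 240 px wide and is padded to 320. A 9×90 crop would need 480 px, so it is squeezed to 320.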

+

+```bash

+# Run detection + recognition inference with both inference models

+cd /home/aistudio/PaddleOCR/

+python3 tools/infer/predict_system.py \

+ --image_dir="../test_imgs/0000.jpg" \

+ --det_model_dir="./pretrain_models/det_ppocr_v3_en_infer_PCB" \

+ --det_limit_side_len=48 \

+ --det_limit_type='min' \

+ --det_db_unclip_ratio=2.5 \

+ --rec_model_dir="./pretrain_models/rec_ppocr_v3_ch_infer_PCB" \

+ --rec_image_shape="3, 48, 320" \

+ --draw_img_save_dir=./det_rec_infer/ \

+ --use_space_char=False \

+ --use_angle_cls=False \

+ --use_gpu=True

+

+```

+

+The end-to-end prediction results are saved in the `det_rec_infer` folder, as shown below:

+

+Figure 7: Detection + recognition results

+

+# 7. End-to-End Evaluation

+

+Next, we describe how to evaluate text detection + recognition end to end. There are three main steps:

+

+1) First, run `tools/infer/predict_system.py` with `image_dir` set to the folder containing the data to evaluate, and collect the saved results:

+

+

+```bash

+# Run detection + recognition inference with both inference models

+python3 tools/infer/predict_system.py \

+ --image_dir="../dataset/imgs/" \

+ --det_model_dir="./pretrain_models/det_ppocr_v3_en_infer_PCB" \

+ --det_limit_side_len=48 \

+ --det_limit_type='min' \

+ --det_db_unclip_ratio=2.5 \

+ --rec_model_dir="./pretrain_models/rec_ppocr_v3_ch_infer_PCB" \

+ --rec_image_shape="3, 48, 320" \

+ --draw_img_save_dir=./det_rec_infer/ \

+ --use_space_char=False \

+ --use_angle_cls=False \

+ --use_gpu=True

+```

+

+The text detection/recognition visualizations are saved in `det_rec_infer/`, and the predictions in `det_rec_infer/system_results.txt`, in the following format: `0018.jpg [{"transcription": "E295", "points": [[88, 33], [137, 33], [137, 40], [88, 40]]}]`
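Each line of `system_results.txt` pairs an image name with a JSON list of boxes. Here is a minimal sketch for loading it back for inspection. `load_system_results` is a hypothetical helper. It splits on the first run of whitespace, so it accepts either a tab or a space between the name and the JSON, and it assumes image names contain no whitespace.

```python
import json


def load_system_results(lines):
    """Map each image name to a list of (transcription, points) pairs."""
    results = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue
        # Split on the first whitespace run only; the JSON may contain spaces.
        name, encoded = line.split(maxsplit=1)
        results[name] = [(d["transcription"], d["points"]) for d in json.loads(encoded)]
    return results


# Usage with the file written by predict_system.py (path from the command above):
# with open("det_rec_infer/system_results.txt", encoding="utf-8") as f:
#     results = load_system_results(f)
sample = '0018.jpg [{"transcription": "E295", "points": [[88, 33], [137, 33], [137, 40], [88, 40]]}]'
print(load_system_results([sample]))
```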

+

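+2) Next, convert the results saved in step 1 into the data format required for end-to-end evaluation. In `tools/end2end/convert_ppocr_label.py`, set the input label path, the mode, and the output label path in the `convert_label` calls, so that both the ground-truth labels and the prediction results are converted: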

+```python

+# Convert the ground-truth labels

+ppocr_label_gt = "/home/aistudio/dataset/det_gt_val.txt"

+convert_label(ppocr_label_gt, "gt", "./save_gt_label/")

+

+# Convert the predictions written by predict_system.py (see --draw_img_save_dir above)

+ppocr_label_pred = "/home/aistudio/PaddleOCR/det_rec_infer/system_results.txt"

+convert_label(ppocr_label_pred, "pred", "./save_PPOCRV2_infer/")

+```

+

+Run `convert_ppocr_label.py`:

+

+

+```bash

+python3 tools/end2end/convert_ppocr_label.py

+```

+

+This produces the following directories:

+```

+├── ./save_gt_label/

+├── ./save_PPOCRV2_infer/

+```

+

+3) Finally, run the end-to-end evaluation with `tools/end2end/eval_end2end.py`, which computes the end-to-end metrics:

+

+

+```bash

+pip install editdistance

+python3 tools/end2end/eval_end2end.py ./save_gt_label/ ./save_PPOCRV2_infer/

+```
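The following is a simplified illustration of how a precision/recall/fmeasure score of this kind can be computed. A prediction counts as a hit when it overlaps a not-yet-matched ground-truth box with IoU >= 0.5 and has exactly the same text. This is not the implementation in `tools/end2end/eval_end2end.py`, which, as we understand it, uses polygon IoU and also reports edit-distance statistics. The function names are hypothetical, and IoU here is computed on the axis-aligned rectangles enclosing each box.

```python
def rect_iou(a, b):
    """IoU of the axis-aligned rectangles enclosing two 4-point boxes."""
    ax0, ay0 = min(p[0] for p in a), min(p[1] for p in a)
    ax1, ay1 = max(p[0] for p in a), max(p[1] for p in a)
    bx0, by0 = min(p[0] for p in b), min(p[1] for p in b)
    bx1, by1 = max(p[0] for p in b), max(p[1] for p in b)
    iw = max(0, min(ax1, bx1) - max(ax0, bx0))
    ih = max(0, min(ay1, by1) - max(ay0, by0))
    inter = iw * ih
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union > 0 else 0.0


def end2end_scores(gts, preds, iou_thresh=0.5):
    """gts, preds: lists of (text, points). Returns (precision, recall, fmeasure)."""
    matched = set()
    hits = 0
    for p_text, p_pts in preds:
        for i, (g_text, g_pts) in enumerate(gts):
            if i in matched:
                continue  # each ground-truth box can be matched only once
            if rect_iou(p_pts, g_pts) >= iou_thresh and p_text == g_text:
                matched.add(i)
                hits += 1
                break
    precision = hits / len(preds) if preds else 0.0
    recall = hits / len(gts) if gts else 0.0
    denom = precision + recall
    fmeasure = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, fmeasure


box1 = [[88, 33], [137, 33], [137, 40], [88, 40]]
box2 = [[10, 5], [40, 5], [40, 12], [10, 12]]
gts = [("E295", box1), ("5ZQ", box2)]
preds = [("E295", box1), ("5Z0", box2)]  # second box located, but text is wrong
print(end2end_scores(gts, preds))
```

Here both boxes are found, but only one transcription is correct, so precision, recall, and fmeasure are all 0.5.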

+

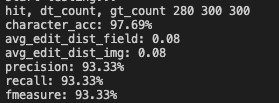

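+We evaluated the `pretrained model + fine-tune` detection model and the `pretrained model + fine-tune on 20,000 PCB images` recognition model on 300 PCB images and obtained the results below. fmeasure is the main metric of interest: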

+

+Figure 8: End-to-end evaluation metrics

+

+```

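+Note: Running the commands above will not reproduce these results, because the dataset is different. Substitute your own trained models and follow the same procedure.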

+```

+

+# 8. Jetson Deployment

+

+Deploying the model on a Jetson Nano takes only the following simple steps:

+

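+**1. Prepare the environment on the Jetson Nano developer board:**

+

+* Install PaddlePaddle

+

+* Download PaddleOCR and install its dependencies

+

+**2. Run prediction**

+

+* Download the inference models to the Jetson

+

+* Run detection, recognition, and the combined detection + recognition pipeline

+

+For details, see the [reference workflow](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.5/deploy/Jetson/readme_ch.md).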

+

+# 9. Summary

+

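+For detection, we tried three approaches with the PP-OCRv3 pretrained model on the PCB dataset: direct evaluation, validation-set padding, and fine-tuning. For recognition, we tried four approaches: direct evaluation, fine-tuning, adding public general recognition datasets, and adding more PCB images. The metrics are compared below: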

+

+* Detection

+

+

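+| No. | Approach | hmean | Improvement | Analysis |

+| ---- | -------------------------------------------------------- | ------ | -------- | ------------------------------------- |

+| 1 | PP-OCRv3 English ultra-lightweight detection pretrained model, direct evaluation | 64.64% | - | The provided pretrained model generalizes to some extent |

+| 2 | PP-OCRv3 English ultra-lightweight detection pretrained model + validation-set padding, direct evaluation | 72.13% | +7.49% (vs. 1) | Padding improves detection on small images |

+| 3 | PP-OCRv3 English ultra-lightweight detection pretrained model + fine-tune | 100.00% | +27.87% (vs. 2) | Fine-tuning improves results in the vertical scenario |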

+

+* Recognition

+

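+| No. | Approach | acc | Improvement | Analysis |

+| ---- | ------------------------------------------------------------ | ------ | -------- | ------------------------------------------------------------ |

+| 1 | PP-OCRv3 Chinese/English ultra-lightweight recognition pretrained model, direct evaluation | 46.67% | - | The provided pretrained model generalizes to some extent |

+| 2 | PP-OCRv3 Chinese/English ultra-lightweight recognition pretrained model + fine-tune | 42.02% | -4.65% (vs. 1) | With insufficient data, fine-tuning scores lower than the pretrained model (tuning the hyperparameters may help) |

+| 3 | PP-OCRv3 Chinese/English ultra-lightweight recognition pretrained model + fine-tune + public general recognition datasets | 77.00% | +30.33% (vs. 1) | When data is insufficient, consider adding public data to training |

+| 4 | PP-OCRv3 Chinese/English ultra-lightweight recognition pretrained model + fine-tune + more PCB images | 99.99% | +22.99% (vs. 3) | If more data can be collected, increasing the data volume improves accuracy |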

+

+* End-to-end

+

+| det | rec | fmeasure |

+| --------------------------------------------- | ------------------------------------------------------------ | -------- |

+| PP-OCRv3 English ultra-lightweight detection pretrained model + fine-tune | PP-OCRv3 Chinese/English ultra-lightweight recognition pretrained model + fine-tune + more PCB images | 93.30% |

+

+*Conclusion*

+

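+Without fine-tuning, the PP-OCRv3 detection model still reaches 64.64% on the PCB dataset, which shows that it generalizes. Padding the validation set raises accuracy by about 7.5 percentage points, so padding is a simple way to improve detection on small images. Fine-tuning improves detection dramatically, reaching 100%.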

+

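+Comparing recognition schemes 1 and 2 shows that, when training data is scarce, the pretrained model can be more accurate than a fine-tuned one. It is therefore worth evaluating the pretrained model directly first. To improve further with limited data, adding a public general-purpose recognition dataset is very effective: accuracy rises by about 30 points over the pretrained baseline. Finally, if enough real-scene data can be collected, increasing the data volume improves the model further, to 99.99% accuracy.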
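Adding a public dataset means training on a mixture of sources. In PaddleOCR training configs this is typically done by listing several label files under `Train.dataset.label_file_list`, with a per-file sampling fraction in `ratio_list`. The plain-Python sketch below mimics that idea; it is not PaddleOCR's code. It shows how many lines from each source end up in one epoch. The dataset sizes and the `mix_label_lists` helper are hypothetical.

```python
import random
from typing import Dict, List, Sequence


def mix_label_lists(sources: Dict[str, Sequence[str]],
                    ratios: Dict[str, float],
                    seed: int = 0) -> List[str]:
    """Draw round(len * ratio) lines from each source (ratio in [0, 1]) and shuffle."""
    rng = random.Random(seed)
    mixed: List[str] = []
    for name, lines in sources.items():
        k = round(len(lines) * ratios.get(name, 1.0))
        mixed.extend(rng.sample(list(lines), k))
    rng.shuffle(mixed)
    return mixed


# Hypothetical sizes: 100 real PCB crops and 1,000 public-dataset crops.
pcb = [f"pcb/{i}.jpg\tABC" for i in range(100)]
public = [f"public/{i}.jpg\tXYZ" for i in range(1000)]
epoch = mix_label_lists({"pcb": pcb, "public": public}, {"pcb": 1.0, "public": 0.1})
print(len(epoch), sum(line.startswith("pcb/") for line in epoch))  # 200 100
```

Down-sampling the large public set keeps the scarce real PCB data from being drowned out while still adding variety.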

+

+# More Resources

+

+- For more deep learning knowledge, industry case studies, interview guides, and more, see [awesome-DeepLearning](https://github.com/paddlepaddle/awesome-DeepLearning)

+

+- For more PaddleOCR tutorials, see [PaddleOCR](https://github.com/PaddlePaddle/PaddleOCR/tree/dygraph)

+

+

+- For PaddlePaddle framework materials, see the [PaddlePaddle deep learning platform](https://www.paddlepaddle.org.cn/?fr=paddleEdu_aistudio)

+

+# References

+

+* Data generation code repository: https://github.com/zcswdt/Color_OCR_image_generator

diff --git "a/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/background/bg.jpg" "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/background/bg.jpg"

new file mode 100644

index 0000000..3cb6eab

Binary files /dev/null and "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/background/bg.jpg" differ

diff --git "a/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/corpus/text.txt" "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/corpus/text.txt"

new file mode 100644

index 0000000..8b8cb79

--- /dev/null

+++ "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/corpus/text.txt"

@@ -0,0 +1,30 @@

+5ZQ

+I4UL

+PWL

+SNOG

+ZL02

+1C30

+O3H

+YHRS

+N03S

+1U5Y

+JTK

+EN4F

+YKJ

+DWNH

+R42W

+X0V

+4OF5

+08AM

+Y93S

+GWE2

+0KR

+9U2A

+DBQ

+Y6J

+ROZ

+K06

+KIEY

+NZQJ

+UN1B

+6X4

\ No newline at end of file

diff --git "a/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/det_background/1.png" "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/det_background/1.png"

new file mode 100644

index 0000000..8a49eaa

Binary files /dev/null and "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/det_background/1.png" differ

diff --git "a/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/det_background/2.png" "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/det_background/2.png"

new file mode 100644

index 0000000..c3fcc0c

Binary files /dev/null and "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/det_background/2.png" differ

diff --git "a/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/gen.py" "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/gen.py"

new file mode 100644

index 0000000..97024d1

--- /dev/null

+++ "b/applications/PCB\345\255\227\347\254\246\350\257\206\345\210\253/gen_data/gen.py"

@@ -0,0 +1,263 @@

+# copyright (c) 2020 PaddlePaddle Authors. All Rights Reserve.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+"""

+This code is adapted from:

+https://github.com/zcswdt/Color_OCR_image_generator

+"""

+import os

+import random

+from PIL import Image, ImageDraw, ImageFont

+import json

+import argparse

+

+

+def get_char_lines(txt_root_path):
+    """
+    desc:get corpus line
+    """
+    txt_files = os.listdir(txt_root_path)
+    char_lines = []
+    for txt in txt_files:
+        f = open(os.path.join(txt_root_path, txt), mode='r', encoding='utf-8')
+        lines = f.readlines()
+        f.close()
+        for line in lines:
+            char_lines.append(line.strip())
+    return char_lines

+

+

+def get_horizontal_text_picture(image_file, chars, fonts_list, cf):
+    """
+    desc:gen horizontal text picture
+    """
+    img = Image.open(image_file)
+    if img.mode != 'RGB':
+        img = img.convert('RGB')
+    img_w, img_h = img.size
+
+    # random choice font
+    font_path = random.choice(fonts_list)
+    # random choice font size
+    font_size = random.randint(cf.font_min_size, cf.font_max_size)
+    font = ImageFont.truetype(font_path, font_size)
+
+    ch_w = []
+    ch_h = []
+    for ch in chars:
+        left, top, right, bottom = font.getbbox(ch)
+        wt, ht = right - left, bottom - top
+        ch_w.append(wt)
+        ch_h.append(ht)
+    f_w = sum(ch_w)
+    f_h = max(ch_h)
+
+    # add space
+    char_space_width = max(ch_w)
+    f_w += (char_space_width * (len(chars) - 1))
+
+    x1 = random.randint(0, img_w - f_w)
+    y1 = random.randint(0, img_h - f_h)
+    x2 = x1 + f_w
+    y2 = y1 + f_h
+
+    crop_y1 = y1
+    crop_x1 = x1
+    crop_y2 = y2
+    crop_x2 = x2
+
+    best_color = (0, 0, 0)
+    draw = ImageDraw.Draw(img)
+    for i, ch in enumerate(chars):
+        draw.text((x1, y1), ch, best_color, font=font)
+        x1 += (ch_w[i] + char_space_width)
+    crop_img = img.crop((crop_x1, crop_y1, crop_x2, crop_y2))
+    return crop_img, chars

+

+

+def get_vertical_text_picture(image_file, chars, fonts_list, cf):
+    """
+    desc:gen vertical text picture
+    """
+    img = Image.open(image_file)
+    if img.mode != 'RGB':
+        img = img.convert('RGB')
+    img_w, img_h = img.size
+    # random choice font
+    font_path = random.choice(fonts_list)
+    # random choice font size
+    font_size = random.randint(cf.font_min_size, cf.font_max_size)
+    font = ImageFont.truetype(font_path, font_size)
+
+    ch_w = []
+    ch_h = []
+    for ch in chars:
+        left, top, right, bottom = font.getbbox(ch)
+        wt, ht = right - left, bottom - top
+        ch_w.append(wt)
+        ch_h.append(ht)
+    f_w = max(ch_w)
+    f_h = sum(ch_h)
+
+    x1 = random.randint(0, img_w - f_w)
+    y1 = random.randint(0, img_h - f_h)
+    x2 = x1 + f_w
+    y2 = y1 + f_h
+
+    crop_y1 = y1
+    crop_x1 = x1
+    crop_y2 = y2
+    crop_x2 = x2
+
+    best_color = (0, 0, 0)
+    draw = ImageDraw.Draw(img)
+    i = 0
+    for ch in chars:
+        draw.text((x1, y1), ch, best_color, font=font)
+        y1 = y1 + ch_h[i]
+        i = i + 1
+    crop_img = img.crop((crop_x1, crop_y1, crop_x2, crop_y2))
+    crop_img = crop_img.transpose(Image.ROTATE_90)
+    return crop_img, chars

+

+

+def get_fonts(fonts_path):
+    """
+    desc: get all fonts
+    """
+    font_files = os.listdir(fonts_path)
+    fonts_list = []
+    for font_file in font_files:
+        font_path = os.path.join(fonts_path, font_file)
+        fonts_list.append(font_path)
+    return fonts_list

+

+if __name__ == '__main__':
+    parser = argparse.ArgumentParser()
+    parser.add_argument('--num_img', type=int, default=30, help="Number of images to generate")
+    parser.add_argument('--font_min_size', type=int, default=11)
+    parser.add_argument('--font_max_size', type=int, default=12,
+                        help="Help adjust the size of the generated text and the size of the picture")
+    parser.add_argument('--bg_path', type=str, default='./background',
+                        help='The generated text pictures will be pasted onto the pictures of this folder')
+    parser.add_argument('--det_bg_path', type=str, default='./det_background',
+                        help='The generated text pictures will use the pictures of this folder as the background')
+    parser.add_argument('--fonts_path', type=str, default='../../StyleText/fonts',
+                        help='The font used to generate the picture')
+    parser.add_argument('--corpus_path', type=str, default='./corpus',
+                        help='The corpus used to generate the text picture')
+    parser.add_argument('--output_dir', type=str, default='./output/', help='Images save dir')
+
+    cf = parser.parse_args()
+    # save path (makedirs also creates missing parent directories)
+    os.makedirs(cf.output_dir, exist_ok=True)
+
+    # get corpus
+    txt_root_path = cf.corpus_path
+    char_lines = get_char_lines(txt_root_path=txt_root_path)
+
+    # get all fonts
+    fonts_path = cf.fonts_path
+    fonts_list = get_fonts(fonts_path)
+
+    # rec bg
+    img_root_path = cf.bg_path
+    imnames = os.listdir(img_root_path)
+
+    # det bg
+    det_bg_path = cf.det_bg_path
+    bg_pics = os.listdir(det_bg_path)
+
+    # OCR det files; os.path.join works whether or not output_dir ends with '/'
+    det_val_file = open(os.path.join(cf.output_dir, 'det_gt_val.txt'), 'w', encoding='utf-8')
+    det_train_file = open(os.path.join(cf.output_dir, 'det_gt_train.txt'), 'w', encoding='utf-8')
+    # det imgs
+    det_save_dir = 'imgs/'
+    os.makedirs(os.path.join(cf.output_dir, det_save_dir), exist_ok=True)
+    det_val_save_dir = 'imgs_val/'
+    os.makedirs(os.path.join(cf.output_dir, det_val_save_dir), exist_ok=True)
+
+    # OCR rec files
+    rec_val_file = open(os.path.join(cf.output_dir, 'rec_gt_val.txt'), 'w', encoding='utf-8')
+    rec_train_file = open(os.path.join(cf.output_dir, 'rec_gt_train.txt'), 'w', encoding='utf-8')
+    # rec imgs
+    rec_save_dir = 'rec_imgs/'
+    os.makedirs(os.path.join(cf.output_dir, rec_save_dir), exist_ok=True)
+    rec_val_save_dir = 'rec_imgs_val/'
+    os.makedirs(os.path.join(cf.output_dir, rec_val_save_dir), exist_ok=True)
+
+    # a sample count, not a ratio: samples with index i < val_ratio go to the validation split
+    val_ratio = cf.num_img * 0.2

+

+    print('start generating...')
+    for i in range(0, cf.num_img):
+        # cycle through the corpus so --num_img may exceed the number of corpus lines
+        text = char_lines[i % len(char_lines)]
+        imname = random.choice(imnames)
+        img_path = os.path.join(img_root_path, imname)
+
+        rnd = random.random()
+        # gen horizontal text picture
+        if rnd < 0.5:
+            gen_img, chars = get_horizontal_text_picture(img_path, text, fonts_list, cf)
+            ori_w, ori_h = gen_img.size
+            gen_img = gen_img.crop((0, 3, ori_w, ori_h))
+        # gen vertical text picture
+        else:
+            gen_img, chars = get_vertical_text_picture(img_path, text, fonts_list, cf)
+            ori_w, ori_h = gen_img.size
+            gen_img = gen_img.crop((3, 0, ori_w, ori_h))
+
+        ori_w, ori_h = gen_img.size
+
+        # rec imgs
+        save_img_name = str(i).zfill(4) + '.jpg'
+        if i < val_ratio:
+            save_dir = os.path.join(rec_val_save_dir, save_img_name)
+            line = save_dir + '\t' + text + '\n'
+            rec_val_file.write(line)
+        else:
+            save_dir = os.path.join(rec_save_dir, save_img_name)
+            line = save_dir + '\t' + text + '\n'
+            rec_train_file.write(line)
+        gen_img.save(os.path.join(cf.output_dir, save_dir), quality=95, subsampling=0)
+
+        # det img
+        # random choice bg
+        bg_pic = random.choice(bg_pics)
+        det_img = Image.open(os.path.join(det_bg_path, bg_pic))
+        # the PCB position is fixed, modify it according to your own scenario
+        if bg_pic == '1.png':
+            x1 = 38
+            y1 = 3
+        else:
+            x1 = 34
+            y1 = 1
+
+        det_img.paste(gen_img, (x1, y1))
+        # text pos
+        chars_pos = [[x1, y1], [x1 + ori_w, y1], [x1 + ori_w, y1 + ori_h], [x1, y1 + ori_h]]
+        label = [{"transcription": text, "points": chars_pos}]
+        if i < val_ratio:
+            save_dir = os.path.join(det_val_save_dir, save_img_name)
+            det_val_file.write(save_dir + '\t' + json.dumps(
+                label, ensure_ascii=False) + '\n')
+        else:
+            save_dir = os.path.join(det_save_dir, save_img_name)
+            det_train_file.write(save_dir + '\t' + json.dumps(
+                label, ensure_ascii=False) + '\n')
+        det_img.save(os.path.join(cf.output_dir, save_dir), quality=95, subsampling=0)
+
+    # flush and close the label files
+    for label_file in (det_val_file, det_train_file, rec_val_file, rec_train_file):
+        label_file.close()
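The script writes two label formats:

- Recognition files (`rec_gt_train.txt`, `rec_gt_val.txt`) hold one `image_path<TAB>text` line per crop.
- Detection files (`det_gt_train.txt`, `det_gt_val.txt`) hold one `image_path<TAB>JSON` line. The JSON is a list of `{"transcription": ..., "points": ...}` objects with four `[x, y]` corner points.

Samples with index `i < num_img * 0.2` go to the validation files. With the default `--num_img 30`, that gives 6 validation and 24 training samples. The helpers below are a hypothetical sketch, not part of this PR, for parsing and sanity-checking those lines before pointing a training config at the output.

```python
import json
from typing import Dict, List, Tuple


def parse_rec_line(line: str) -> Tuple[str, str]:
    """Split a recognition label line into (image_path, text)."""
    path, text = line.rstrip("\n").split("\t", 1)
    return path, text


def parse_det_line(line: str) -> Tuple[str, List[Dict]]:
    """Split a detection label line into (image_path, boxes) and check each box."""
    path, payload = line.rstrip("\n").split("\t", 1)
    boxes = json.loads(payload)
    for box in boxes:
        pts = box["points"]
        if len(pts) != 4 or any(len(p) != 2 for p in pts):
            raise ValueError(f"{path}: expected 4 (x, y) points, got {pts}")
    return path, boxes


# A line in the same shape gen.py writes (values are illustrative).
label = [{"transcription": "5ZQ", "points": [[38, 3], [70, 3], [70, 15], [38, 15]]}]
det_line = "imgs_val/0000.jpg\t" + json.dumps(label, ensure_ascii=False) + "\n"
print(parse_det_line(det_line)[1][0]["transcription"])  # 5ZQ
print(parse_rec_line("rec_imgs_val/0000.jpg\t5ZQ\n"))    # ('rec_imgs_val/0000.jpg', '5ZQ')
```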

diff --git a/applications/README.md b/applications/README.md

new file mode 100644

index 0000000..950adf7

--- /dev/null

+++ b/applications/README.md

@@ -0,0 +1,78 @@

+[English](README_en.md) | 简体中文

+

+# Applications

+

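+PaddleOCR's scenario applications cover the main vertical OCR use cases in general, manufacturing, finance, and transportation settings. Building on the general capabilities of PP-OCR and PP-Structure, they use notebooks to demonstrate fine-tuning on scenario data, model optimization, data augmentation, and more. The goal is to give developers examples and ideas for putting OCR applications into production quickly.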

+

+- [Tutorials](#1)
+    - [General](#11)
+    - [Manufacturing](#12)
+    - [Finance](#13)
+    - [Transportation](#14)

+

+- [Model Download](#2)

+

+

+

+## Tutorials

+

+

+

+### General

+

+| Case | Highlights | Model Download | Tutorial | Example |

+| ---------------------- | ------------------------------------------------------------ | -------------- | --------------------------------------- | ------------------------------------------------------------ |

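+| High-accuracy Chinese recognition model SVTR | 3% more accurate than the PP-OCRv3 recognition model; suitable for data mining or scenarios with relaxed inference-speed requirements | [Model Download](#2) | [中文](./高精度中文识别模型.md)/English |  |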

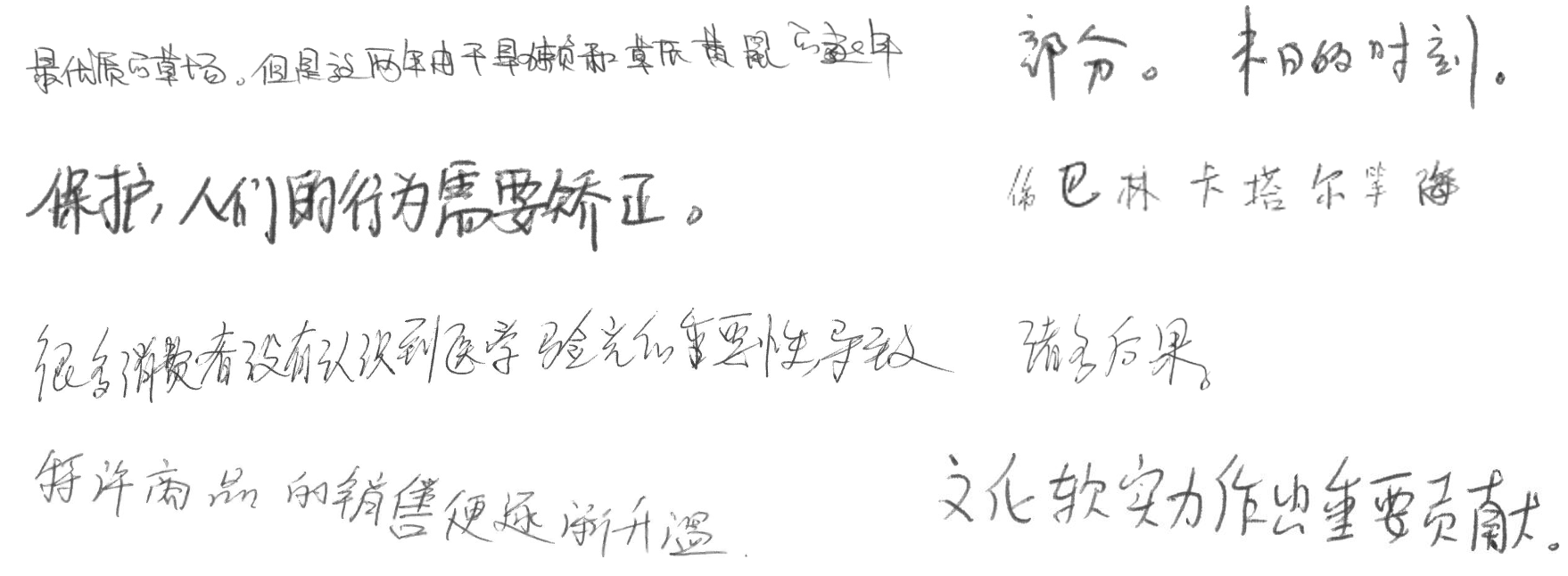

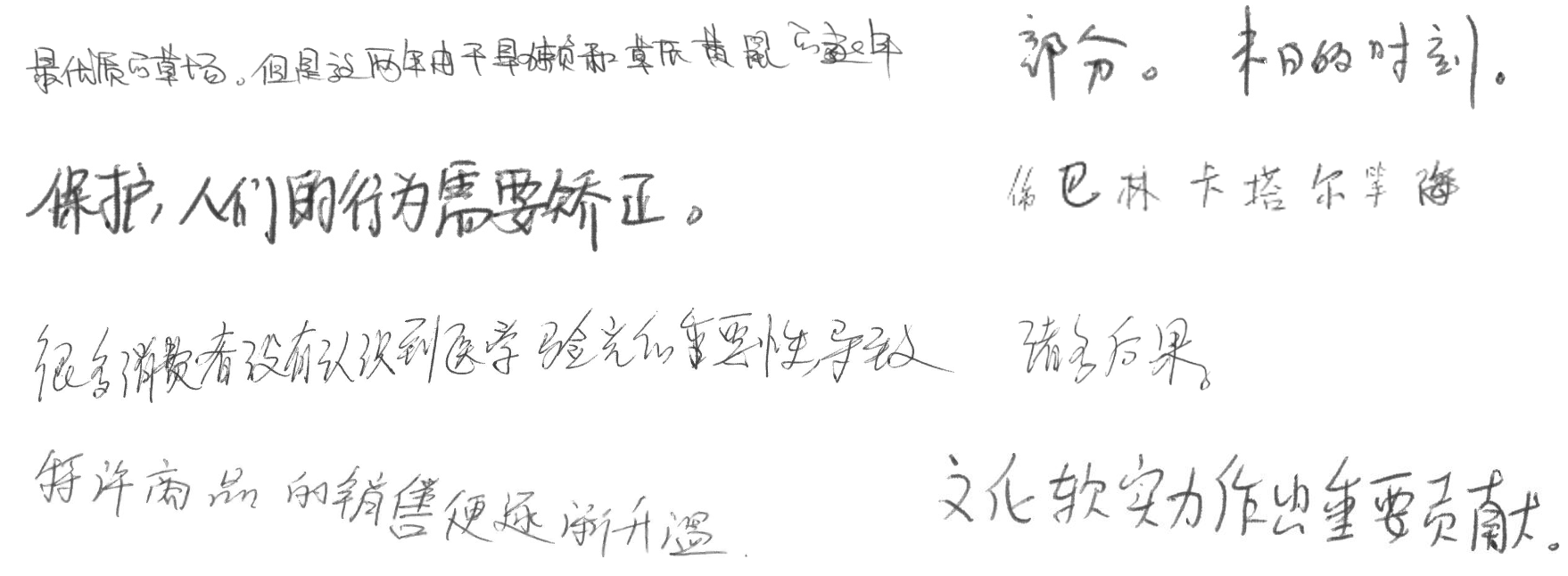

+| 手写体识别 | 新增字形支持 | [模型下载](#2) | [中文](./手写文字识别.md)/English |

|

+| Chinese handwriting recognition | New glyph support | [Model Download](#2) | [中文](./手写文字识别.md)/English |  |

+

+

+

+### Manufacturing

+

+| Case | Highlights | Model Download | Tutorial | Example |

+| -------------- | ------------------------------ | -------------- | ------------------------------------------------------------ | ------------------------------------------------------------ |

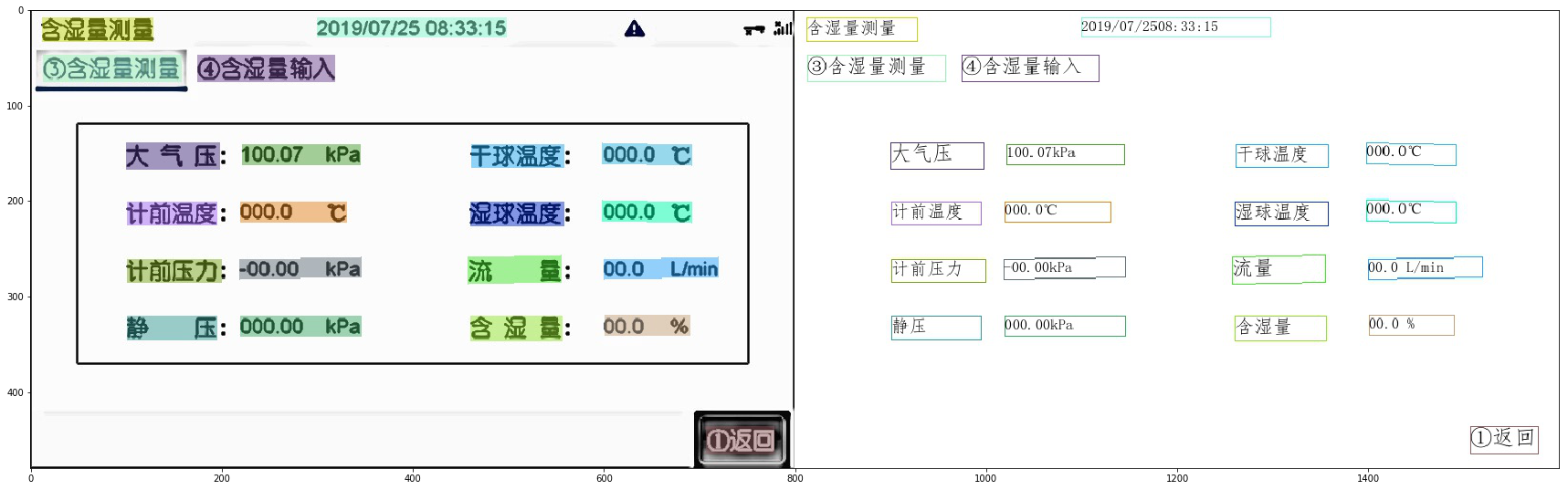

+| 数码管识别 | 数码管数据合成、漏识别调优 | [模型下载](#2) | [中文](./光功率计数码管字符识别/光功率计数码管字符识别.md)/English |

|

+

+

+

+### 制造

+

+| 类别 | 亮点 | 模型下载 | 教程 | 示例图 |

+| -------------- | ------------------------------ | -------------- | ------------------------------------------------------------ | ------------------------------------------------------------ |

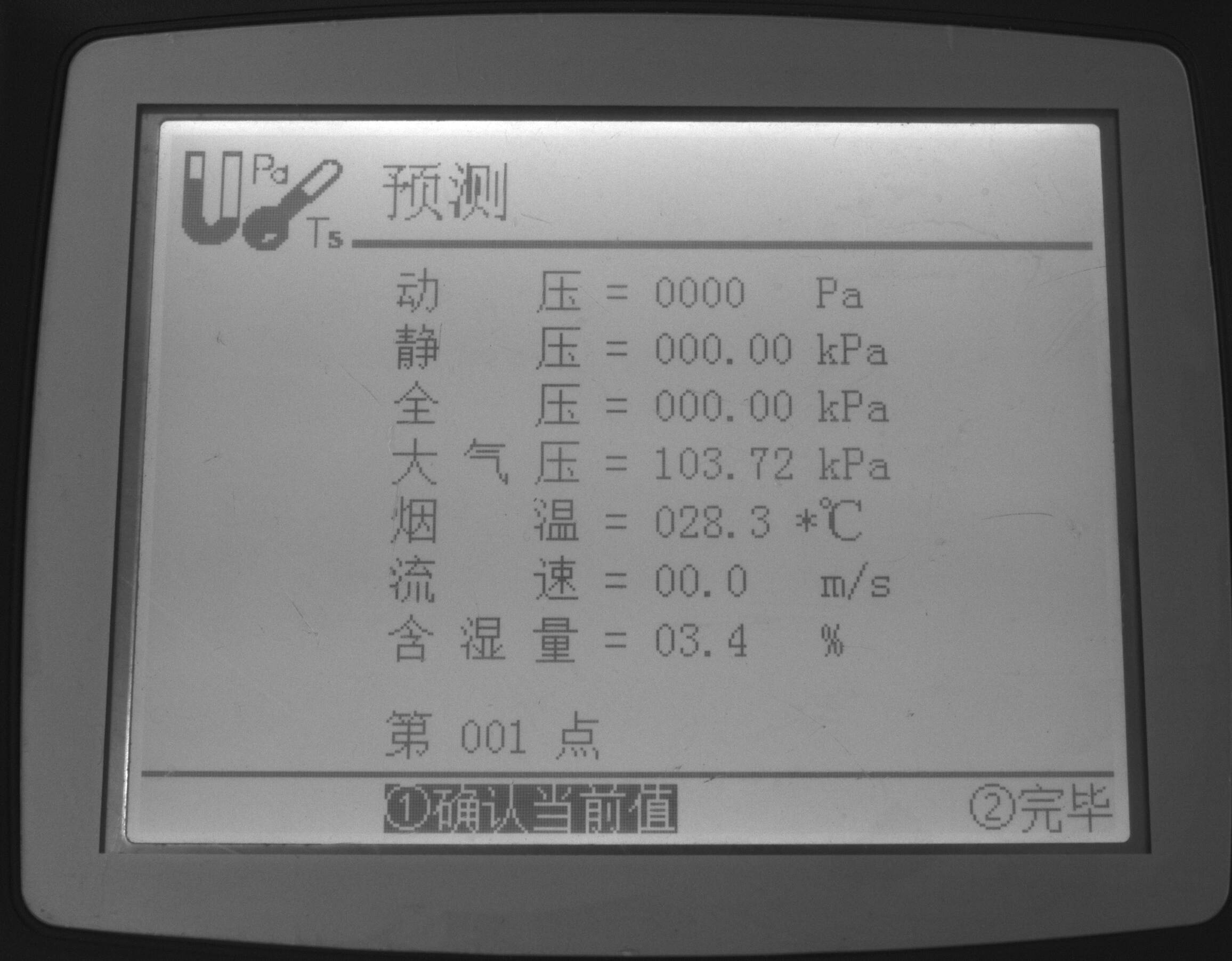

+| Digital tube recognition | Digital tube data synthesis, tuning for missed recognitions | [Model Download](#2) | [中文](./光功率计数码管字符识别/光功率计数码管字符识别.md)/English |  |

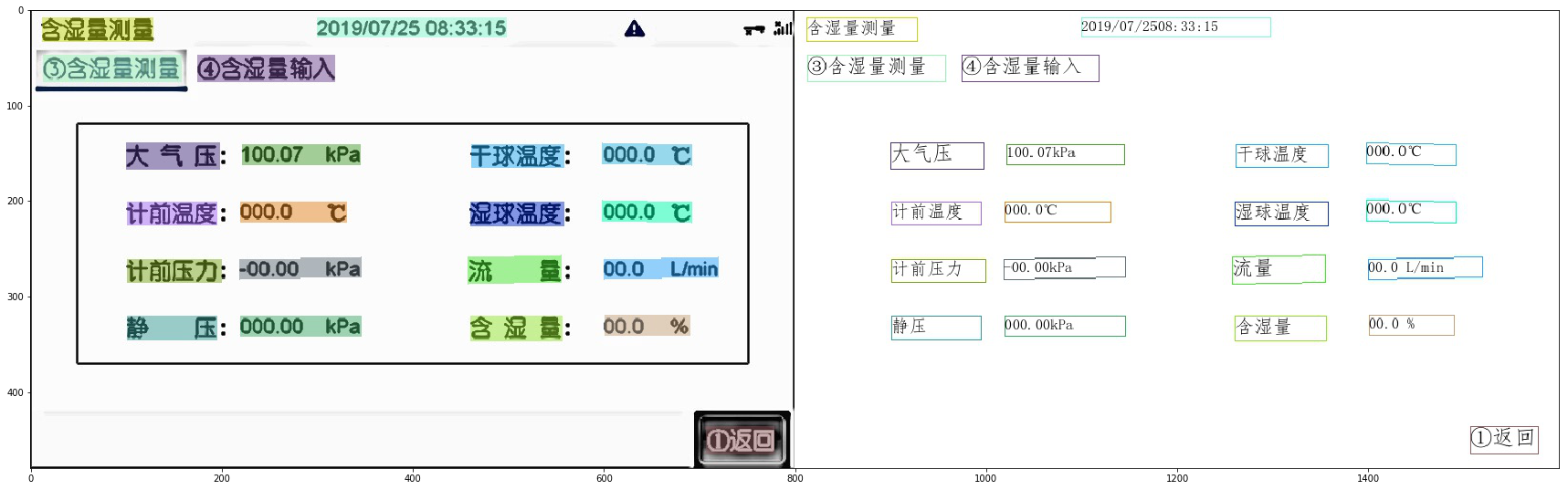

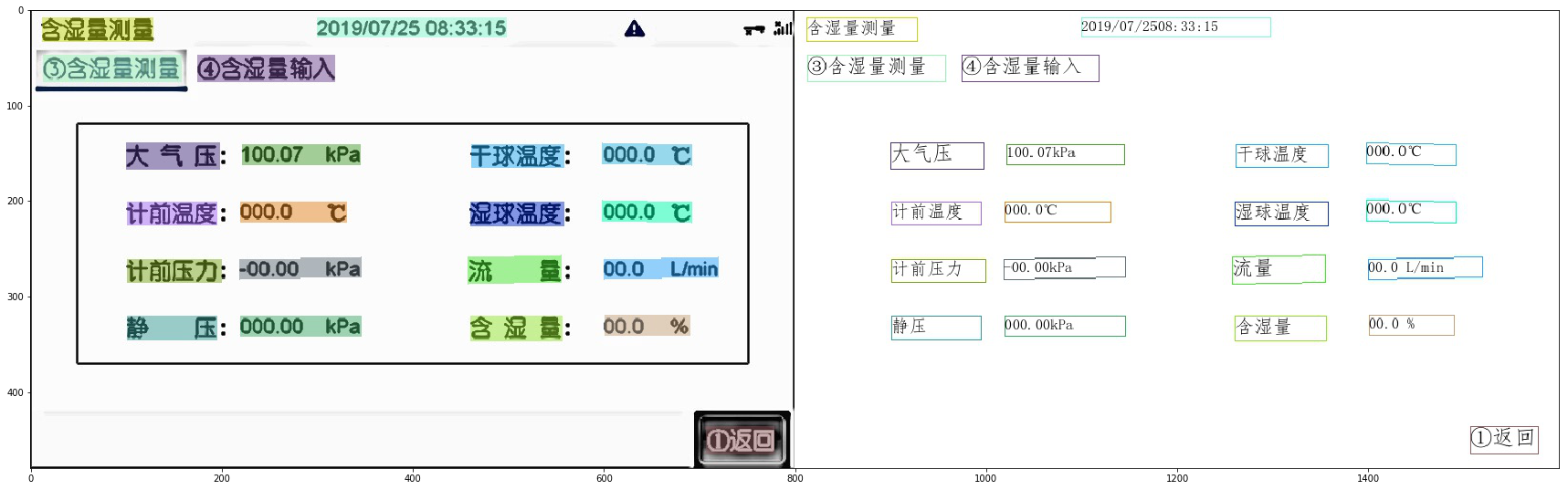

+| 液晶屏读数识别 | 检测模型蒸馏、Serving部署 | [模型下载](#2) | [中文](./液晶屏读数识别.md)/English |

|

+| LCD screen reading recognition | Detection model distillation, Serving deployment | [Model Download](#2) | [中文](./液晶屏读数识别.md)/English |  |

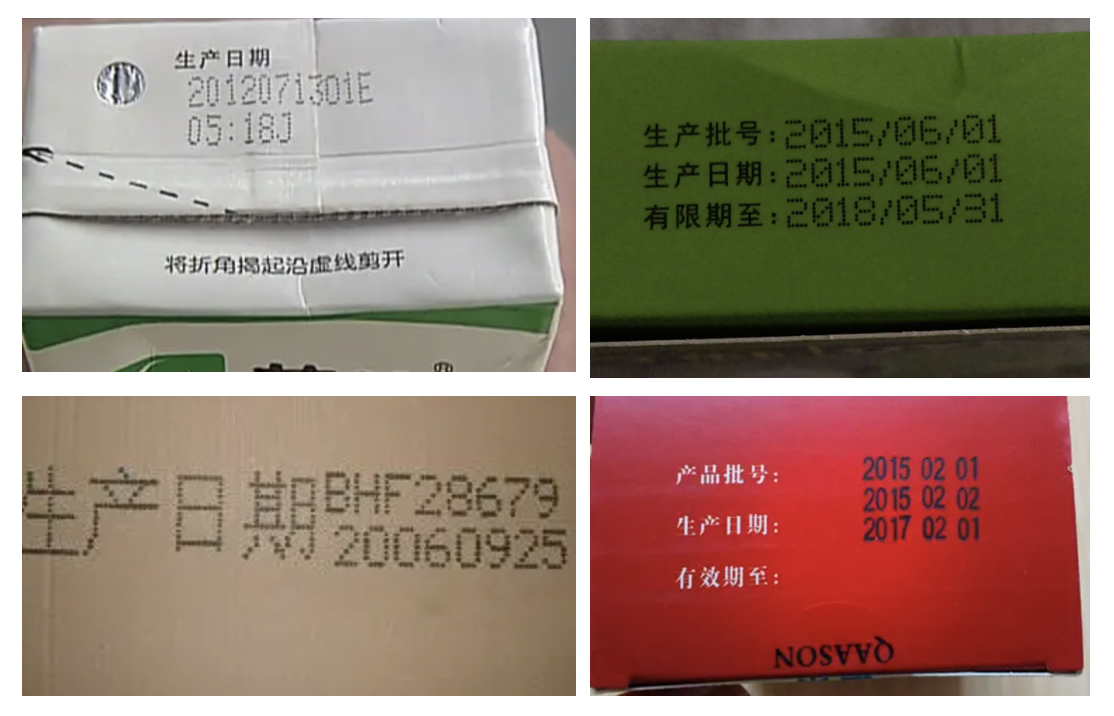

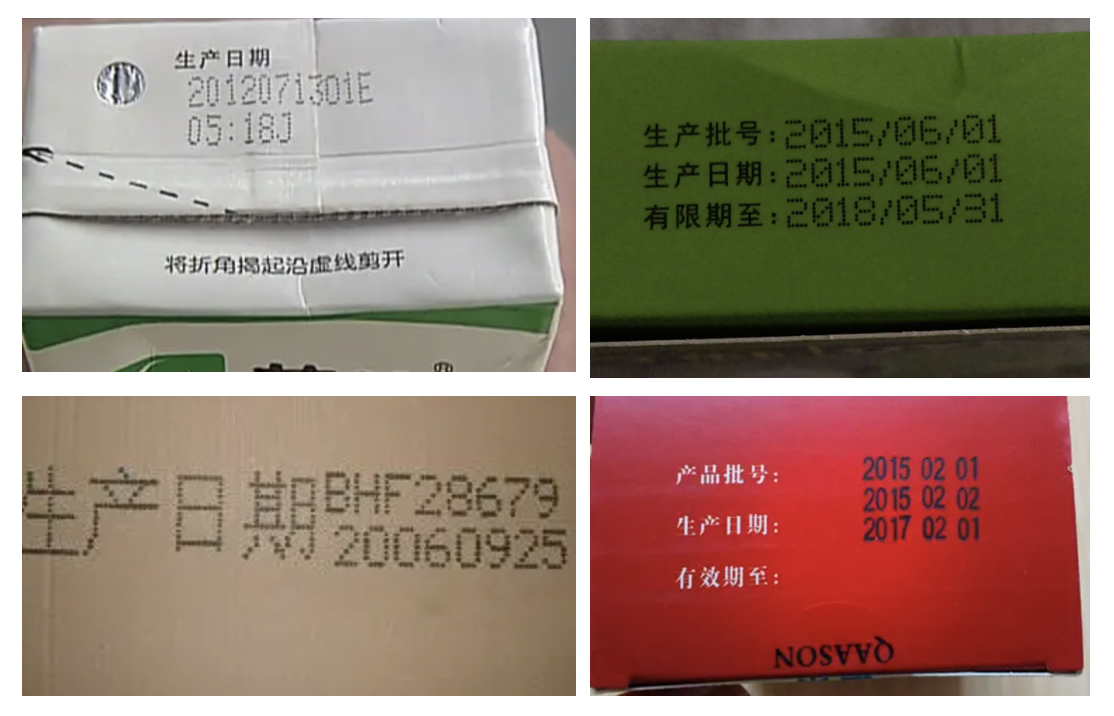

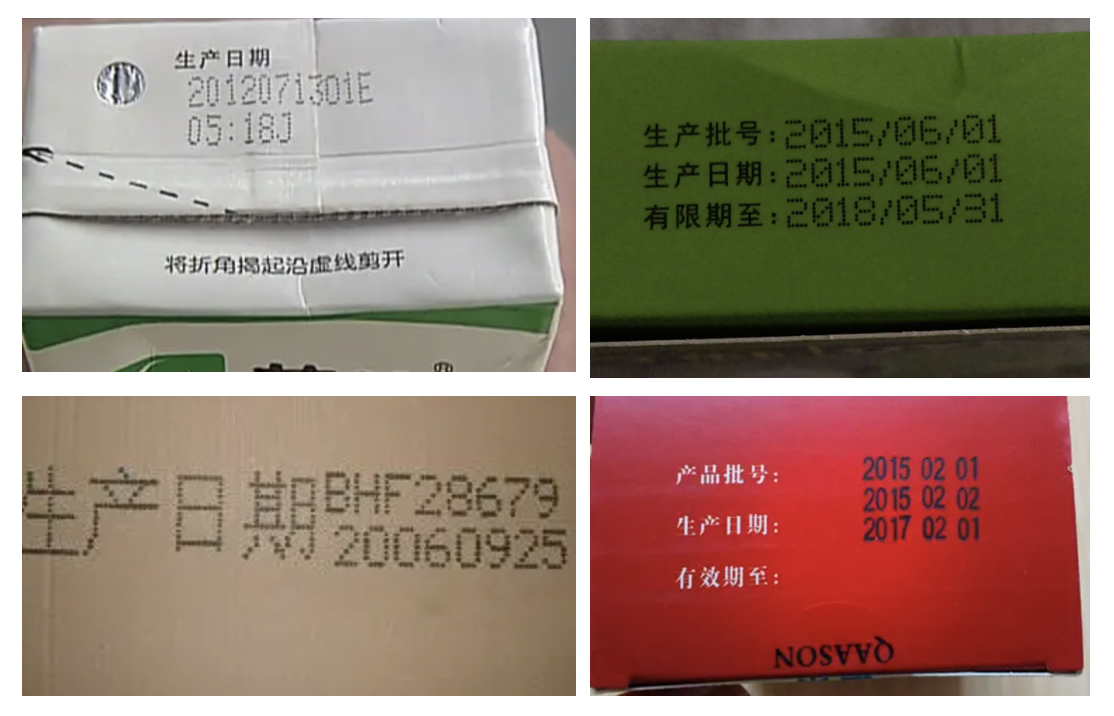

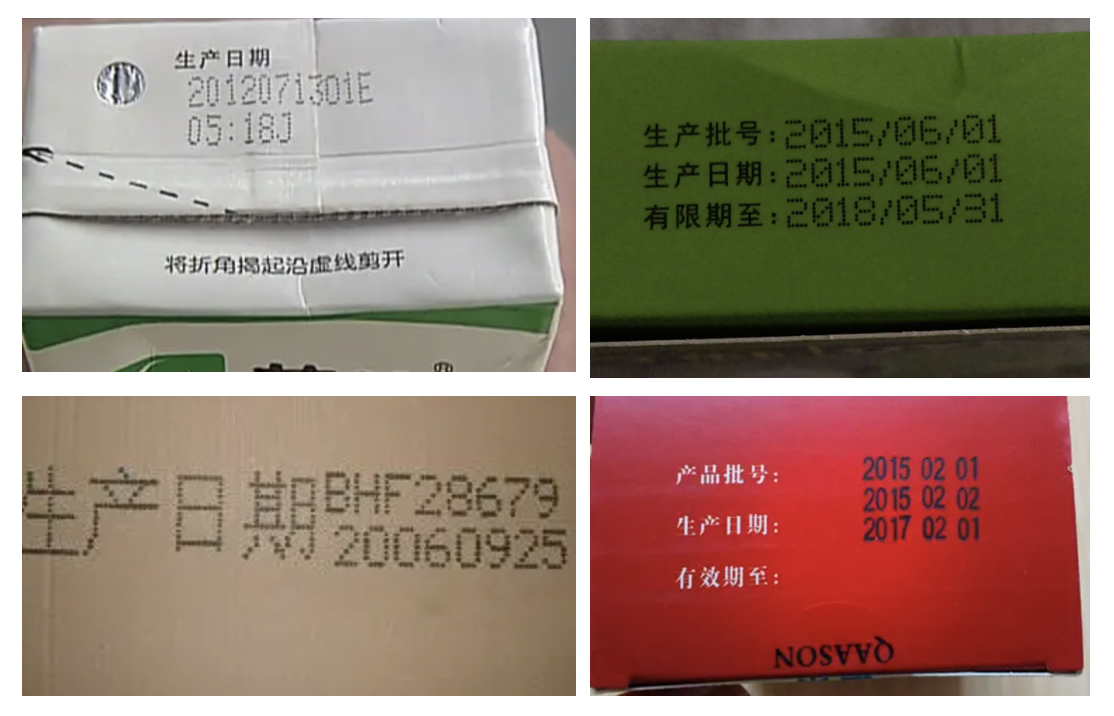

+| 包装生产日期 | 点阵字符合成、过曝过暗文字识别 | [模型下载](#2) | [中文](./包装生产日期识别.md)/English |

|

+| Packaging production date | Dot-matrix character synthesis, recognition of over- and under-exposed text | [Model Download](#2) | [中文](./包装生产日期识别.md)/English |  |

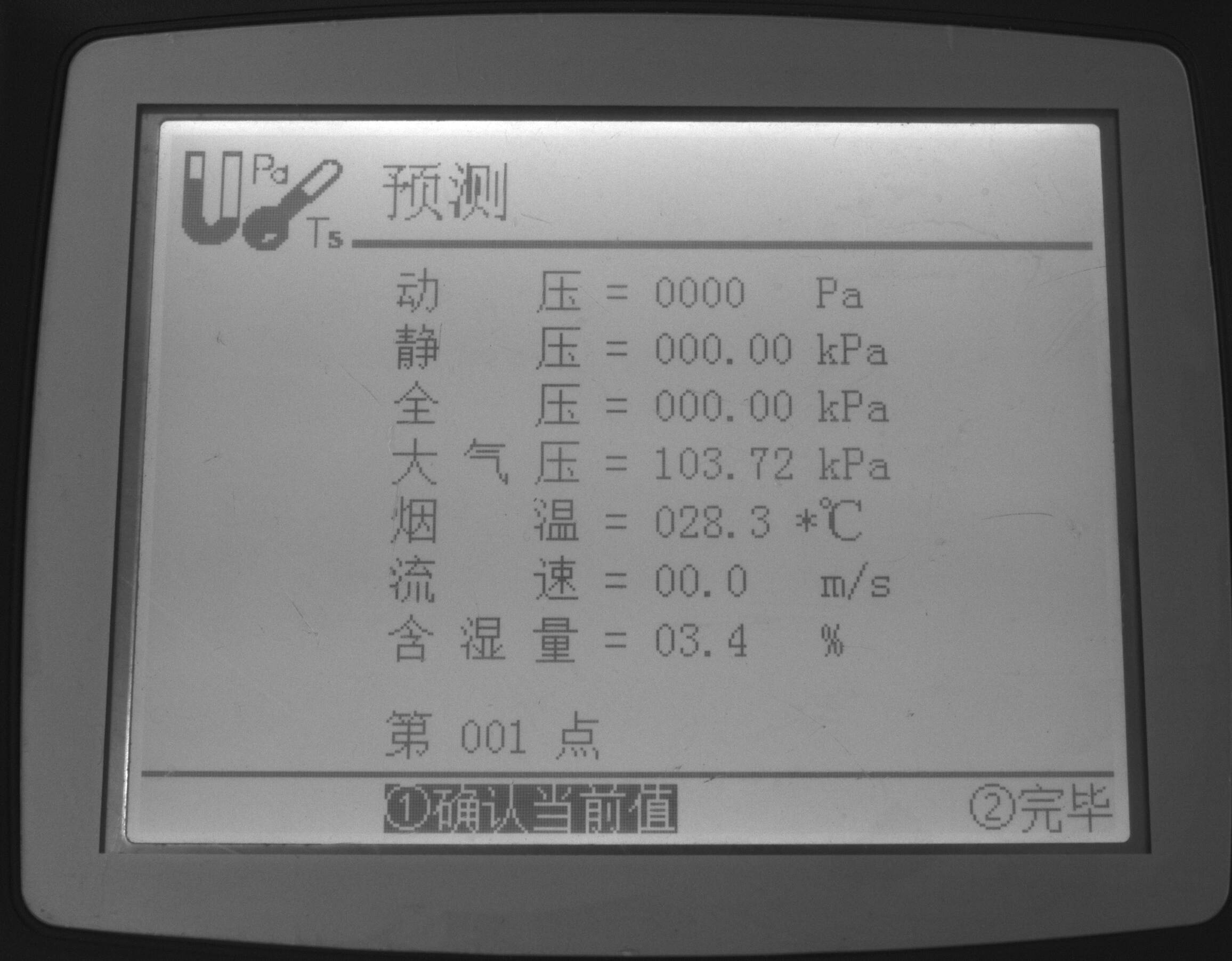

+| PCB文字识别 | 小尺寸文本检测与识别 | [模型下载](#2) | [中文](./PCB字符识别/PCB字符识别.md)/English |

|

+| PCB text recognition | Small-text detection and recognition | [Model Download](#2) | [中文](./PCB字符识别/PCB字符识别.md)/English |  |

+| Meter recognition | Detection tuning for high-resolution images | [Model Download](#2) | | |

+| LCD screen defect detection | Non-text character recognition | | | |

+

+

+

+### Finance

+

+| Case | Highlights | Model Download | Tutorial | Example |

+| -------------- | ----------------------------- | -------------- | ----------------------------------------- | ------------------------------------------------------------ |

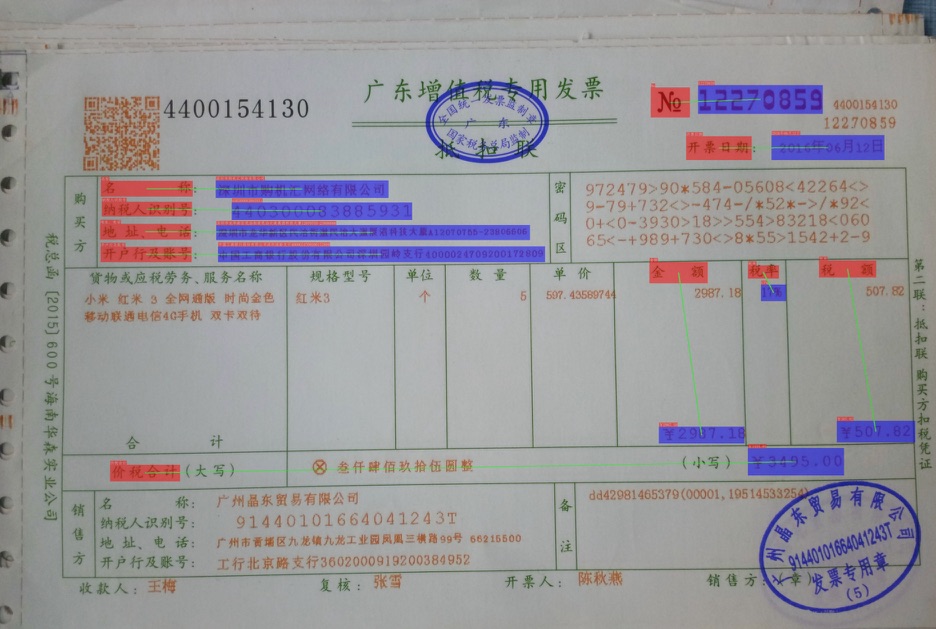

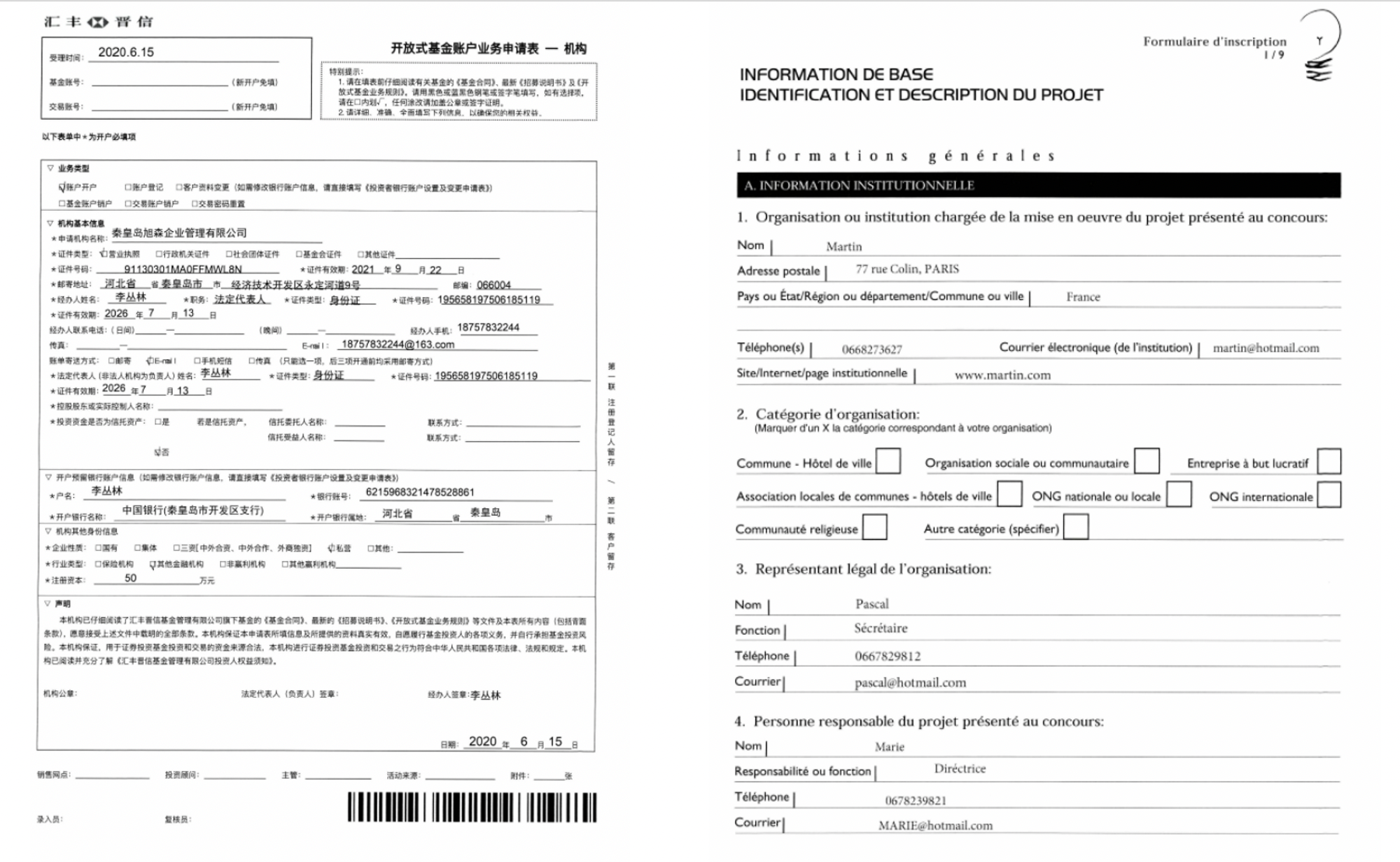

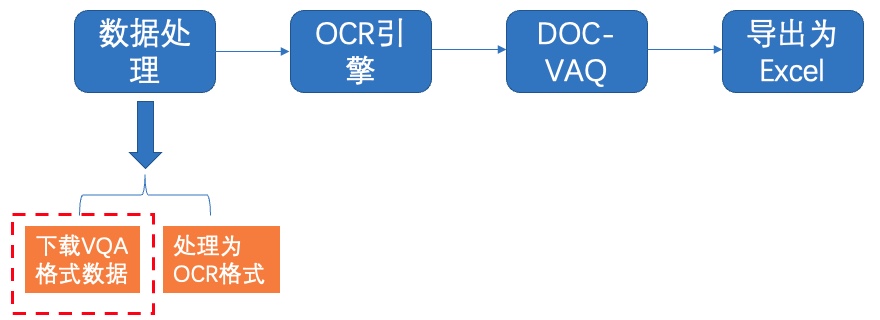

+| 表单VQA | 多模态通用表单结构化提取 | [模型下载](#2) | [中文](./多模态表单识别.md)/English |

|

+| 电表识别 | 大分辨率图像检测调优 | [模型下载](#2) | | |

+| 液晶屏缺陷检测 | 非文字字符识别 | | | |

+

+

+

+### 金融

+

+| 类别 | 亮点 | 模型下载 | 教程 | 示例图 |

+| -------------- | ----------------------------- | -------------- | ----------------------------------------- | ------------------------------------------------------------ |

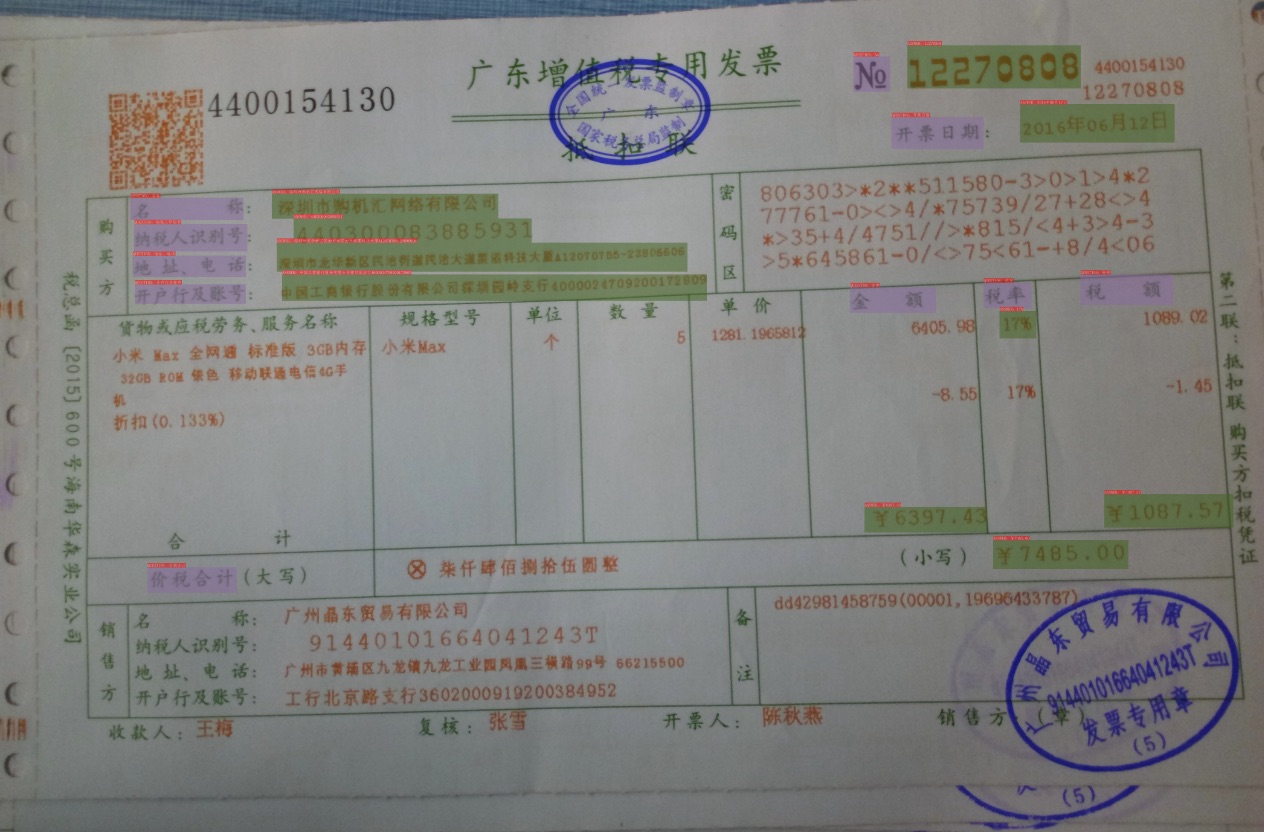

+| Form VQA | Multimodal structured extraction from general forms | [Model Download](#2) | [中文](./多模态表单识别.md)/English |  |

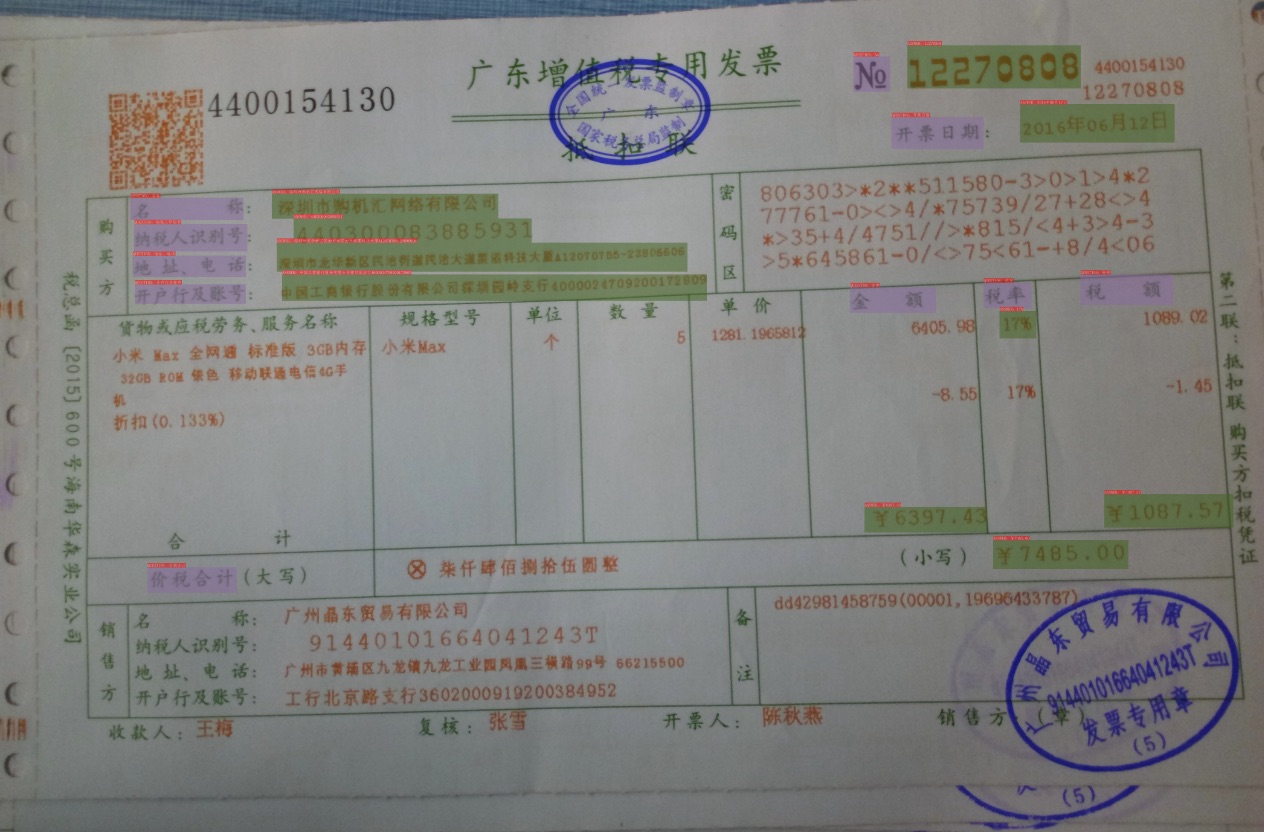

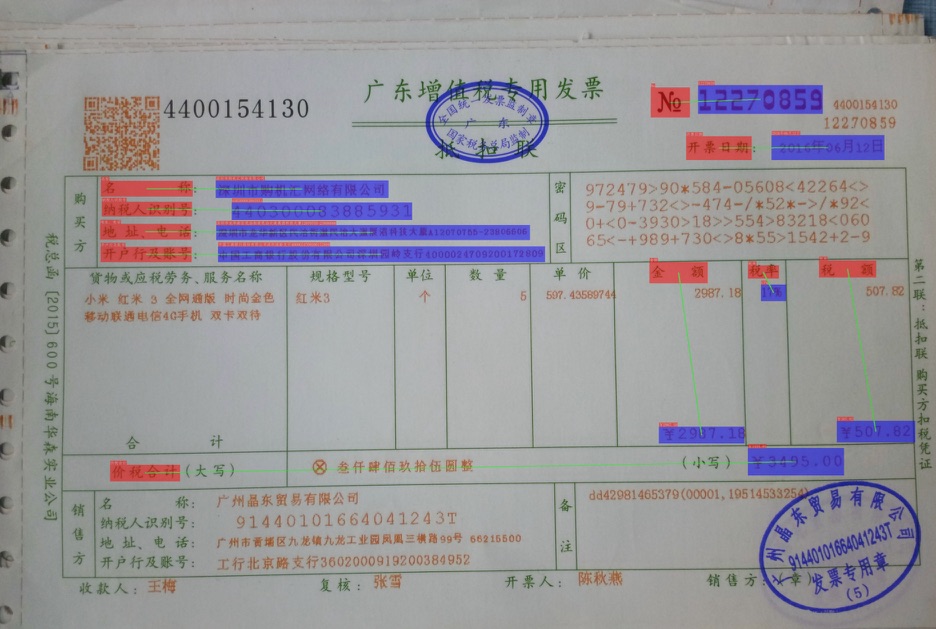

+| 增值税发票 | 关键信息抽取,SER、RE任务训练 | [模型下载](#2) | [中文](./发票关键信息抽取.md)/English |

|

+| VAT invoice | Key information extraction, SER and RE task training | [Model Download](#2) | [中文](./发票关键信息抽取.md)/English |  |

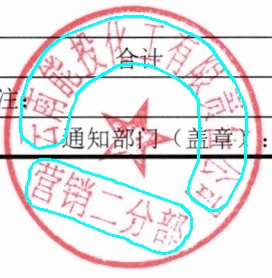

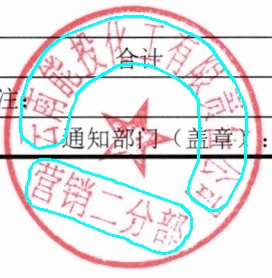

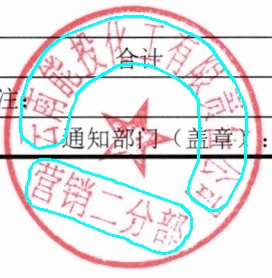

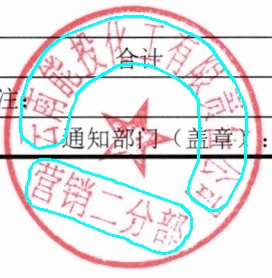

+| 印章检测与识别 | 端到端弯曲文本识别 | [模型下载](#2) | [中文](./印章弯曲文字识别.md)/English |

|

+| Seal detection and recognition | End-to-end curved text recognition | [Model Download](#2) | [中文](./印章弯曲文字识别.md)/English |  |

+| General card/certificate recognition | General structured extraction | [Model Download](#2) | [中文](./快速构建卡证类OCR.md)/English |  |

+| ID card recognition | Structured extraction, image shadows | | | |

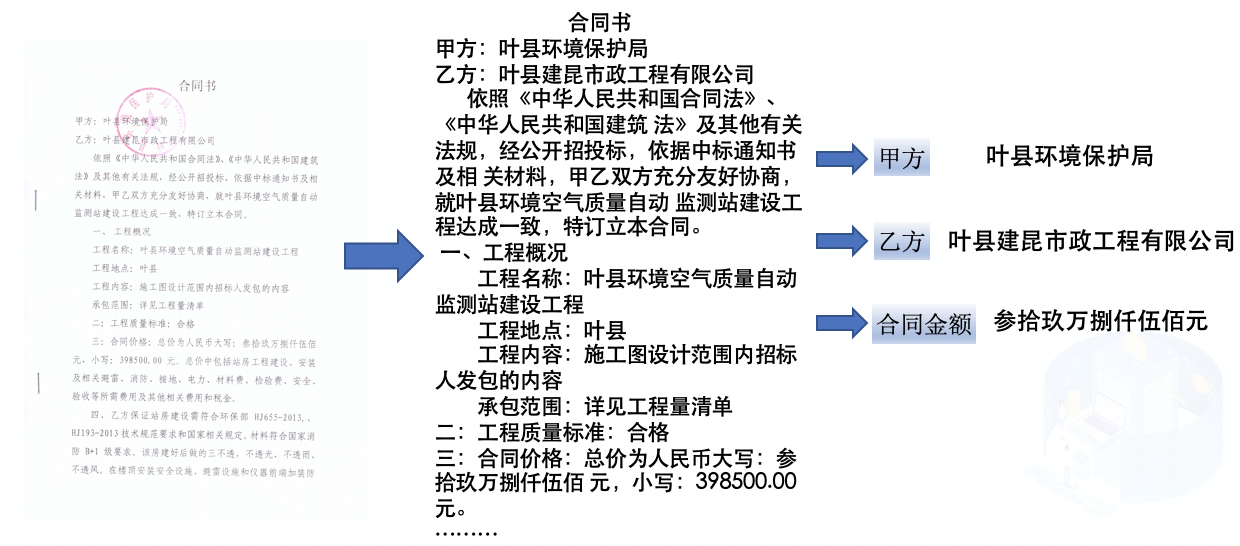

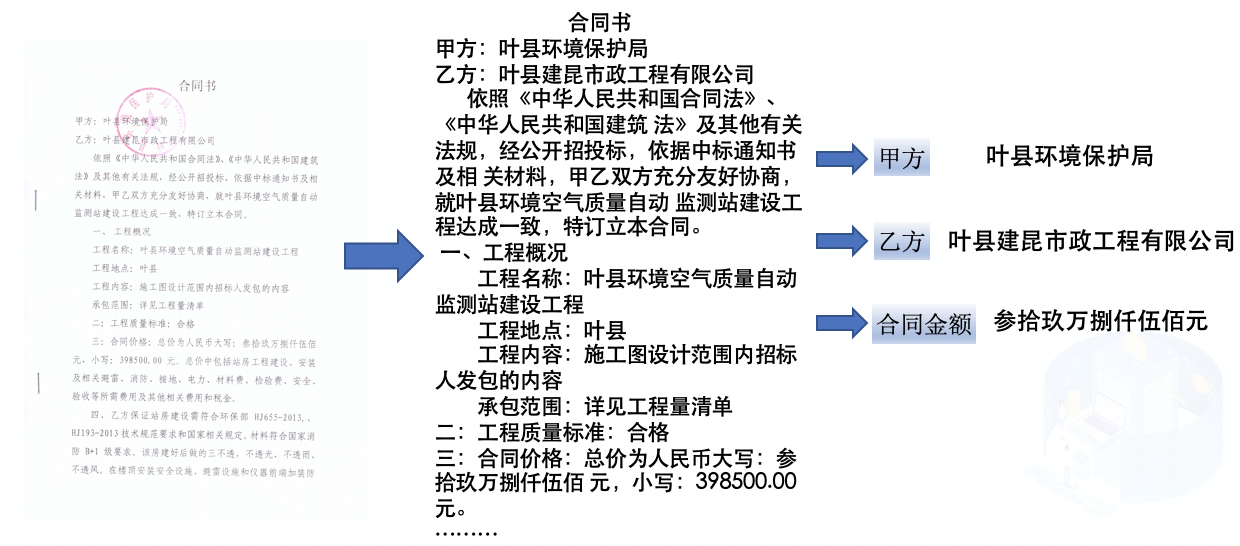

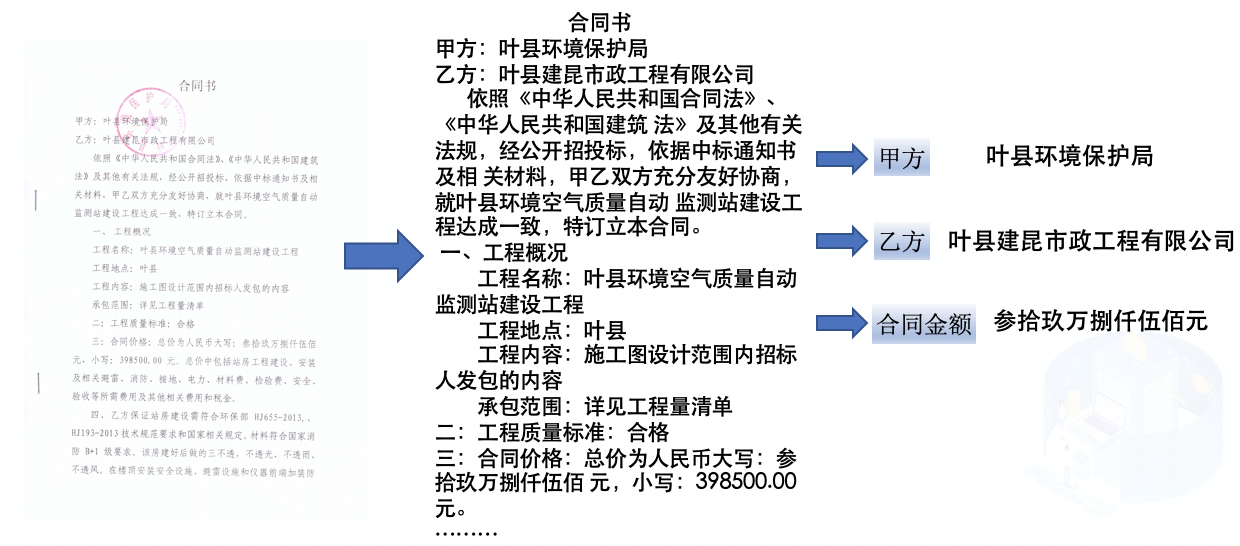

+| 合同比对 | 密集文本检测、NLP关键信息抽取 | [模型下载](#2) | [中文](./扫描合同关键信息提取.md)/English |

|

+| 身份证识别 | 结构化提取、图像阴影 | | | |

+| Contract comparison | Dense text detection, NLP key information extraction | [Model Download](#2) | [中文](./扫描合同关键信息提取.md)/English |  |

+

+

+

+### Transportation

+

+| Case | Highlights | Model Download | Tutorial | Example |

+| ----------------- | ------------------------------ | -------------- | ----------------------------------- | ------------------------------------------------------------ |

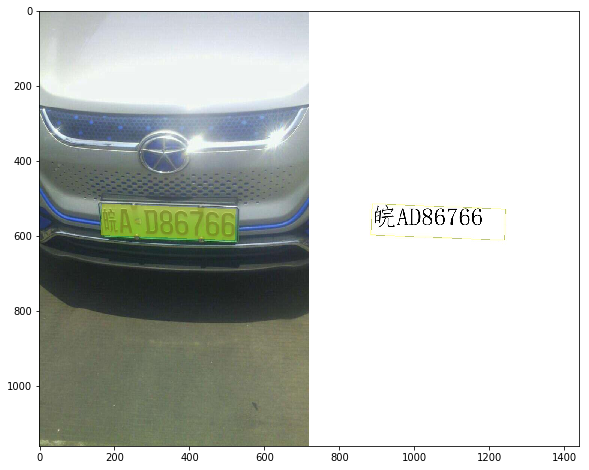

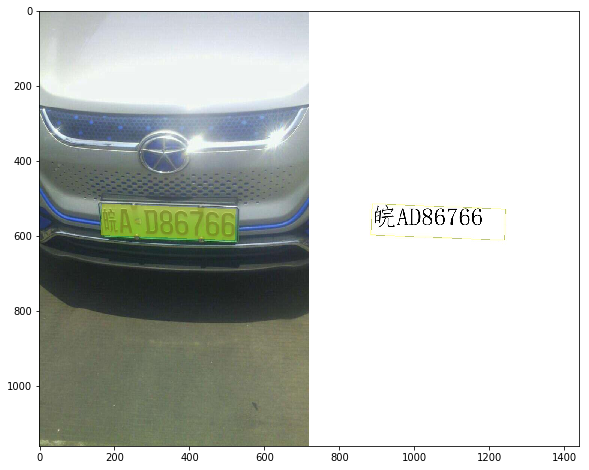

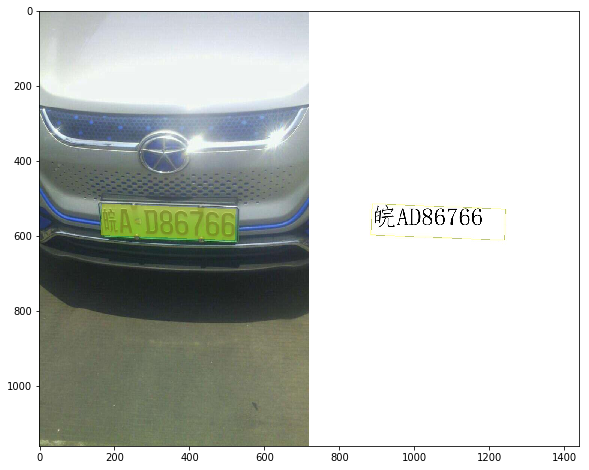

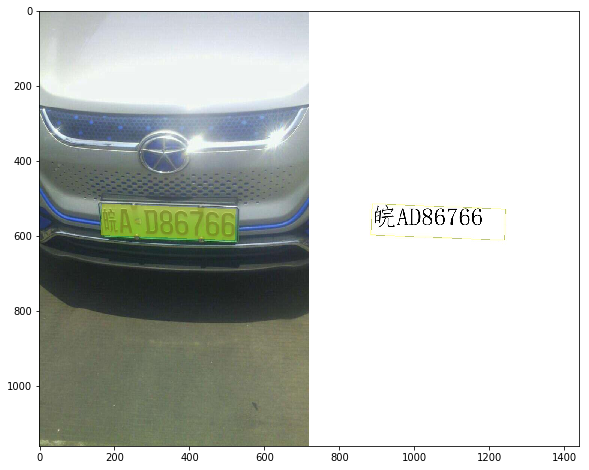

+| 车牌识别 | 多角度图像、轻量模型、端侧部署 | [模型下载](#2) | [中文](./轻量级车牌识别.md)/English |

|

+

+

+

+### 交通

+

+| 类别 | 亮点 | 模型下载 | 教程 | 示例图 |

+| ----------------- | ------------------------------ | -------------- | ----------------------------------- | ------------------------------------------------------------ |

+| License plate recognition | Multi-angle images, lightweight model, edge deployment | [Model Download](#2) | [中文](./轻量级车牌识别.md)/English |  |

+| Driver's license/vehicle license recognition | Coming soon | | | |

+| Express waybill recognition | Coming soon | | | |

+

+

+

+## Model Download

+

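+To download the trained vertical-domain models for the scenarios above, scan the QR code below, follow the official account, and fill in the questionnaire. You can then join the official PaddleOCR group to get a 20 GB OCR learning package, including the *Dive into OCR* e-book, course recordings, recent papers, and other materials.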

+

+

+

+

+

+

diff --git a/applications/README_en.md b/applications/README_en.md

new file mode 100644

index 0000000..df18465

--- /dev/null

+++ b/applications/README_en.md

@@ -0,0 +1,79 @@

+English | [简体中文](README.md)

+

+# Application

+

+PaddleOCR scenario applications cover the main vertical OCR use cases in the general, manufacturing, finance, and transportation industries. Building on the general capabilities of PP-OCR and PP-Structure, they use notebooks to demonstrate fine-tuning on scenario data, model optimization, data augmentation, and more. The goal is to give developers examples and inspiration for putting OCR applications into production quickly.

+

+- [Tutorial](#1)

+    - [General](#11)
+    - [Manufacturing](#12)
+    - [Finance](#13)
+    - [Transportation](#14)

+

+- [Model Download](#2)

+

+

+

+## Tutorial

+

+

+

+### General

+

+| Case | Feature | Model Download | Tutorial | Example |

+| ---------------------------------------------- | ---------------- | -------------------- | --------------------------------------- | ------------------------------------------------------------ |

+| High-precision Chinese recognition model SVTR | New model | [Model Download](#2) | [中文](./高精度中文识别模型.md)/English |  |

+| Chinese handwriting recognition | New font support | [Model Download](#2) | [中文](./手写文字识别.md)/English |  |

+

+

+

+### Manufacturing

+

+| Case | Feature | Model Download | Tutorial | Example |

+| ------------------------------ | ------------------------------------------------------------ | -------------------- | ------------------------------------------------------------ | ------------------------------------------------------------ |

+| Digital tube | Digital tube data synthesis, recognition model fine-tuning | [Model Download](#2) | [中文](./光功率计数码管字符识别/光功率计数码管字符识别.md)/English |  |

+| LCD screen | Detection model distillation, serving deployment | [Model Download](#2) | [中文](./液晶屏读数识别.md)/English |  |

+| Packaging production date | Dot-matrix character synthesis, recognition of overexposed and underexposed text | [Model Download](#2) | [中文](./包装生产日期识别.md)/English |  |

+| PCB text recognition | Small size text detection and recognition | [Model Download](#2) | [中文](./PCB字符识别/PCB字符识别.md)/English |  |

+| Meter text recognition | High-resolution image detection fine-tuning | [Model Download](#2) | | |

+| LCD character defect detection | Non-text character recognition | | | |

+

+

+

+### Finance

+

+| Case | Feature | Model Download | Tutorial | Example |

+| ----------------------------------- | -------------------------------------------------- | -------------------- | ----------------------------------------- | ------------------------------------------------------------ |

+| Form visual question and answer | Multimodal general form structured extraction | [Model Download](#2) | [中文](./多模态表单识别.md)/English |  |

+| VAT invoice | Key information extraction, SER, RE task fine-tune | [Model Download](#2) | [中文](./发票关键信息抽取.md)/English |  |

+| Seal detection and recognition | End-to-end curved text recognition | [Model Download](#2) | [中文](./印章弯曲文字识别.md)/English |  |

+| Universal card recognition | Universal structured extraction | [Model Download](#2) | [中文](./快速构建卡证类OCR.md)/English |  |

+| ID card recognition | Structured extraction, image shading | | | |

+| Contract key information extraction | Dense text detection, NLP key information extraction | [Model Download](#2) | [中文](./扫描合同关键信息提取.md)/English |  |

+

+

+

+### Transportation

+

+| Case | Feature | Model Download | Tutorial | Example |

+| ----------------------------------------------- | ------------------------------------------------------------ | -------------------- | ----------------------------------- | ------------------------------------------------------------ |

+| License plate recognition | Multi-angle images, lightweight models, edge-side deployment | [Model Download](#2) | [中文](./轻量级车牌识别.md)/English |  |

+| Driver's license/vehicle license recognition | Coming soon | | | |

+| Express waybill recognition | Coming soon | | | |

+

+

+

+## Model Download

+

+- For international developers: we are working on a way to download these trained models. The tutorials are currently in Chinese only. If you are fluent in both Chinese and English, or are willing to help polish the English documents, please let us know in [Discussions](https://github.com/PaddlePaddle/PaddleOCR/discussions).

+- For Chinese developers: to download the trained application models for the scenarios above, scan the QR code below with WeChat, follow the PaddlePaddle official account, and fill in the questionnaire. Then join the official PaddleOCR group to get 20 GB of OCR learning materials, including the "Dive into OCR" e-book, course videos, application models, and other materials.

+

+

+

+

+

+

+

diff --git "a/applications/\344\270\255\346\226\207\350\241\250\346\240\274\350\257\206\345\210\253.md" "b/applications/\344\270\255\346\226\207\350\241\250\346\240\274\350\257\206\345\210\253.md"

new file mode 100644

index 0000000..d61514f

--- /dev/null

+++ "b/applications/\344\270\255\346\226\207\350\241\250\346\240\274\350\257\206\345\210\253.md"

@@ -0,0 +1,472 @@

+# 智能运营:通用中文表格识别

+

+- [1. 背景介绍](#1-背景介绍)

+- [2. 中文表格识别](#2-中文表格识别)

+- [2.1 环境准备](#21-环境准备)

+- [2.2 准备数据集](#22-准备数据集)

+ - [2.2.1 划分训练测试集](#221-划分训练测试集)

+ - [2.2.2 查看数据集](#222-查看数据集)

+- [2.3 训练](#23-训练)

+- [2.4 验证](#24-验证)

+- [2.5 训练引擎推理](#25-训练引擎推理)

+- [2.6 模型导出](#26-模型导出)

+- [2.7 预测引擎推理](#27-预测引擎推理)

+- [2.8 表格识别](#28-表格识别)

+- [3. 表格属性识别](#3-表格属性识别)

+- [3.1 代码、环境、数据准备](#31-代码环境数据准备)

+ - [3.1.1 代码准备](#311-代码准备)

+ - [3.1.2 环境准备](#312-环境准备)

+ - [3.1.3 数据准备](#313-数据准备)

+- [3.2 表格属性识别训练](#32-表格属性识别训练)

+- [3.3 表格属性识别推理和部署](#33-表格属性识别推理和部署)

+ - [3.3.1 模型转换](#331-模型转换)

+ - [3.3.2 模型推理](#332-模型推理)

+

+## 1. 背景介绍

+

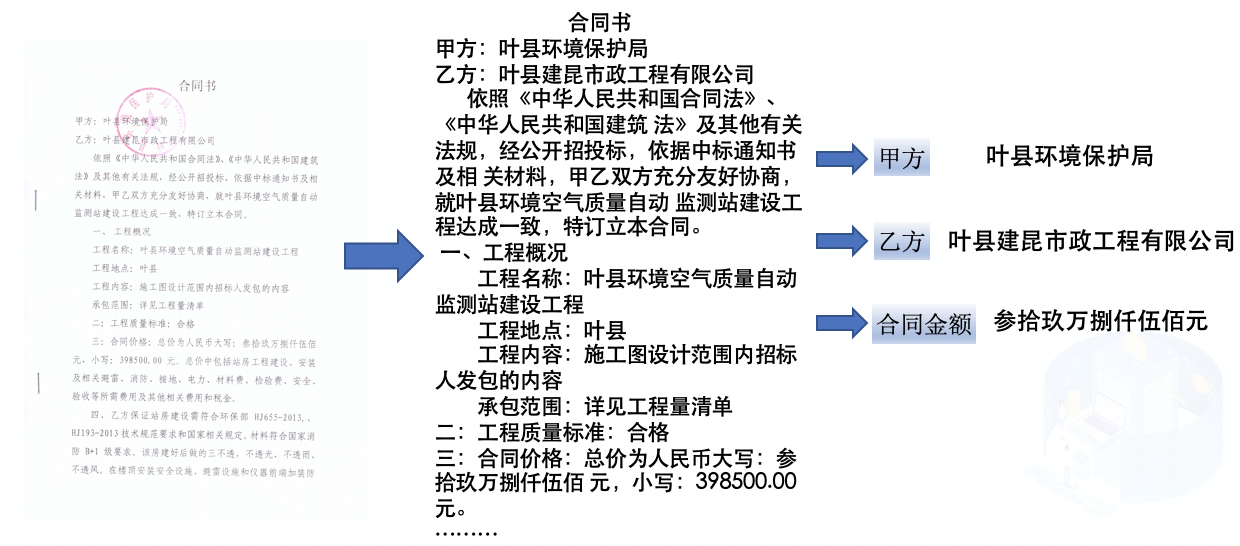

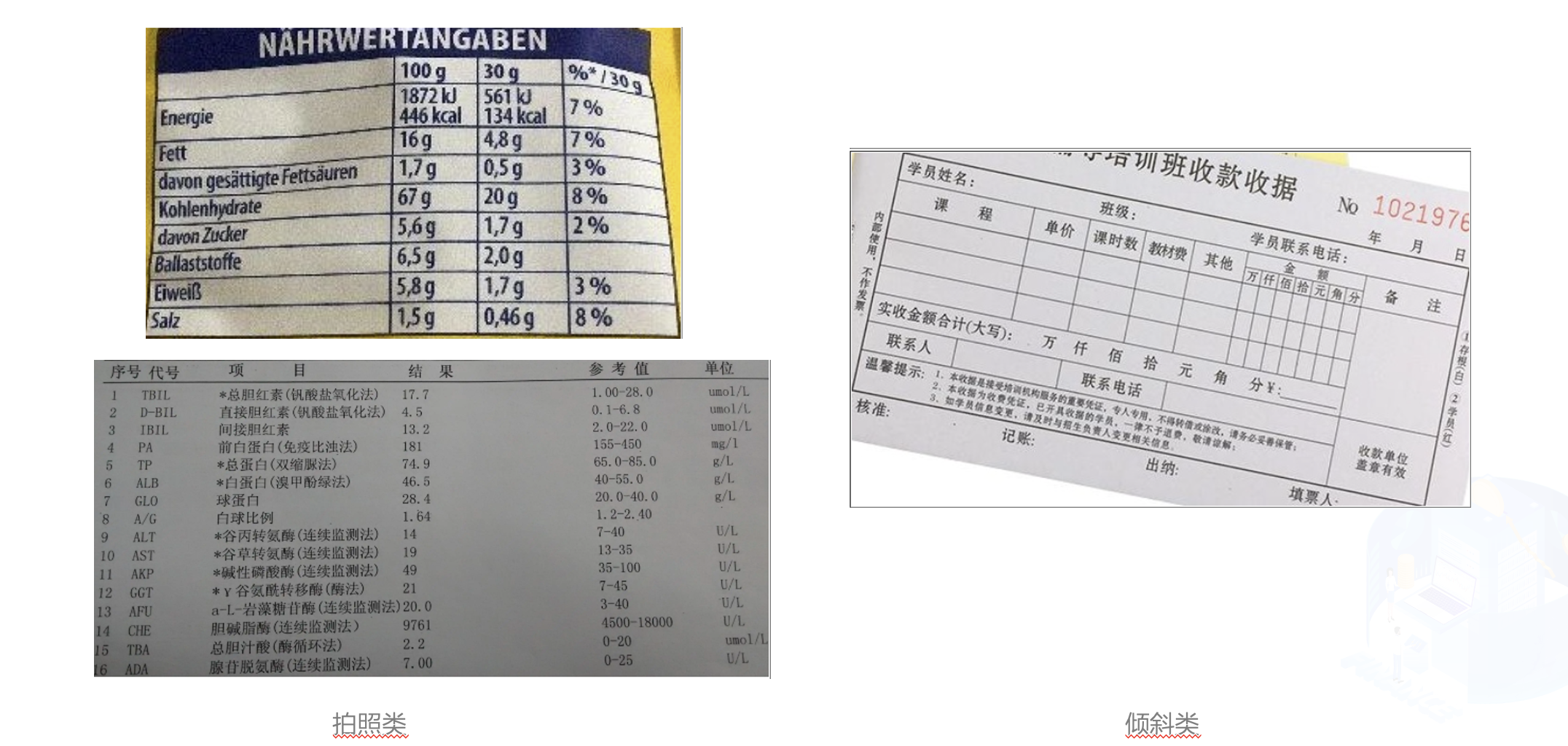

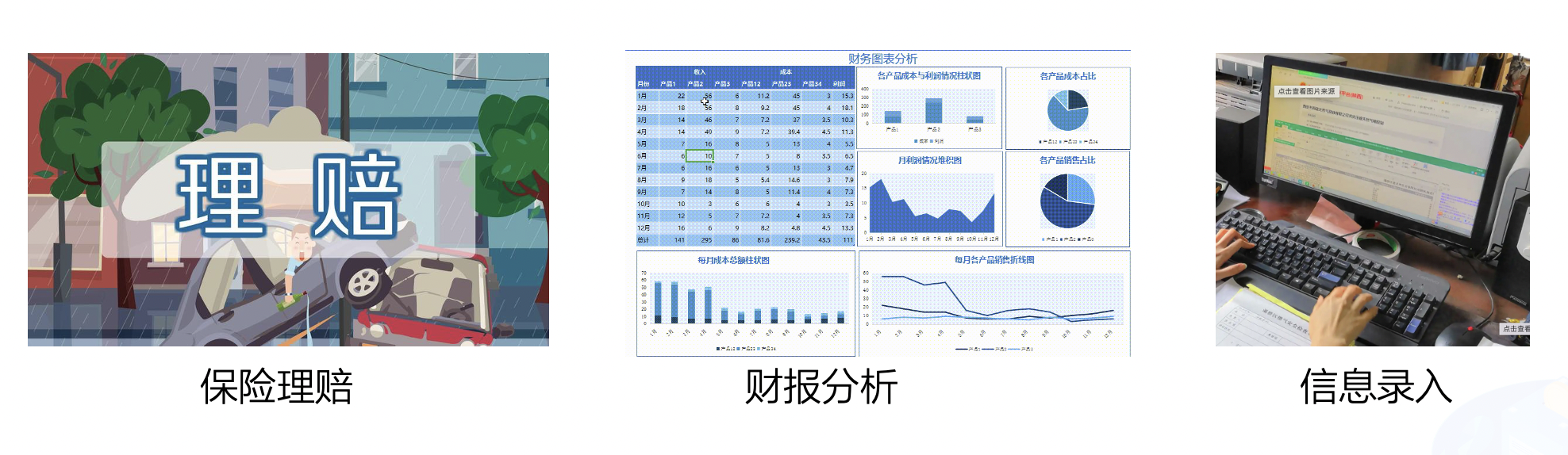

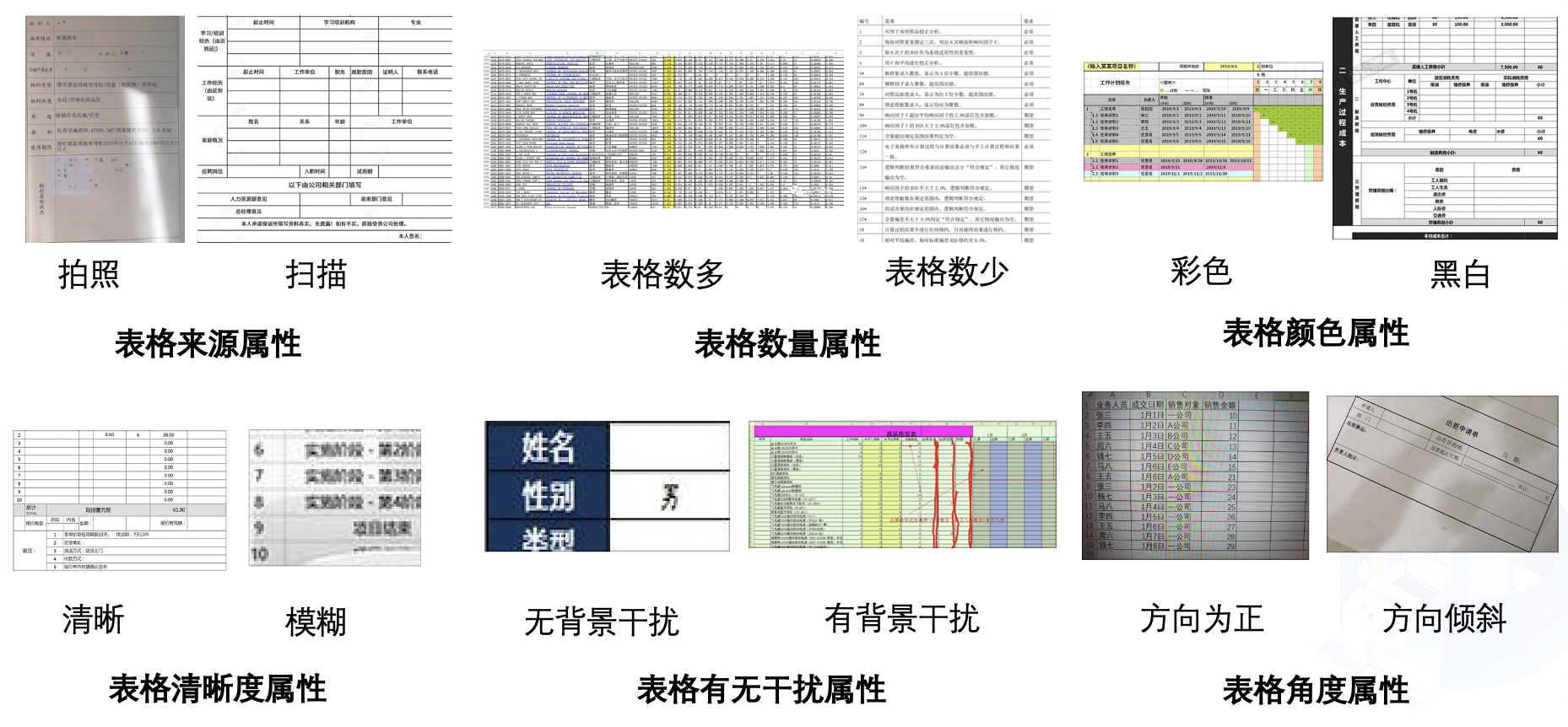

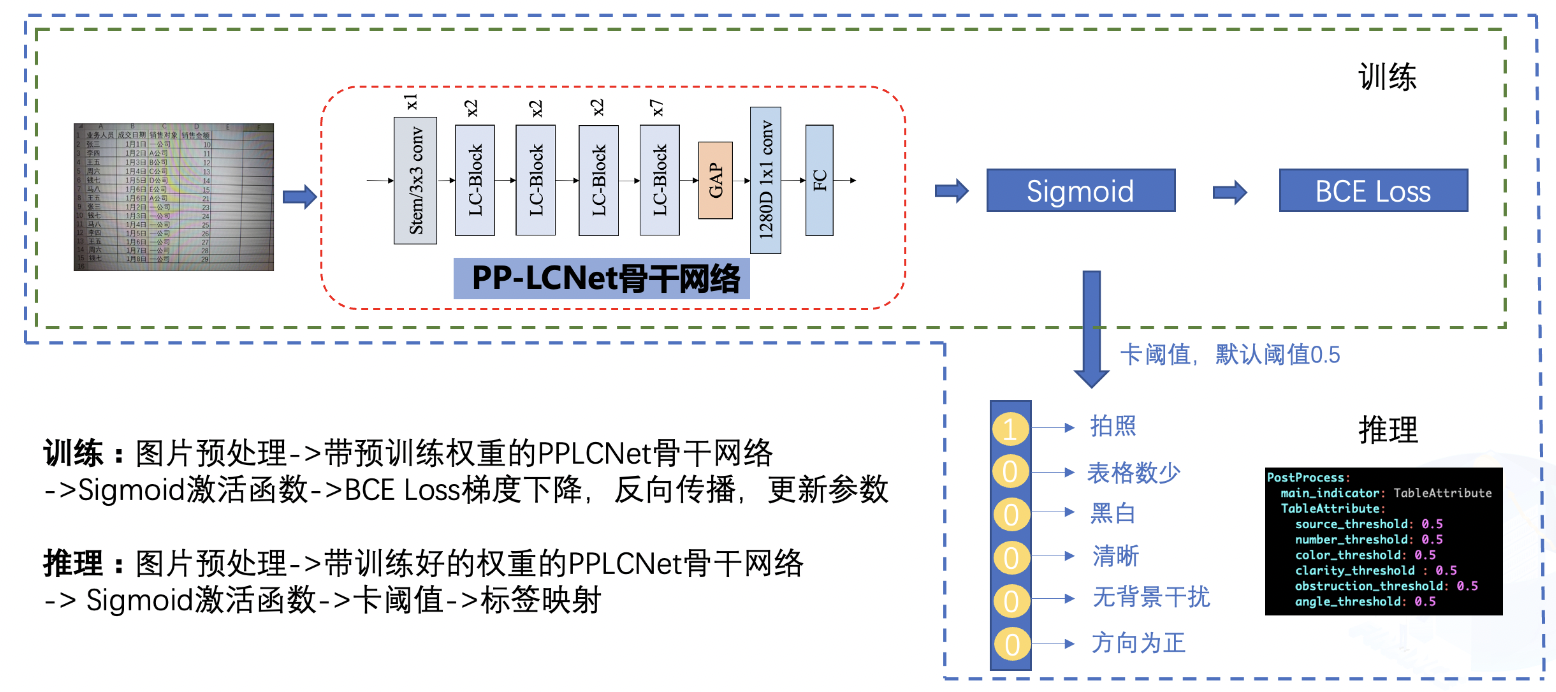

+中文表格识别在金融行业有着广泛的应用,如保险理赔、财报分析和信息录入等领域。当前,金融行业的表格识别主要以手动录入为主,开发一种自动表格识别成为丞待解决的问题。

+

+

+

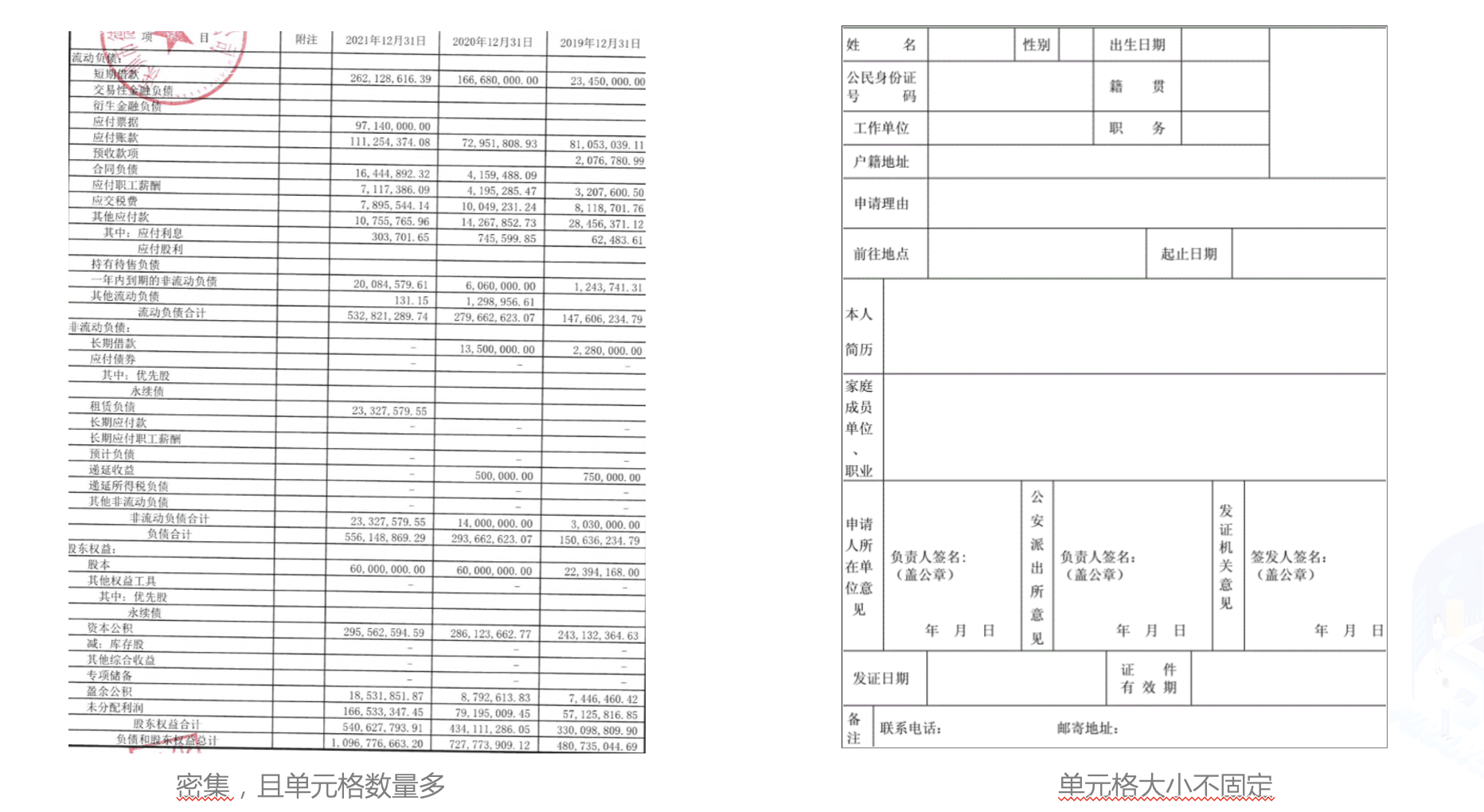

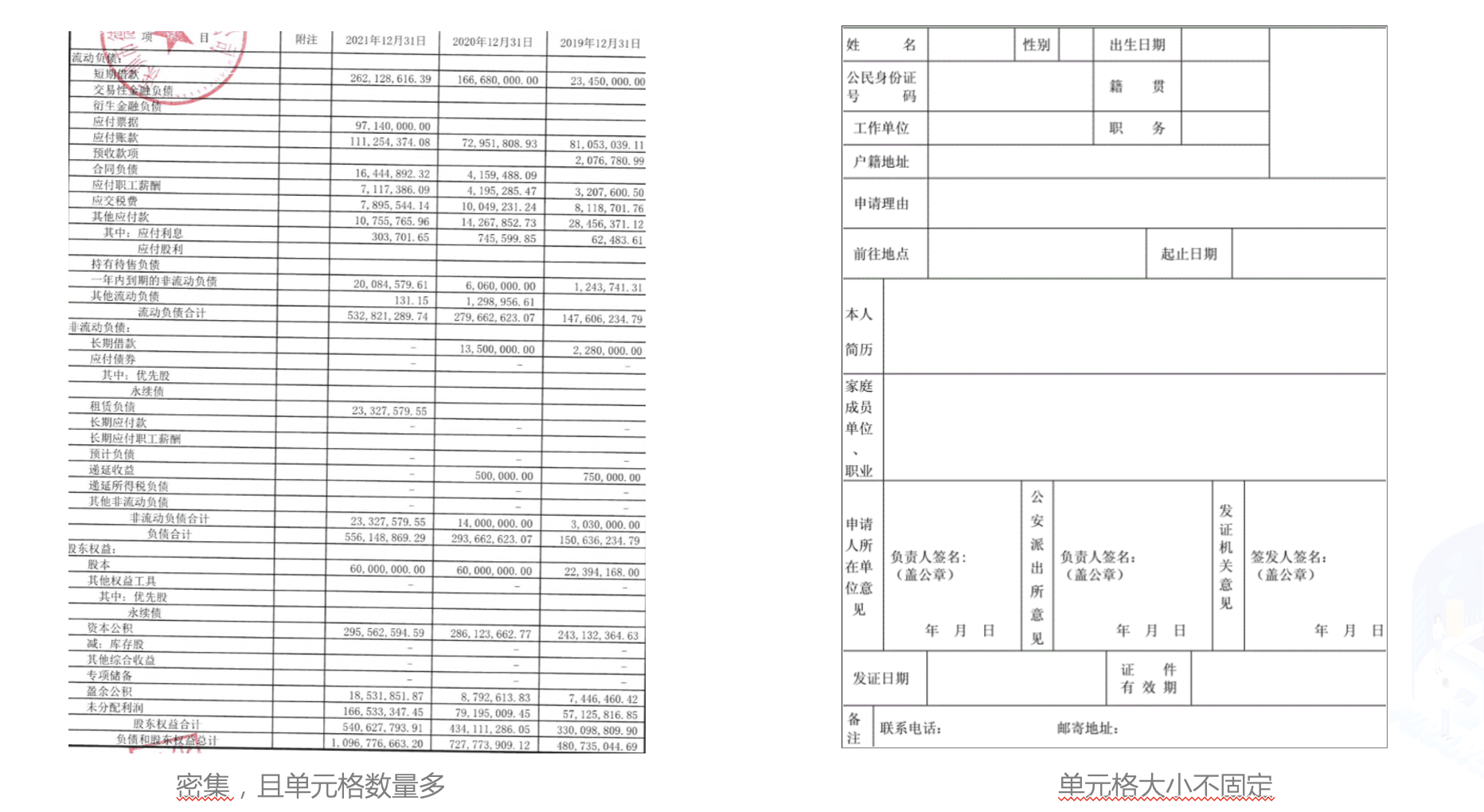

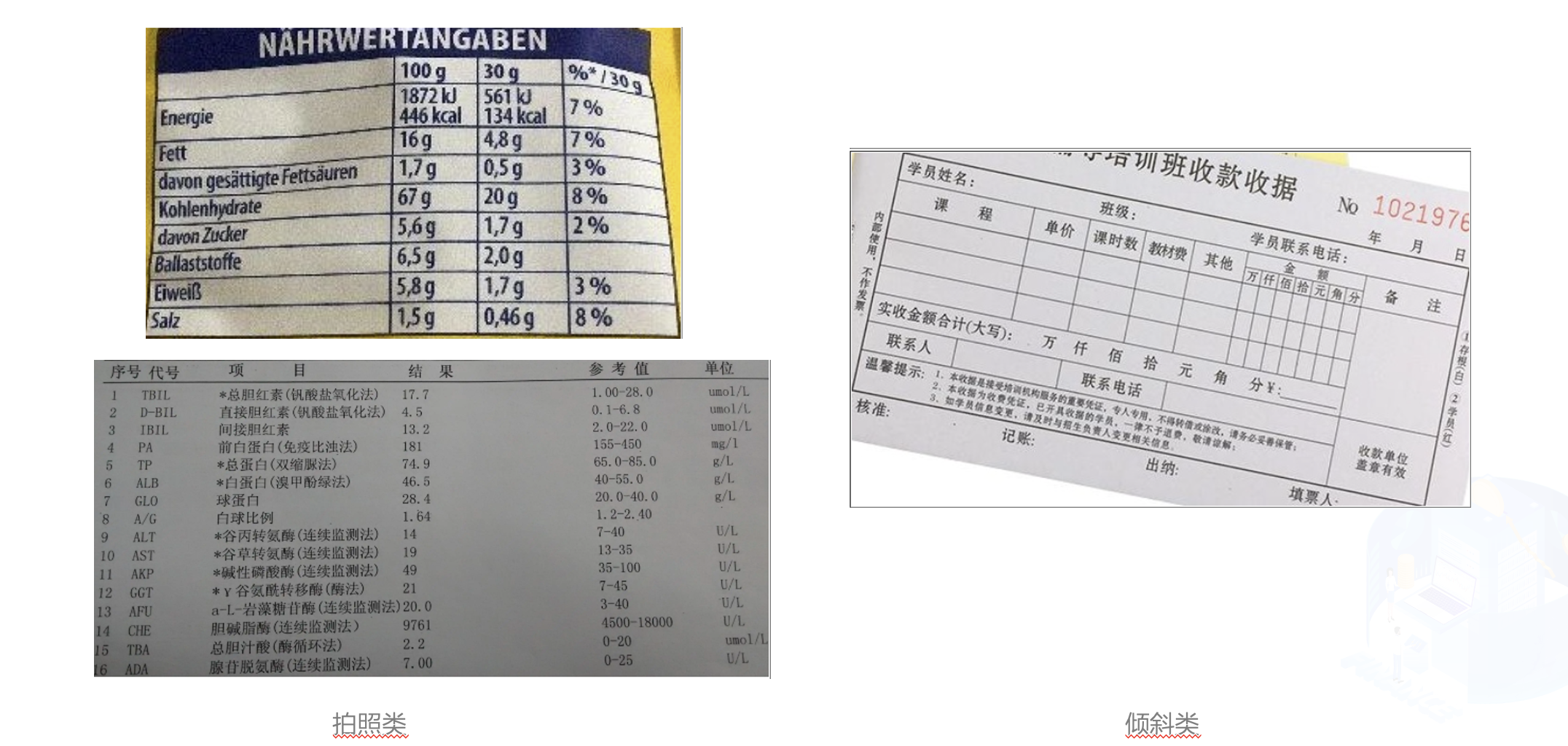

+在金融行业中,表格图像主要有清单类的单元格密集型表格,申请表类的大单元格表格,拍照表格和倾斜表格四种主要形式。

+

+

+

+

+

+当前的表格识别算法不能很好的处理这些场景下的表格图像。在本例中,我们使用PP-StructureV2最新发布的表格识别模型SLANet来演示如何进行中文表格是识别。同时,为了方便作业流程,我们使用表格属性识别模型对表格图像的属性进行识别,对表格的难易程度进行判断,加快人工进行校对速度。

+

+本项目AI Studio链接:https://aistudio.baidu.com/aistudio/projectdetail/4588067

+

+## 2. 中文表格识别

+### 2.1 环境准备

+

+

+```python

+# 下载PaddleOCR代码

+! git clone -b dygraph https://gitee.com/paddlepaddle/PaddleOCR

+```

+

+

+```python

+# 安装PaddleOCR环境

+! pip install -r PaddleOCR/requirements.txt --force-reinstall

+! pip install protobuf==3.19

+```

+

+### 2.2 准备数据集

+

+本例中使用的数据集采用表格[生成工具](https://github.com/WenmuZhou/TableGeneration)制作。

+

+使用如下命令对数据集进行解压,并查看数据集大小

+

+

+```python

+! cd data/data165849 && tar -xf table_gen_dataset.tar && cd -

+! wc -l data/data165849/table_gen_dataset/gt.txt

+```

+

+#### 2.2.1 划分训练测试集

+

+使用下述命令将数据集划分为训练集和测试集, 这里将90%划分为训练集,10%划分为测试集

+

+

+```python

+import random

+with open('/home/aistudio/data/data165849/table_gen_dataset/gt.txt') as f:

+ lines = f.readlines()

+random.shuffle(lines)

+train_len = int(len(lines)*0.9)

+train_list = lines[:train_len]

+val_list = lines[train_len:]

+

+# 保存结果

+with open('/home/aistudio/train.txt','w',encoding='utf-8') as f:

+ f.writelines(train_list)

+with open('/home/aistudio/val.txt','w',encoding='utf-8') as f:

+ f.writelines(val_list)

+```

+

+划分完成后,数据集信息如下

+

+|类型|数量|图片地址|标注文件路径|

+|---|---|---|---|

+|训练集|18000|/home/aistudio/data/data165849/table_gen_dataset|/home/aistudio/train.txt|

+|测试集|2000|/home/aistudio/data/data165849/table_gen_dataset|/home/aistudio/val.txt|

+

+#### 2.2.2 查看数据集

+

+

+```python

+import cv2

+import os, json

+import numpy as np

+from matplotlib import pyplot as plt

+%matplotlib inline

+

+def parse_line(data_dir, line):

+ data_line = line.strip("\n")

+ info = json.loads(data_line)

+ file_name = info['filename']

+ cells = info['html']['cells'].copy()

+ structure = info['html']['structure']['tokens'].copy()

+

+ img_path = os.path.join(data_dir, file_name)

+ if not os.path.exists(img_path):

+ print(img_path)

+ return None

+ data = {

+ 'img_path': img_path,

+ 'cells': cells,

+ 'structure': structure,

+ 'file_name': file_name

+ }

+ return data

+

+def draw_bbox(img_path, points, color=(255, 0, 0), thickness=2):

+ if isinstance(img_path, str):

+ img_path = cv2.imread(img_path)

+ img_path = img_path.copy()

+ for point in points:

+ cv2.polylines(img_path, [point.astype(int)], True, color, thickness)

+ return img_path

+

+

+def rebuild_html(data):

+ html_code = data['structure']

+ cells = data['cells']

+ to_insert = [i for i, tag in enumerate(html_code) if tag in ('

+

diff --git "a/applications/\344\270\255\346\226\207\350\241\250\346\240\274\350\257\206\345\210\253.md" "b/applications/\344\270\255\346\226\207\350\241\250\346\240\274\350\257\206\345\210\253.md"

new file mode 100644

index 0000000..d61514f

--- /dev/null

+++ "b/applications/\344\270\255\346\226\207\350\241\250\346\240\274\350\257\206\345\210\253.md"

@@ -0,0 +1,472 @@

+# Intelligent Operations: General Chinese Table Recognition

+

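+- [1. Background](#1-背景介绍)
+- [2. Chinese Table Recognition](#2-中文表格识别)
+- [2.1 Environment Setup](#21-环境准备)
+- [2.2 Preparing the Dataset](#22-准备数据集)
+    - [2.2.1 Splitting Training and Test Sets](#221-划分训练测试集)
+    - [2.2.2 Inspecting the Dataset](#222-查看数据集)
+- [2.3 Training](#23-训练)
+- [2.4 Evaluation](#24-验证)
+- [2.5 Inference with the Training Engine](#25-训练引擎推理)
+- [2.6 Model Export](#26-模型导出)
+- [2.7 Inference with the Prediction Engine](#27-预测引擎推理)
+- [2.8 Table Recognition](#28-表格识别)
+- [3. Table Attribute Recognition](#3-表格属性识别)
+- [3.1 Code, Environment, and Data Preparation](#31-代码环境数据准备)
+    - [3.1.1 Code Preparation](#311-代码准备)
+    - [3.1.2 Environment Setup](#312-环境准备)
+    - [3.1.3 Data Preparation](#313-数据准备)
+- [3.2 Training Table Attribute Recognition](#32-表格属性识别训练)
+- [3.3 Table Attribute Recognition Inference and Deployment](#33-表格属性识别推理和部署)
+    - [3.3.1 Model Conversion](#331-模型转换)
+    - [3.3.2 Model Inference](#332-模型推理)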

+

+## 1. Background

+

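+Chinese table recognition is widely used in the finance industry, for example in insurance claims, financial report analysis, and data entry. Today, table data in finance is still mostly entered by hand, so automatic table recognition is an urgent need.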

+

+

+

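+In the finance industry, table images mainly take four forms: dense-cell tables such as itemized lists, large-cell tables such as application forms, photographed tables, and skewed tables.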

+

+

+

+

+

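+Current table recognition algorithms do not handle table images from these scenarios well. In this example, we use SLANet, the table recognition model newly released with PP-StructureV2, to demonstrate Chinese table recognition. To streamline the workflow, we also use a table attribute recognition model to classify each table image's attributes and judge how difficult the table is. This speeds up manual proofreading.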

+

+AI Studio project link: https://aistudio.baidu.com/aistudio/projectdetail/4588067

+

+## 2. Chinese Table Recognition

+### 2.1 Environment Setup

+

+

+```python

+# Download the PaddleOCR code

+! git clone -b dygraph https://gitee.com/paddlepaddle/PaddleOCR

+```

+

+

+```python

+# Install the PaddleOCR dependencies

+! pip install -r PaddleOCR/requirements.txt --force-reinstall

+! pip install protobuf==3.19

+```

+

+### 2.2 Preparing the Dataset

+

+The dataset used in this example was produced with a table [generation tool](https://github.com/WenmuZhou/TableGeneration).

+

+Use the following commands to extract the dataset and check its size; each line of `gt.txt` is one annotated sample:

+

+

+```python

+! cd data/data165849 && tar -xf table_gen_dataset.tar && cd -

+! wc -l data/data165849/table_gen_dataset/gt.txt

+```
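Each line of `gt.txt` is one JSON record. The `parse_line` function in section 2.2.2 suggests that a record carries at least these fields:

- `filename`
- `html.structure.tokens`: the table's HTML skeleton as a token list
- `html.cells`: one entry per cell, each with a `tokens` list holding the cell text

The record below is a made-up minimal example in that shape; real records may contain more fields. `fill_cells` is a hypothetical, simplified version of the idea behind `rebuild_html`: it inserts each cell's text right after its opening tag. It assumes spanning cells are tokenized as `<td`, ` colspan="2"`, `>`, which is why `>` also marks an insert position.

```python
import json

# A made-up gt.txt line in the shape parse_line expects.
line = json.dumps({
    "filename": "table_0001.jpg",
    "html": {
        "structure": {"tokens": ["<tr>", "<td>", "</td>", "<td", ' colspan="2"', ">", "</td>", "</tr>"]},
        "cells": [{"tokens": ["名", "称"]}, {"tokens": ["1", "0", "0"]}],
    },
}, ensure_ascii=False)


def fill_cells(record: dict) -> str:
    """Insert each cell's text after its opening tag and join into an HTML string."""
    tokens = list(record["html"]["structure"]["tokens"])
    cells = record["html"]["cells"]
    # '<td>' opens a plain cell; '>' closes a split opening tag such as '<td colspan="2">'.
    positions = [i for i, tok in enumerate(tokens) if tok in ("<td>", ">")]
    # Walk backwards so that inserting text does not shift earlier positions.
    for pos, cell in zip(positions[::-1], cells[::-1]):
        if cell["tokens"]:
            tokens.insert(pos + 1, "".join(cell["tokens"]))
    return "<table>" + "".join(tokens) + "</table>"


print(fill_cells(json.loads(line)))
# <table><tr><td>名称</td><td colspan="2">100</td></tr></table>
```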

+

+#### 2.2.1 Splitting Training and Test Sets

+

+Use the following code to split the dataset into a training set (90%) and a test set (10%):

+

+

+```python

+import random
+with open('/home/aistudio/data/data165849/table_gen_dataset/gt.txt', encoding='utf-8') as f:
+    lines = f.readlines()
+random.shuffle(lines)
+train_len = int(len(lines)*0.9)
+train_list = lines[:train_len]
+val_list = lines[train_len:]
+
+# Save the results
+with open('/home/aistudio/train.txt','w',encoding='utf-8') as f:
+    f.writelines(train_list)
+with open('/home/aistudio/val.txt','w',encoding='utf-8') as f:
+    f.writelines(val_list)

+```

+
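The split above calls `random.shuffle` without a seed, so every run produces a different train/test partition. If you need a reproducible split, here is a minimal sketch. `split_train_val` and its `seed` argument are hypothetical names introduced for illustration, and the 20,000 dummy lines stand in for the real `gt.txt`:

```python
import random

def split_train_val(lines, train_ratio=0.9, seed=0):
    """Shuffle a copy of `lines` with a fixed seed and split it by `train_ratio`."""
    rng = random.Random(seed)       # local RNG: the global `random` state is untouched
    shuffled = list(lines)          # copy: the caller's list keeps its order
    rng.shuffle(shuffled)
    train_len = int(len(shuffled) * train_ratio)
    return shuffled[:train_len], shuffled[train_len:]

# Dummy stand-in for the 20,000 annotation lines of gt.txt
lines = [f'{{"filename": "img_{i}.jpg"}}\n' for i in range(20000)]
train_list, val_list = split_train_val(lines)
print(len(train_list), len(val_list))  # 18000 2000
```

Using a local `random.Random(seed)` instead of seeding the global generator keeps other code that relies on `random` unaffected.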

+After splitting, the dataset is as follows:

+

+|Split|Count|Image directory|Annotation file|

+|---|---|---|---|

+|Training set|18000|/home/aistudio/data/data165849/table_gen_dataset|/home/aistudio/train.txt|

+|Test set|2000|/home/aistudio/data/data165849/table_gen_dataset|/home/aistudio/val.txt|

+

+#### 2.2.2 Inspecting the Dataset

+

+

+```python

+import cv2

+import os, json

+import numpy as np

+from matplotlib import pyplot as plt

+%matplotlib inline

+

+def parse_line(data_dir, line):

+    """Parse one line of gt.txt into the image path, cell annotations and HTML structure tokens."""

+    data_line = line.strip("\n")

+    info = json.loads(data_line)

+    file_name = info['filename']

+    cells = info['html']['cells'].copy()

+    structure = info['html']['structure']['tokens'].copy()

+

+    img_path = os.path.join(data_dir, file_name)

+    if not os.path.exists(img_path):

+        print(img_path)

+        return None

+    data = {

+        'img_path': img_path,

+        'cells': cells,

+        'structure': structure,

+        'file_name': file_name

+    }

+    return data

+

+def draw_bbox(img_path, points, color=(255, 0, 0), thickness=2):

+    """Draw each cell polygon on the image (accepts a path or an already loaded BGR array)."""

+    if isinstance(img_path, str):

+        img_path = cv2.imread(img_path)

+    img_path = img_path.copy()

+    for point in points:

+        # cv2.polylines expects (N, 2) int32 point arrays

+        pts = np.asarray(point).reshape(-1, 2).astype(np.int32)

+        cv2.polylines(img_path, [pts], True, color, thickness)

+    return img_path

+

+

+def rebuild_html(data):

+    """Insert each cell's text into the structure tokens to rebuild the table HTML."""

+    html_code = data['structure']

+    cells = data['cells']

+    # Cell text goes after '<td>', or after the '>' that closes a '<td' token with attributes (e.g. colspan)

+    to_insert = [i for i, tag in enumerate(html_code) if tag in ('<td>', '>')]

+

+    # Walk backwards so earlier insertion indices stay valid

+    for i, cell in zip(to_insert[::-1], cells[::-1]):

+        if cell['tokens']:

+            text = ''.join(cell['tokens'])

+            # Skip cells that are empty once style tags and whitespace are removed

+            sp_char_list = ['<b>', '</b>', '\u2028', ' ', '<i>', '</i>']

+            text_remove_style = skip_char(text, sp_char_list)

+            if len(text_remove_style) == 0:

+                continue

+            html_code.insert(i + 1, text)

+

+    html_code = ''.join(html_code)

+    return html_code

+

+

+def skip_char(text, sp_char_list):

+    """

+    skip empty cell

+    @param text: text in cell

+    @param sp_char_list: style char and special code

+    @return: text with every entry of sp_char_list removed

+    """

+    for sp_char in sp_char_list:

+        text = text.replace(sp_char, '')

+    return text

+

+save_dir = '/home/aistudio/vis'

+os.makedirs(save_dir, exist_ok=True)

+image_dir = '/home/aistudio/data/data165849/'

+html_str = '<table border="1">'

+

+# Parse the annotation and rebuild the HTML table

+data = parse_line(image_dir, val_list[0])

+

+img = cv2.imread(data['img_path'])

+img_name = ''.join(os.path.basename(data['file_name']).split('.')[:-1])

+img_save_name = os.path.join(save_dir, img_name)

+boxes = [np.array(x['bbox']) for x in data['cells']]

+show_img = draw_bbox(data['img_path'], boxes)

+cv2.imwrite(img_save_name + '_show.jpg', show_img)

+

+html = rebuild_html(data)

+html_str += html

+html_str += '</table>'

+

+# Render the annotated HTML string

+from IPython.display import display, HTML

+display(HTML(html_str))

+# Show the cell coordinates (OpenCV loads BGR; convert to RGB for matplotlib)

+plt.figure(figsize=(15,15))

+plt.imshow(cv2.cvtColor(show_img, cv2.COLOR_BGR2RGB))

+plt.show()

+```

+
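The sketch below makes the annotation format concrete. It applies the same insertion idea as `rebuild_html` to a hand-written sample. The sample record is invented for illustration, and `rebuild_from_tokens` is a hypothetical helper. Real lines in `gt.txt` also carry a per-cell `bbox`, and this sketch leaves out the empty-cell filtering:

```python
import json

# Invented one-row, two-column record in the layout parse_line expects:
# {"filename": ..., "html": {"structure": {"tokens": [...]}, "cells": [{"tokens": [...]}, ...]}}
sample = json.dumps({
    "filename": "demo.jpg",
    "html": {
        "structure": {"tokens": ["<tbody>", "<tr>", "<td>", "</td>", "<td>", "</td>", "</tr>", "</tbody>"]},
        "cells": [{"tokens": ["金", "额"]}, {"tokens": ["<b>", "1", "0", "0", "</b>"]}],
    },
}, ensure_ascii=False)

def rebuild_from_tokens(structure_tokens, cells):
    html = list(structure_tokens)
    # Text slots: right after "<td>", or after the ">" closing a "<td" that has attributes
    slots = [i for i, tag in enumerate(html) if tag in ('<td>', '>')]
    # Insert from the end so earlier slot indices remain valid
    for i, cell in zip(slots[::-1], cells[::-1]):
        if cell['tokens']:
            html.insert(i + 1, ''.join(cell['tokens']))
    return ''.join(html)

info = json.loads(sample)
print(rebuild_from_tokens(info['html']['structure']['tokens'], info['html']['cells']))
# <tbody><tr><td>金额</td><td><b>100</b></td></tr></tbody>
```

The structure tokens carry no `<table>` tag, so the display code wraps the result in `<table border="1">…</table>`.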

+### 2.3 Training

+

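+Here we use [SLANet](https://github.com/PaddlePaddle/PaddleOCR/blob/dygraph/configs/table/SLANet.yml), the table recognition model in PP-StructureV2.

+

+SLANet is the new table recognition model introduced in PP-StructureV2. Compared with TableRec-RARE from PP-StructureV1, it raises accuracy from 71.73% to 76.31% and TEDS from 93.88% to 95.89% at essentially the same speed (766 ms vs. 779 ms).

+

+

+|Algorithm|Acc|[TEDS (Tree-Edit-Distance-based Similarity)](https://github.com/ibm-aur-nlp/PubTabNet/tree/master/src)|Speed|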

+| --- | --- | --- | ---|

+| EDD[2] |x| 88.30% |x|

+| TableRec-RARE(ours) | 71.73%| 93.88% |779ms|

+| SLANet(ours) | 76.31%| 95.89%|766ms|

+
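The gains of SLANet over TableRec-RARE can be read directly from the comparison table. This quick check computes the differences in percentage points and the latency change, using only the values from that table:

```python
# Values copied from the comparison table (Acc and TEDS in %, speed in ms)
rare = {"acc": 71.73, "teds": 93.88, "ms": 779}
slanet = {"acc": 76.31, "teds": 95.89, "ms": 766}

acc_gain = round(slanet["acc"] - rare["acc"], 2)     # percentage points
teds_gain = round(slanet["teds"] - rare["teds"], 2)  # percentage points
ms_change = slanet["ms"] - rare["ms"]                # negative means faster
print(acc_gain, teds_gain, ms_change)  # 4.58 2.01 -13
```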

+Before training, download the pretrained model:

+

+

+```python

+# Enter the PaddleOCR working directory

+os.chdir('/home/aistudio/PaddleOCR')

+# Download the English pretrained model

+! wget -nc -P ./pretrain_models/ https://paddleocr.bj.bcebos.com/ppstructure/models/slanet/en_ppstructure_mobile_v2.0_SLANet_train.tar --no-check-certificate

+! cd ./pretrain_models/ && tar xf en_ppstructure_mobile_v2.0_SLANet_train.tar && cd ../

+```

+

+Training is launched with the command below. The configuration fields to modify are:

+

+|Field|Value|Meaning|

+|---|---|---|

+|Global.pretrained_model|./pretrain_models/en_ppstructure_mobile_v2.0_SLANet_train/best_accuracy.pdparams|Path to the English table pretrained model|

+|Global.eval_batch_step|562|Evaluate every N steps; usually set to the number of steps in one epoch|

+|Optimizer.lr.name|Const|Learning-rate scheduler (constant learning rate)|

+|Optimizer.lr.learning_rate|0.0005|Learning rate for fine-tuning, lower than the default|

+|Train.dataset.data_dir|/home/aistudio/data/data165849|Directory containing the training images|

+|Train.dataset.label_file_list|/home/aistudio/data/data165849/table_gen_dataset/train.txt|Training annotation file|

+|Train.loader.batch_size_per_card|32|Batch size per card during training|

+|Train.loader.num_workers|1|Number of data-loading worker processes for training; must be 1 on AI Studio|

+|Eval.dataset.data_dir|/home/aistudio/data/data165849|Directory containing the test images|

+|Eval.dataset.label_file_list|/home/aistudio/data/data165849/table_gen_dataset/val.txt|Test annotation file|

+|Eval.loader.batch_size_per_card|32|Batch size per card during evaluation|

+|Eval.loader.num_workers|1|Number of data-loading worker processes for evaluation; must be 1 on AI Studio|

+
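Each field name in the configuration table is a dotted path into the nested YAML config. The same `Key.Sub=value` form is what this document passes with `-o` to `tools/eval.py` and `tools/export_model.py`. The following sketch uses a hypothetical helper, `apply_override`, to show how a dotted key maps onto a nested dict. It also shows how the value 562 for `eval_batch_step` follows from 18,000 training samples at 32 per card, assuming a single card:

```python
def apply_override(cfg, dotted_key, value):
    """Set cfg['A']['B']['C'] = value for dotted_key 'A.B.C', creating missing levels."""
    *parents, leaf = dotted_key.split('.')
    node = cfg
    for key in parents:
        node = node.setdefault(key, {})
    node[leaf] = value
    return cfg

cfg = {"Global": {}, "Optimizer": {"lr": {}}}  # hypothetical, heavily trimmed config
apply_override(cfg, "Optimizer.lr.name", "Const")
apply_override(cfg, "Optimizer.lr.learning_rate", 0.0005)

# Evaluate once per epoch: steps per epoch = training samples // batch size (single card assumed)
train_samples, batch_size_per_card = 18000, 32
apply_override(cfg, "Global.eval_batch_step", train_samples // batch_size_per_card)
print(cfg["Global"]["eval_batch_step"])  # 562
```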

+

+The modified configuration is saved at `/home/aistudio/SLANet_ch.yml`.

+

+

+```python

+import os

+os.chdir('/home/aistudio/PaddleOCR')

+! python3 tools/train.py -c /home/aistudio/SLANet_ch.yml

+```

+

+The best accuracy, 97.49%, is reached after about 7 epochs.

+

+### 2.4 Evaluation

+

+After training, evaluate the accuracy of the best model on the test set:

+

+

+```python

+! python3 tools/eval.py -c /home/aistudio/SLANet_ch.yml -o Global.checkpoints=/home/aistudio/PaddleOCR/output/SLANet_ch/best_accuracy.pdparams

+```

+
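The evaluation reports an accuracy that reflects how often the predicted table structure matches the label. Below is a minimal sketch of an exact-match structure accuracy. It assumes a sample counts as correct only when the whole predicted token sequence equals the ground truth. This illustrates the idea and is not PaddleOCR's metric code; `structure_accuracy` is a hypothetical name:

```python
def structure_accuracy(preds, gts):
    """Fraction of samples whose predicted structure token list exactly equals the label."""
    if len(preds) != len(gts):
        raise ValueError("preds and gts must have the same length")
    if not gts:
        return 0.0
    correct = sum(1 for p, g in zip(preds, gts) if p == g)
    return correct / len(gts)

gts = [["<tr>", "<td>", "</td>", "</tr>"],
       ["<tr>", "<td>", "</td>", "<td>", "</td>", "</tr>"]]
preds = [["<tr>", "<td>", "</td>", "</tr>"],
         ["<tr>", "<td>", "</td>", "</tr>"]]  # second table misses a column
print(structure_accuracy(preds, gts))  # 0.5
```

Exact match is strict: one wrong token fails the whole table. This is why TEDS, which gives partial credit through tree edit distance, is reported alongside it.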

+### 2.5 Inference with the Training Engine

+Run inference on a single image with the training engine:

+

+

+```python

+import os;os.chdir('/home/aistudio/PaddleOCR')

+! python3 tools/infer_table.py -c /home/aistudio/SLANet_ch.yml -o Global.checkpoints=/home/aistudio/PaddleOCR/output/SLANet_ch/best_accuracy.pdparams Global.infer_img=/home/aistudio/data/data165849/table_gen_dataset/img/no_border_18298_G7XZH93DDCMATGJQ8RW2.jpg

+```

+

+

+```python

+import cv2

+from matplotlib import pyplot as plt

+%matplotlib inline

+

+# Show the original image (OpenCV loads BGR; convert to RGB for matplotlib)

+show_img = cv2.imread('/home/aistudio/data/data165849/table_gen_dataset/img/no_border_18298_G7XZH93DDCMATGJQ8RW2.jpg')

+plt.figure(figsize=(15,15))

+plt.imshow(cv2.cvtColor(show_img, cv2.COLOR_BGR2RGB))

+plt.show()

+

+# Show the predicted cells

+show_img = cv2.imread('/home/aistudio/PaddleOCR/output/infer/no_border_18298_G7XZH93DDCMATGJQ8RW2.jpg')

+plt.figure(figsize=(15,15))

+plt.imshow(cv2.cvtColor(show_img, cv2.COLOR_BGR2RGB))

+plt.show()

+```

+

+### 2.6 Model Export

+

+Export the trained model as an inference model:

+

+

+```python

+! python3 tools/export_model.py -c /home/aistudio/SLANet_ch.yml -o Global.checkpoints=/home/aistudio/PaddleOCR/output/SLANet_ch/best_accuracy.pdparams Global.save_inference_dir=/home/aistudio/SLANet_ch/infer

+```

+

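+### 2.7 Inference with the Prediction Engine

+Run inference on a single image with the prediction engine, using the exported inference model: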

+

+

+

+```python

+os.chdir('/home/aistudio/PaddleOCR/ppstructure')

+! python3 table/predict_structure.py \

+ --table_model_dir=/home/aistudio/SLANet_ch/infer \

+ --table_char_dict_path=../ppocr/utils/dict/table_structure_dict.txt \

+ --image_dir=/home/aistudio/data/data165849/table_gen_dataset/img/no_border_18298_G7XZH93DDCMATGJQ8RW2.jpg \

+ --output=../output/inference

+```

+

+

+```python

+# Show the original image