
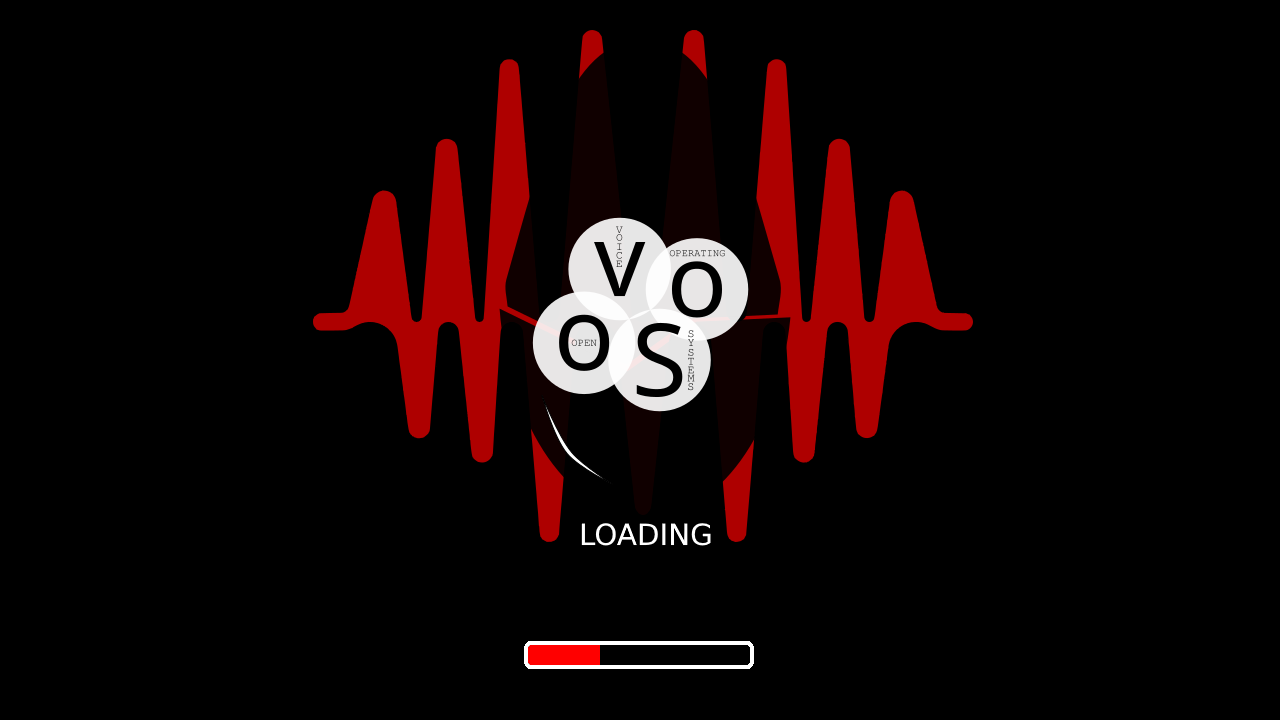

Introducing OpenVoiceOS - The Free and Open-Source Personal Assistant and Smart Speaker.

OpenVoiceOS is a new player in the smart speaker market, offering a powerful and flexible alternative to proprietary solutions like Amazon Echo and Google Home.

With OpenVoiceOS, you have complete control over your personal data and the ability to customize and extend the functionality of your smart speaker.

Built on open-source software, OpenVoiceOS is designed to provide users with a seamless and intuitive voice interface for controlling their smart home devices, playing music, setting reminders, and much more.

The platform leverages cutting-edge technology, including machine learning and natural language processing, to deliver a highly responsive and accurate experience.

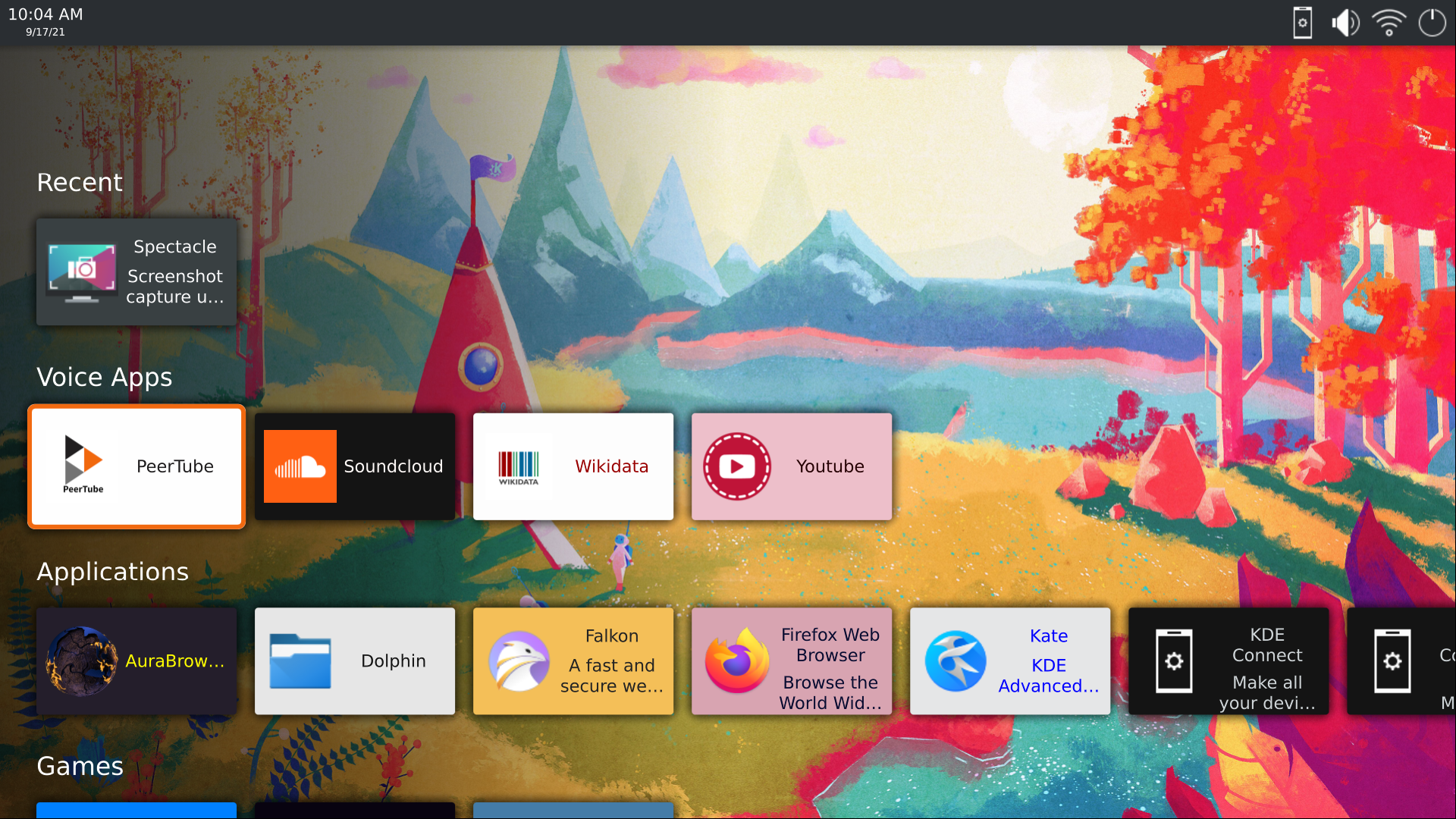

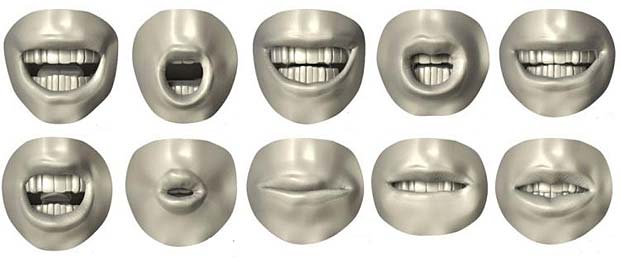

In addition to its voice capabilities, OpenVoiceOS features a touch-screen GUI built with Qt 5 and KDE Frameworks 5 (KF5).

The GUI provides an intuitive, user-friendly interface that gives you access to the full range of OpenVoiceOS features and functionality.

Whether you prefer voice commands or a more traditional touch interface, OpenVoiceOS has you covered.

One of the key advantages of OpenVoiceOS is its open-source nature: anyone with the technical skills can contribute to the platform and help shape its future.

Whether you're a software developer, a data scientist, someone with a passion for technology, or just a casual user who would like to experience what OVOS has to offer, you can get involved and help build the next generation of personal assistants and smart speakers.

With OpenVoiceOS, you have the option to run the platform fully offline, giving you complete control over your data and ensuring that your information is never shared with third parties. This makes OpenVoiceOS the perfect choice for anyone who values privacy and security.

So if you're looking for a personal assistant and smart speaker that gives you the freedom and control you deserve, be sure to check out OpenVoiceOS today!

Disclaimer: This post was written in collaboration with ChatGPT.

This section can be a bit technical, but is included for reference. It is not necessary to read this section for day-to-day usage of OVOS.

OVOS is a collection of modular services that work together to provide a seamless, private, open-source voice assistant.

The suggested way to start OVOS is with systemd service files. Most of the images run these services as a normal user instead of system-wide. If you get an error when using the --user commands, try running the service as a system service (without --user).

NOTE The ovos.service is just a wrapper to control the other OVOS services. It is used here as an example showing --user vs system.

systemctl --user status ovos.service

systemctl status ovos.service

The skills service provides the main instance for OVOS and handles all of the skill loading and intent processing.

All user queries are handled by this service. You can think of it as OVOS's brain.

Typical systemd commands:

systemctl --user status ovos-skills

systemctl --user restart ovos-skills

C++ version

NOTE This is an alpha version and mostly a proof of concept. It has been known to crash often.

You can think of the bus service as OVOS's nervous system.

The ovos-bus is considered an internal and private websocket; external clients should not connect directly to it. Please do not expose the messagebus to the outside world!

Typical systemd command:

systemctl --user start ovos-messagebus
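Everything on the bus travels as small JSON messages. The sketch below shows how such a message could be built and checked, assuming the Mycroft-style layout with `type`, `data` and `context` keys. `make_message` and `parse_message` are hypothetical helpers, not the ovos-bus-client API, and the `recognizer_loop:utterance` type is assumed here as the message used to inject an utterance.

```python
import json
from typing import Any, Dict, Optional


def make_message(msg_type: str, data: Optional[Dict[str, Any]] = None,
                 context: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a bus message as JSON (hypothetical helper)."""
    return json.dumps({"type": msg_type,
                       "data": data or {},
                       "context": context or {}})


def parse_message(raw: str) -> Dict[str, Any]:
    """Parse a JSON bus message and check that the expected keys exist."""
    msg = json.loads(raw)
    missing = {"type", "data", "context"} - set(msg)
    if missing:
        raise ValueError(f"message is missing keys: {sorted(missing)}")
    return msg


# An utterance injected as if it had been spoken (assumed message type).
raw = make_message("recognizer_loop:utterance",
                   {"utterances": ["what time is it"], "lang": "en-us"})
print(parse_message(raw)["type"])  # recognizer_loop:utterance
```

Keeping the bus local matters precisely because anything that can send such a message can drive the whole assistant.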

The listener service is used to detect your voice. It controls the WakeWord, STT (Speech To Text), and VAD (Voice Activity Detection) Plugins. You can modify microphone settings and enable additional features under the listener section of your mycroft.conf file, such as wake word / utterance recording / uploading.

The ovos-dinkum-listener is the new OVOS listener that replaced the original ovos-listener and has many more options. Others still work, but are not recommended.

Typical systemd command:

systemctl --user start ovos-dinkum-listener

This is where speech is transcribed into text and forwarded to the skills service.

Two STT plugins may be loaded at once. If the primary plugin fails, the second will be used.

Using a lower-accuracy offline model as the fallback covers internet outages, so your device never becomes fully unusable.

Several different STT (Speech To Text) plugins are available. OVOS provides a number of public services using the ovos-stt-plugin-server plugin, which are hosted by OVOS trusted members (Members hosting services). No additional configuration is required to use them.
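The primary/fallback behaviour described above can be sketched as a simple try-then-fallback call. The engine functions here are hypothetical stand-ins (a failing online engine and a local model), not real OVOS plugins.

```python
from typing import Callable, Optional

# Hypothetical engine type: takes raw audio bytes, returns a transcript.
SttEngine = Callable[[bytes], str]


def transcribe_with_fallback(audio: bytes, primary: SttEngine,
                             fallback: Optional[SttEngine] = None) -> str:
    """Try the primary STT engine; on any error, use the fallback if one is set."""
    try:
        return primary(audio)
    except Exception:
        if fallback is None:
            raise  # no fallback configured: surface the original error
        return fallback(audio)


def online_stt(audio: bytes) -> str:
    # Stand-in for a remote STT server that is currently unreachable.
    raise ConnectionError("STT server unreachable")


def offline_stt(audio: bytes) -> str:
    # Stand-in for a smaller, less accurate local model.
    return "what time is it"


print(transcribe_with_fallback(b"\x00\x01", online_stt, offline_stt))  # what time is it
```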

OVOS uses "Hotwords" to trigger any number of actions. You can load any number of hotwords in parallel and trigger different actions when they are detected. Each Hotword can do one or more of the following:

A wake word is what OVOS uses to activate the device. By default, OVOS uses "Hey Mycroft". Like other things in the OVOS ecosystem, this is configurable.

VAD Plugins detect when you are actually speaking to the device, and when you quit talking.

Most of the time, this will not need to be changed. If the microphone has trouble hearing you, or does not stop listening when you are done talking, try changing this setting to see if it helps.
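Real VAD plugins typically rely on trained models. As a much simpler illustration of the idea, the sketch below flags a frame as speech when its RMS level crosses a threshold, and reports the end of an utterance after a few silent frames in a row. The 16-bit PCM frame format, the 500.0 threshold and the three-frame silence window are assumptions, not OVOS defaults.

```python
import math
import struct
from typing import List, Optional


def frame_rms(frame: bytes) -> float:
    """Root-mean-square level of one frame of 16-bit little-endian mono PCM."""
    count = len(frame) // 2
    if count == 0:
        return 0.0
    samples = struct.unpack(f"<{count}h", frame[:count * 2])
    return math.sqrt(sum(s * s for s in samples) / count)


def speech_flags(frames: List[bytes], threshold: float = 500.0) -> List[bool]:
    """Mark each frame as speech (True) if its level reaches the threshold."""
    return [frame_rms(frame) >= threshold for frame in frames]


def utterance_end(flags: List[bool], silence_frames: int = 3) -> Optional[int]:
    """Index of the first silent frame after speech, once `silence_frames`
    silent frames in a row have been seen; None if speech has not ended."""
    started, silent = False, 0
    for i, is_speech in enumerate(flags):
        if is_speech:
            started, silent = True, 0
        elif started:
            silent += 1
            if silent == silence_frames:
                return i - silence_frames + 1
    return None


quiet = struct.pack("<4h", 0, 0, 0, 0)
loud = struct.pack("<4h", 1000, -1000, 1000, -1000)
flags = speech_flags([quiet, loud, loud, quiet, quiet, quiet])
print(flags, utterance_end(flags))  # [False, True, True, False, False, False] 3
```

A threshold that is too high cuts you off mid-sentence; one that is too low never hears you stop, which matches the two symptoms mentioned above.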

The audio service handles the output of all audio. It is how you hear the voice responses, music, or any other sound from your OVOS device.

TTS (Text To Speech) is the verbal response from OVOS. Several plugins are available that support different engines, and multiple languages and voices can be used.

OVOS provides a set of public TTS servers hosted by OVOS trusted members (Members hosting services). They are used through the ovos-tts-plugin-server plugin, and no additional configuration is needed.

PHAL stands for Plugin-based Hardware Abstraction Layer. It allows different hardware devices to use the OVOS software stack, and it completely replaces the concept of a hardcoded "enclosure" from mycroft-core.

Any number of plugins providing functionality can be loaded and validated at runtime. Plugins can be system integrations that handle things like reboot and shutdown, or hardware drivers such as the Mycroft Mark 1 plugin.

Admin PHAL is similar to regular PHAL, but is used when sudo or a privileged user is needed.

Be extremely careful when adding admin-phal plugins. They give OVOS administrative, or root, privileges on your operating system.

Admin PHAL

OVOS uses the standard mycroft-gui framework; you can find the official documentation here.

The GUI service provides a websocket for GUI clients to connect to; it is responsible for implementing the GUI protocol under ovos-core.

You can find in-depth documentation here.

OVOS provides a number of helper scripts that let the user control the device from the command line.

ovos-say-to: sends a text command to OVOS, as if you had spoken it. Example: ovos-say-to "what time is it"

ovos-listen: opens the microphone for listening, just as if you had said the wake word. It expects a verbal command, for example "what time is it".

ovos-speak: runs the provided text through the TTS (Text To Speech) engine and speaks it. ovos-speak "hello world" will output "hello world" in the configured TTS voice.

ovos-config: a command line interface for viewing and setting configuration values.

When you first start OVOS, no configuration should be needed to have a working device.

NOTE To continue with the examples, you will need access to a shell on your device. This can be achieved with SSH. Connect to your device with the command ssh ovos@<device_ip_address> and enter the password ovos.

This password is EXTREMELY insecure; change it, or use SSH keys for logging in.

This section will explain how the configuration works, and how to make basic configuration changes.

The rest of this section will assume you have shell access to your device.

OVOS loads configuration files from several locations and combines them into a single JSON configuration that is used throughout the software. The file that is loaded last is the one the user should use to modify any configuration values. It is usually located at `~/.config/mycroft/mycroft.conf`.

Configuration files are loaded from these locations, in this order:

1. {ovos-config-path}/mycroft.conf
   - usually <python_install_path>/site-packages/ovos_config/mycroft.conf
   - the default configuration distributed with ovos-core
2. os.environ.get('MYCROFT_SYSTEM_CONFIG') or /etc/mycroft/mycroft.conf
3. os.environ.get('MYCROFT_WEB_CACHE') or XDG_CONFIG_PATH/mycroft/web_cache.json
4. ~/.mycroft/mycroft.conf (Deprecated)
5. XDG_CONFIG_DIRS + /mycroft/mycroft.conf
   - /etc/xdg/mycroft/mycroft.conf
6. XDG_CONFIG_HOME (default ~/.config) + /mycroft/mycroft.conf

When the configuration loader starts, it looks in these locations in this order and loads ALL of the configurations. Keys that exist in multiple configuration files are overridden by the last file to contain the value. As a result, only a minimal amount needs to be written for a specific device and user, without modifying the default distribution files.
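A minimal sketch of the override rule described above, assuming nested sections are merged key by key (a recursive dictionary merge), so a later file only needs the keys it changes. `merge_config` and `load_stack` are hypothetical names, not the ovos-config API; the three layers stand in for the default, system and user files.

```python
import copy
from typing import Any, Dict, Iterable


def merge_config(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Recursively merge `override` into a copy of `base`; later values win."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)  # merge sections
        else:
            merged[key] = copy.deepcopy(value)  # plain values are replaced
    return merged


def load_stack(layers: Iterable[Dict[str, Any]]) -> Dict[str, Any]:
    """Apply configuration layers in load order, first to last."""
    result: Dict[str, Any] = {}
    for layer in layers:
        result = merge_config(result, layer)
    return result


default = {"tts": {"module": "ovos-tts-plugin-server", "pulse_duck": False}}
system = {"tts": {"pulse_duck": True}}
user = {"tts": {"ovos-tts-plugin-server": {"host": "https://pipertts.ziggyai.online"}}}

print(load_stack([default, system, user]))
```

In this example pulse_duck ends up True because the system layer is loaded after the default one, module survives from the default layer, and the user layer only adds the TTS host.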

OVOS provides a command line tool, ovos-config, for viewing and changing configuration values.

Values can also be set manually in config files instead of using the CLI tool.

These methods will be used later in the How To section of these docs.

User configuration should be set in the XDG_CONFIG_HOME file, usually located at ~/.config/mycroft/mycroft.conf. This file may or may not exist by default. If it does NOT exist, create it:

mkdir -p ~/.config/mycroft

touch ~/.config/mycroft/mycroft.conf
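The two commands above create the user file if it is missing. A Python sketch of the same steps follows; it resolves XDG_CONFIG_HOME the way the XDG Base Directory specification describes (falling back to ~/.config when the variable is unset or empty). The function names are hypothetical.

```python
import os
from pathlib import Path


def user_config_path() -> Path:
    """Path of the user-level mycroft.conf under XDG_CONFIG_HOME."""
    base = os.environ.get("XDG_CONFIG_HOME") or str(Path.home() / ".config")
    return Path(base) / "mycroft" / "mycroft.conf"


def ensure_user_config() -> Path:
    """Equivalent of `mkdir -p` plus `touch`: never overwrites an existing file."""
    path = user_config_path()
    path.parent.mkdir(parents=True, exist_ok=True)
    path.touch(exist_ok=True)
    return path
```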

Now you can edit that file. As an example, we will change the host of the TTS server by adding the value manually to the user's mycroft.conf file.

Open the file for editing. It is not uncommon for this file to exist but be empty.

nano ~/.config/mycroft/mycroft.conf

Enter the following into the file. NOTE This file must be in valid JSON or YAML format; OVOS knows how to read both.

{
  "tts": {
    "module": "ovos-tts-plugin-server",
    "ovos-tts-plugin-server": {
      "host": "https://pipertts.ziggyai.online"
    }
  }
}

You can check the formatting of your file with the jq command.

cat ~/.config/mycroft/mycroft.conf | jq

If your distribution does not include jq, it can be installed with sudo apt install jq or the equivalent for your distro.

If there are no errors, jq outputs the complete file. On error, it outputs the line where the error is. You can also use an online JSON checker.
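If jq is not available, Python's standard library can do a similar check: python3 -m json.tool ~/.config/mycroft/mycroft.conf pretty-prints the file or reports where parsing failed. The function below is a small sketch of the same idea that returns a one-line result; the name `check_json` is hypothetical, and it only checks JSON, so a YAML config would need a YAML parser instead.

```python
import json
from pathlib import Path


def check_json(path: Path) -> str:
    """Return "ok" if the file parses as JSON, else a message with line/column."""
    text = path.read_text(encoding="utf-8")
    if not text.strip():
        return "empty file"
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        return f"line {err.lineno}, column {err.colno}: {err.msg}"
    return "ok"


# Usage on the device:
# print(check_json(Path.home() / ".config" / "mycroft" / "mycroft.conf"))
```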

OVOS provides a small command line tool, ovos-config, for viewing and setting configuration values in the OVOS ecosystem.

NOTE The CLI of this script is new and may contain some bugs. Please report issues on the ovos-config GitHub page.

ovos-config --help will show a list of commands to use with this tool.

ovos-config show will display a table representing all of the current configuration values.

To get the values of a specific section:

ovos-config show --section tts will show just the "tts" section of the configuration.

ovos-config show --section tts

┏━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━┓
┃ Configuration keys (Configuration: Joined, Section: tts) ┃ Value                  ┃
┡━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━┩
│ pulse_duck                                               │ False                  │
│ module                                                   │ ovos-tts-plugin-server │
│ fallback_module                                          │ ovos-tts-plugin-mimic  │
├──────────────────────────────────────────────────────────┼────────────────────────┤
│ ovos-tts-plugin-server                                   │                        │
│ host                                                     │                        │
└──────────────────────────────────────────────────────────┴────────────────────────┘

We will continue with the TTS example above.

Change the host of the TTS server:

ovos-config set -k tts will show a table of values that can be edited.

ovos-config set -k tts

┏━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━┓
┃ # ┃ Path                            ┃ Value ┃
┡━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━┩
│ 0 │ tts/pulse_duck                  │ False │
│ 1 │ tts/ovos-tts-plugin-server/host │       │
└───┴─────────────────────────────────┴───────┘

Which value should be changed? (2='Exit') [0/1/2]:

Enter 1 to change the value of tts/ovos-tts-plugin-server/host.

Please enter the value to be stored (type: str) :

Enter the value for the tts server that you want ovos to use.

https://pipertts.ziggyai.online

Use ovos-config show --section tts to check your results

ovos-config show --section tts

┏━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┓
┃ Configuration keys (Configuration: Joined, Section: tts) ┃ Value                           ┃
┡━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┩
│ pulse_duck                                               │ False                           │
│ module                                                   │ ovos-tts-plugin-server          │
│ fallback_module                                          │ ovos-tts-plugin-mimic           │
├──────────────────────────────────────────────────────────┼─────────────────────────────────┤
│ ovos-tts-plugin-server                                   │                                 │
│ host                                                     │ https://pipertts.ziggyai.online │
└──────────────────────────────────────────────────────────┴─────────────────────────────────┘

This can be done for any of the values in the configuration stack.
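The slash-separated paths in the ovos-config set table, such as tts/ovos-tts-plugin-server/host, correspond to nested sections in the JSON configuration. A sketch of that mapping with hypothetical helpers `flatten` and `set_path`; this is an illustration, not the ovos-config implementation.

```python
from typing import Any, Dict, Iterator, Tuple


def flatten(config: Dict[str, Any], prefix: str = "") -> Iterator[Tuple[str, Any]]:
    """Yield (path, value) pairs such as ("tts/ovos-tts-plugin-server/host", ...)."""
    for key, value in config.items():
        path = f"{prefix}/{key}" if prefix else key
        if isinstance(value, dict):
            yield from flatten(value, path)  # descend into nested sections
        else:
            yield path, value


def set_path(config: Dict[str, Any], path: str, value: Any) -> None:
    """Set a value by slash-separated path, creating missing sections."""
    *parents, leaf = path.split("/")
    node = config
    for key in parents:
        node = node.setdefault(key, {})
    node[leaf] = value


cfg = {"tts": {"pulse_duck": False, "ovos-tts-plugin-server": {"host": ""}}}
set_path(cfg, "tts/ovos-tts-plugin-server/host", "https://pipertts.ziggyai.online")
for path, value in flatten(cfg):
    print(path, "=", value)
# tts/pulse_duck = False
# tts/ovos-tts-plugin-server/host = https://pipertts.ziggyai.online
```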

OVOS aims to be a full operating system that is free and open source. The Open Voice Operating System consists of OVOS packages (programs specifically released by the OVOS Project) as well as free software released by third parties, such as skills and plugins. OVOS makes it possible to voice-enable technology without software that would trample your freedom.

Historically, OVOS has been used to refer to several things: the team, the GitHub organization, and the reference buildroot implementation.

OVOS started as MycroftOS; you can find the original Mycroft forums thread here.

Over time, more Mycroft community members joined the project, and it was renamed to OpenVoiceOS to avoid trademark issues.

Initially, OVOS was focused on bundling mycroft-core and on creating only companion software, but because contributions were not being accepted upstream, we now maintain an enhanced reference fork of mycroft-core with extra functionality, while keeping all companion software compatible with mycroft-core (dev branch).

You can think of OVOS as the unsanctioned "Mycroft Community Edition".

Everyone in the OVOS team is a long-term Mycroft community member and has experience working with the Mycroft code base.

Meet the team:

Both projects are fully independent. Initially, OVOS was focused on wrapping mycroft-core with a minimal OS, but as both projects matured, ovos-core was created to include extra functionality and make OVOS development faster and more efficient. OVOS has been committed to keeping our components compatible with Mycroft, and many of our changes are submitted to Mycroft for inclusion in their projects at their discretion.

We don't; OVOS is a volunteer project with no source of income or business model.

However, we want to acknowledge Blue Systems and NeonGeckoCom; a lot of the work in OVOS is done on paid company time from these projects.

We provide essential skills, and those are bundled in all our reference images.

ovos-core does not manage your skills; unlike Mycroft, it won't install or update anything by itself. If you installed ovos-core manually, you also need to install skills manually.

By default, ovos-core does not require a backend internet server to operate. Some skills can be accessed (via command line) entirely offline. The default speech-to-text (STT) engine currently requires an internet connection, though some self-hosted, offline options are available. Individual skills and plugins may require internet, and most of the time you will want to use those.

No! You can integrate ovos-core with Selene or a personal backend, but that is fully optional.

We provide some microservices for some of our skills, but you can also use your own API keys.

Hundreds! Nearly everything in OVOS is modular and configurable, and that includes Text To Speech.

Voices depend on the language and the plugins you have installed. You can find a non-exhaustive list of plugins in the OVOS plugins awesome list.

Yes, ovos-core supports several wake word plugins.

Additionally, OVOS allows you to load any number of hotwords in parallel and trigger different actions when they are detected.

Each hotword can do one or more of the following:

Mostly yes, depending on exactly what you mean by this question.

OVOS can run without any wake word configured. In this case, you will only be able to interact via the CLI or a button press; this is best for privacy, but not so great for a smart speaker.

ovos-core also provides a couple of experimental settings. If you enable continuous listening, VAD will be used to detect speech and no wake word is needed: just speak to Mycroft and it should answer! However, this setting is experimental for a reason; you may find that Mycroft answers your TV, or even tries to answer itself if your hardware does not have AEC (acoustic echo cancellation).

Another experimental setting is hybrid mode. With hybrid mode, you can ask follow-up questions up to 45 seconds after the last Mycroft interaction; if you do not interact with Mycroft, it will go back to waiting for a wake word.
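The hybrid-mode rule above boils down to a time window check. The class below is a hypothetical sketch of that decision only; it is not how ovos-core implements hybrid mode, and the 45-second window is taken from the description above.

```python
import time
from typing import Optional

FOLLOW_UP_WINDOW = 45.0  # seconds, from the hybrid-mode description


class HybridListener:
    """Hypothetical sketch: decide whether a wake word is still required."""

    def __init__(self, window: float = FOLLOW_UP_WINDOW) -> None:
        self.window = window
        self.last_interaction: Optional[float] = None

    def mark_interaction(self, now: Optional[float] = None) -> None:
        """Record the time of the latest interaction (monotonic clock)."""
        self.last_interaction = time.monotonic() if now is None else now

    def needs_wake_word(self, now: Optional[float] = None) -> bool:
        """True until the first interaction, and again once the window expires."""
        if self.last_interaction is None:
            return True
        now = time.monotonic() if now is None else now
        return now - self.last_interaction > self.window


listener = HybridListener()
listener.mark_interaction(now=100.0)
print(listener.needs_wake_word(now=130.0))  # False: still inside the window
print(listener.needs_wake_word(now=146.0))  # True: 46 seconds have passed
```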

By default, to answer a request:

Through this process, there are a number of factors that can affect the perceived speed of responses:

Many schools, universities, and workplaces run a proxy on their network. If you need to type in a username and password to access the external internet, you are likely behind a proxy.

If you plan to use OVOS behind a proxy, you will need to do an additional configuration step.

NOTE: To complete this step, you will need to know the hostname and port of the proxy server. Your network administrator will be able to provide these details. Your network administrator may also want information on what type of traffic OVOS will be using: we use HTTPS traffic on port 443, primarily for accessing REST-based APIs.

If you are using OVOS behind a proxy without authentication, add the following environment variables, replacing proxy_hostname.com and proxy_port with the values for your network. These commands are executed from the Linux command line interface (CLI).

$ export http_proxy=http://proxy_hostname.com:proxy_port

$ export https_proxy=http://proxy_hostname.com:proxy_port

$ export no_proxy="localhost,127.0.0.1,localaddress,.localdomain.com,0.0.0.0,::1"

If you are behind a proxy that requires authentication, add the following environment variables instead, replacing proxy_hostname.com and proxy_port with the values for your network, and user and password with your proxy credentials. These commands are executed from the Linux command line interface (CLI).

$ export http_proxy=http://user:password@proxy_hostname.com:proxy_port

$ export https_proxy=http://user:password@proxy_hostname.com:proxy_port

$ export no_proxy="localhost,127.0.0.1,localaddress,.localdomain.com,0.0.0.0,::1"
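Exported variables only affect programs started from that same shell session; services started by systemd do not inherit them. To confirm that Python code sees the values, the standard library's urllib.request.getproxies() reads the same *_proxy variables on Linux. The sketch below (hypothetical function name) only reports what it finds and does not change any OVOS setting.

```python
import urllib.request
from typing import Dict


def report_proxies() -> Dict[str, str]:
    """Return the proxy settings urllib picks up from *_proxy variables."""
    proxies = urllib.request.getproxies()
    for scheme in ("http", "https"):
        if scheme not in proxies:
            print(f"warning: no {scheme}_proxy is set in this environment")
    return proxies


if __name__ == "__main__":
    print(report_proxies())
```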

OpenVoiceOS is part of a larger ecosystem of FOSS voice technology. We work closely with the following projects.

HiveMind

HiveMind is a community-developed superset or extension of OpenVoiceOS.

With HiveMind, you can extend one (or more, but usually just one!) instance of Mycroft to as many devices as you want, including devices that can't ordinarily run Mycroft!

HiveMind's developers have successfully connected to Mycroft from a PinePhone, a 2009 MacBook, and a Raspberry Pi 0, among other devices. Mycroft itself usually runs on our desktop computers or our home servers, but you can use any Mycroft-branded device, or OpenVoiceOS, as your central unit.

You can find the website here and the source code here.

Plasma Bigscreen

Plasma Bigscreen integrates and uses OpenVoiceOS as its voice framework stack to serve voice queries and voice applications (skills with a homescreen). You can easily enable Mycroft/OVOS integration in the Bigscreen launcher by installing ovos-core and the required services, and enabling the integration switch in the Bigscreen KCM.

You can find the website here and the source code here.

NeonGecko

Neon was one of the first projects ever to adopt ovos-core as a library to build their own voice assistant. Neon works closely with OVOS, and both projects are mostly compatible.

You can find the website here and the source code here.

Mycroft

Mycroft AI started it all; it was one of the first ever FOSS voice assistants and is the project OVOS descends from.

Most applications made for Mycroft will work in OVOS and vice versa.

You can find the website here and the source code here.

Secret Sauce AI is a coordinated community of tech-minded AI enthusiasts working together on projects to identify blockers and improve the basic open source tools and pipeline components in the AI (voice) assistant pipeline (wakeword, ASR, NLU, NLG, TTS). The focus is mostly geared toward deployment on edge devices and self-hosted solutions. This is not a voice assistant project in and of itself; rather, Secret Sauce AI helps AI (voice) assistant projects come together as individuals and solve basic problems faced by the entire community.

Editor's Note: Some of the more detailed definitions will be moved to other pages; they are just here to keep track of the information for now.

All the repositories under the OpenVoiceOS organization.

The team behind OVOS.

Confirmation approaches can also be defined by Statements or Prompts, but when we talk about them in the context of confirmations, we call them Implicit and Explicit.

This type of confirmation is also a statement. The idea is to parrot the information back to the user to confirm that it was correct, but not require additional input from the user. The implicit confirmation can be used in a majority of situations.

This type of confirmation requires an input from the user to verify everything is correct.

Any time the user needs to input a lot of information, or needs to sort through a variety of options, a conversation will be needed. Users may be used to systems that require them to separate input into different chunks.

Allows for natural conversation by having skills set a "context" that can be used by subsequent handlers. Context could be anything from a person to a location. Context can also create "bubbles" of available intent handlers, to make sure certain intents can't be triggered unless some previous stage in a conversation has occurred.

You can find an example Tea Skill using conversational context on GitHub.

As you can see, Conversational Context lends itself well to implementing a dialog tree or conversation tree.
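To make the "bubble" idea concrete, here is a toy router in which an intent only fires while its required context is active. Everything here (`ConversationContext`, the intent names, `TeaContext`) is hypothetical and is not the OVOS or Adapt context API; it only mirrors the tea example in spirit.

```python
from typing import Callable, Dict, Iterable, Optional, Set


class ConversationContext:
    """Toy intent router: an intent fires only if its required context is set."""

    def __init__(self) -> None:
        self.active: Set[str] = set()
        self._handlers: Dict[str, Callable[["ConversationContext"], str]] = {}
        self._requires: Dict[str, Set[str]] = {}

    def register(self, intent: str,
                 handler: Callable[["ConversationContext"], str],
                 requires: Iterable[str] = ()) -> None:
        self._handlers[intent] = handler
        self._requires[intent] = set(requires)

    def handle(self, intent: str) -> Optional[str]:
        # Outside its "bubble" an intent is simply not available.
        if not self._requires[intent] <= self.active:
            return None
        return self._handlers[intent](self)


def make_tea(ctx: ConversationContext) -> str:
    ctx.active.add("TeaContext")  # opens the bubble for the follow-up intent
    return "Do you want milk?"


def add_milk(ctx: ConversationContext) -> str:
    ctx.active.discard("TeaContext")  # this stage of the conversation is over
    return "Tea with milk, coming up."


router = ConversationContext()
router.register("make_tea", make_tea)
router.register("add_milk", add_milk, requires=["TeaContext"])
print(router.handle("add_milk"))  # None: no tea has been ordered yet
print(router.handle("make_tea"))  # Do you want milk?
print(router.handle("add_milk"))  # Tea with milk, coming up.
```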

All of the letters and letter combinations that represent a phoneme.

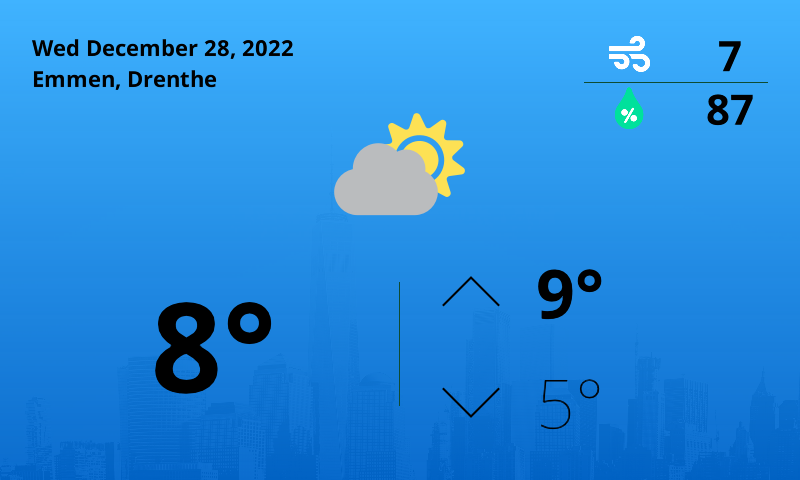

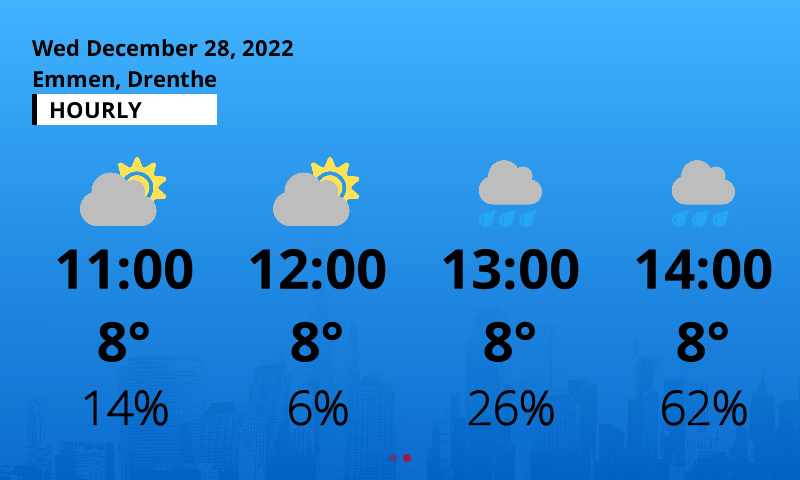

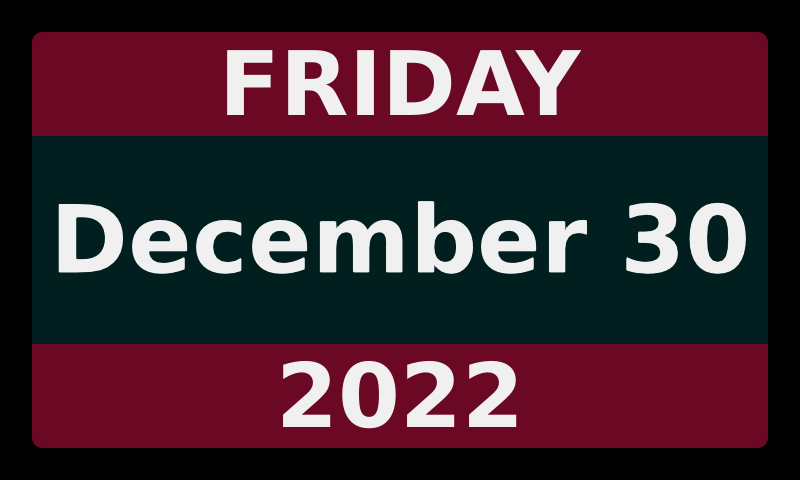

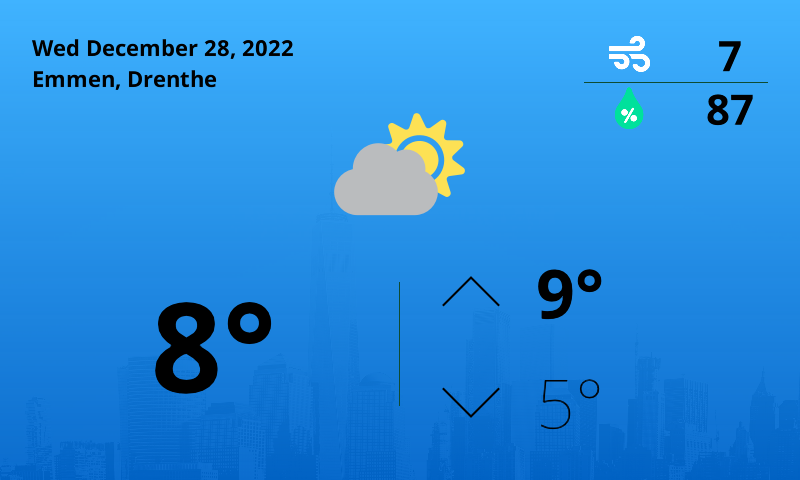

The OpenVoiceOS home screen is the central place for all your tasks. It is the first thing you will see after completing the onboarding process. It supports a variety of pre-defined widgets which provide you with a quick overview of information you need to know like the current date, time and weather. The home screen contains various features and integrations which you can learn more about in the following sections.

When an utterance is classified for its action and entities (e.g. 'turn on the kitchen lights' -> skill: home assistant, action: turn on/off, entity: kitchen lights).

MPRIS (Media Player Remote Interfacing Specification) is a standard D-Bus interface that aims to provide a common programmatic API for controlling media players. More Information

Primary configuration file for the voice assistant. Possible locations:
- /home/ovos/.local/lib/python3.9/site-packages/mycroft/configuration/mycroft.conf
- /etc/mycroft/mycroft.conf
- /home/ovos/.config/mycroft/mycroft.conf
- /etc/xdg/mycroft/mycroft.conf
- /home/ovos/.mycroft/mycroft.conf
More Information

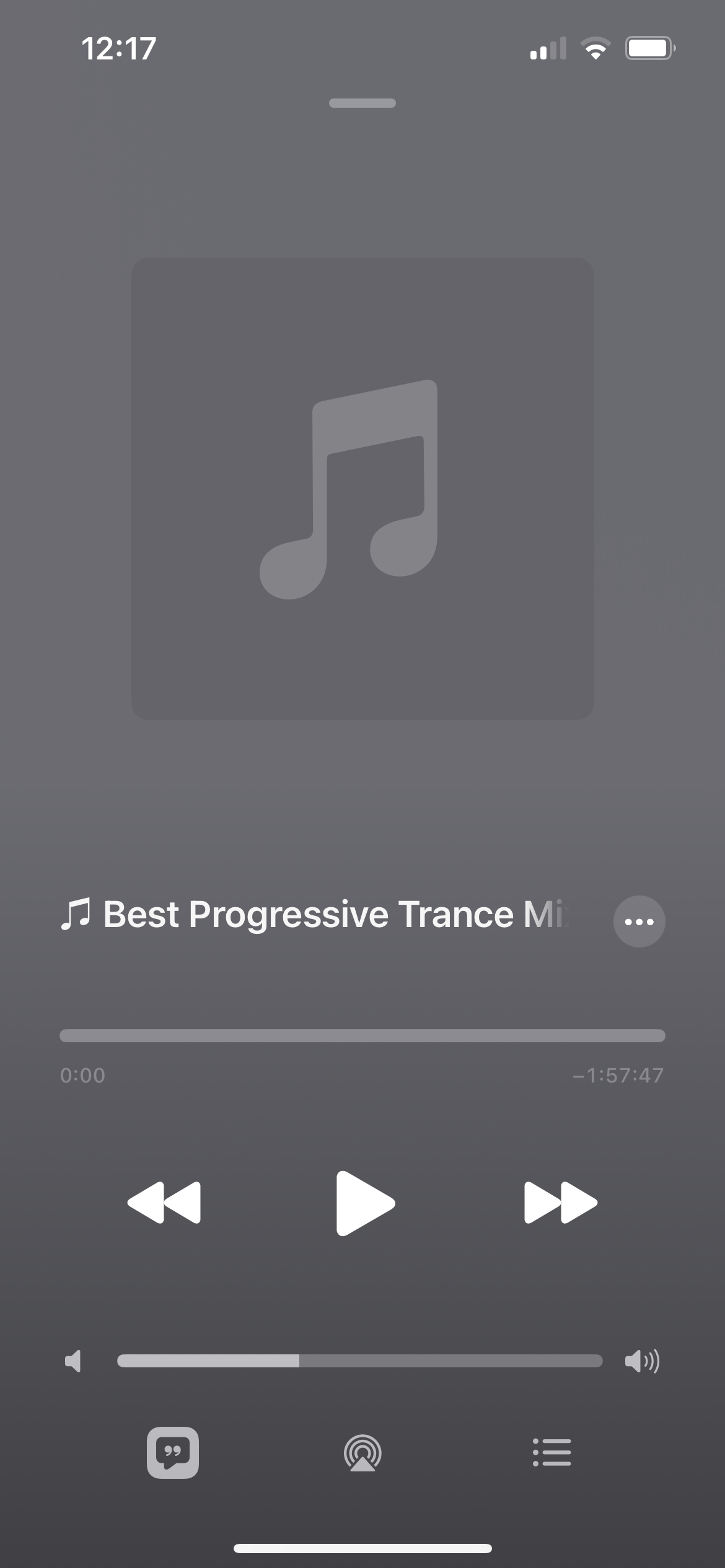

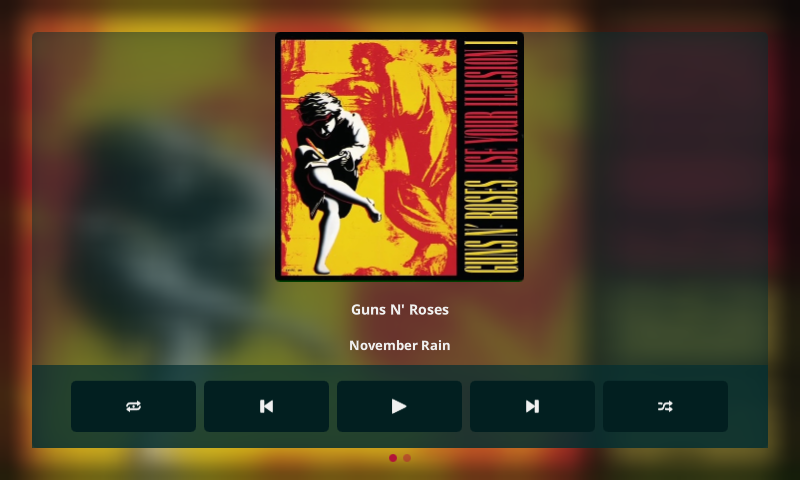

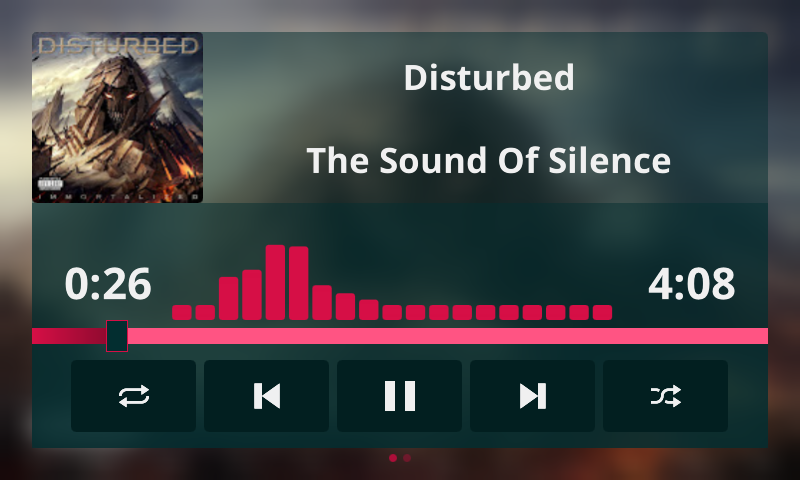

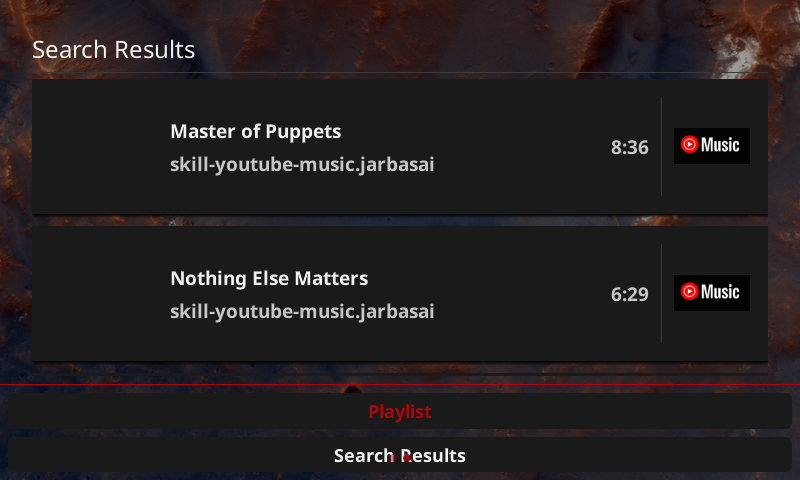

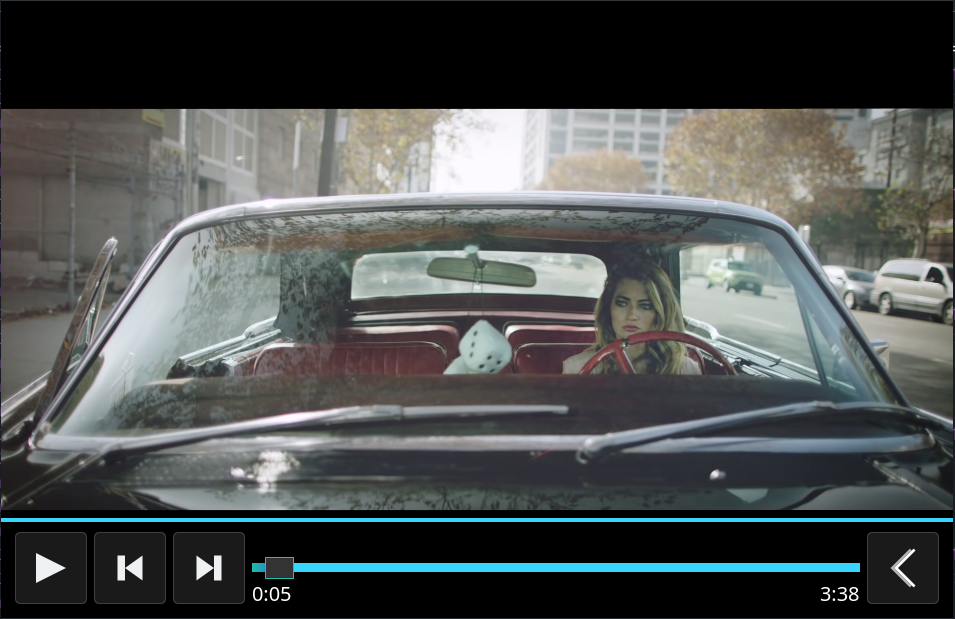

OCP stands for OpenVoiceOS Common Play; it is a full-fledged media player.

OCP is an OVOSAbstractApplication; this means it is a standalone but native OVOS application with full voice integration.

OCP differs from mycroft-core in several aspects:

The central repository where the voice assistant "brain" is developed.

OPM is the OVOS Plugin Manager; this base package provides arbitrary plugins to the OVOS ecosystem.

OPM plugins import their base classes from OPM, making them portable and independent from core; plugins can be used in your standalone projects.

By using OPM, you can ensure a standard interface to plugins and easily make them configurable in your project. Plugin code and example configurations are mapped to a string via Python entry points in setup.py.

Some projects using OPM are ovos-core, hivemind-voice-sat, ovos-personal-backend, ovos-stt-server, and ovos-tts-server.
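Because plugins are registered as standard Python entry points, any program can list what is installed with importlib.metadata. A plugin package would declare something like entry_points={"mycroft.plugin.tts": ["my-tts = my_tts_plugin:MyTTS"]} in its setup.py; the group string `mycroft.plugin.tts` and the names in that line are assumptions for illustration. The sketch below shows only the lookup side and is not OPM's own code.

```python
from importlib import metadata
from typing import Dict

# Assumed entry-point group for TTS plugins; check a plugin's setup.py.
TTS_GROUP = "mycroft.plugin.tts"


def discover_plugins(group: str = TTS_GROUP) -> Dict[str, str]:
    """Map installed entry-point names to their "module:attr" targets."""
    eps = metadata.entry_points()
    if hasattr(eps, "select"):  # Python 3.10+
        selected = eps.select(group=group)
    else:  # Python 3.8/3.9 return a plain dict of groups
        selected = eps.get(group, [])
    return {ep.name: ep.value for ep in selected}


if __name__ == "__main__":
    for name, target in discover_plugins().items():
        print(f"{name} -> {target}")
```

Mapping a short string to the plugin class is what lets a config file simply say "module": "ovos-tts-plugin-server".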

The GUI service in ovos-core will expose a websocket to the GUI client following the protocol outlined here.

The GUI library that implements the protocol lives in the mycroft-gui repository. The repository also hosts a development client for skill developers who want to develop on the desktop.

OVOS-shell is the OpenVoiceOS client implementation of the mycroft-gui library used in our embedded device images. Other distributions may offer alternative implementations, such as plasma-bigscreen or Mycroft Mark II.

OVOS-shell is tightly coupled to PHAL; the following companion plugins should be installed if you are using ovos-shell:

Physical Hardware Abstraction Layer: PHAL is our Platform/Hardware Abstraction Layer. It completely replaces the concept of a hardcoded "enclosure" from mycroft-core.

Any number of plugins providing functionality can be loaded and validated at runtime. Plugins can be system integrations that handle things like reboot and shutdown, or hardware drivers such as the Mycroft Mark II plugin.

PHAL plugins can perform actions such as hardware detection before loading; e.g., the Mark II plugin will not load if it does not detect the SJ201 HAT. This makes plugins safe to install and bundle by default in our base images.
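The "validate before loading" pattern can be sketched in a few lines. All classes here are hypothetical; real PHAL plugins have their own validation mechanism, which this does not reproduce. The `detected` flag stands in for actually probing for the SJ201 board.

```python
from typing import List, Type


class HardwarePlugin:
    """Hypothetical base class: subclasses report whether their hardware exists."""

    name = "base"

    @classmethod
    def validate(cls) -> bool:
        return True  # plugins without special hardware always load

    def start(self) -> str:
        return f"{self.name} started"


class Mark2Plugin(HardwarePlugin):
    name = "mark2"
    detected = False  # stand-in for probing for the SJ201 board

    @classmethod
    def validate(cls) -> bool:
        return cls.detected


class SystemPlugin(HardwarePlugin):
    name = "system"  # e.g. reboot/shutdown integration


def load_plugins(candidates: List[Type[HardwarePlugin]]) -> List[HardwarePlugin]:
    """Instantiate only the plugins whose hardware check passes."""
    return [plugin_cls() for plugin_cls in candidates if plugin_cls.validate()]


print([p.name for p in load_plugins([Mark2Plugin, SystemPlugin])])  # ['system']
```

Since a failed check simply skips the plugin, the same image can bundle drivers for boards that are not present.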

The smallest phonetic unit in a language that is capable of conveying a distinction in meaning, as the m of mat and the b of bat in English.

Snapcast is a multiroom client-server audio player, where all clients are time-synchronized with the server to play perfectly synced audio. It's not a standalone player, but an extension that turns your existing audio player into a Sonos-like multiroom solution. More Information

You can think of Prompts as questions, and Statements as providing information to the user that does not need a follow-up response.

QML (Qt Modeling Language), the language for Qt Quick UIs. More Information

The Mycroft GUI Framework uses QML.

Speech To Text: also known as ASR (automatic speech recognition), the process of converting audio into words.

Text To Speech: the process of generating the audio for the responses.

Command, question, or query from a user (e.g. 'turn on the kitchen lights').

A specific word or phrase trained to activate the STT (e.g. 'hey mycroft').

XDG stands for "Cross-Desktop Group", and it's a way to help with compatibility between systems. More Information

OVOS has been confirmed to run on several devices, with more to come.

Recommendations and notes on speakers and microphones

Most audio devices can be used with the help of plugins and should, for the most part, work by default.

If your device does not work, pop in to our Matrix support channel, or create an issue or start a discussion about your device.

Most USB devices should work without any issues, but not all devices are created equal.

HDMI audio should work without issues if your device supports it.

Analog output to headphones or external speakers should also work. Some devices may need some configuration.

Audio Troubleshooting - Analog

There are several HATs available, some with just a microphone, others that also play audio. Several are supported and tested; others should work with the proper configuration.

Some of these require a /boot/config.txt modification. Some special sound boards are also supported.

Mark 1 custom sound board (requires a /boot/config.txt modification)

If your device supports video out, you can use a screen on your device. (The RPi 3/3B/3B+ cannot run the OVOS GUI, ovos-shell, due to lack of processing power, but you can access a command prompt on a locally connected screen.)

OVOS supports touchscreen interaction, but not all touchscreens are created equal. It has been noted that on some USB touchscreens, the touch matrix is not synced with the OVOS display, and an X11 setup with a window manager is required to adjust the settings.

WIP

The home screen is the central place for all your tasks. It is the first thing you will see after completing the onboarding process. It supports a variety of pre-defined widgets which provide you with a quick overview of information you need to know like the current date, time and weather. The home screen contains various features and integrations which you can learn more about in the following sections.

The Night Mode feature lets you quickly switch your home screen into a dark standby clock, reducing the amount of light emitted by your device. This is especially useful if you are using your device in a dark room or at night. You can enable the night mode feature by tapping on the left edge pill button on the home screen.

The Quick Actions Dashboard provides you with a card-based interface to quickly access and add your most used actions. The Quick Actions dashboard comes with a variety of pre-defined actions like the ability to quickly add a new alarm, start a new timer or add a new note. You can also add your own custom actions to the dashboard by tapping on the plus button in the top right corner of the dashboard. The Quick Actions dashboard is accessible by tapping on the right edge pill button on the home screen.

OpenVoiceOS comes with support for dedicated voice applications. Voice Applications can be dedicated skills or PHAL plugins, providing their own dedicated user interface. The application launcher will show you a list of all available voice applications. You can access the application launcher by tapping on the center pill button on the bottom of the home screen.

The home screen supports custom wallpapers and comes with a number of wallpapers to choose from. You can easily change your wallpaper by swiping from right to left on the home screen.

The notifications widget provides you with a quick overview of all your notifications. The notifications bell icon will be displayed in the top left corner of the home screen. You can access the notifications overview by tapping on the bell icon when it is displayed.

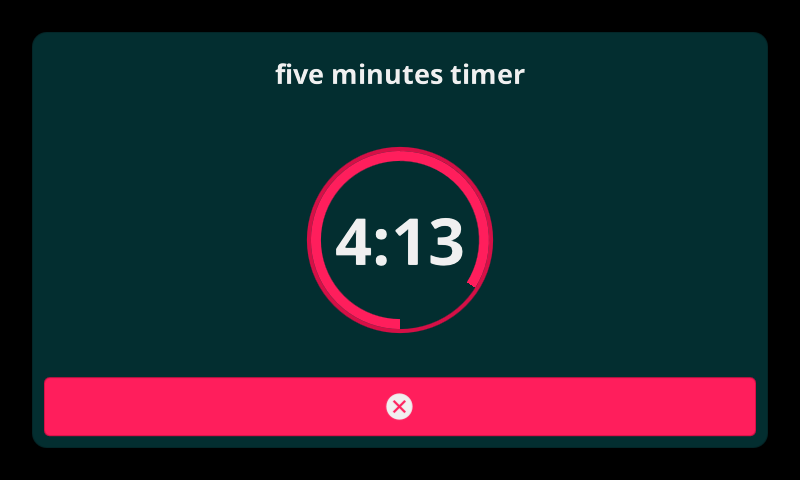

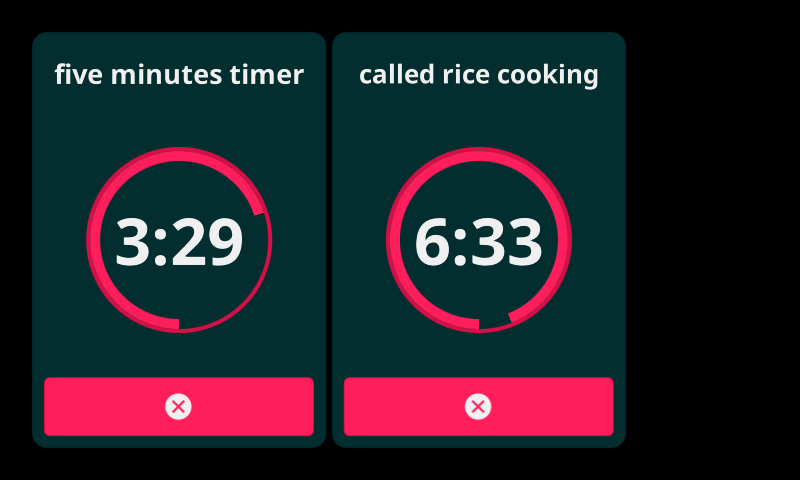

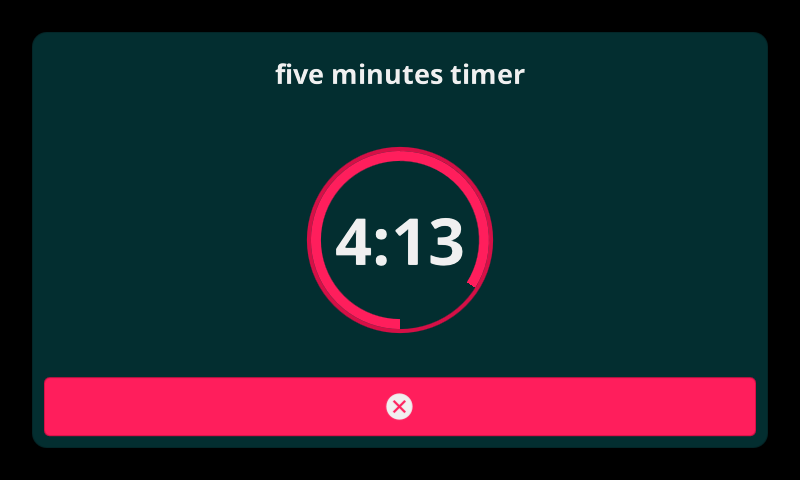

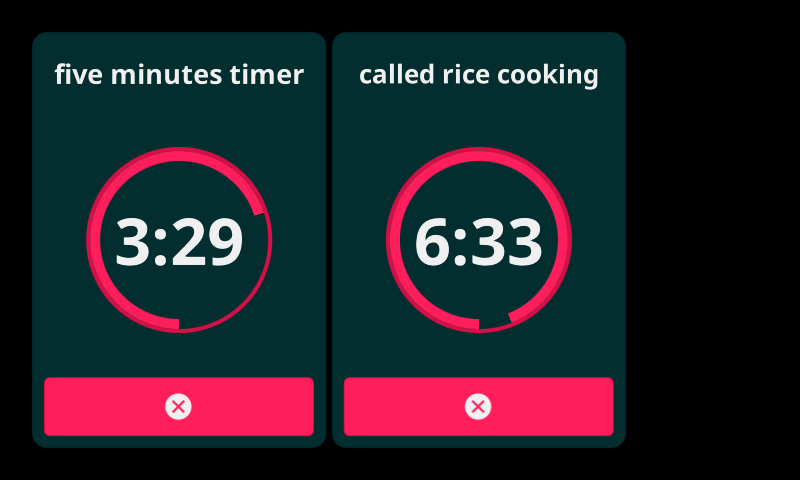

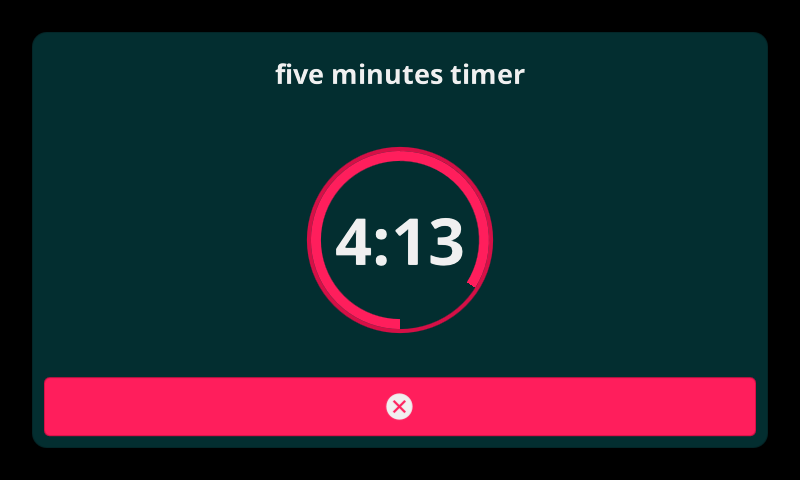

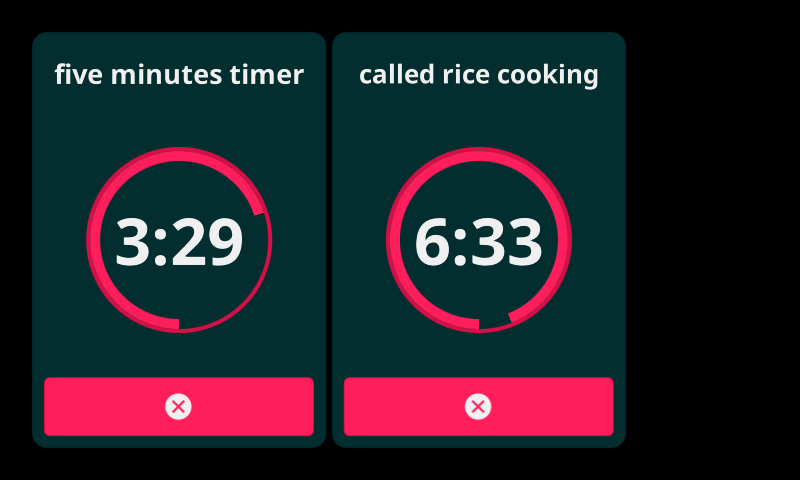

The timer widget is displayed in the top left corner, after the notifications bell icon. It will show up when you have an active timer running. Clicking on the timer widget will open the timers overview.

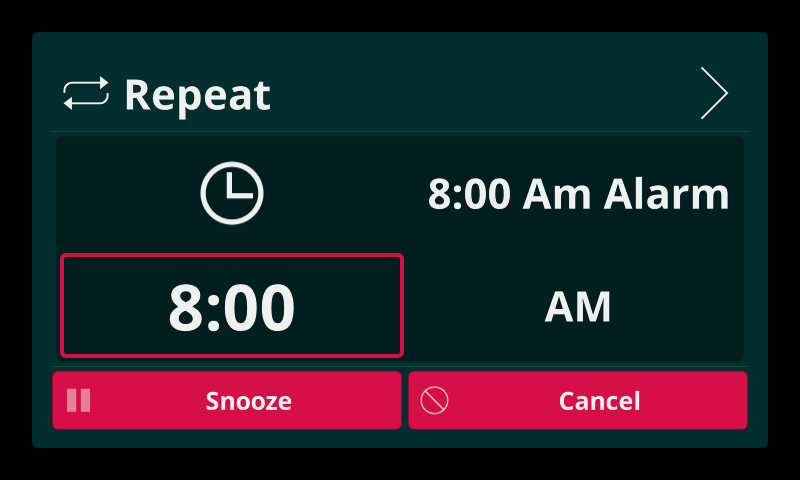

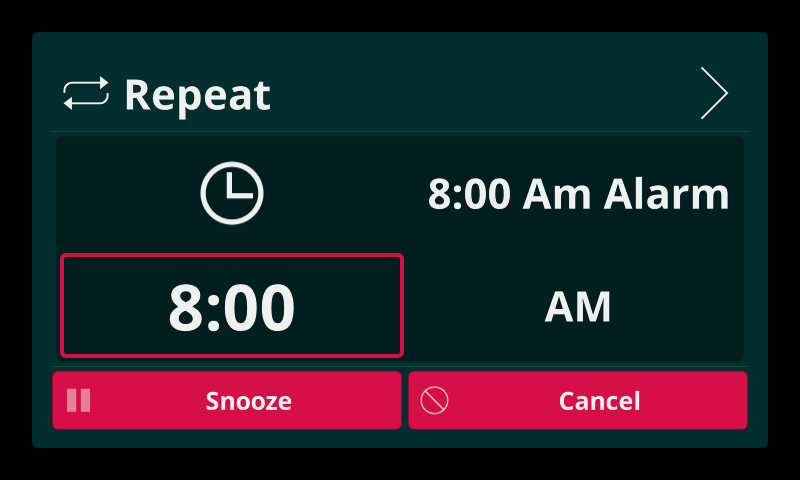

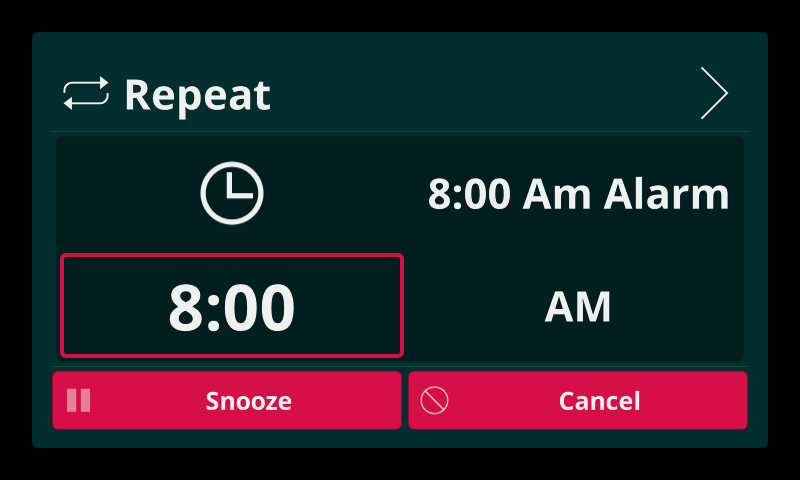

+The alarm widget is displayed in top left corner after the timer widget. It will show up when you have an active alarm set. Clicking on the alarm widget will open the alarms overview.

The media player widget is displayed at the bottom of the home screen. It replaces the examples widget when a media player is active. The media player widget shows the currently playing media and provides a quick way to pause, resume or skip it. You can also open the media player by tapping the quick display media player button on the right side of the widget.

The home screen has several customizations available. This is a sample settings.json file with all of the available options:

```json
{
  "__mycroft_skill_firstrun": false,
  "weather_skill": "skill-weather.openvoiceos",
  "datetime_skill": "skill-date-time.mycroftai",
  "examples_skill": "ovos-skills-info.openvoiceos",
  "wallpaper": "default.jpg",
  "persistent_menu_hint": false,
  "examples_enabled": true,
  "randomize_examples": true,
  "examples_prefix": true
}
```

Most of our guides have you create a user called ovos with a password of ovos. While this makes installation easy, it is VERY insecure. As soon as possible, you should secure SSH with a key and disable password authentication.

Create a keyfile (you can change ovos to whatever you want):

ssh-keygen -t ed25519 -f ~/.ssh/ovos

Copy it to the host (use the same filename as above, and specify the user and hostname you are using):

ssh-copy-id -i ~/.ssh/ovos ovos@mycroft

On your desktop, edit ~/.ssh/config and add the following lines (the Host entry should match the hostname you connect to):

```
Host mycroft
    User ovos
    IdentityFile ~/.ssh/ovos
```

On your OVOS system, edit /etc/ssh/sshd_config and add or uncomment the following line:

PasswordAuthentication no

Then restart sshd (or reboot):

sudo systemctl restart sshd
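To double-check the edit, here is a minimal Python sketch that reads sshd_config text and reports whether password logins are disabled. It is an illustration, not an OVOS tool. It only looks at the text you pass in and does not follow Include directives (many distributions include /etc/ssh/sshd_config.d/*.conf, and those values can take precedence), so `sudo sshd -T` remains the authoritative view of the effective configuration.

```python
import re


def password_auth_disabled(config_text: str) -> bool:
    """Return True if the first PasswordAuthentication directive is "no".

    sshd uses the first value it obtains for a keyword, so later duplicates
    are ignored here too. Comments are stripped, and scanning stops at the
    first Match block because directives inside it are conditional.
    Include directives are NOT followed.
    """
    for raw in config_text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        # Keywords may be separated from their argument by spaces or "=".
        parts = re.split(r"[\s=]+", line, maxsplit=1)
        keyword = parts[0].lower()  # keywords are case-insensitive
        if keyword == "match":
            break
        if keyword == "passwordauthentication" and len(parts) == 2:
            return parts[1].strip() == "no"
    # Not set at all: OpenSSH defaults to "PasswordAuthentication yes".
    return False
```

Usage: `password_auth_disabled(Path("/etc/ssh/sshd_config").read_text())` (with `from pathlib import Path`) should return `True` once the line above is in place.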

Anything connected to the bus can fully control OVOS, and OVOS usually has full control over the whole system!

You can read more about the security issues over at Nhoya/MycroftAI-RCE.

In mycroft-core, all skills share a bus connection. This allows malicious skills to manipulate it and affect other skills.

You can see a demonstration of this problem with BusBrickerSkill.

Setting "shared_connection": false ensures each skill gets its own websocket connection and avoids this problem.

Additionally, it is recommended that you set "host": "127.0.0.1"; this ensures that no connections from outside the device are allowed.
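Both recommendations can be checked programmatically. The sketch below assumes that "host" and "shared_connection" live in the "websocket" section of mycroft.conf and that a missing "shared_connection" means the shared default; both are assumptions, so confirm them against your ovos-core version. It inspects an already-loaded config dict and does not connect to the bus.

```python
import ipaddress


def bus_hardening_issues(config: dict) -> list[str]:
    """List ways the given config leaves the messagebus exposed (sketch).

    Assumes both keys sit under "websocket" and that a missing
    "shared_connection" means the shared (unsafe) behaviour.
    """
    ws = config.get("websocket", {})
    issues = []

    host = ws.get("host", "")
    try:
        loopback = host == "localhost" or ipaddress.ip_address(host).is_loopback
    except ValueError:  # empty, or a hostname other than "localhost"
        loopback = False
    if not loopback:
        issues.append(f"websocket host {host!r} is not a loopback address")

    if ws.get("shared_connection", True) is not False:
        issues.append('"shared_connection" is not set to false')
    return issues


hardened = {"websocket": {"host": "127.0.0.1", "shared_connection": False}}
print(bus_hardening_issues(hardened))  # []
```

Binding to a loopback address (127.0.0.1 or ::1) means only processes on the device itself can reach the bus, which is why the check treats anything else as an issue.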

This section is provided as a basic Q&A for common questions.

The listener is responsible for loading STT, VAD and Wake Word plugins.

Speech is transcribed into text and forwarded to the skills service.

The newest listener that OVOS uses is ovos-dinkum-listener. It is a version of the listener from Mycroft's Dinkum software for the Mark 2, modified for use with OVOS.

OVOS uses microphone plugins to support different setups and devices.

NOTE: Only ovos-dinkum-listener has this support.

The default plugin that OVOS uses is ovos-microphone-plugin-alsa, and in most cases it should work fine.

If you are running OVOS on a Mac, you need a different plugin to access the audio: ovos-microphone-plugin-sounddevice.

OVOS microphone plugins are available on PyPI:

pip install ovos-microphone-plugin-sounddevice

or

pip install --pre ovos-microphone-plugin-sounddevice

for the latest alpha versions.

NOTE: The alpha versions may be needed until the release of ovos-core 0.1.0.

| Plugin | Usage |
|---|---|
| ovos-microphone-plugin-alsa | Default plugin - should work in most cases |
| ovos-microphone-plugin-sounddevice | This plugin is needed when running OVOS on a Mac but also works on other platforms |
| ovos-microphone-plugin-socket | Used to connect a websocket microphone for remote usage |
| ovos-microphone-plugin-files | Will use a file as the voice input instead of a microphone |
| ovos-microphone-plugin-pyaudio | Uses PyAudio for audio processing |
| ovos-microphone-plugin-arecord | Uses arecord to get input from the microphone. In some cases this may be faster than the default alsa plugin |

Microphone plugin configuration is located under the top-level "listener" section.

```json
{
  "listener": {
    "microphone": {
      "module": "ovos-microphone-plugin-alsa",
      "ovos-microphone-plugin-alsa": {
        "device": "default"
      }
    }
  }
}
```

The only required key is "module"; the plugin will then use its default values.

The "device" value is used when you have several microphones attached, to specify which one to use.

Specific plugins may have other values that can be set. Check the GitHub repo of each plugin for more details.
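When adding a snippet like the one above to an existing user config, it is easy to overwrite other sections by accident. The following is a minimal sketch of a recursive merge into ~/.config/mycroft/mycroft.conf. It assumes the file contains plain JSON without comments (json.loads rejects comments), and it is not OVOS's own configuration loader.

```python
import json
from pathlib import Path

# User configuration file referenced elsewhere in these docs.
USER_CONF = Path.home() / ".config" / "mycroft" / "mycroft.conf"


def deep_merge(base: dict, update: dict) -> dict:
    """Return a new dict with `update` merged recursively into `base`."""
    merged = dict(base)
    for key, value in update.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value  # scalars and lists are replaced, not merged
    return merged


def apply_snippet(snippet: dict, path: Path = USER_CONF) -> dict:
    """Merge `snippet` into the JSON file at `path`, creating it if needed."""
    text = path.read_text().strip() if path.exists() else ""
    current = json.loads(text) if text else {}
    merged = deep_merge(current, snippet)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(merged, indent=2) + "\n")
    return merged


# The microphone example, expressed as a Python dict.
MIC_SNIPPET = {
    "listener": {
        "microphone": {
            "module": "ovos-microphone-plugin-alsa",
            "ovos-microphone-plugin-alsa": {"device": "default"},
        }
    }
}
```

Calling `apply_snippet(MIC_SNIPPET)` keeps unrelated keys such as "listener" → "wake_word" or a whole "stt" section intact, which is the main reason to merge rather than overwrite the file.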

PHAL is our Platform/Hardware Abstraction Layer. It completely replaces the concept of the hardcoded "enclosure" from mycroft-core.

Any number of plugins providing functionality can be loaded and validated at runtime. Plugins can be system integrations that handle things like reboot and shutdown, or hardware drivers such as the Mycroft Mark 2 plugin.

PHAL plugins can perform actions such as hardware detection before loading; e.g., the Mark 2 plugin will not load if it does not detect the SJ-201 HAT. This makes plugins safe to install and bundle by default in our base images.

Platform/hardware-specific integrations are loaded by PHAL; these plugins can handle all sorts of system activities.

| Plugin | Description |
|---|---|
| ovos-PHAL-plugin-alsa | volume control |
| ovos-PHAL-plugin-system | reboot / shutdown / factory reset |
| ovos-PHAL-plugin-mk1 | mycroft mark1 integration |
| ovos-PHAL-plugin-mk2 | mycroft mark2 integration |
| ovos-PHAL-plugin-respeaker-2mic | respeaker 2mic hat integration |
| ovos-PHAL-plugin-respeaker-4mic | respeaker 4mic hat integration |
| ovos-PHAL-plugin-wifi-setup | wifi setup (central plugin) |
| ovos-PHAL-plugin-gui-network-client | wifi setup (GUI interface) |
| ovos-PHAL-plugin-balena-wifi | wifi setup (hotspot) |
| ovos-PHAL-plugin-network-manager | wifi setup (network manager) |
| ovos-PHAL-plugin-brightness-control-rpi | brightness control |
| ovos-PHAL-plugin-ipgeo | automatic geolocation (IP address) |
| ovos-PHAL-plugin-gpsd | automatic geolocation (GPS) |
| ovos-PHAL-plugin-dashboard | dashboard control (ovos-shell) |
| ovos-PHAL-plugin-notification-widgets | system notifications (ovos-shell) |
| ovos-PHAL-plugin-color-scheme-manager | GUI color schemes (ovos-shell) |
| ovos-PHAL-plugin-configuration-provider | UI to edit mycroft.conf (ovos-shell) |
| ovos-PHAL-plugin-analog-media-devices | video/audio capture devices (OCP) |

AdminPHAL performs the exact same function as PHAL, but plugins it loads will have root privileges.

This service is intended for handling any OS-level interactions requiring escalation of privileges. Be very careful when installing admin plugins and scrutinize them closely.

NOTE: Because this service runs as root, the plugins it loads are responsible for not writing configuration changes that would break config file permissions.

Admin plugins are just like regular PHAL plugins, except that they run with root privileges.

Admin plugins will only load if their configuration contains "enabled": true. All admin plugins need to be explicitly enabled.
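The opt-in rule can be illustrated with a small filter. This sketch assumes admin plugin settings sit under "PHAL" → "admin" in mycroft.conf (an assumption to verify against your ovos-PHAL version), and it treats only a real JSON boolean `true` as enabled. It is not AdminPHAL's actual loader; the plugin names in the example are for illustration only.

```python
def enabled_admin_plugins(config: dict) -> list[str]:
    """Names of admin plugins whose config contains "enabled": true."""
    admin = config.get("PHAL", {}).get("admin", {})
    return [name for name, settings in admin.items()
            if isinstance(settings, dict) and settings.get("enabled") is True]


example = {
    "PHAL": {
        "admin": {
            "ovos-PHAL-plugin-system": {"enabled": True},  # will load
            "hypothetical-admin-plugin": {},               # skipped: not enabled
        }
    }
}
print(enabled_admin_plugins(example))  # ['ovos-PHAL-plugin-system']
```

Defaulting to "off" means that merely installing an admin plugin package never gives it root access; you have to opt in explicitly.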

You can find plugin packaging documentation here.

Skills give OVOS the ability to perform a variety of functions. They can be installed or removed by the user, and can be easily updated to expand functionality. To get a good idea of what skills to build, let’s talk about the best use cases for a voice assistant, and what types of things OVOS can do.

OVOS can run on a variety of platforms, from the Linux desktop to Single Board Computers (SBCs) like the Raspberry Pi. Different devices will have slightly different use cases. Devices in the home are generally located in the living room or kitchen and are ideal for listening to the news, playing music, general information, using timers while cooking, checking the weather, and other similar activities that are easily accomplished hands-free.

We cover a lot of the basics with our Default Skills: things like Timers, Alarms, Weather, Time and Date, and more.

General Question and Answer covers all of those factual questions someone might think to ask a voice assistant, such as “who was the 32nd President of the United States?” or “how tall is the Eiffel Tower?” Although the Default Skills cover a great deal of questions, there is room for more. Many topics could use a specific skill, such as science, academics, movie info, TV info, and music info.

One of the biggest use cases for smart speakers is playing media. Media playback is so popular because it makes playing a song so easy: all you have to do is say “Hey Mycroft, play the Beatles,” and you can be enjoying music without having to reach for a phone or remote. In addition to music, there are skills that handle videos as well.

Much like listening to music, getting the latest news with a simple voice interaction is extremely convenient. OVOS supports multiple news feeds, and can support multiple news skills.

Another popular use case for voice assistants is controlling smart home and IoT products. Within the OVOS ecosystem there are skills for Home Assistant, Wink IoT, Lifx and more, but there are many products that we do not have a skill for yet. The open source community has been enthusiastically expanding OVOS's ability to voice control all kinds of smart home products.

Voice games are becoming more and more popular, especially those that allow multiple users to play together. Trivia games are some of the most popular types of games to develop for voice assistants. There are several games already available for OVOS: native voice adventure games, ports of the popular text adventure games from Infocom, a Crystal Ball game, a Number Guessing game and much more!

Your OpenVoiceOS device comes with certain skills pre-installed for basic functionality out of the box. You can also install new skills; more about that later.

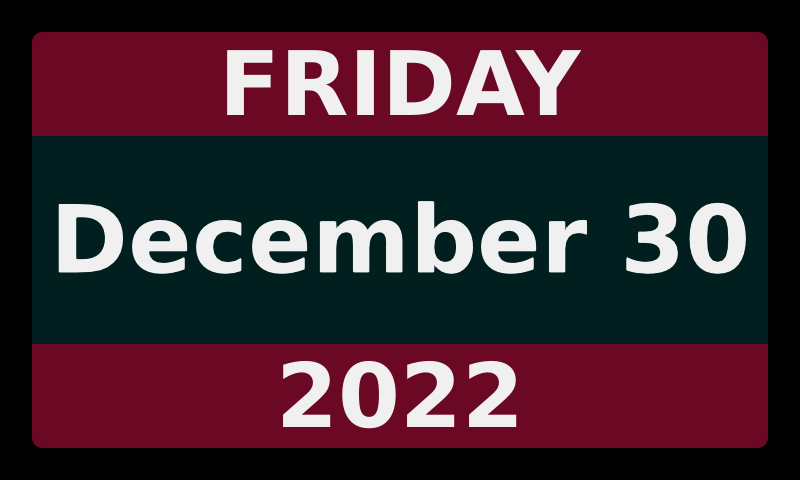

You can ask your device what time or date it is, just in case you lost your watch.

Hey Mycroft, what time is it?

Hey Mycroft, what is the date?

Having your OpenVoiceOS device know and show the time is great, but it is even better to be woken up in the morning by your device.

Hey Mycroft, set an alarm for 8 AM.

Sometimes you are just busy but want to be alerted after a certain time. For that you can use timers.

Hey Mycroft, set a timer for 5 minutes.

You can always set more timers and even name them, so you know which timer is for what.

Hey Mycroft, set another timer called rice cooking for 7 minutes.

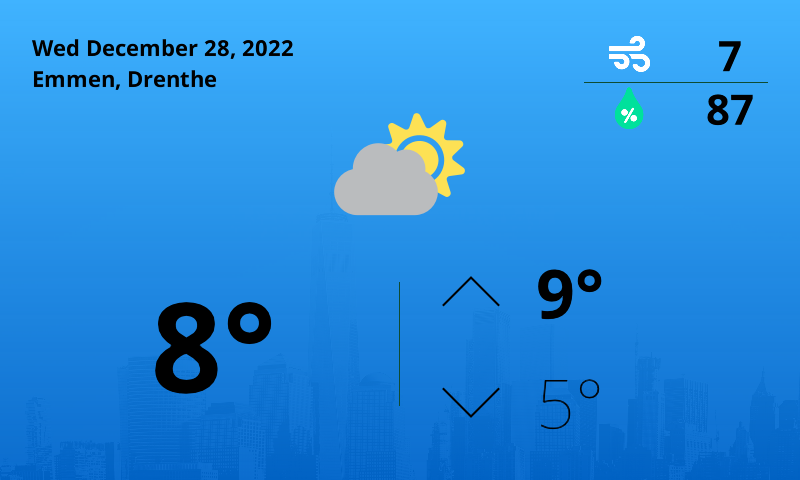

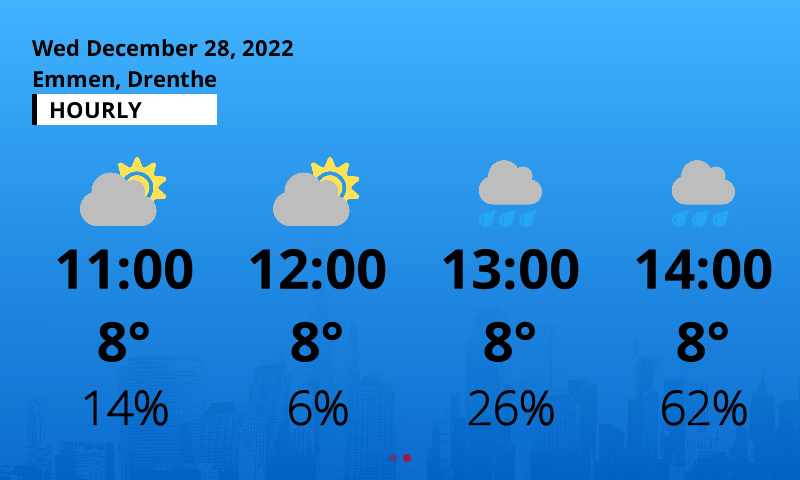

You can ask your device what the weather is or would be at any given time or place.

+++Hey Mycroft, what is the weather like today?

+

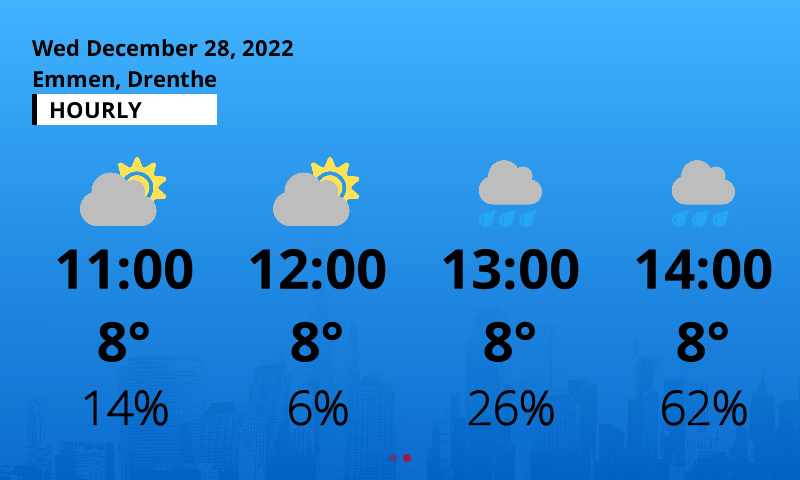

The weather skill actually uses multiple pages indicated by the small dots at the bottom of the screen.

+

There are more installed, just try. If you don't get the response you expected, see the section on installing new skills

Each skill will have its own config file, usually located at ~/.local/share/mycroft/skills/<skill_id>/settings.json

Skill settings provide the ability for users to configure a Skill using the command line or a web-based interface.

This is often used to:

Skill settings are completely optional.

Refer to each skill repository for valid configuration values.
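Because settings live in a plain JSON file at the path above, they can be read and edited from a script. The sketch below assumes that default location and that the file is plain JSON. Valid keys are skill-specific (check the skill's repository), and whether a running skill picks up an edit without a restart depends on the skill, so treat that as an assumption too.

```python
import json
from pathlib import Path

# Default location: ~/.local/share/mycroft/skills/<skill_id>/settings.json
SKILLS_DIR = Path.home() / ".local" / "share" / "mycroft" / "skills"


def settings_path(skill_id: str, skills_dir: Path = SKILLS_DIR) -> Path:
    return skills_dir / skill_id / "settings.json"


def load_skill_settings(skill_id: str, skills_dir: Path = SKILLS_DIR) -> dict:
    """Return the skill's settings, or {} if it has no settings file yet."""
    path = settings_path(skill_id, skills_dir)
    return json.loads(path.read_text()) if path.exists() else {}


def update_skill_setting(skill_id: str, key: str, value,
                         skills_dir: Path = SKILLS_DIR) -> dict:
    """Set one key in the skill's settings.json, keeping the other keys."""
    settings = load_skill_settings(skill_id, skills_dir)
    settings[key] = value
    path = settings_path(skill_id, skills_dir)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(settings, indent=2))
    return settings
```

For example, `update_skill_setting("skill-weather.openvoiceos", "some_option", True)` would write a hypothetical `some_option` key; replace it with a key the skill actually documents.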

This section will help you understand what a skill is and how to install and use skills with OVOS.

Official OVOS skills can be found on PyPI, and the latest stable version can be installed with a pip install command:

pip install ovos-skill-naptime

If you have issues installing with this command, you may need to use the alpha versions. pip has a command-line flag for this: --pre.

pip install --pre ovos-skill-naptime

will install the latest alpha version. This should fix dependency issues with the stable versions.

Most skills are found throughout GitHub. The official skills can be found with a simple search on the OVOS GitHub page. There are a few other places they can be found: Neon AI has several skills, and a search through GitHub will surely find more.

There are a few ways to install skills in OVOS.

The preferred method is with pip. If a skill has a setup.py file, it can be installed this way.

The syntax is pip install git+<github/repository.git>.

For example, pip install git+https://github.com/OpenVoiceOS/skill-ovos-date-time.git should install the ovos-date-time skill.

Skills can also be installed from a local directory. First clone the repository:

git clone https://github.com/OpenVoiceOS/skill-ovos-date-time

then install it:

pip install ./skill-ovos-date-time

After installing skills this way, the ovos-skills service needs to be restarted:

systemctl --user restart ovos-skills

This is NOT the preferred method and is here for backward compatibility with the original mycroft-core skills.

Skills can also be cloned directly into the skill directory, usually located at ~/.local/share/mycroft/skills/.

Enter the skill directory:

cd ~/.local/share/mycroft/skills

and clone the skill there with git:

git clone <github/repository.git>

For example, git clone https://github.com/OpenVoiceOS/skill-ovos-date-time.git will install the ovos-date-time skill.

A restart of the ovos-skills service is not required when installing this way.
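Since the same directory also holds settings.json folders for pip-installed skills, it can be useful to see which entries are actual cloned skills. This sketch assumes that a legacy (cloned) skill is a folder with an `__init__.py` at its root, as classic mycroft-core skills were; a folder holding only settings.json is treated as settings storage rather than a skill.

```python
from pathlib import Path

SKILLS_DIR = Path.home() / ".local" / "share" / "mycroft" / "skills"


def legacy_skill_folders(skills_dir: Path = SKILLS_DIR) -> list[str]:
    """Names of folders that look like cloned skills (have __init__.py)."""
    if not skills_dir.is_dir():
        return []
    return sorted(p.name for p in skills_dir.iterdir()
                  if p.is_dir() and (p / "__init__.py").is_file())


if __name__ == "__main__":
    for name in legacy_skill_folders():
        print(name)
```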

The OVOS skills manager is in need of some love, and when official skill stores are created, this section will be updated to use the new methods. Until then, this method is NOT recommended and NOT supported. The following is included just for reference.

Install skills from any appstore!

The mycroft-skills-manager alternative that is not vendor locked; this means you must use it responsibly!

Do not install random skills; different appstores have different policies!

Keep in mind that any skill you install can modify mycroft-core at runtime, and very likely has root access if you are running on a Raspberry Pi.

pip install ovos-skills-manager

Enable a skill store:

osm enable --appstore [ovos|mycroft|pling|andlo|all]

Search for a skill and install it:

osm install --search

See more osm commands:

osm --help

osm install --help

STT (Speech to Text) converts your voice into text, which OVOS then matches to an intent that is used to activate skills.

Several STT engines are available. By default, OVOS uses ovos-stt-plugin-server with a list of public servers hosted by OVOS community members.

| Plugin | Offline | Type |
|---|---|---|
| ovos-stt-plugin-vosk | yes | FOSS |
| ovos-stt-plugin-chromium | no | API (free) |
| neon-stt-plugin-google_cloud_streaming | no | API (key) |
| neon-stt-plugin-scribosermo | yes | FOSS |
| neon-stt-plugin-silero | yes | FOSS |
| neon-stt-plugin-polyglot | yes | FOSS |
| neon-stt-plugin-deepspeech_stream_local | yes | FOSS |
| ovos-stt-plugin-selene | no | API (free) |
| ovos-stt-plugin-http-server | no | API (self hosted) |
| ovos-stt-plugin-pocketsphinx | yes | FOSS |

Many STT engines have their own configuration settings for optimizing their use; for example, some let you specify which STT server to use.

We will cover basic configuration of the default STT engine, ovos-stt-plugin-server.

All changes will be made in the user configuration file, e.g. ~/.config/mycroft/mycroft.conf

Open the file for editing: nano ~/.config/mycroft/mycroft.conf

If your file is empty, or does not have an "stt" section, you need to create it. Add this to your config:

```json
{
  "stt": {
    "module": "ovos-stt-plugin-server",
    "fallback_module": "ovos-stt-plugin-vosk",
    "ovos-stt-plugin-server": {
      "url": "https://fasterwhisper.ziggyai.online/stt"
    },
    "ovos-stt-plugin-vosk": {}
  }
}
```

By default, the language configured in OVOS will be used. In the future (WIP), OVOS should detect the spoken language and convert it as necessary.

"module" - This is where you specify which STT module to use.

"fallback_module" - If your first STT engine fails, OVOS will try to use this one. It is usually configured to use an on-device engine so that you always have some output, even when you are disconnected from the internet.

"ovos-stt-plugin-server"

"ovos-stt-plugin-vosk" - Specify plugin-specific settings here. Multiple entries are allowed. If an empty dict ({}) is provided, the plugin will use its default values.

Refer to each STT plugin's GitHub repository for its specific settings.
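A single missing comma is enough to make the whole user config unreadable, so it can help to check the "stt" section before restarting. This is a minimal sketch of such a check, assuming the file is plain JSON (comments would be reported as invalid). The rules it applies (a "module" is required, the fallback should differ from it, per-plugin settings are objects) come from the description above and are not an official OVOS validator.

```python
import json


def check_stt_config(config: dict) -> list[str]:
    """Return a list of problems in the "stt" section (empty list = OK)."""
    stt = config.get("stt")
    if not isinstance(stt, dict):
        return ['missing "stt" section']
    problems = []
    module = stt.get("module")
    fallback = stt.get("fallback_module")
    if not module:
        problems.append('"stt" has no "module"')
    if fallback and fallback == module:
        problems.append('"fallback_module" is the same as "module"')
    for name in (module, fallback):
        # Per-plugin settings are optional, but if present must be objects.
        if name and not isinstance(stt.get(name, {}), dict):
            problems.append(f'settings for "{name}" must be an object')
    return problems


def check_stt_text(text: str) -> list[str]:
    """Parse mycroft.conf text first, so syntax errors are reported too."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:  # e.g. a missing comma
        return [f"invalid JSON: {exc}"]
    if not isinstance(config, dict):
        return ["top level must be a JSON object"]
    return check_stt_config(config)
```

Usage: `check_stt_text(Path.home().joinpath(".config/mycroft/mycroft.conf").read_text())` (with `from pathlib import Path`) returns an empty list when the section looks sane.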

TTS plugins are responsible for converting text into audio for playback. Several options are available, each with different attributes and supported languages. Some can be run on device; others need an internet connection to work.

As with most OVOS packages, the TTS plugins are available on PyPI and can be installed with pip install:

pip install ovos-tts-plugin-piper

will install the latest stable version. If there are installation errors, you can install the latest alpha versions of the plugins.

pip install --pre ovos-tts-plugin-piper

By default, OVOS uses ovos-tts-server-plugin and a series of public TTS servers, provided by OVOS community members, to send speech to your device. If you host your own TTS server, or this option is not acceptable to you, there are many other options to use.


Advanced TTS Plugin Documentation

Many TTS engines have their own configuration settings for optimizing their use. Some offer different voices, or let you specify a TTS server to use.

We will cover basic configuration of the default TTS engine, ovos-tts-server-plugin.

All changes will be made in the user configuration file, e.g. ~/.config/mycroft/mycroft.conf.

Open the file for editing: nano ~/.config/mycroft/mycroft.conf.

If your file is empty, or does not have a "tts" section, you need to create it. Add this to your config:

```json
{
  "tts": {
    "module": "ovos-tts-server-plugin",
    "fallback_module": "ovos-tts-plugin-piper",
    "ovos-tts-server-plugin": {
      "host": "https://pipertts.ziggyai.online",
      "voice": "alan-low"
    },
    "ovos-tts-plugin-piper": {}
  }
}
```

"module" - This is where you specify which TTS plugin to use.

- ovos-tts-server-plugin in this example.
  - By default, this plugin uses a random selection of public TTS servers provided by the OVOS community. If no "host" is provided, one of those will be used.
  - You can change the voice without changing the "host". The default voice is "alan-low", the original Mycroft voice (Alan Pope).

Changing your assistant's voice

"fallback_module"

- If your first TTS engine fails, OVOS will try to use this one. It is usually configured to use an on-device engine so that you always have some output, even when you are disconnected from the internet.

"ovos-tts-server-plugin"

"ovos-tts-plugin-piper"

- Specify plugin-specific settings here. Multiple entries are allowed. If an empty dict ({}) is provided, the plugin will use its default values.

Refer to each TTS plugin's GitHub repository for its specific settings.
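The "module" / "fallback_module" pair describes a simple fallback chain. The sketch below models that idea with plain functions; it is not OVOS's implementation, and `remote_server_tts` and `local_piper_tts` are hypothetical stand-ins for the two plugins (one simulating a lost internet connection), not real plugin APIs.

```python
from typing import Callable, Sequence, Tuple

Engine = Callable[[str], bytes]


def synthesize_with_fallback(text: str,
                             engines: Sequence[Tuple[str, Engine]]) -> Tuple[str, bytes]:
    """Try each (name, engine) in order; return the first successful result."""
    errors = []
    for name, engine in engines:
        try:
            return name, engine(text)
        except Exception as exc:  # any failure moves on to the next engine
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all TTS engines failed: " + "; ".join(errors))


# Hypothetical stand-ins for the "module" and "fallback_module" plugins.
def remote_server_tts(text: str) -> bytes:
    raise ConnectionError("no internet connection")


def local_piper_tts(text: str) -> bytes:
    return f"<audio for {text!r}>".encode()


used, audio = synthesize_with_fallback(
    "hello", [("ovos-tts-server-plugin", remote_server_tts),
              ("ovos-tts-plugin-piper", local_piper_tts)])
print(used)  # ovos-tts-plugin-piper
```

Putting an on-device engine last in the chain is what guarantees "some output" when the network is down, because it has no remote dependency that can fail.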

OVOS uses "wakewords" to activate the system. This is what "hey Google" or "Alexa" is on proprietary devices. By default, OVOS uses the wakeword "hey Mycroft".

In OVOS, "hotwords" is the configuration section that specifies what each wakeword does. Multiple hotwords can be used for a variety of things, from putting OVOS into active listening mode (a wakeword like "hey Mycroft") to issuing a command such as "stop" or "wake up".

As with everything else, this too can be changed, and several plugins are available. Some work better than others.

| Plugin | Type | Description |
|---|---|---|
| ovos-ww-plugin-precise-lite | Model | The most accurate plugin available, as it uses pretrained models; community models are also available |
| ovos-ww-plugin-openWakeWord | Model | Uses openWakeWord for detection |
| ovos-ww-plugin-vosk | Full Word | Uses full word detection from a loaded model |
| ovos-ww-plugin-pocketsphinx | Phonemes | Probably the least accurate, but can be used on almost any device |
| ovos-ww-plugin-hotkeys | Model | Uses input from a keyboard or button to emulate a wakeword being said. Useful for privacy, but not so much for a smart speaker |
| ovos-ww-plugin-snowboy | Model | Uses the snowboy wakeword engine |
| ovos-ww-plugin-nyumaya | Model | WakeWord plugin using Nyumaya |

The configuration for wakewords is in the "listener" section of mycroft.conf, and the configuration of hotwords is in the "hotwords" section of the same file.

This example will use the vosk plugin and change the wake word to "hey Ziggy".

Add the following to your ~/.config/mycroft/mycroft.conf file:

```json
{
  "listener": {
    "wake_word": "hey_ziggy"
  },
  "hotwords": {
    "hey_ziggy": {
      "module": "ovos-ww-plugin-vosk",
      "listen": true,
      "active": true,
      "sound": "snd/start_listening.wav",
      "debug": false,
      "rule": "fuzzy",
      "lang": "en",
      "samples": [
        "hey ziggy",
        "hay ziggy"
      ]
    }
  }
}
```
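The `"rule": "fuzzy"` and `"samples"` keys let the full-word detector accept close mis-hearings such as "hay ziggy". The sketch below shows what fuzzy matching against samples means, using the standard library's difflib. It is an illustration only, not the vosk plugin's code, and the 0.8 threshold is an arbitrary assumption.

```python
from difflib import SequenceMatcher


def fuzzy_wakeword_match(transcript: str, samples: list[str],
                         threshold: float = 0.8) -> bool:
    """True if the transcript is "close enough" to any configured sample.

    SequenceMatcher.ratio() is 1.0 for identical strings and drops toward
    0.0 as they diverge; the threshold here is an arbitrary choice.
    """
    heard = transcript.lower().strip()
    return any(SequenceMatcher(None, heard, sample.lower()).ratio() >= threshold
               for sample in samples)


SAMPLES = ["hey ziggy", "hay ziggy"]
print(fuzzy_wakeword_match("hey siggy", SAMPLES))        # True
print(fuzzy_wakeword_match("what time is it", SAMPLES))  # False
```

A higher threshold means fewer false activations but more missed ones; listing several spellings in "samples" is a cheaper way to cover common mis-transcriptions.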

The most important setting is "wake_word": "hey_ziggy" in the "listener" section.

This tells OVOS what the default wakeword should be.

In the "hotwords" section, "active": true is only needed if multiple wakewords are being used. By default, whatever wake_word is set in the listener section is automatically set to active.

If you want to disable a wakeword, you can set this to false.

If enabling a wakeword, be sure to also set "listen": true.

Multiple hotwords can be configured at the same time, even the same word with different plugins. This allows more accurate plugins to be used before less accurate ones, but only if the plugin is installed.
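The "active" and "listen" rules above can be summarized in a few lines. This sketch encodes them under stated assumptions: a hotword named by listener.wake_word is active unless it says `"active": false`, any other hotword is active only with `"active": true`, and a hotword also needs `"listen": true` to start listening. It models the prose, not ovos-dinkum-listener's actual loading code, and "hey_computer" is a hypothetical name.

```python
def listening_wakewords(config: dict) -> list[str]:
    """Hotwords that are active and start listening, per the rules above."""
    default = config.get("listener", {}).get("wake_word")
    result = []
    for name, settings in config.get("hotwords", {}).items():
        # The configured wake_word is active by default; others are not.
        active = settings.get("active", name == default)
        if active and settings.get("listen", False):
            result.append(name)
    return result


example = {
    "listener": {"wake_word": "hey_ziggy"},
    "hotwords": {
        "hey_ziggy": {"listen": True},                     # default wake word
        "hey_mycroft": {"listen": True, "active": False},  # disabled
        "hey_computer": {"listen": True, "active": True},  # hypothetical extra
    },
}
print(listening_wakewords(example))  # ['hey_ziggy', 'hey_computer']
```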

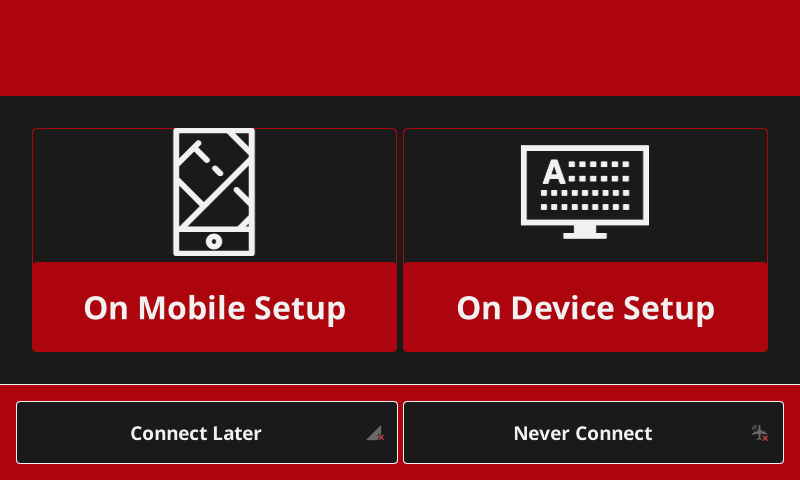

OpenVoiceOS ready-to-use images come in two flavours: the Buildroot version, a minimal consumer-type image, and the Manjaro version, a full distribution that makes development easier.

+| + | OpenVoiceOS (Buildroot) |

+OpenVoiceOS (Manjaro) |

+Neon AI | +Mark II (Dinkum) |

+Mycroft A.I. (PiCroft) |

+

|---|---|---|---|---|---|

| Software - Architecture | ++ | + | + | + | + |

| Core | +ovos-core | +ovos-core | +neon-core | +Dinkum | +mycroft-core | +

| GUI | +ovos-shell (mycroft-gui based) |

+ovos-shell (mycroft-gui based) |

+ovos-shell (mycroft-gui based) |

+plasma-nano (mycroft-gui based) |

+N/A | +

| Services | +systemd user session |

+systemd system session |

+systemd system session |

+systemd system session |

+N/A | +

| Hardware - Compatibility | ++ | + | + | + | + |

| Raspberry Pi | +3/3b/3b/4 | +4 | +4 | +Mark II (only) |

+3/3b/3b/4 | +

| X86_64 | +planned | +No | +WIP | +No | +No | +

| Virtual Appliance | +planned | +No | +Unknown | +No | +No | +

| Docker | +No possibly in future |

+Yes | +Yes | +No | +No | +

| Mark-1 | +Yes WIP |

+No | +No | +No | +No | +

| Mark-2 | +Yes Dev-Kit Retail (WIP) |

+Yes Dev-Kit Retail |

+Yes Dev-Kit Retail |

+Yes Retail ONLY |

+No | +

| Hardware - Peripherals | ++ | + | + | + | + |

| ReSpeaker | +2-mic 4-mic squared 4-mic linear 6-mic |

+2-mic 4-mic squared 4-mic linear 6-mic |

+Unknown | +No | +Yes manual installation? |

+

| USB | +Yes | +Yes | +Unknown | +No | +Yes manual installation |

+

| SJ-201 | +Yes | +Yes | +Yes | +Yes | +No sandbox image maybe |

+

| Google AIY v1 | +Yes manual configuration |

+Yes manual installation |

+Unknown | +No | +No manual installation? |

+

| Google AIY v2 | +No perhaps in the future |

+Yes manual installation |

+Unknown | +No | +No manual installation? |

+

| Screen - GUI | ++ | + | + | + | + |

| GUI supported Showing a GUI if a screen is attached |

+Yes ovos-shell on eglfs |

+Yes ovos-shell on eglfs |

+Yes ovos-shell on eglfs |

+Yes plasma-nano on X11 |

+No | +

| Network Setup - Options | ++ | + | + | + | + |

| Mobile WiFi Setup Easy device "hotspot" to connect to preset network from phone or pad. |

+Yes | +No | +No | +Yes | +No | +

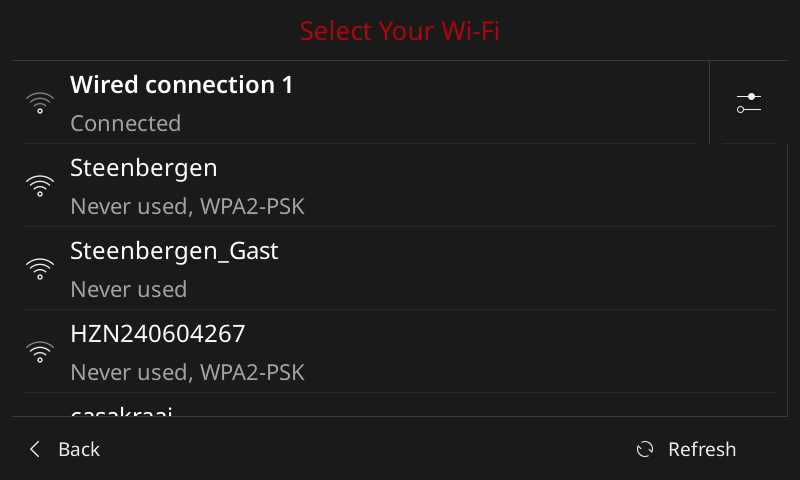

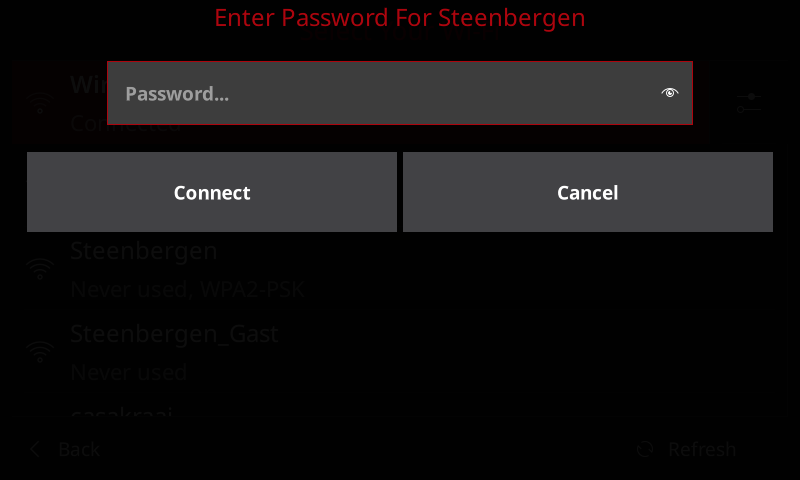

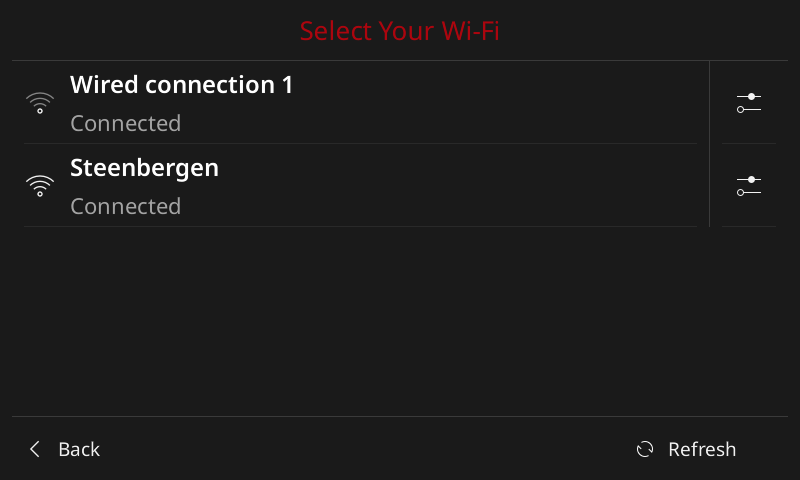

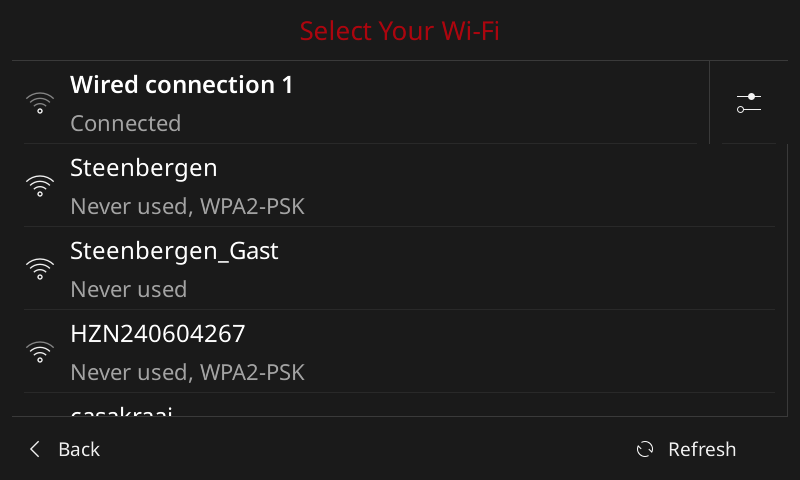

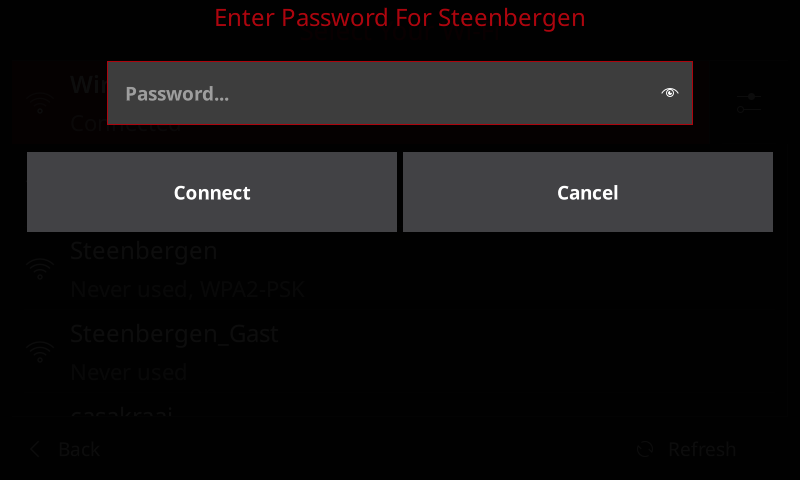

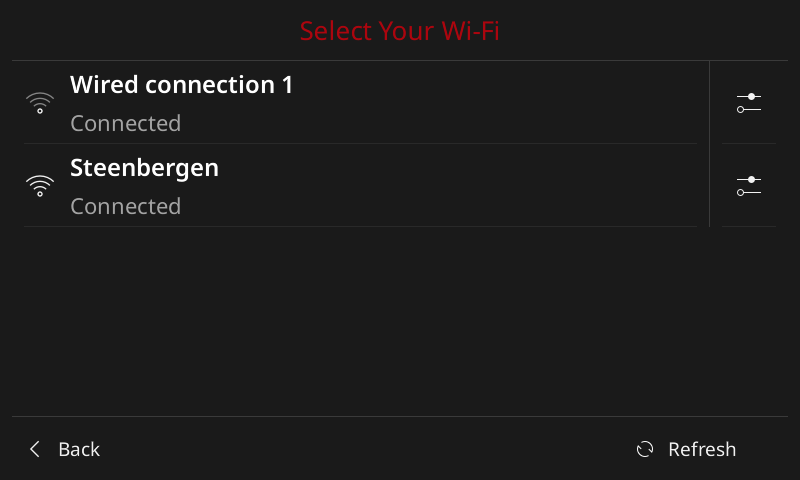

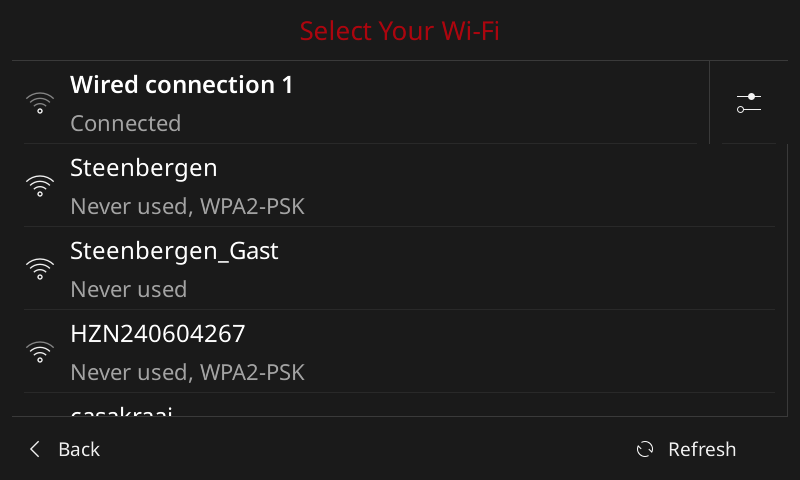

| On device WiFi Setup Configure the WiFi connection on the device itself |

+Yes | +Yes | +Yes | +No | +No | +

| On screen keyboard | +Yes | +Yes | +Yes | +Yes | +No | +

| Reconfigure network Easy way to change the network settings |

+Yes | +Yes | +Yes | +No | +No | +

| Configuration - Option | ++ | + | + | + | + |

| Data privacy | +Yes | +Yes | +Yes | +Partial | +Partial | +

| Offline mode | +Yes | +Yes | +Yes | +No | +No | +

| Color theming | +Yes | +Yes | +Yes | +No | +No | +

| Non-Pairing mode | +Yes | +Yes | +Yes | +No | +No | +

| API Access w/o pairing | +Yes | +Yes | +Yes | +No | +No | +

| On-Device configuration | +Yes | +Yes | +Yes | +No | +No | +

| Online configuration | +Dashboard wip |

+Dashboard wip |

+WIP | +Yes | +Yes | +

| Customization | ++ | + | + | + | + |

| Open Build System | +Yes | +Yes | +Yes | +Partial build tools are not public |

+Yes | +

| Package manager | +No No buildtools available. Perhaps opkg in the future |

+Yes (pacman) |

+Yes | +Yes *limited becuase of read-only filesystem |

+Yes | +

| Updating | ++ | + | + | + | + |

| Update mechanism(s) | +pip In the future: Firmware updates. On-device and Over The Air |

+pip package manager |

+Plugin-based update mechanismOS Updates WIP | +OTA controlled by Mycroft |

+pip package manager |

+

| Voice Assistant - Functionality | ++ | + | + | + | + |

| STT - On device | +Yes Kaldi/Vosk-API WhisperCPP (WIP) Whisper TFlite (WIP) |

+Yes Kaldi/Vosk-API |

+Yes Vosk Deepspeech |

+Yes Vosk Coqui |

+No | +

| STT - On premises | +Yes Ovos STT Server (any plugin) |

+Yes Ovos STT Server (any plugin) |

+Yes Ovos STT Server (any plugin) |

+No | +No | +

| STT - Cloud | +Yes Ovos Server Proxy More...? |

+Yes Ovos Server Proxy |

+Yes |

+Yes Selene Google Cloud Proxy |

+Yes Selene Google (Chromium) Proxy |

+

| TTS - On device | +Yes Mimic 1 More...? |

+Yes Mimic 1 More...? |

+Yes Mimic 1 Mimic 3 Coqui |

+Yes Mimic 3 |

+Yes Mimic 1 |

+

| TTS - On premises | +Yes ? |

+Yes ? |

+Yes CoquiMozillaLarynx |

+No | +No | +

| TTS - Cloud | +Yes Mimic 2 Mimic 3 More...? |

+Yes Mimic 2 Mimic 3 More...? |

+Yes Amazon Polly |

+No | +No | +

| Smart Speaker - Functionality | ++ | + | + | + | + |

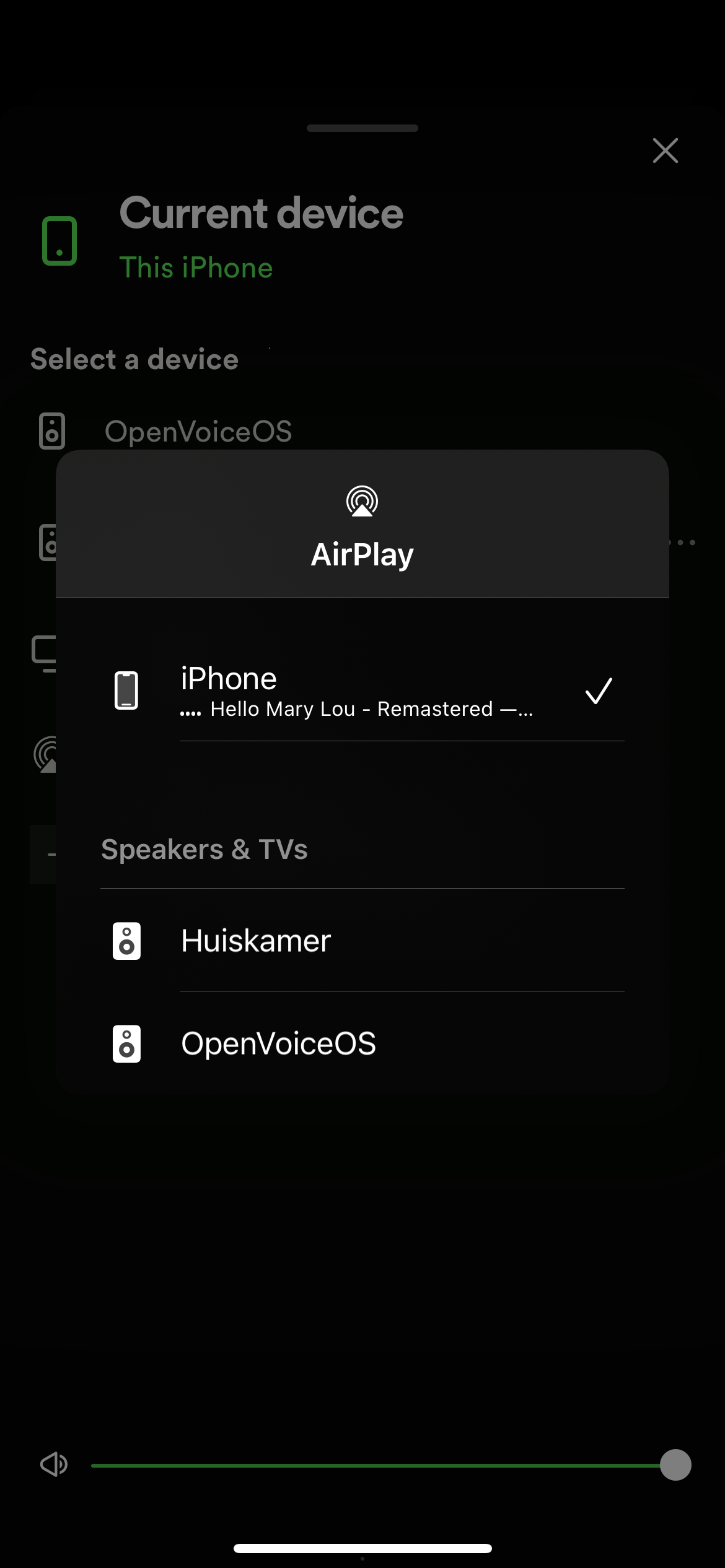

| Music player connectivity The use of external application on other devices to connect to your device. |

+Yes Airplay Spotifyd Bluetooth Snapcast KDE Connect |

+Unknown | +Unknown | +Yes MPD Local Files |

+No manual installation? |

+

| Music player sync | +Yes OCP MPRIS |

+Yes OCP MPRIS |

+Yes OCP MPRIS |

+No | +No | +

| HomeAssistant integration | +unknown | +Yes HomeAssistant PHAL Plugin |

+WIPMycroft Skill reported working | +unknown | +unknown | +

| Camera support | +Yes | +wip | +Yes | +unknown | +unknown | +

The Buildroot OpenVoiceOS edition is considered a consumer-friendly type of device, or, as Mycroft A.I. would call it, a retail version. However, as we do not target a specific hardware platform and would like to support custom-made systems, we are implementing a smart way to detect and configure different types of Raspberry Pi HATs.

At boot, the system scans the I2C bus for known and supported HATs and, if one is found, configures the underlying Linux sound system. This is still very much in development; however, the HATs below are, or should soon be, supported by this system:

- ReSpeaker 2-mic HAT
- ReSpeaker 4-mic Square HAT
- ReSpeaker 4-mic linear / 6-mic HAT
- USB devices such as the PS2 EYE
- SJ-201 Dev Kits
- SJ-201 Mark2 retail device

TODO - write docs

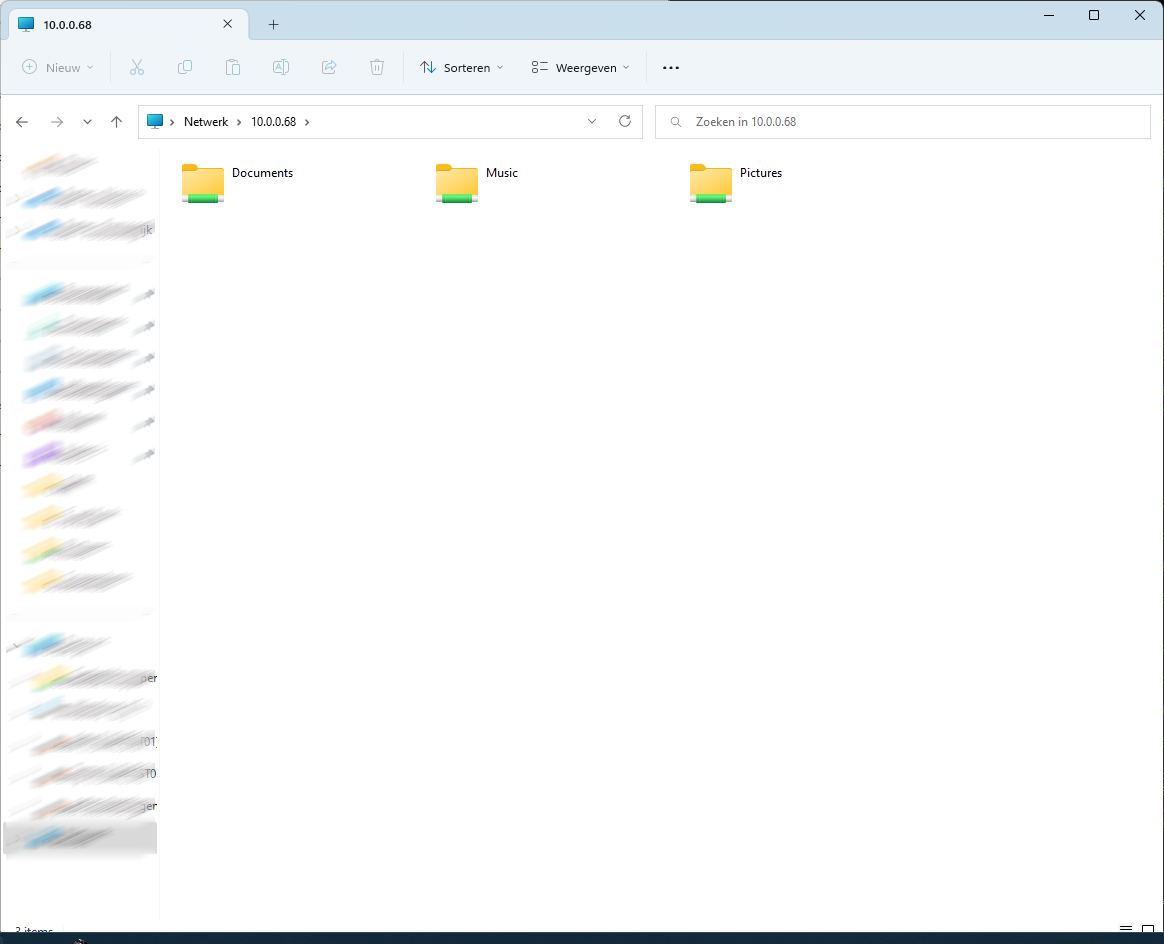

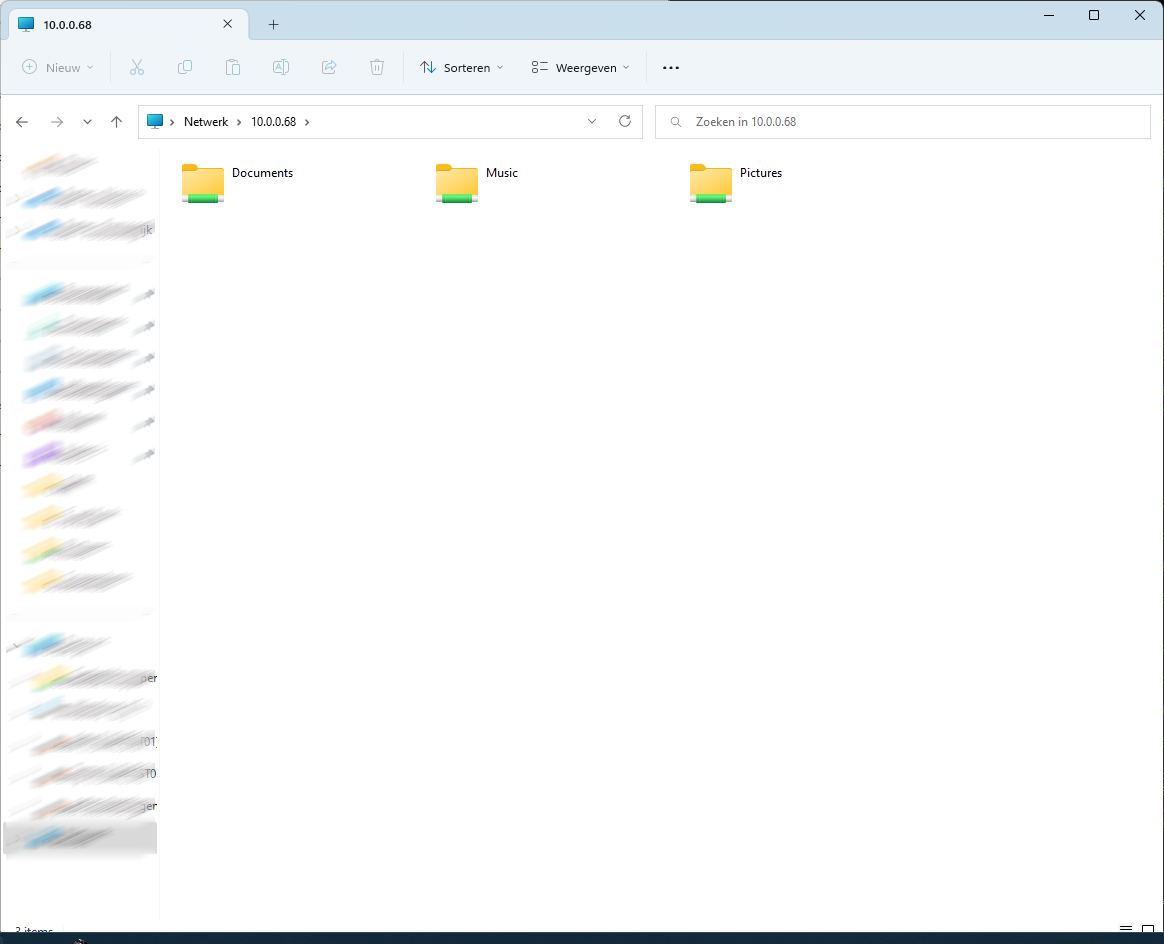

Your OpenVoiceOS device is accessible over the network from your Windows computer. This is still a work in progress, but you can open a file explorer and navigate to your OpenVoiceOS device.

At the moment, the following directories within the user's home directory are shared over the network:

- Documents
- Music
- Pictures

These folders are also used by the KDE Connect file transfer plugins and, for instance, by the Camera skill (Hey Mycroft, take a selfie) and/or the Homescreen skill (Hey Mycroft, take a screenshot).


In the near future, the above Windows network shares will also be made available over NFS for Linux clients. This is still a Work In Progress / To Do item.

At this moment, development is in very early stages and focused on the Raspberry Pi 3B & 4. As soon as a first workable version is created, other hardware might be added.

Source code: https://github.com/OpenVoiceOS/ovos-buildroot

Only use x86_64 based architecture/hardware to build the image.

The following example build environment has been tested:

The following system packages are required to build the image:

In addition to the usual HTTP/HTTPS ports (tcp 80, tcp 443), a couple of other ports need to be allowed out to the internet:

- tcp 9418 (git).
- tcp 21 (FTP PASV) and random ports for the DATA channel. This can be optional, but it is better to allow it along with the corresponding random data channel ports (knowledge of firewalls required).
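Before starting a multi-hour build, you can check that outbound TCP connections succeed on these ports. This is a minimal sketch using the standard socket module. Which hosts to test is up to you (the sketch does not assume any particular mirror or git server), and FTP passive-mode data ports are negotiated at runtime, so they cannot be checked this way.

```python
import socket

# Outbound ports named above.
BUILD_PORTS = {80: "http", 443: "https", 9418: "git", 21: "ftp"}


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, DNS failure, ...
        return False


def check_build_ports(hosts: dict[int, str], timeout: float = 3.0) -> dict[str, bool]:
    """Map "<name>/<port>" to whether a connection to the given host worked."""
    return {f"{BUILD_PORTS.get(port, 'tcp')}/{port}": port_open(host, port, timeout)
            for port, host in hosts.items()}
```

For example, `check_build_ports({443: "github.com", 9418: "<your git server>"})`, where the second host is a placeholder for a server you actually fetch from over the git protocol.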

First, get the code on your system! The simplest method is via git.

- cd ~/
- git clone --recurse-submodules https://github.com/OpenVoiceOS/OpenVoiceOS.git
- cd OpenVoiceOS

(ONLY after the first clean checkout/clone) If this is the very first time you are going to build an image, you need to execute the following command once:

- ./scripts/br-patches.sh

This will patch the Buildroot packages.

Building the image(s) is done with the provided Makefile.

To see the available commands, just run: 'make help'

For example, to build the rpi4 version:

- make clean
- make rpi4_64-gui-config
- make rpi4_64-gui

Now grab a cup of coffee, go for a walk, sleep and repeat, as the build process takes a long time pulling everything from source and cross-compiling everything for the device. The qtwebengine package in particular takes a LONG time.

At the moment there is an outstanding issue which prevents the build from running to completion. The plasma-workspace package will error out because libGLESv2 is not properly linked within Qt5Gui. When the build stops because of this error, edit the following file:

buildroot/output/host/aarch64-buildroot-linux-gnu/sysroot/usr/lib/cmake/Qt5Gui/Qt5GuiConfigExtras.cmake

At the bottom of the file, replace this line:

_qt5gui_find_extra_libs(OPENGL "GLESv2" "" "")

with this line:

_qt5gui_find_extra_libs(OPENGL "${CMAKE_SYSROOT}/usr/lib/libGLESv2.so" "" "${CMAKE_SYSROOT}/usr/include/libdrm")

Then you can continue the build process by re-running the "make rpi4_64-gui" command. (Do NOT run "make clean" and/or "make rpi4_64-gui-config" again, or you will start from scratch again!)
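If you hit this error repeatedly, the edit can be scripted. The sketch below performs exactly the replacement described above on the last occurrence of the old line (the one "at the bottom of the file"), and does nothing if the file is already patched. It assumes you run it from the root of the OpenVoiceOS checkout, since the path is relative.

```python
from pathlib import Path

OLD_LINE = '_qt5gui_find_extra_libs(OPENGL "GLESv2" "" "")'
NEW_LINE = ('_qt5gui_find_extra_libs(OPENGL "${CMAKE_SYSROOT}/usr/lib/libGLESv2.so" '
            '"" "${CMAKE_SYSROOT}/usr/include/libdrm")')

# Path relative to the root of the OpenVoiceOS checkout, as given above.
CMAKE_FILE = Path("buildroot/output/host/aarch64-buildroot-linux-gnu/sysroot"
                  "/usr/lib/cmake/Qt5Gui/Qt5GuiConfigExtras.cmake")


def patch_qt5gui(path: Path = CMAKE_FILE) -> bool:
    """Swap the last OLD_LINE for NEW_LINE; return False if already patched."""
    text = path.read_text()
    if NEW_LINE in text:
        return False
    head, found, tail = text.rpartition(OLD_LINE)
    if not found:
        raise ValueError(f"expected line not found in {path}")
    path.write_text(head + NEW_LINE + tail)
    return True


if __name__ == "__main__" and CMAKE_FILE.exists():
    print("patched" if patch_qt5gui() else "already patched")
```

Making the script idempotent matters here because the fix is applied mid-build and you may re-run it after resuming with "make rpi4_64-gui".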

If everything goes well, the xz-compressed image will be available in the release directory.

1. Ensure all required peripherals (mic, speakers, HDMI, USB mouse, etc.) are plugged in before powering on your RPI4 for the first time.

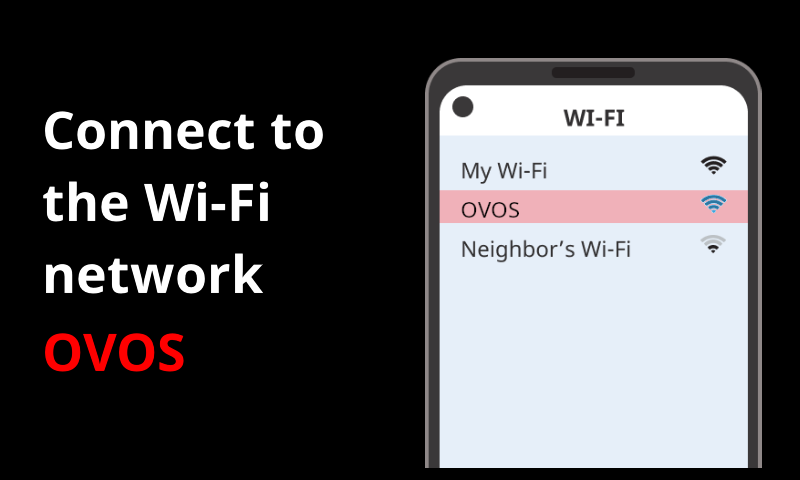

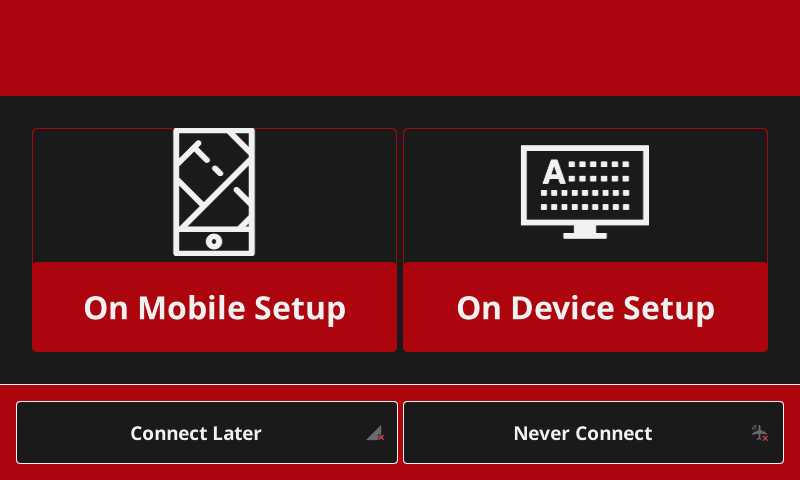

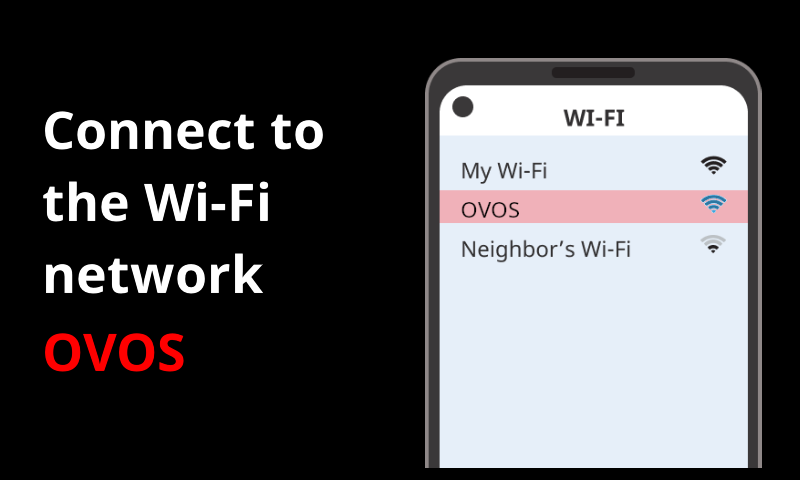

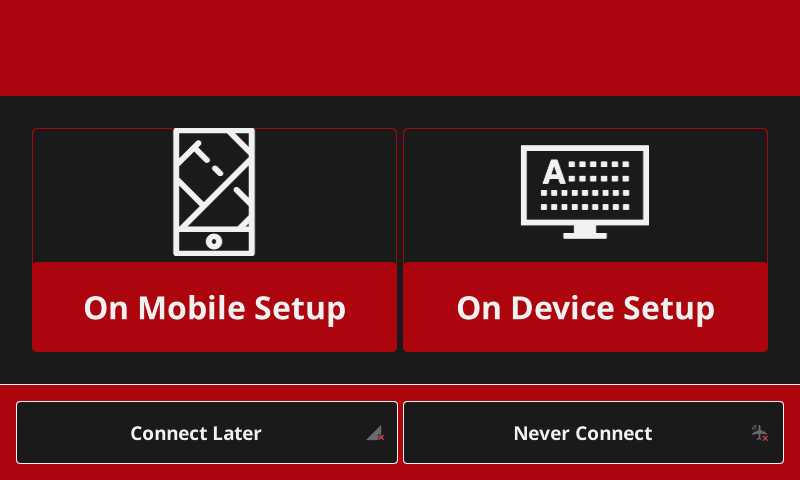

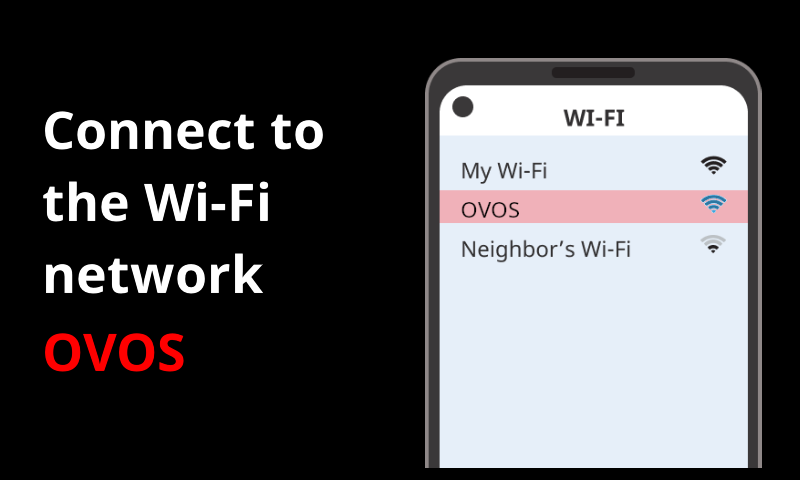


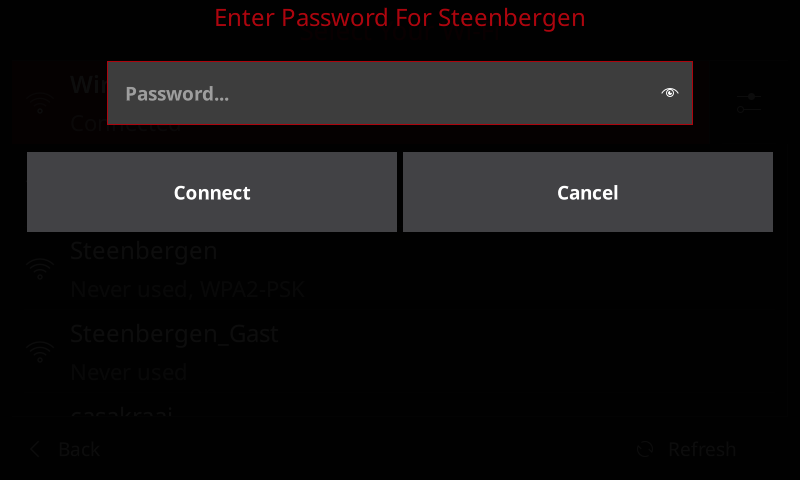

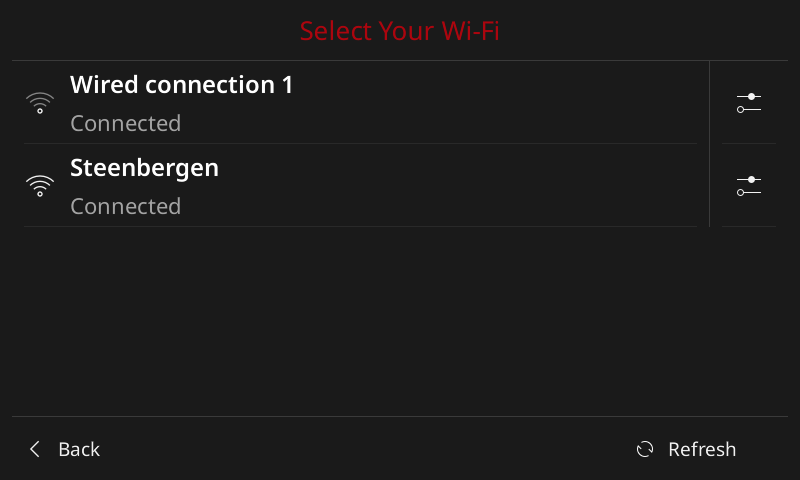

2. Skip this step if your RPI4 is connected with an ethernet cable. Once powered on, the screen will present the WiFi setup screen (a WiFi hotspot is created). Connect to the WiFi hotspot (SSID OVOS) from another device and follow the on-screen instructions to set up WiFi.

3. Once WiFi is set up, a choice between the Mycroft backend and the Local backend is presented. Choose the Mycroft backend for now and follow the on-screen instructions; the Local backend is not ready to use yet. After the pairing process has completed and the skills are downloaded, it's time to test and use it.

** Coming soon **


The OVOS project documentation is written and maintained by users just like you!

These documents are your starting point for installing and using OpenVoiceOS software.

Note: some sections may be incomplete or outdated.

Please open Issues and Pull Requests!

Check out our Quick Start Guide for help with installing an image, your first boot, and basic configuration.

If this is your first experience with OpenVoiceOS, or you're not sure where to get started, say hi in OpenVoiceOS Chat and a team member will be happy to mentor you. Join the Discussions for questions and answers.

The links below are in the process of being deprecated.

The GUI is a totally optional component of OVOS, but it adds a lot of functionality to the device. Some skills will not work without it.

The OVOS GUI is an independent component of OVOS that uses Qt5/6 to display information on your device's screen. It is touchscreen compliant [1] and has an on-screen keyboard for entering data. On a Raspberry Pi, the GUI runs in a framebuffer and therefore does not need a full window manager. This saves resources on underpowered devices.

mycroft-gui-qt5 is a fork of the original mycroft-gui.

mycroft-gui-qt6 is in the works, but not all skills support it yet.

The GUI software comes with a script that installs the needed packages for you.

To get the software, we will use git and the provided dev_setup.sh script.

cd ~

git clone https://github.com/OpenVoiceOS/mycroft-gui-qt5

cd mycroft-gui-qt5

bash dev_setup.sh

NOTE: mycroft-gui is NOT a Python program, so it does not run in the venv created for the rest of the software stack.

That is all it takes to install the GUI for OVOS. Start the GUI with the command:

ovos-gui-app

You can refer to the README in the mycroft-gui-qt5 repository for more information.

[1] In my experience, the touchscreen works with OVOS, but some screens have a touch matrix that is oriented opposite to the displayed image. Such a screen is still usable, but you will need a full window manager installed instead of running the GUI in a framebuffer.

OpenVoiceOS is an open source platform for smart speakers and other voice-centric devices.

ovos-core is a backwards-compatible descendant of mycroft-core, the central component of Mycroft. It contains extensions and features not present upstream.

All Mycroft Skills and Plugins should work normally with ovos-core.

ovos-core is fully modular. Furthermore, common components have been repackaged as plugins. That means it isn't just a great assistant on its own, but also a pretty small library!

There are a couple of ways to install and use the OVOS ecosystem.

The easiest and fastest way to experience what OVOS has to offer is to use one of the prebuilt images provided by the OVOS team.

NOTE: Images are currently only available for the RPi 3B/3B+/4. More may be on the way.

Images are not the only way to use OVOS. It can be installed on almost any system as a set of Python libraries. ovos-core is very modular; depending on where you are running ovos-core, you may want to run only a subset of the services.

This is an advanced setup that requires access to a command shell and can take more effort to get working.

Get started with OVOS libraries

Docker images are also available and have been tested and confirmed working on Linux, Windows, and even Mac.

The OVOS ecosystem is very modular; depending on where you are running ovos-core, you may want to run only a subset of the services.

By default, ovos-core only installs the minimum components common to all services. For the purposes of this document, we will assume you want a full install with a GUI.

NOTE: The GUI requires separate packages in addition to what is required by ovos-core. The GUI installation is covered in its own section.

OVOS requires some system dependencies; how to install them depends on your distro.

Ubuntu/Debian-based images:

sudo apt install build-essential python3-dev python3-pip swig libssl-dev libfann-dev portaudio19-dev libpulse-dev

A few more packages are not strictly necessary, but they are needed to install from source and may be required by some plugins. To add these packages, run this command:

sudo apt install git libpulse-dev cmake libncurses-dev pulseaudio-utils pulseaudio

NOTE: MycroftAI's dev_setup.sh does not exist in ovos-core. See the community-provided, work-in-progress manual_user_install for a minimal, near-complete replacement.

We suggest you do this in a virtualenv.

Create and activate the virtual environment:

python3 -m venv .venv

. .venv/bin/activate

Update pip and install wheel:

pip install -U pip wheel
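If you script your installation, the same two steps can be done from Python with the standard-library `venv` module. This sketch makes two assumptions. First, the helper names are hypothetical. Second, on Debian/Ubuntu, `with_pip=True` relies on the `python3-venv` package being installed. Calling the environment's own interpreter directly avoids having to `activate` it inside a script.

```python
import os
import subprocess
import sys
import venv
from pathlib import Path


def venv_python(env_dir: Path) -> Path:
    """Interpreter inside a virtual environment (Windows uses Scripts/python.exe)."""
    if sys.platform == "win32":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


def create_env(env_dir: Path, install_tools: bool = True) -> Path:
    """Equivalent of `python3 -m venv` plus `pip install -U pip wheel`."""
    # symlinks mirrors the CLI default on POSIX; with_pip runs ensurepip
    # (on Debian/Ubuntu this needs the python3-venv package).
    venv.create(env_dir, with_pip=install_tools, symlinks=(os.name != "nt"))
    python = venv_python(env_dir)
    if install_tools:
        # Running the venv's own interpreter means no `activate` step is needed.
        subprocess.run([str(python), "-m", "pip", "install", "-U", "pip", "wheel"], check=True)
    return python
```

For example, `create_env(Path(".venv"))` creates the environment in the current directory and returns the path of its interpreter.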

ovos-core

To install a full OVOS software stack with enough skills and plugins for a working system, the OVOS team provides pip extras that install a suitable subset of packages automatically.

It is recommended to use the latest alpha versions until the 0.1.0 release, as they contain all of the latest bug fixes and improvements.

latest stable

ovos-core 0.0.7 does not include the new [mycroft] extra, so we use [all].

pip install ovos-core[all]

alpha version

pip install --pre ovos-core[mycroft]

This should install everything needed for a basic OVOS software stack.

Additional extras besides [mycroft] are available; they are listed in the ovos-core setup.py file.
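When you automate the install, building the pip command as an argument list avoids a shell gotcha. In zsh, an unquoted `ovos-core[all]` is treated as a glob pattern, and the command fails with "no matches found". Below is a minimal sketch, using a hypothetical helper name, that picks the extra according to the stable/alpha advice above.

```python
import sys
from typing import List


def ovos_install_cmd(prerelease: bool = True) -> List[str]:
    """Build a pip command for the full ovos-core stack, as an argument list."""
    cmd = [sys.executable, "-m", "pip", "install"]
    if prerelease:
        # Alpha releases ship the newer [mycroft] extra.
        cmd += ["--pre", "ovos-core[mycroft]"]
    else:
        # The stable 0.0.7 release has no [mycroft] extra, so use [all].
        cmd.append("ovos-core[all]")
    return cmd
```

Run it inside the activated venv with `subprocess.run(ovos_install_cmd(), check=True)`. Because no shell is involved, the brackets reach pip unchanged.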

Each module can be installed independently to only include the parts needed or wanted for a specific system.

ovos-core

pip install --pre ovos-core

ovos-messagebus

pip install --pre ovos-messagebus

ovos-audio

pip install --pre ovos-audio

dinkum-listener

pip install --pre ovos-dinkum-listener

ovos-phal

pip install --pre ovos-phal
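To install only some of the services, you can collect the package names above in one place. The sketch below maps short component labels (our own, hypothetical names) to the PyPI packages listed above, and builds a single pip command from them. It is an illustration, not an OVOS tool.

```python
import sys
from typing import Dict, Iterable, List

# Short, illustrative component labels -> PyPI package names from the list above.
OVOS_PACKAGES: Dict[str, str] = {
    "core": "ovos-core",
    "messagebus": "ovos-messagebus",
    "audio": "ovos-audio",
    "listener": "ovos-dinkum-listener",
    "phal": "ovos-phal",
}


def component_install_cmd(components: Iterable[str], pre: bool = True) -> List[str]:
    """Build one pip command that installs only the requested components."""
    names = list(components)  # materialize so the iterable can be read twice
    unknown = [n for n in names if n not in OVOS_PACKAGES]
    if unknown:
        raise KeyError(f"unknown component(s): {', '.join(unknown)}")
    cmd = [sys.executable, "-m", "pip", "install"]
    if pre:
        cmd.append("--pre")  # matches the --pre used in the commands above
    # dict.fromkeys drops duplicates while keeping the caller's order.
    cmd.extend(OVOS_PACKAGES[n] for n in dict.fromkeys(names))
    return cmd
```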

We will use git to clone the repositories to a local directory. While not strictly necessary, we assume this is the user's HOME directory.

Install ovos-core from github source files.

git clone https://github.com/OpenVoiceOS/ovos-core

The ovos-core repository provides extra requirements files. For the complete stack, we will use the mycroft.txt file.

pip install ~/ovos-core[mycroft]

This should install everything needed to use the basic OVOS software stack.

NOTE: this also installs LGPL-licensed software.

Some systems may not require a full install of OVOS. Luckily, it can be installed as individual modules.

core library

git clone https://github.com/OpenVoiceOS/ovos-core

pip install ~/ovos-core

This is the minimal library needed as the brain of the system. There are no skills, no messagebus, and no plugins installed yet.

messagebus

git clone https://github.com/OpenVoiceOS/ovos-messagebus

pip install ~/ovos-messagebus

This is the nervous system of OVOS needed for modules to talk to each other.
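Messages on the bus are JSON objects with a `type`, a `data` payload, and a `context`. They travel over a websocket, and clients usually connect by default at `ws://127.0.0.1:8181/core` (configurable). The stdlib-only sketch below only illustrates that message shape. Real code would normally use the `ovos-bus-client` package (its `MessageBusClient` and `Message` classes) rather than hand-rolled JSON. The helper names are hypothetical.

```python
import json
from typing import Any, Dict, Optional, Tuple

# Typical default bus address (host, port and route are configurable).
DEFAULT_BUS_URL = "ws://127.0.0.1:8181/core"


def serialize_message(msg_type: str,
                      data: Optional[Dict[str, Any]] = None,
                      context: Optional[Dict[str, Any]] = None) -> str:
    """Encode a bus message as the JSON text sent over the websocket."""
    return json.dumps({"type": msg_type, "data": data or {}, "context": context or {}})


def parse_message(raw: str) -> Tuple[str, Dict[str, Any], Dict[str, Any]]:
    """Decode JSON text from the bus into (type, data, context)."""
    msg = json.loads(raw)
    return msg["type"], msg.get("data", {}), msg.get("context", {})


# Example: the message type used to make the assistant speak.
speak = serialize_message("speak", {"utterance": "Hello from the bus"})
print(speak)  # {"type": "speak", "data": {"utterance": "Hello from the bus"}, "context": {}}
```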

listener

OVOS has replaced ovos-listener with ovos-dinkum-listener, which is code from mycroft-dinkum adapted for use with the OVOS ecosystem. The previous listeners are still available but not recommended.

git clone https://github.com/OpenVoiceOS/ovos-dinkum-listener

pip install ~/ovos-dinkum-listener

You now have what is needed for OVOS to use a microphone and its associated services: wake words, hotwords, and STT.

PHAL

The OVOS Plugin-based Hardware Abstraction Layer (PHAL) allows the OVOS software to communicate with the host system, such as the operating system, and with hardware such as the Mycroft Mark 1 device.

The PHAL system consists of two interfaces.

ovos-phal is the basic interface that normal plugins use.

ovos-admin-phal is used where superuser privileges are needed.

Be extremely careful when installing admin-phal plugins as they provide full control over the host system.

git clone https://github.com/OpenVoiceOS/ovos-PHAL

pip install ~/ovos-PHAL

This just installs the basic system that allows the plugins to work.

audio

This is the service OVOS uses to play all audio, whether a voice response or a stream from somewhere else, such as music or a podcast.

It also installs OVOS Common Play, which can be used as a standalone media player and is required for OVOS audio playback.

git clone https://github.com/OpenVoiceOS/ovos-audio

pip install ~/ovos-audio

This will enable the default TTS (Text To Speech) engine for voice feedback from your OVOS device. However, plenty of alternative TTS engines are available.

You should now have all of the separate components needed to run a full OVOS software stack.
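The clone-and-install steps above can be collected into one script. This minimal sketch makes two assumptions: the repositories go into your home directory, as in the text, and the venv is active, because it installs with the running interpreter's pip. `plan` and `run` are hypothetical names. The script prints each command before running it and skips cloning any repository that already exists.

```python
import subprocess
import sys
from pathlib import Path
from typing import List

GITHUB = "https://github.com/OpenVoiceOS/"
# Repositories from the steps above, in the order they are installed.
REPOS = ["ovos-core", "ovos-messagebus", "ovos-dinkum-listener", "ovos-PHAL", "ovos-audio"]


def plan(base: Path) -> List[List[str]]:
    """List the git/pip commands for a source install without running them."""
    cmds: List[List[str]] = []
    for repo in REPOS:
        checkout = base / repo
        if not checkout.exists():  # reuse an existing clone instead of failing
            cmds.append(["git", "clone", GITHUB + repo, str(checkout)])
        # sys.executable is the interpreter running this script, e.g. the venv's python.
        cmds.append([sys.executable, "-m", "pip", "install", str(checkout)])
    return cmds


def run(base: Path = Path.home()) -> None:
    for cmd in plan(base):
        print("+", " ".join(cmd))
        subprocess.run(cmd, check=True)
```

To preview the commands as a dry run, print `plan(Path.home())`. To execute them, call `run()` from inside the venv.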

KDE Connect is a multi-platform application developed by KDE that facilitates wireless communication and data transfer between devices over local networks. It is installed and configured by default on the Buildroot-based image.

A couple of features of KDE Connect are:

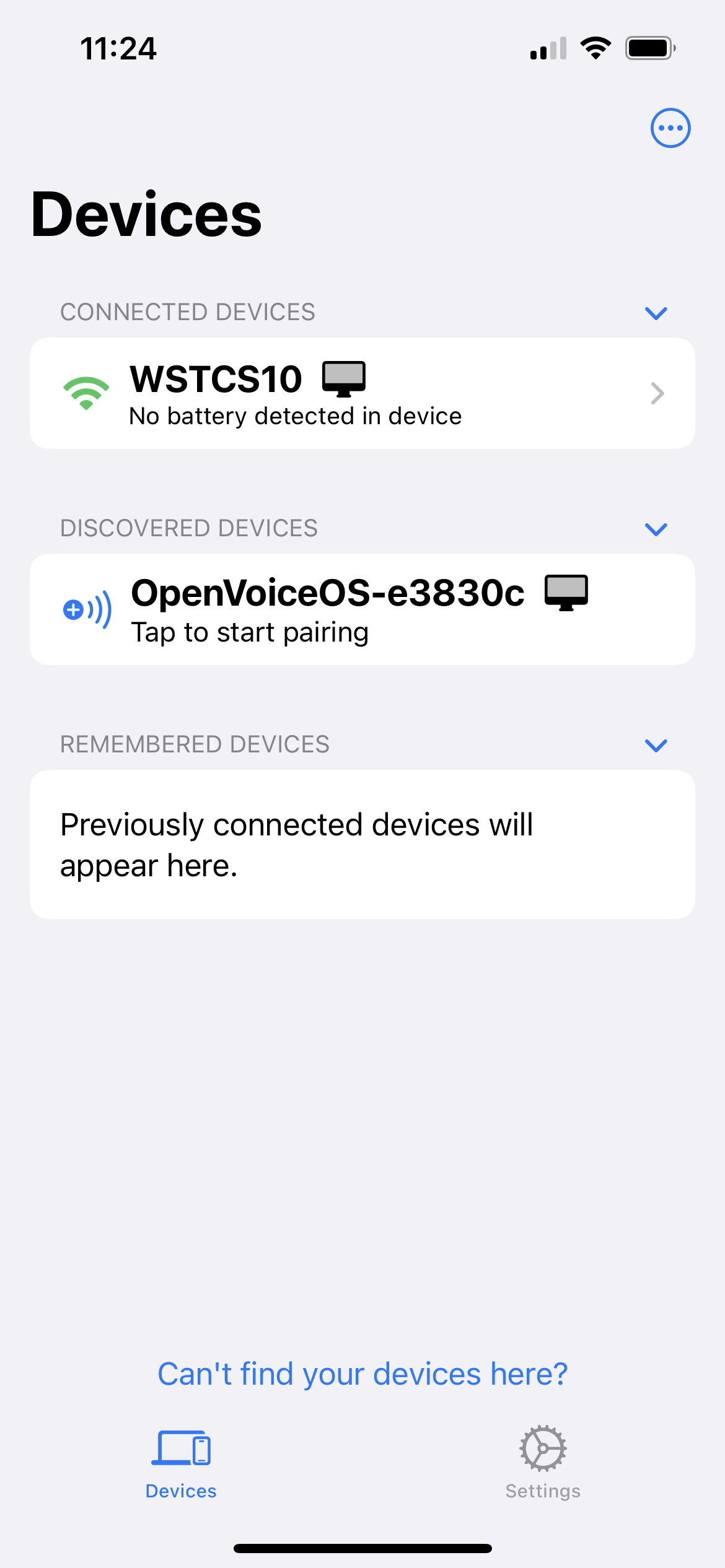

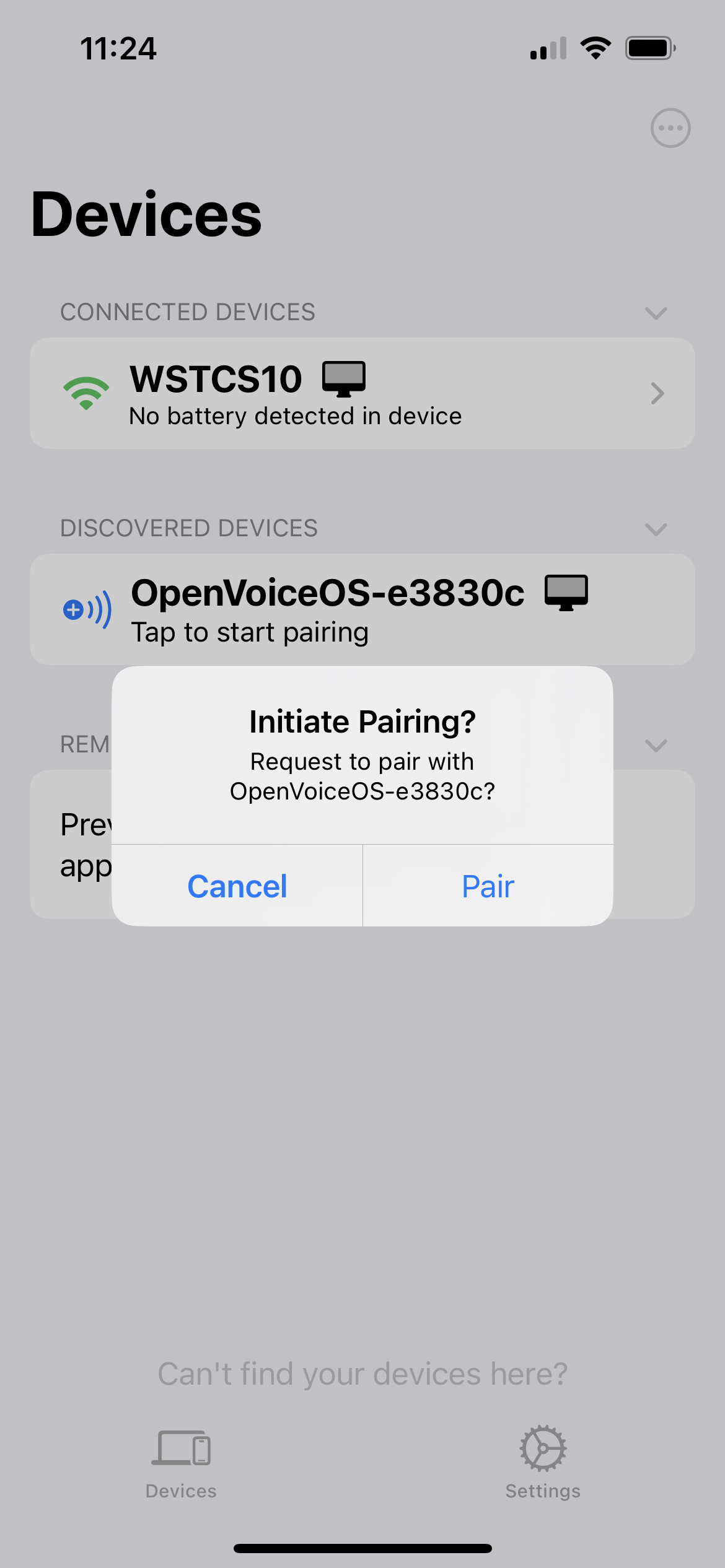

For the sake of simplicity, the screenshots below were made using the iPhone KDE Connect client. However, as that client is not yet fully feature complete and/or stable, we recommend using the Android and/or Linux client, especially if you would like full MPRIS control of your OpenVoiceOS device.

On your mobile device, open the KDE Connect app; it will automatically detect the advertised OpenVoiceOS KDE Connect device.

We have a universal donor policy: our code should be usable anywhere by anyone, with no ifs or conditions attached.

OVOS is predominantly Apache2 or BSD licensed. There are only a few exceptions, which are all licensed under other compatible open source licenses.

Individual plugins or skills may have their own licenses; for example, mimic3 is AGPL, so we cannot change the license of our plugin.

We are committed to keeping all core components fully free. Any code whose license we do not control will live in an optional plugin and be flagged as such.

This includes avoiding LGPL code for reasons explained here.

Our license policy has the following properties:

The license does not restrict the software that may run on OVOS. Thanks to the plugin architecture, even traditionally tightly coupled components such as drivers can be distributed separately, so maintainers are free to choose whatever license they like for their projects.

The following repositories do not follow our universal donor policy; please ensure their licenses are compatible before you use them:

| Repository | License | Reason |
|---|---|---|
| ovos-intent-plugin-padatious | Apache2.0 | padatious license might not be valid; depends on libfann2 (LGPL) |
| ovos-tts-plugin-mimic3 | AGPL | depends on mimic3 (AGPL) |
| ovos-tts-plugin-SAM | ? | reverse-engineered abandonware |

JarbasAI

Daniel McKnight

j1nx

forslund

ChanceNCounter

5trongthany

builderjer

goldyfruit

mikejgray

emphasize

dscripka

use with ovos-tts-server-plugin

Mimic1 TTS

Mimic3 TTS

Piper TTS

use with ovos-tts-server-plugin

use with ovos-stt-server-plugin

use with ovos-tts-plugin-mimic3-server

use with ovos-stt-server-plugin

use with ovos-tts-plugin-mimic3-server

| Feature | Mycroft | OVOS | Description |
|---|---|---|---|
| Wake Word (listen) | yes | yes | Only transcribe speech (STT) after a certain word is spoken |
| Wake Up Word (sleep mode) | yes | yes | When in sleep mode, only listen for "wake up" (no STT) |
| Hotword (bus event) | no | yes | Emit bus events when a hotword is detected (no STT) |
| Multiple Wake Words | no | yes | Load multiple hotword engines/models simultaneously |
| Fallback STT | no | yes | Fallback STT if the main one fails (e.g., internet outage) |
| Instant Listen | no | yes | Do not pause between wake word detection and recording start |
| Hybrid Listen | no | WIP | Do not require wake word for follow-up questions |
| Continuous Listen | no | WIP | Do not require wake word, always listen using VAD |
| Recording mode | no | WIP | Save audio instead of processing speech |
| Wake Word Plugins | yes | yes | Supports 3rd party integrations for hotword detection |
| STT Plugins | yes | yes | Supports 3rd party integrations for STT |
| VAD plugins | no * | yes | Supports 3rd party integrations for voice activity detection |

NOTES:

mycroft.listener module

| Feature | Mycroft | OVOS | Description |
|---|---|---|---|
| MPRIS integration | no | yes | Integrate with MPRIS protocol |

NOTES:

| Feature | Mycroft | OVOS | Description |
|---|---|---|---|