diff --git a/datahub-web-react/src/appConfigContext.tsx b/datahub-web-react/src/appConfigContext.tsx

index 3b34b108ecc93..807a17c4fd6a4 100644

--- a/datahub-web-react/src/appConfigContext.tsx

+++ b/datahub-web-react/src/appConfigContext.tsx

@@ -27,6 +27,9 @@ export const DEFAULT_APP_CONFIG = {

entityProfile: {

domainDefaultTab: null,

},

+ searchResult: {

+ enableNameHighlight: false,

+ },

},

authConfig: {

tokenAuthEnabled: false,

diff --git a/datahub-web-react/src/conf/theme/theme_dark.config.json b/datahub-web-react/src/conf/theme/theme_dark.config.json

index b648f3d997f21..9746c3ddde5f3 100644

--- a/datahub-web-react/src/conf/theme/theme_dark.config.json

+++ b/datahub-web-react/src/conf/theme/theme_dark.config.json

@@ -17,7 +17,9 @@

"disabled-color": "fade(white, 25%)",

"steps-nav-arrow-color": "fade(white, 25%)",

"homepage-background-upper-fade": "#FFFFFF",

- "homepage-background-lower-fade": "#333E4C"

+ "homepage-background-lower-fade": "#333E4C",

+ "highlight-color": "#E6F4FF",

+ "highlight-border-color": "#BAE0FF"

},

"assets": {

"logoUrl": "/assets/logo.png"

diff --git a/datahub-web-react/src/conf/theme/theme_light.config.json b/datahub-web-react/src/conf/theme/theme_light.config.json

index e842fdb1bb8aa..906c04e38a1ba 100644

--- a/datahub-web-react/src/conf/theme/theme_light.config.json

+++ b/datahub-web-react/src/conf/theme/theme_light.config.json

@@ -20,7 +20,9 @@

"homepage-background-lower-fade": "#FFFFFF",

"homepage-text-color": "#434343",

"box-shadow": "0px 0px 30px 0px rgb(239 239 239)",

- "box-shadow-hover": "0px 1px 0px 0.5px rgb(239 239 239)"

+ "box-shadow-hover": "0px 1px 0px 0.5px rgb(239 239 239)",

+ "highlight-color": "#E6F4FF",

+ "highlight-border-color": "#BAE0FF"

},

"assets": {

"logoUrl": "/assets/logo.png"

diff --git a/datahub-web-react/src/conf/theme/types.ts b/datahub-web-react/src/conf/theme/types.ts

index 98140cbbd553d..7d78230092700 100644

--- a/datahub-web-react/src/conf/theme/types.ts

+++ b/datahub-web-react/src/conf/theme/types.ts

@@ -18,6 +18,8 @@ export type Theme = {

'homepage-background-lower-fade': string;

'box-shadow': string;

'box-shadow-hover': string;

+ 'highlight-color': string;

+ 'highlight-border-color': string;

};

assets: {

logoUrl: string;

diff --git a/datahub-web-react/src/graphql/app.graphql b/datahub-web-react/src/graphql/app.graphql

index 4b1295f1024a2..bf15e5f757f8f 100644

--- a/datahub-web-react/src/graphql/app.graphql

+++ b/datahub-web-react/src/graphql/app.graphql

@@ -45,6 +45,9 @@ query appConfig {

defaultTab

}

}

+ searchResult {

+ enableNameHighlight

+ }

}

telemetryConfig {

enableThirdPartyLogging

diff --git a/datahub-web-react/src/graphql/search.graphql b/datahub-web-react/src/graphql/search.graphql

index 172a6d957e287..7cd868d7cd2b2 100644

--- a/datahub-web-react/src/graphql/search.graphql

+++ b/datahub-web-react/src/graphql/search.graphql

@@ -832,6 +832,11 @@ fragment searchResults on SearchResults {

matchedFields {

name

value

+ entity {

+ urn

+ type

+ ...entityDisplayNameFields

+ }

}

insights {

text

@@ -841,6 +846,11 @@ fragment searchResults on SearchResults {

facets {

...facetFields

}

+ suggestions {

+ text

+ frequency

+ score

+ }

}

fragment schemaFieldEntityFields on SchemaFieldEntity {

diff --git a/docker/airflow/local_airflow.md b/docker/airflow/local_airflow.md

index d0a2b18cff2d2..55a64f5c122c5 100644

--- a/docker/airflow/local_airflow.md

+++ b/docker/airflow/local_airflow.md

@@ -138,25 +138,57 @@ Successfully added `conn_id`=datahub_rest_default : datahub_rest://:@http://data

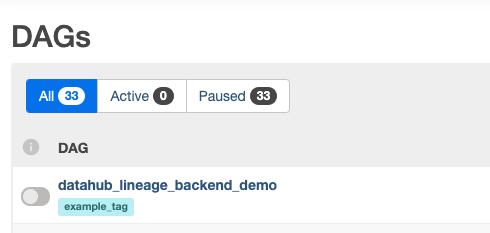

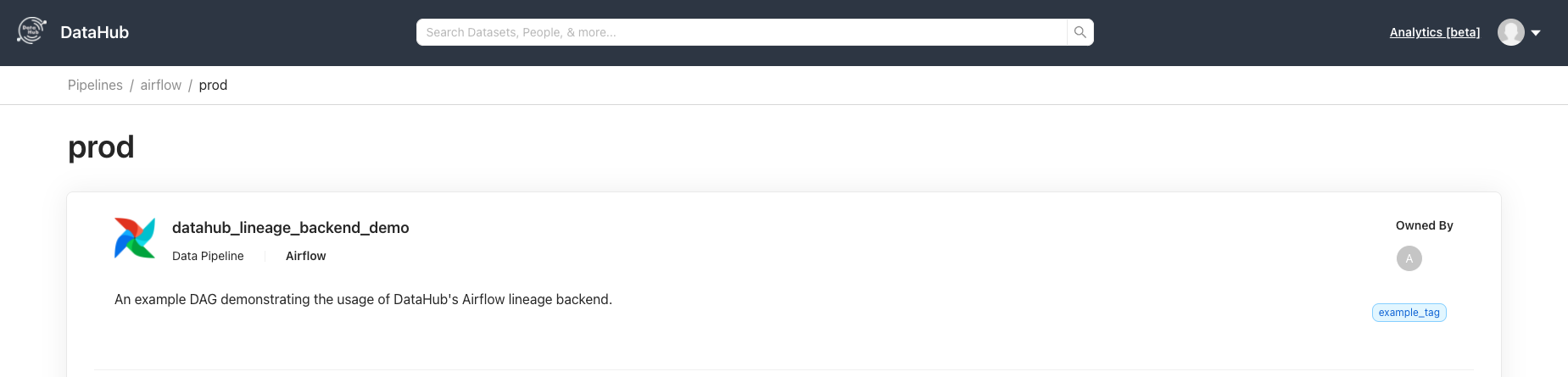

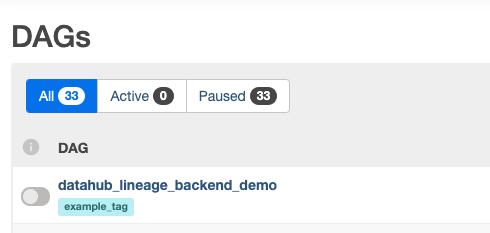

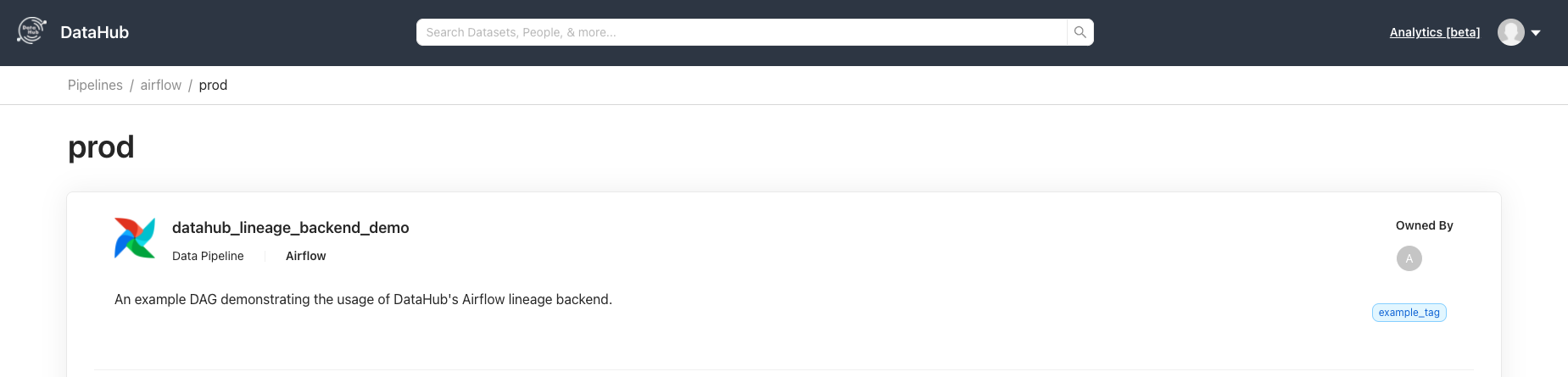

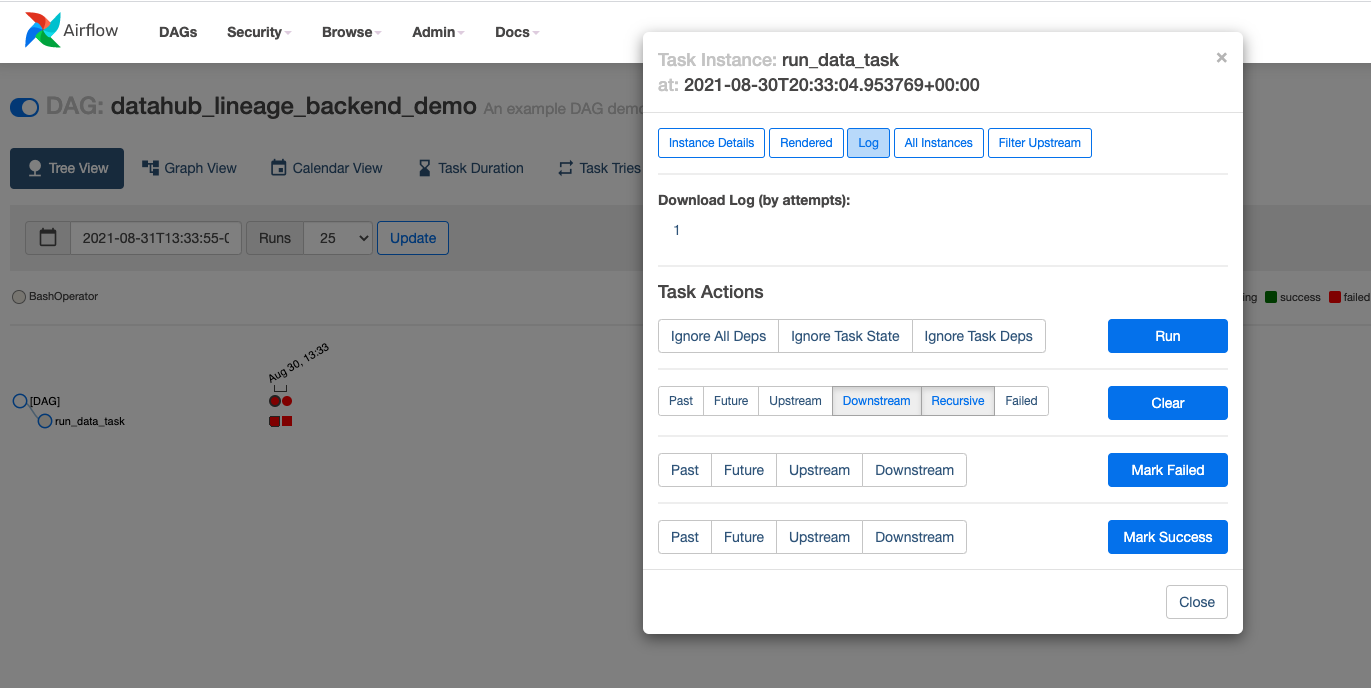

Navigate the Airflow UI to find the sample Airflow dag we just brought in

-

+

+

+  +

+

+

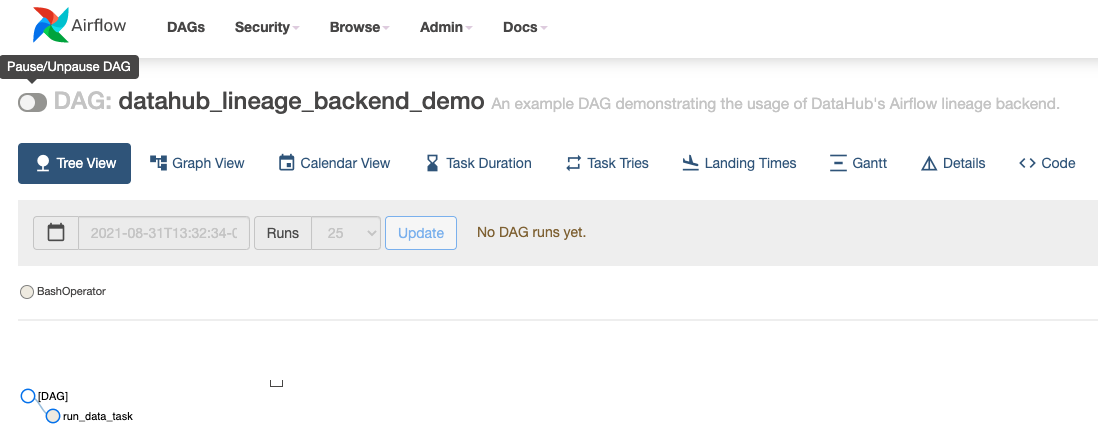

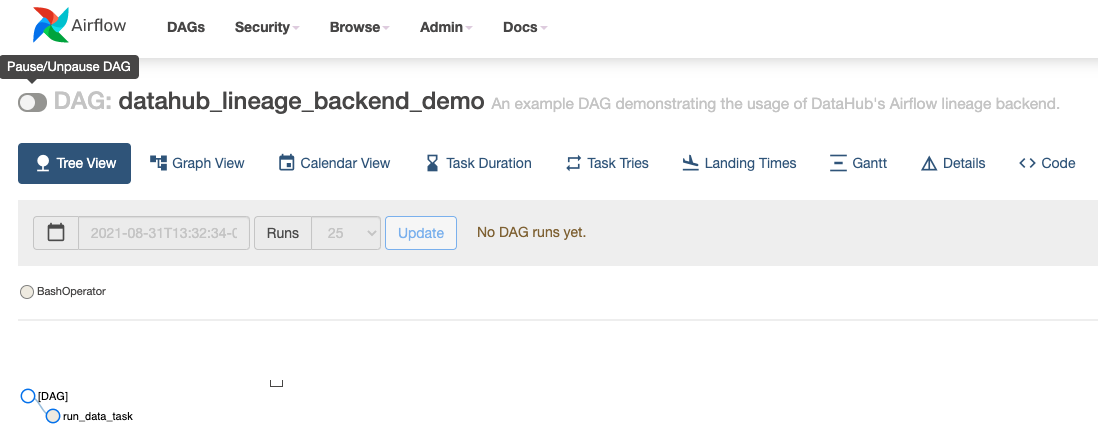

By default, Airflow loads all DAGs in paused status. Unpause the sample DAG to use it.

-

-

+

+

+  +

+

+

+

+

+  +

+

+

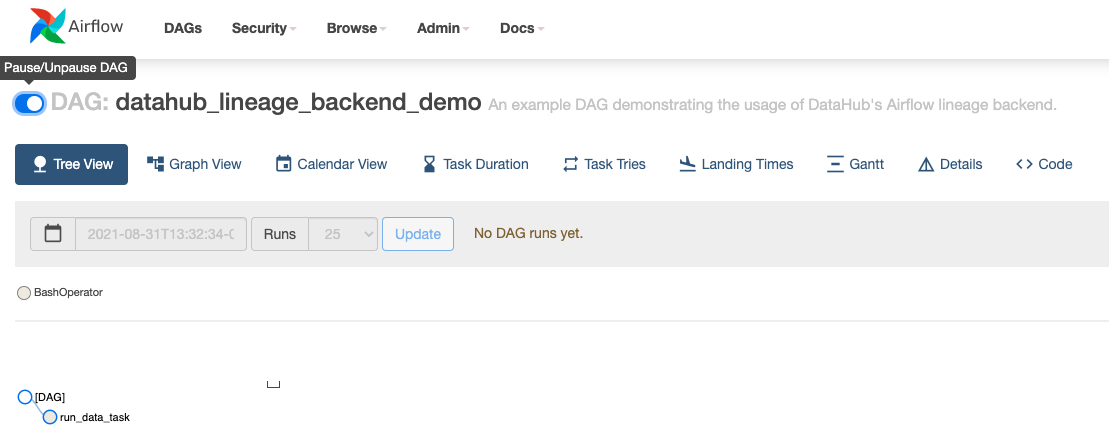

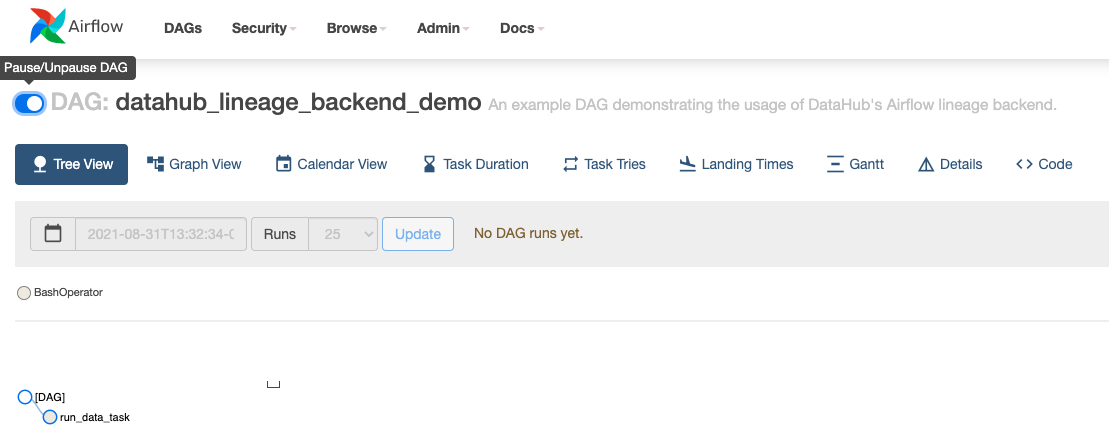

Then trigger the DAG to run.

-

+

+

+  +

+

+

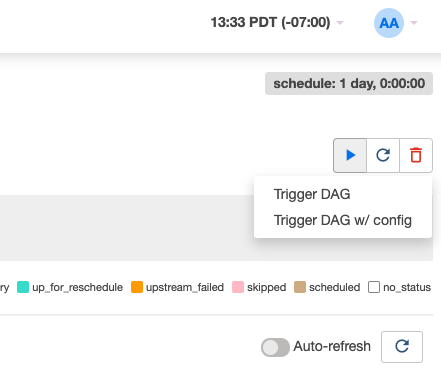

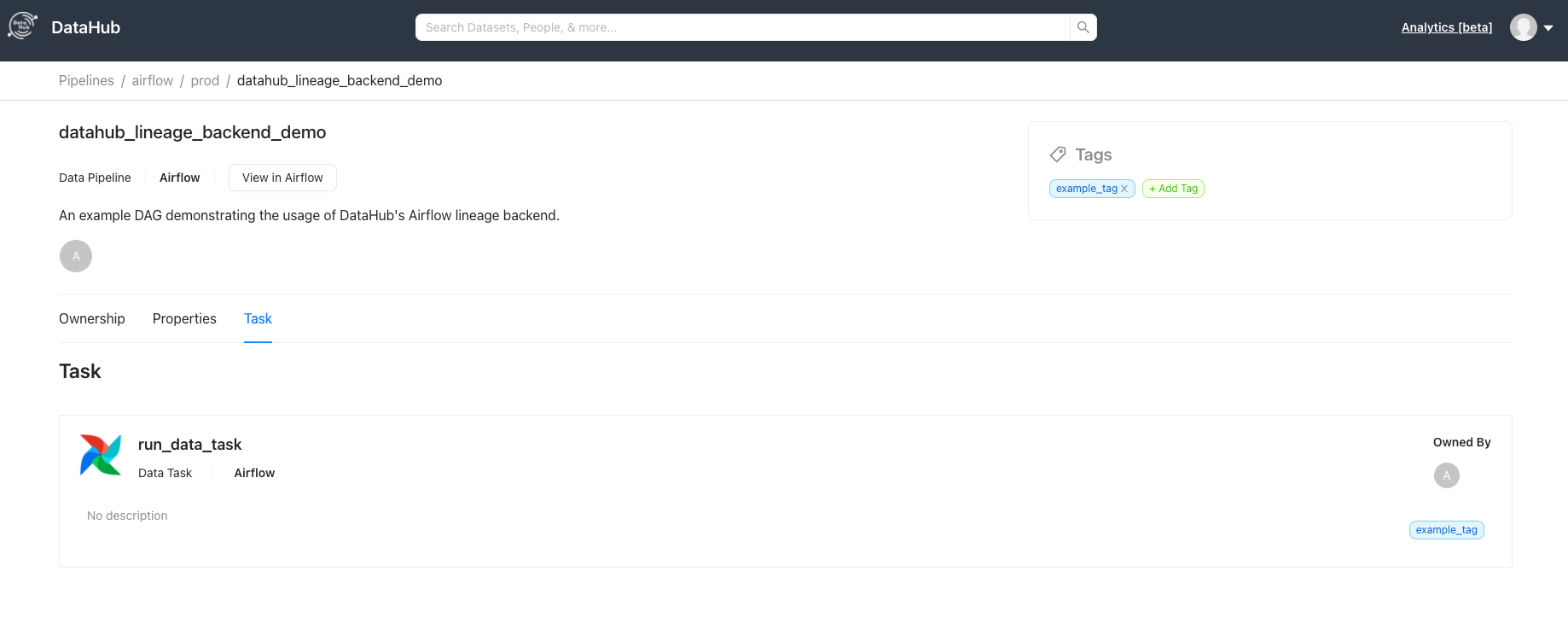

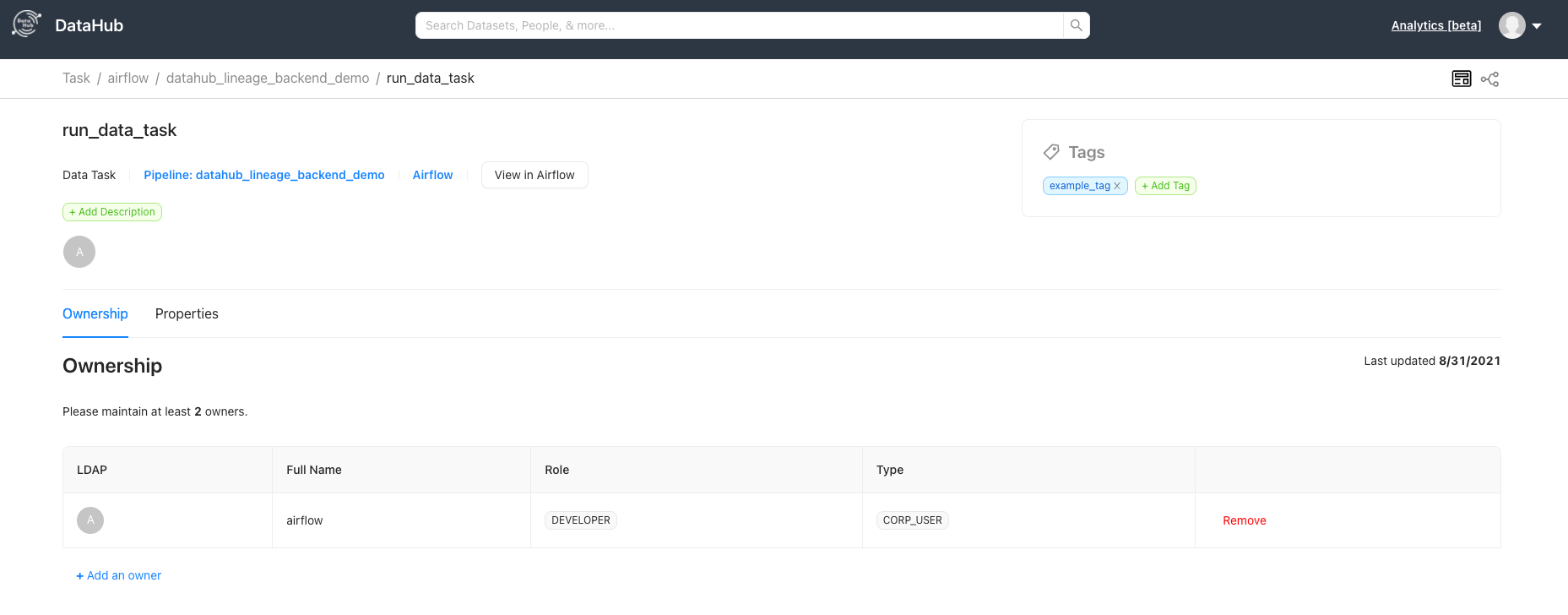

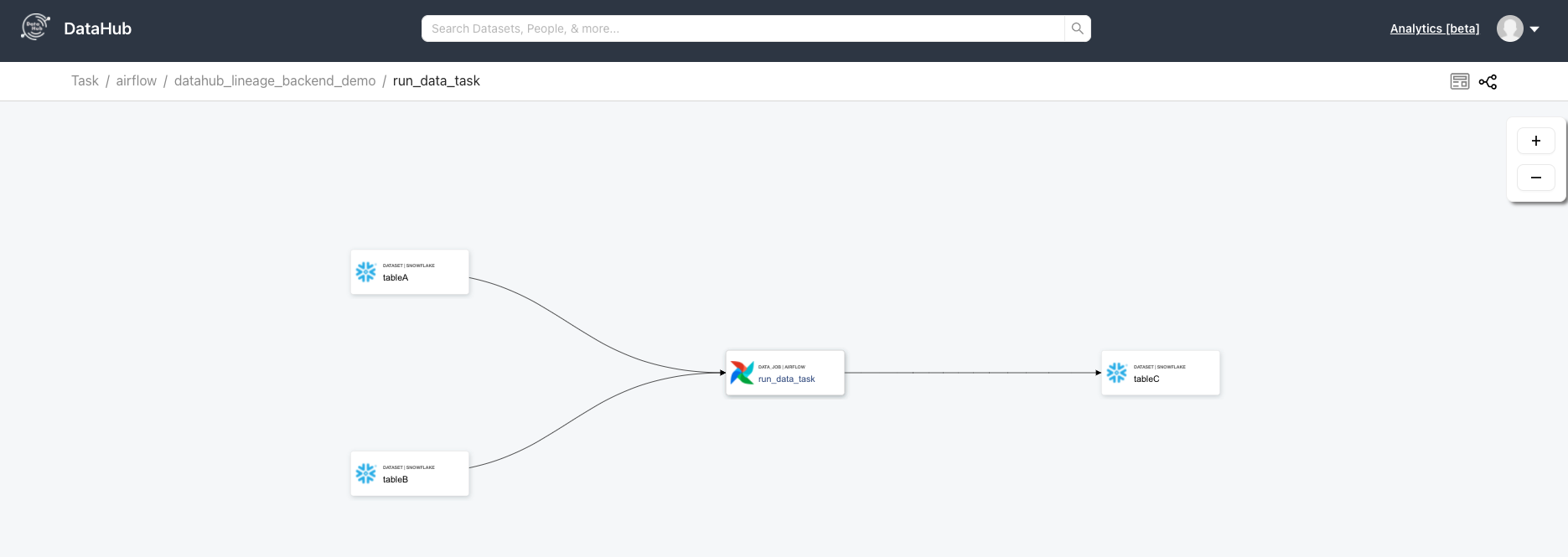

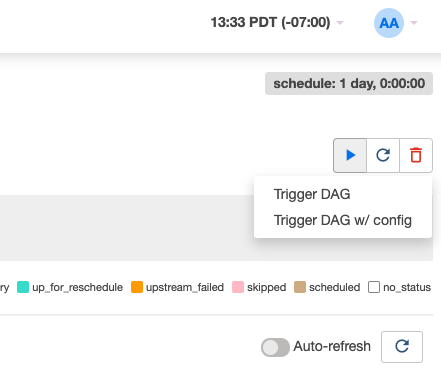

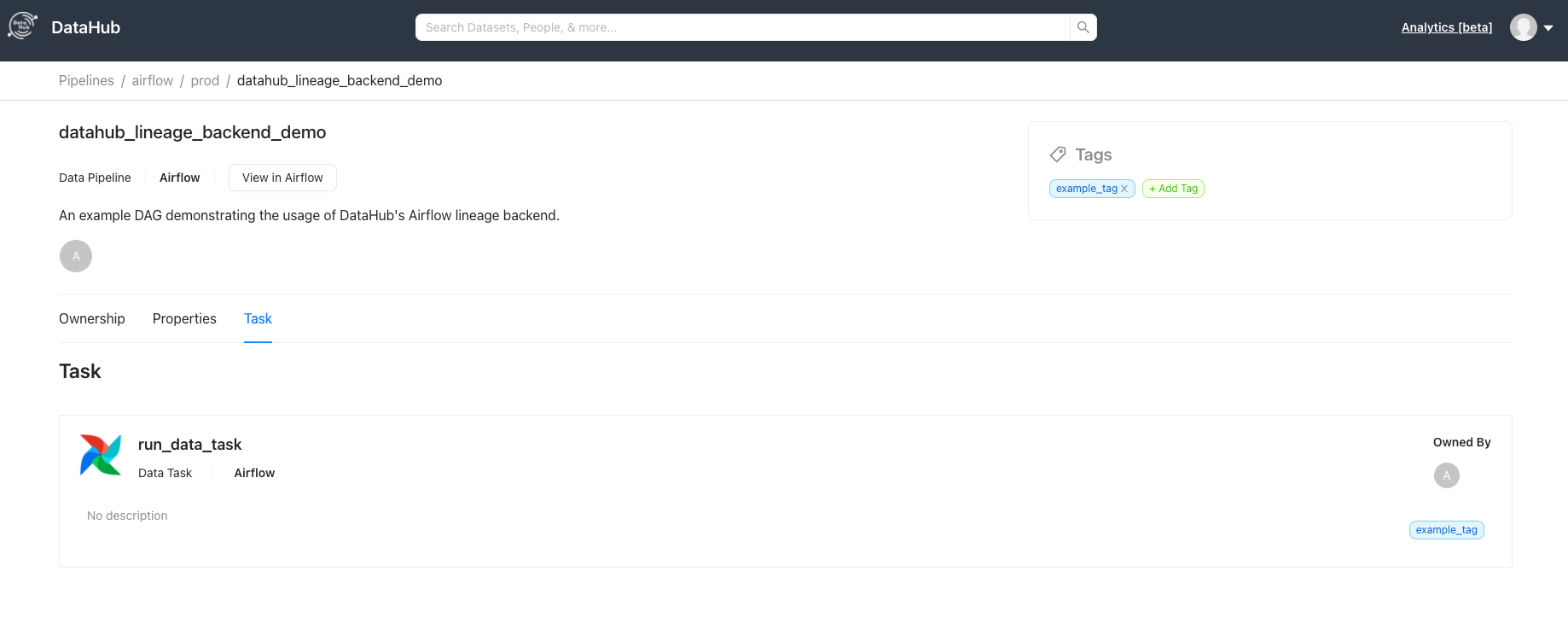

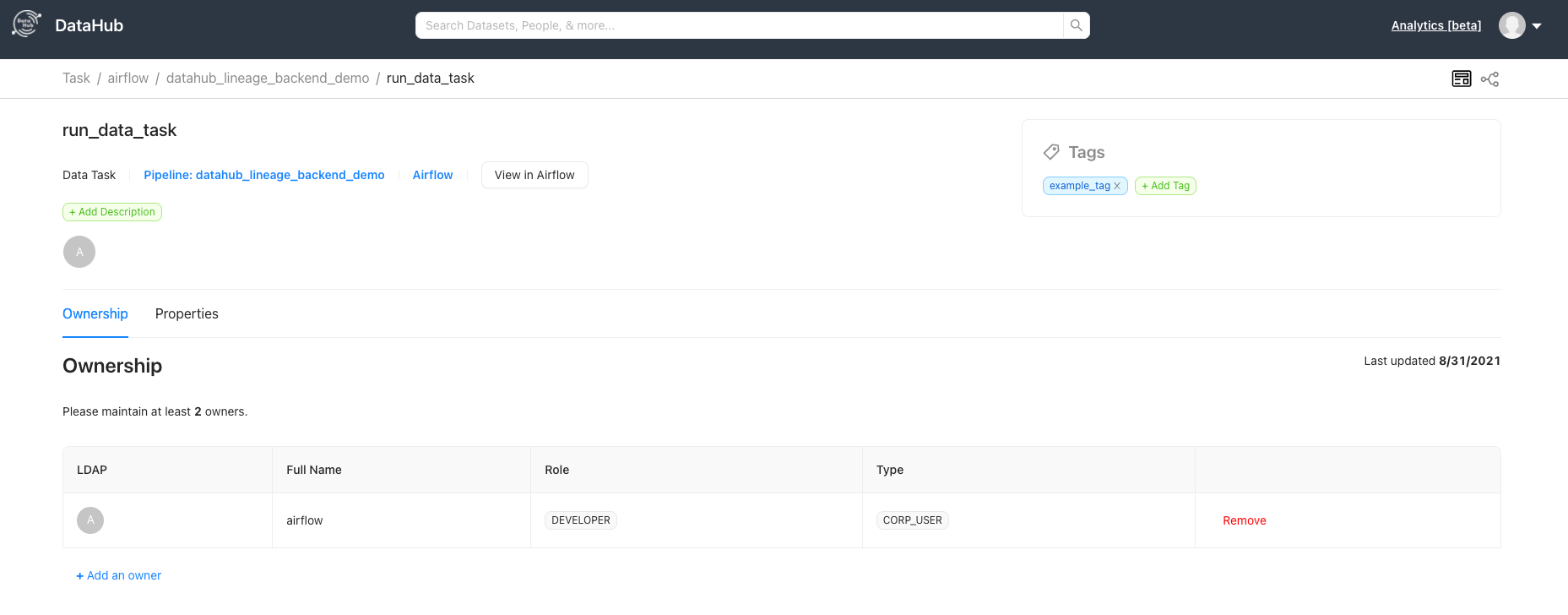

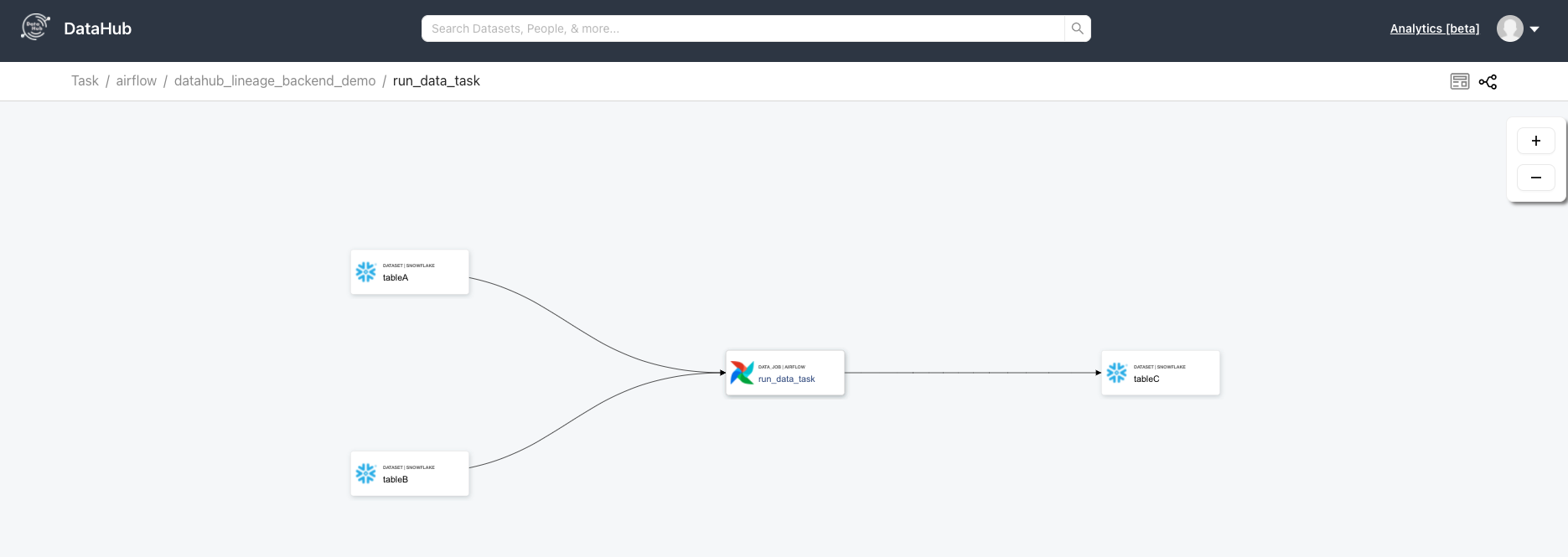

After the DAG runs successfully, go over to your DataHub instance to see the Pipeline and navigate its lineage.

-

-

+

+  +

+

+

+

+

+

+  +

+

-

-

+

+

+  +

+

+

+

+

+

+  +

+

+

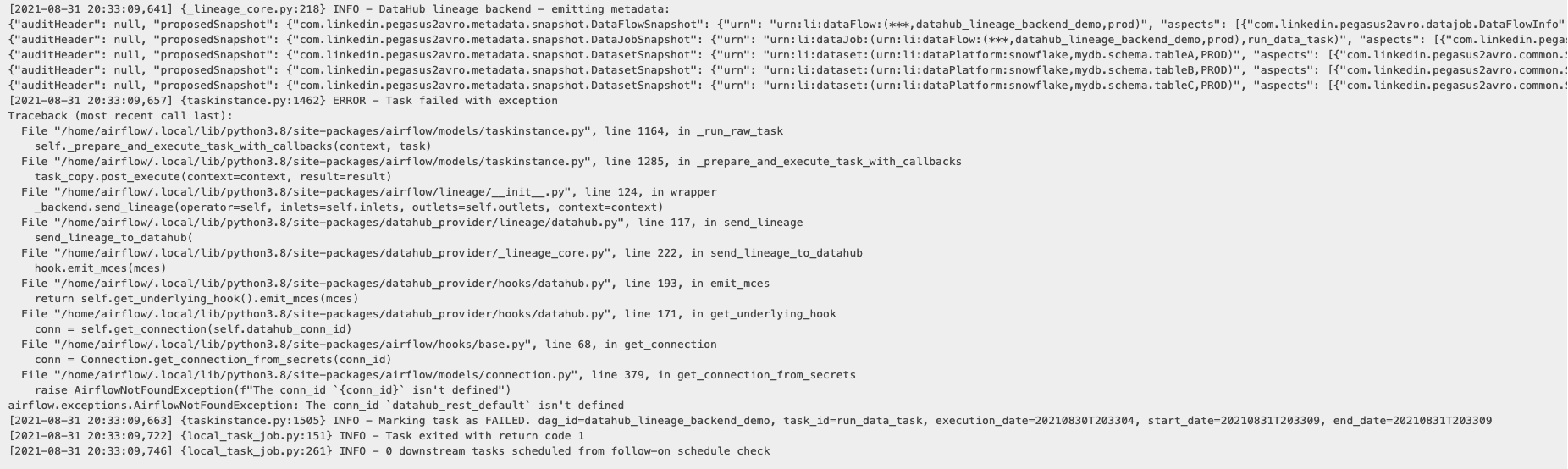

## Troubleshooting

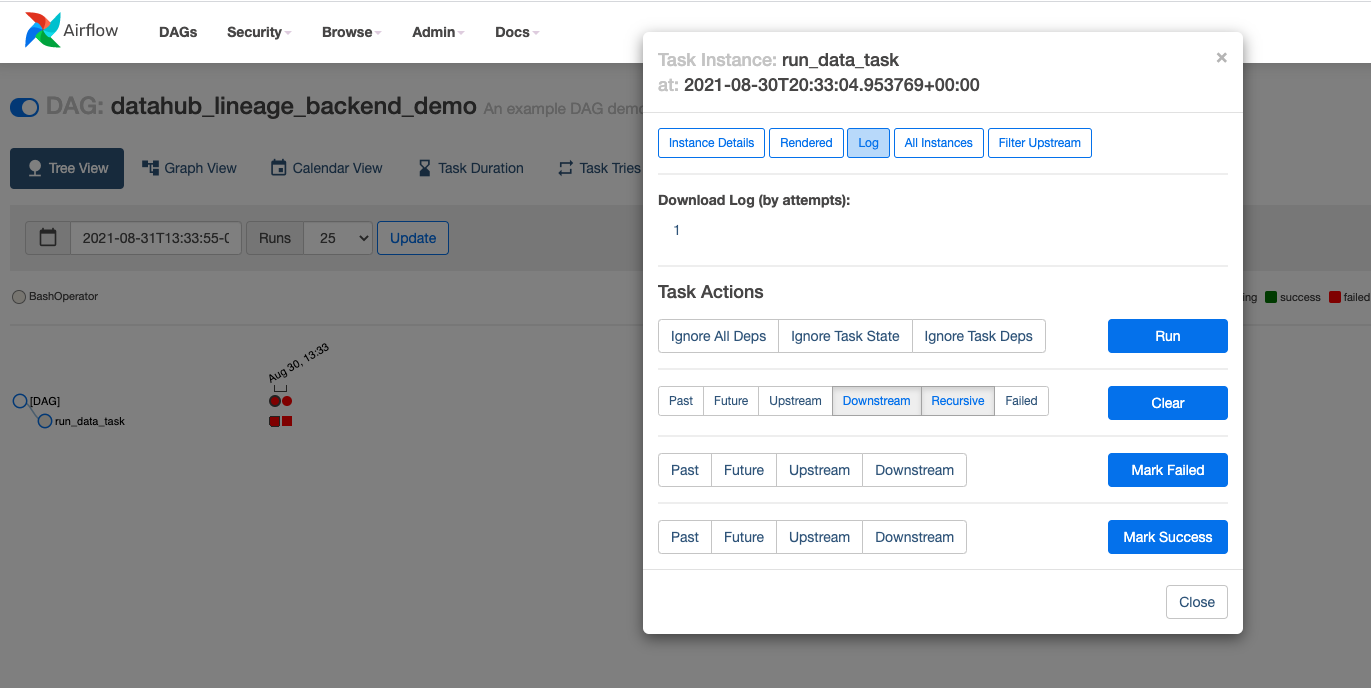

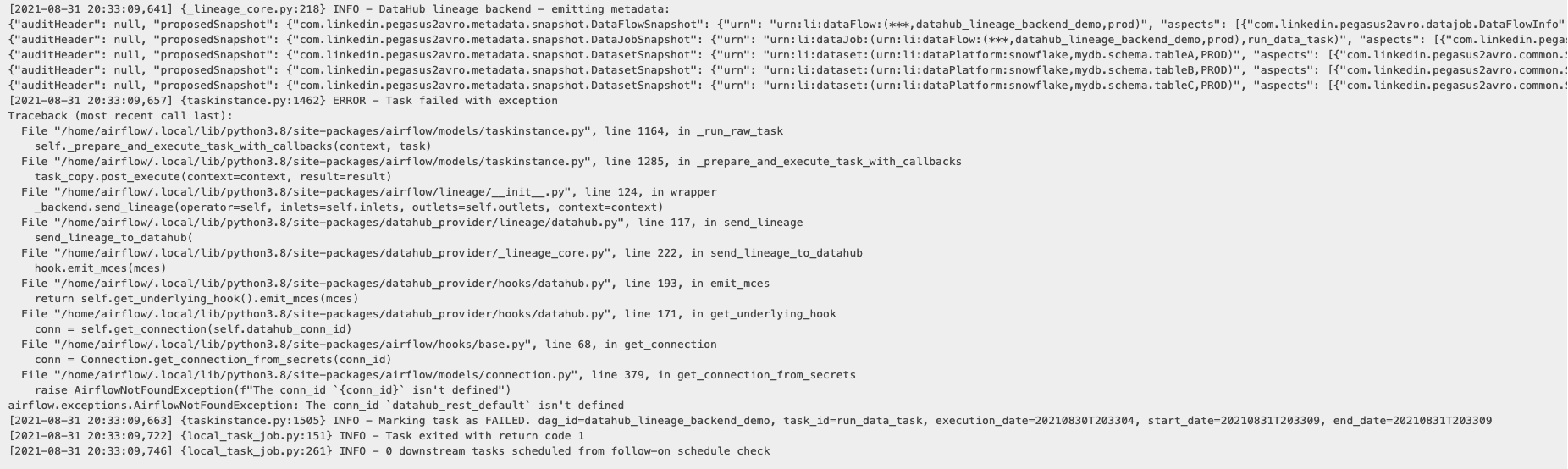

@@ -164,9 +196,17 @@ Most issues are related to connectivity between Airflow and DataHub.

Here is how you can debug them.

-

-

+

+  +

+

+

+

+

+

+  +

+

+

In this case, clearly the connection `datahub-rest` has not been registered. Looks like we forgot to register the connection with Airflow!

Let's execute Step 4 to register the datahub connection with Airflow.

@@ -175,4 +215,8 @@ In case the connection was registered successfully but you are still seeing `Fai

After re-running the DAG, we see success!

-

+

+

+  +

+

+

diff --git a/docker/kafka-setup/Dockerfile b/docker/kafka-setup/Dockerfile

index 5707234b85f57..a9c75521fead1 100644

--- a/docker/kafka-setup/Dockerfile

+++ b/docker/kafka-setup/Dockerfile

@@ -15,9 +15,6 @@ FROM python:3-alpine

ENV KAFKA_VERSION 3.4.1

ENV SCALA_VERSION 2.13

-# Set the classpath for JARs required by `cub`

-ENV CUB_CLASSPATH='"/usr/share/java/cp-base-new/*"'

-

LABEL name="kafka" version=${KAFKA_VERSION}

RUN apk add --no-cache bash coreutils

@@ -31,10 +28,6 @@ RUN mkdir -p /opt \

&& mv /opt/kafka_${SCALA_VERSION}-${KAFKA_VERSION} /opt/kafka \

&& adduser -DH -s /sbin/nologin kafka \

&& chown -R kafka: /opt/kafka \

- && echo "===> Installing python packages ..." \

- && pip install --no-cache-dir --upgrade pip wheel setuptools \

- && pip install jinja2 requests \

- && pip install "Cython<3.0" "PyYAML<6" --no-build-isolation \

&& rm -rf /tmp/* \

&& apk del --purge .build-deps

diff --git a/docs-website/build.gradle b/docs-website/build.gradle

index 12f37033efc2f..851c10d9ea97f 100644

--- a/docs-website/build.gradle

+++ b/docs-website/build.gradle

@@ -77,7 +77,12 @@ task yarnGenerate(type: YarnTask, dependsOn: [yarnInstall,

args = ['run', 'generate']

}

-task yarnStart(type: YarnTask, dependsOn: [yarnInstall, yarnGenerate]) {

+task downloadHistoricalVersions(type: Exec) {

+ workingDir '.'

+ commandLine 'python3', 'download_historical_versions.py'

+}

+

+task yarnStart(type: YarnTask, dependsOn: [yarnInstall, yarnGenerate, downloadHistoricalVersions]) {

args = ['run', 'start']

}

task fastReload(type: YarnTask) {

@@ -105,7 +110,7 @@ task serve(type: YarnTask, dependsOn: [yarnInstall] ) {

}

-task yarnBuild(type: YarnTask, dependsOn: [yarnLint, yarnGenerate]) {

+task yarnBuild(type: YarnTask, dependsOn: [yarnLint, yarnGenerate, downloadHistoricalVersions]) {

inputs.files(projectMdFiles)

inputs.file("package.json").withPathSensitivity(PathSensitivity.RELATIVE)

inputs.dir("src").withPathSensitivity(PathSensitivity.RELATIVE)

diff --git a/docs-website/docusaurus.config.js b/docs-website/docusaurus.config.js

index c10c178424b53..df69e8513fbfc 100644

--- a/docs-website/docusaurus.config.js

+++ b/docs-website/docusaurus.config.js

@@ -69,6 +69,11 @@ module.exports = {

label: "Roadmap",

position: "right",

},

+ {

+ type: 'docsVersionDropdown',

+ position: 'right',

+ dropdownActiveClassDisabled: true,

+ },

{

href: "https://slack.datahubproject.io",

"aria-label": "Slack",

diff --git a/docs-website/download_historical_versions.py b/docs-website/download_historical_versions.py

new file mode 100644

index 0000000000000..a005445cb1497

--- /dev/null

+++ b/docs-website/download_historical_versions.py

@@ -0,0 +1,60 @@

+import os

+import tarfile

+import urllib.request

+import json

+

+repo_url = "https://api.github.com/repos/datahub-project/static-assets"

+

+

+def download_file(url, destination):

+ with urllib.request.urlopen(url) as response:

+ with open(destination, "wb") as f:

+ while True:

+ chunk = response.read(8192)

+ if not chunk:

+ break

+ f.write(chunk)

+

+

+def fetch_tar_urls(repo_url, folder_path):

+ api_url = f"{repo_url}/contents/{folder_path}"

+ response = urllib.request.urlopen(api_url)

+ data = response.read().decode('utf-8')

+ tar_urls = [

+ file["download_url"] for file in json.loads(data) if file["name"].endswith(".tar.gz")

+ ]

+ print(tar_urls)

+ return tar_urls

+

+

+def main():

+ folder_path = "versioned_docs"

+ destination_dir = "versioned_docs"

+ if not os.path.exists(destination_dir):

+ os.makedirs(destination_dir)

+

+ tar_urls = fetch_tar_urls(repo_url, folder_path)

+

+ for url in tar_urls:

+ filename = os.path.basename(url)

+ destination_path = os.path.join(destination_dir, filename)

+

+ version = '.'.join(filename.split('.')[:3])

+ extracted_path = os.path.join(destination_dir, version)

+ print("extracted_path", extracted_path)

+ if os.path.exists(extracted_path):

+ print(f"{extracted_path} already exists, skipping downloads")

+ continue

+ try:

+ download_file(url, destination_path)

+ print(f"Downloaded {filename} to {destination_dir}")

+ with tarfile.open(destination_path, "r:gz") as tar:

+ tar.extractall()

+ os.remove(destination_path)

+ except urllib.error.URLError as e:

+ print(f"Error while downloading {filename}: {e}")

+ continue

+

+

+if __name__ == "__main__":

+ main()
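Review note on `download_historical_versions.py`: the skip check depends on how the version directory is derived from the tarball name, and on which entries of the contents listing are kept. The sketch below isolates those two rules as pure functions so they can be checked offline. The tarball name `version-0.10.5.tar.gz`, the `example.invalid` URLs, and the helper names are assumptions for illustration; they are not taken from the `static-assets` repository or from the script itself.

```python
import json
import os


def version_from_filename(filename: str) -> str:
    """Mirror the script's rule: keep the first three dot-separated parts."""
    return ".".join(filename.split(".")[:3])


def extracted_path_for(url: str, destination_dir: str = "versioned_docs") -> str:
    """Directory whose existence makes the script skip a download."""
    filename = os.path.basename(url)
    return os.path.join(destination_dir, version_from_filename(filename))


def tar_urls_from_listing(listing_json: str) -> list:
    """Keep only the download URLs of entries whose name ends in .tar.gz."""
    return [
        entry["download_url"]
        for entry in json.loads(listing_json)
        if entry["name"].endswith(".tar.gz")
    ]


# Hypothetical listing, shaped like the JSON array the script parses.
sample_listing = json.dumps(
    [
        {
            "name": "version-0.10.5.tar.gz",
            "download_url": "https://example.invalid/versioned_docs/version-0.10.5.tar.gz",
        },
        {
            "name": "README.md",
            "download_url": "https://example.invalid/versioned_docs/README.md",
        },
    ]
)

for url in tar_urls_from_listing(sample_listing):
    print(extracted_path_for(url))
```

The three-part rule works when the archive name has exactly two dots before `.tar.gz`; a name with more or fewer version components would yield a path that does not match the extracted directory, so the skip check would not take effect for it.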

diff --git a/docs-website/src/pages/docs/_components/SearchBar/index.jsx b/docs-website/src/pages/docs/_components/SearchBar/index.jsx

index 37f8a5c252aee..054c041d8a9e5 100644

--- a/docs-website/src/pages/docs/_components/SearchBar/index.jsx

+++ b/docs-website/src/pages/docs/_components/SearchBar/index.jsx

@@ -303,11 +303,16 @@ function SearchBar() {

strokeLinejoin="round"

>

-

- {docsSearchVersionsHelpers.versioningEnabled && }

-

- {!!searchResultState.totalResults && documentsFoundPlural(searchResultState.totalResults)}

+ {docsSearchVersionsHelpers.versioningEnabled && (

+

+ )}

+

+ {!!searchResultState.totalResults &&

+ documentsFoundPlural(searchResultState.totalResults)}

+

{searchResultState.items.length > 0 ? (

@@ -369,4 +374,4 @@ function SearchBar() {

);

}

-export default SearchBar;

+export default SearchBar;

\ No newline at end of file

diff --git a/docs-website/src/pages/docs/_components/SearchBar/search.module.scss b/docs-website/src/pages/docs/_components/SearchBar/search.module.scss

index 17e5f22490664..30a2973384ba6 100644

--- a/docs-website/src/pages/docs/_components/SearchBar/search.module.scss

+++ b/docs-website/src/pages/docs/_components/SearchBar/search.module.scss

@@ -21,13 +21,21 @@

height: 1.5rem;

}

+.searchQueryInput {

+ padding: 0.8rem 0.8rem 0.8rem 3rem;

+}

+

+.searchVersionInput {

+ padding: 0.8rem 2rem 0.8rem 2rem;

+ text-align: center;

+}

+

.searchQueryInput,

.searchVersionInput {

border-radius: 1000em;

border-style: solid;

border-color: transparent;

font: var(--ifm-font-size-base) var(--ifm-font-family-base);

- padding: 0.8rem 0.8rem 0.8rem 3rem;

width: 100%;

background: var(--docsearch-searchbox-background);

color: var(--docsearch-text-color);

@@ -93,6 +101,7 @@

@media only screen and (max-width: 996px) {

.searchVersionColumn {

max-width: 40% !important;

+ margin: auto;

}

.searchResultsColumn {

@@ -113,9 +122,15 @@

.searchVersionColumn {

max-width: 100% !important;

padding-left: var(--ifm-spacing-horizontal) !important;

+ margin: auto;

}

}

+.searchVersionColumn {

+ margin: auto;

+}

+

+

.loadingSpinner {

width: 3rem;

height: 3rem;

diff --git a/docs-website/versioned_sidebars/version-0.10.5-sidebars.json b/docs-website/versioned_sidebars/version-0.10.5-sidebars.json

new file mode 100644

index 0000000000000..67179075fc994

--- /dev/null

+++ b/docs-website/versioned_sidebars/version-0.10.5-sidebars.json

@@ -0,0 +1,594 @@

+{

+ "overviewSidebar": [

+ {

+ "label": "Getting Started",

+ "type": "category",

+ "collapsed": true,

+ "items": [

+ {

+ "type": "doc",

+ "label": "Introduction",

+ "id": "docs/features"

+ },

+ {

+ "type": "doc",

+ "label": "Quickstart",

+ "id": "docs/quickstart"

+ },

+ {

+ "type": "link",

+ "label": "Demo",

+ "href": "https://demo.datahubproject.io/"

+ },

+ "docs/what-is-datahub/datahub-concepts",

+ "docs/saas"

+ ]

+ },

+ {

+ "Integrations": [

+ {

+ "type": "doc",

+ "label": "Introduction",

+ "id": "metadata-ingestion/README"

+ },

+ {

+ "Quickstart Guides": [

+ {

+ "BigQuery": [

+ "docs/quick-ingestion-guides/bigquery/overview",

+ "docs/quick-ingestion-guides/bigquery/setup",

+ "docs/quick-ingestion-guides/bigquery/configuration"

+ ]

+ },

+ {

+ "Redshift": [

+ "docs/quick-ingestion-guides/redshift/overview",

+ "docs/quick-ingestion-guides/redshift/setup",

+ "docs/quick-ingestion-guides/redshift/configuration"

+ ]

+ },

+ {

+ "Snowflake": [

+ "docs/quick-ingestion-guides/snowflake/overview",

+ "docs/quick-ingestion-guides/snowflake/setup",

+ "docs/quick-ingestion-guides/snowflake/configuration"

+ ]

+ },

+ {

+ "Tableau": [

+ "docs/quick-ingestion-guides/tableau/overview",

+ "docs/quick-ingestion-guides/tableau/setup",

+ "docs/quick-ingestion-guides/tableau/configuration"

+ ]

+ },

+ {

+ "PowerBI": [

+ "docs/quick-ingestion-guides/powerbi/overview",

+ "docs/quick-ingestion-guides/powerbi/setup",

+ "docs/quick-ingestion-guides/powerbi/configuration"

+ ]

+ }

+ ]

+ },

+ {

+ "Sources": [

+ {

+ "type": "doc",

+ "id": "docs/lineage/airflow",

+ "label": "Airflow"

+ },

+ "metadata-integration/java/spark-lineage/README",

+ "metadata-ingestion/integration_docs/great-expectations",

+ "metadata-integration/java/datahub-protobuf/README",

+ {

+ "type": "autogenerated",

+ "dirName": "docs/generated/ingestion/sources"

+ }

+ ]

+ },

+ {

+ "Sinks": [

+ {

+ "type": "autogenerated",

+ "dirName": "metadata-ingestion/sink_docs"

+ }

+ ]

+ },

+ {

+ "Transformers": [

+ "metadata-ingestion/docs/transformer/intro",

+ "metadata-ingestion/docs/transformer/dataset_transformer"

+ ]

+ },

+ {

+ "Advanced Guides": [

+ {

+ "Scheduling Ingestion": [

+ "metadata-ingestion/schedule_docs/intro",

+ "metadata-ingestion/schedule_docs/cron",

+ "metadata-ingestion/schedule_docs/airflow",

+ "metadata-ingestion/schedule_docs/kubernetes"

+ ]

+ },

+ "docs/platform-instances",

+ "metadata-ingestion/docs/dev_guides/stateful",

+ "metadata-ingestion/docs/dev_guides/classification",

+ "metadata-ingestion/docs/dev_guides/add_stateful_ingestion_to_source",

+ "metadata-ingestion/docs/dev_guides/sql_profiles"

+ ]

+ }

+ ]

+ },

+ {

+ "Deployment": [

+ "docs/deploy/aws",

+ "docs/deploy/gcp",

+ "docker/README",

+ "docs/deploy/kubernetes",

+ "docs/deploy/environment-vars",

+ {

+ "Authentication": [

+ "docs/authentication/README",

+ "docs/authentication/concepts",

+ "docs/authentication/changing-default-credentials",

+ "docs/authentication/guides/add-users",

+ {

+ "Frontend Authentication": [

+ "docs/authentication/guides/jaas",

+ {

+ "OIDC Authentication": [

+ "docs/authentication/guides/sso/configure-oidc-react",

+ "docs/authentication/guides/sso/configure-oidc-react-google",

+ "docs/authentication/guides/sso/configure-oidc-react-okta",

+ "docs/authentication/guides/sso/configure-oidc-react-azure"

+ ]

+ }

+ ]

+ },

+ "docs/authentication/introducing-metadata-service-authentication",

+ "docs/authentication/personal-access-tokens"

+ ]

+ },

+ {

+ "Authorization": [

+ "docs/authorization/README",

+ "docs/authorization/roles",

+ "docs/authorization/policies",

+ "docs/authorization/groups"

+ ]

+ },

+ {

+ "Advanced Guides": [

+ "docs/how/delete-metadata",

+ "docs/how/configuring-authorization-with-apache-ranger",

+ "docs/how/backup-datahub",

+ "docs/how/restore-indices",

+ "docs/advanced/db-retention",

+ "docs/advanced/monitoring",

+ "docs/how/extract-container-logs",

+ "docs/deploy/telemetry",

+ "docs/how/kafka-config",

+ "docs/deploy/confluent-cloud",

+ "docs/advanced/no-code-upgrade",

+ "docs/how/jattach-guide"

+ ]

+ },

+ "docs/how/updating-datahub"

+ ]

+ },

+ {

+ "API": [

+ "docs/api/datahub-apis",

+ {

+ "GraphQL API": [

+ {

+ "label": "Overview",

+ "type": "doc",

+ "id": "docs/api/graphql/overview"

+ },

+ {

+ "Reference": [

+ {

+ "type": "doc",

+ "label": "Queries",

+ "id": "graphql/queries"

+ },

+ {

+ "type": "doc",

+ "label": "Mutations",

+ "id": "graphql/mutations"

+ },

+ {

+ "type": "doc",

+ "label": "Objects",

+ "id": "graphql/objects"

+ },

+ {

+ "type": "doc",

+ "label": "Inputs",

+ "id": "graphql/inputObjects"

+ },

+ {

+ "type": "doc",

+ "label": "Interfaces",

+ "id": "graphql/interfaces"

+ },

+ {

+ "type": "doc",

+ "label": "Unions",

+ "id": "graphql/unions"

+ },

+ {

+ "type": "doc",

+ "label": "Enums",

+ "id": "graphql/enums"

+ },

+ {

+ "type": "doc",

+ "label": "Scalars",

+ "id": "graphql/scalars"

+ }

+ ]

+ },

+ {

+ "Guides": [

+ {

+ "type": "doc",

+ "label": "How To Set Up GraphQL",

+ "id": "docs/api/graphql/how-to-set-up-graphql"

+ },

+ {

+ "type": "doc",

+ "label": "Getting Started With GraphQL",

+ "id": "docs/api/graphql/getting-started"

+ },

+ {

+ "type": "doc",

+ "label": "Access Token Management",

+ "id": "docs/api/graphql/token-management"

+ }

+ ]

+ }

+ ]

+ },

+ {

+ "type": "doc",

+ "label": "OpenAPI",

+ "id": "docs/api/openapi/openapi-usage-guide"

+ },

+ "docs/dev-guides/timeline",

+ {

+ "Rest.li API": [

+ {

+ "type": "doc",

+ "label": "Rest.li API Guide",

+ "id": "docs/api/restli/restli-overview"

+ },

+ {

+ "type": "doc",

+ "label": "Restore Indices",

+ "id": "docs/api/restli/restore-indices"

+ },

+ {

+ "type": "doc",

+ "label": "Get Index Sizes",

+ "id": "docs/api/restli/get-index-sizes"

+ },

+ {

+ "type": "doc",

+ "label": "Truncate Timeseries Aspect",

+ "id": "docs/api/restli/truncate-time-series-aspect"

+ },

+ {

+ "type": "doc",

+ "label": "Get ElasticSearch Task Status Endpoint",

+ "id": "docs/api/restli/get-elastic-task-status"

+ },

+ {

+ "type": "doc",

+ "label": "Evaluate Tests",

+ "id": "docs/api/restli/evaluate-tests"

+ },

+ {

+ "type": "doc",

+ "label": "Aspect Versioning and Rest.li Modeling",

+ "id": "docs/advanced/aspect-versioning"

+ }

+ ]

+ },

+ {

+ "Python SDK": [

+ "metadata-ingestion/as-a-library",

+ {

+ "Python SDK Reference": [

+ {

+ "type": "autogenerated",

+ "dirName": "python-sdk"

+ }

+ ]

+ }

+ ]

+ },

+ "metadata-integration/java/as-a-library",

+ {

+ "API and SDK Guides": [

+ "docs/advanced/patch",

+ "docs/api/tutorials/datasets",

+ "docs/api/tutorials/lineage",

+ "docs/api/tutorials/tags",

+ "docs/api/tutorials/terms",

+ "docs/api/tutorials/owners",

+ "docs/api/tutorials/domains",

+ "docs/api/tutorials/deprecation",

+ "docs/api/tutorials/descriptions",

+ "docs/api/tutorials/custom-properties",

+ "docs/api/tutorials/ml"

+ ]

+ },

+ {

+ "type": "category",

+ "label": "DataHub CLI",

+ "link": {

+ "type": "doc",

+ "id": "docs/cli"

+ },

+ "items": [

+ "docs/datahub_lite"

+ ]

+ },

+ {

+ "type": "category",

+ "label": "Datahub Actions",

+ "link": {

+ "type": "doc",

+ "id": "docs/act-on-metadata"

+ },

+ "items": [

+ "docs/actions/README",

+ "docs/actions/quickstart",

+ "docs/actions/concepts",

+ {

+ "Sources": [

+ {

+ "type": "autogenerated",

+ "dirName": "docs/actions/sources"

+ }

+ ]

+ },

+ {

+ "Events": [

+ {

+ "type": "autogenerated",

+ "dirName": "docs/actions/events"

+ }

+ ]

+ },

+ {

+ "Actions": [

+ {

+ "type": "autogenerated",

+ "dirName": "docs/actions/actions"

+ }

+ ]

+ },

+ {

+ "Guides": [

+ {

+ "type": "autogenerated",

+ "dirName": "docs/actions/guides"

+ }

+ ]

+ }

+ ]

+ }

+ ]

+ },

+ {

+ "Features": [

+ "docs/ui-ingestion",

+ "docs/how/search",

+ "docs/schema-history",

+ "docs/domains",

+ "docs/dataproducts",

+ "docs/glossary/business-glossary",

+ "docs/tags",

+ "docs/ownership/ownership-types",

+ "docs/browse",

+ "docs/authorization/access-policies-guide",

+ "docs/features/dataset-usage-and-query-history",

+ "docs/posts",

+ "docs/sync-status",

+ "docs/lineage/lineage-feature-guide",

+ {

+ "type": "doc",

+ "id": "docs/tests/metadata-tests",

+ "className": "saasOnly"

+ },

+ "docs/act-on-metadata/impact-analysis",

+ {

+ "Observability": [

+ "docs/managed-datahub/observe/freshness-assertions"

+ ]

+ }

+ ]

+ },

+ {

+ "Develop": [

+ {

+ "DataHub Metadata Model": [

+ "docs/modeling/metadata-model",

+ "docs/modeling/extending-the-metadata-model",

+ "docs/what/mxe",

+ {

+ "Entities": [

+ {

+ "type": "autogenerated",

+ "dirName": "docs/generated/metamodel/entities"

+ }

+ ]

+ }

+ ]

+ },

+ {

+ "Architecture": [

+ "docs/architecture/architecture",

+ "docs/components",

+ "docs/architecture/metadata-ingestion",

+ "docs/architecture/metadata-serving",

+ "docs/architecture/docker-containers"

+ ]

+ },

+ {

+ "Developing on DataHub": [

+ "docs/developers",

+ "docs/docker/development",

+ "metadata-ingestion/developing",

+ "docs/api/graphql/graphql-endpoint-development",

+ {

+ "Modules": [

+ "datahub-web-react/README",

+ "datahub-frontend/README",

+ "datahub-graphql-core/README",

+ "metadata-service/README",

+ "metadata-jobs/mae-consumer-job/README",

+ "metadata-jobs/mce-consumer-job/README"

+ ]

+ }

+ ]

+ },

+ "docs/plugins",

+ {

+ "Troubleshooting": [

+ "docs/troubleshooting/quickstart",

+ "docs/troubleshooting/build",

+ "docs/troubleshooting/general"

+ ]

+ },

+ {

+ "Advanced": [

+ "metadata-ingestion/docs/dev_guides/reporting_telemetry",

+ "docs/advanced/mcp-mcl",

+ "docker/datahub-upgrade/README",

+ "docs/advanced/no-code-modeling",

+ "datahub-web-react/src/app/analytics/README",

+ "docs/how/migrating-graph-service-implementation",

+ "docs/advanced/field-path-spec-v2",

+ "metadata-ingestion/adding-source",

+ "docs/how/add-custom-ingestion-source",

+ "docs/how/add-custom-data-platform",

+ "docs/advanced/browse-paths-upgrade",

+ "docs/browseV2/browse-paths-v2"

+ ]

+ }

+ ]

+ },

+ {

+ "Community": [

+ "docs/slack",

+ "docs/townhalls",

+ "docs/townhall-history",

+ "docs/CODE_OF_CONDUCT",

+ "docs/CONTRIBUTING",

+ "docs/links",

+ "docs/rfc"

+ ]

+ },

+ {

+ "Managed DataHub": [

+ "docs/managed-datahub/managed-datahub-overview",

+ "docs/managed-datahub/welcome-acryl",

+ {

+ "type": "doc",

+ "id": "docs/managed-datahub/saas-slack-setup",

+ "className": "saasOnly"

+ },

+ {

+ "type": "doc",

+ "id": "docs/managed-datahub/approval-workflows",

+ "className": "saasOnly"

+ },

+ {

+ "Metadata Ingestion With Acryl": [

+ "docs/managed-datahub/metadata-ingestion-with-acryl/ingestion"

+ ]

+ },

+ {

+ "DataHub API": [

+ {

+ "type": "doc",

+ "id": "docs/managed-datahub/datahub-api/entity-events-api",

+ "className": "saasOnly"

+ },

+ {

+ "GraphQL API": [

+ "docs/managed-datahub/datahub-api/graphql-api/getting-started",

+ {

+ "type": "doc",

+ "id": "docs/managed-datahub/datahub-api/graphql-api/incidents-api-beta",

+ "className": "saasOnly"

+ }

+ ]

+ }

+ ]

+ },

+ {

+ "Integrations": [

+ {

+ "type": "doc",

+ "id": "docs/managed-datahub/integrations/aws-privatelink",

+ "className": "saasOnly"

+ },

+ {

+ "type": "doc",

+ "id": "docs/managed-datahub/integrations/oidc-sso-integration",

+ "className": "saasOnly"

+ }

+ ]

+ },

+ {

+ "Operator Guide": [

+ {

+ "type": "doc",

+ "id": "docs/managed-datahub/operator-guide/setting-up-remote-ingestion-executor-on-aws",

+ "className": "saasOnly"

+ },

+ {

+ "type": "doc",

+ "id": "docs/managed-datahub/operator-guide/setting-up-events-api-on-aws-eventbridge",

+ "className": "saasOnly"

+ }

+ ]

+ },

+ {

+ "type": "doc",

+ "id": "docs/managed-datahub/chrome-extension",

+ "className": "saasOnly"

+ },

+ {

+ "Managed DataHub Release History": [

+ "docs/managed-datahub/release-notes/v_0_2_10",

+ "docs/managed-datahub/release-notes/v_0_2_9",

+ "docs/managed-datahub/release-notes/v_0_2_8",

+ "docs/managed-datahub/release-notes/v_0_2_7",

+ "docs/managed-datahub/release-notes/v_0_2_6",

+ "docs/managed-datahub/release-notes/v_0_2_5",

+ "docs/managed-datahub/release-notes/v_0_2_4",

+ "docs/managed-datahub/release-notes/v_0_2_3",

+ "docs/managed-datahub/release-notes/v_0_2_2",

+ "docs/managed-datahub/release-notes/v_0_2_1",

+ "docs/managed-datahub/release-notes/v_0_2_0",

+ "docs/managed-datahub/release-notes/v_0_1_73",

+ "docs/managed-datahub/release-notes/v_0_1_72",

+ "docs/managed-datahub/release-notes/v_0_1_70",

+ "docs/managed-datahub/release-notes/v_0_1_69"

+ ]

+ }

+ ]

+ },

+ {

+ "Release History": [

+ "releases"

+ ]

+ }

+ ]

+}

diff --git a/docs-website/versions.json b/docs-website/versions.json

new file mode 100644

index 0000000000000..0b79ac9498e06

--- /dev/null

+++ b/docs-website/versions.json

@@ -0,0 +1,3 @@

+[

+ "0.10.5"

+]

diff --git a/docs/actions/concepts.md b/docs/actions/concepts.md

index 381f2551d2237..5b05a0c586a5d 100644

--- a/docs/actions/concepts.md

+++ b/docs/actions/concepts.md

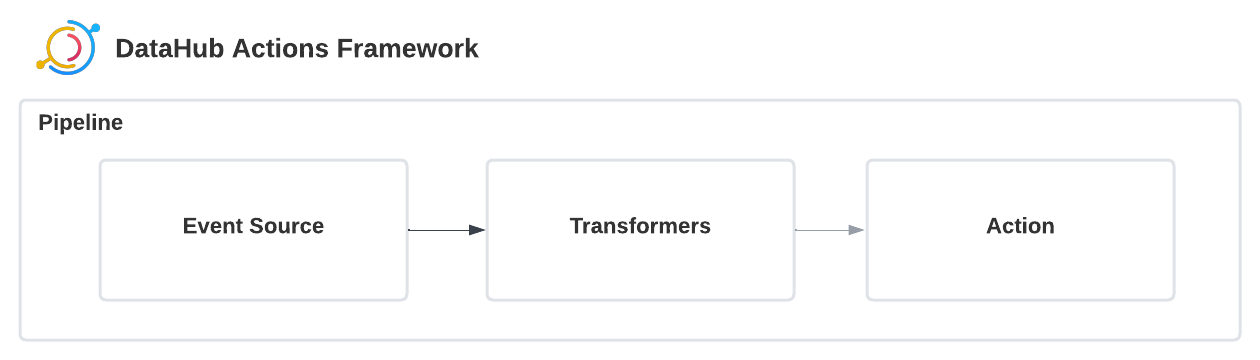

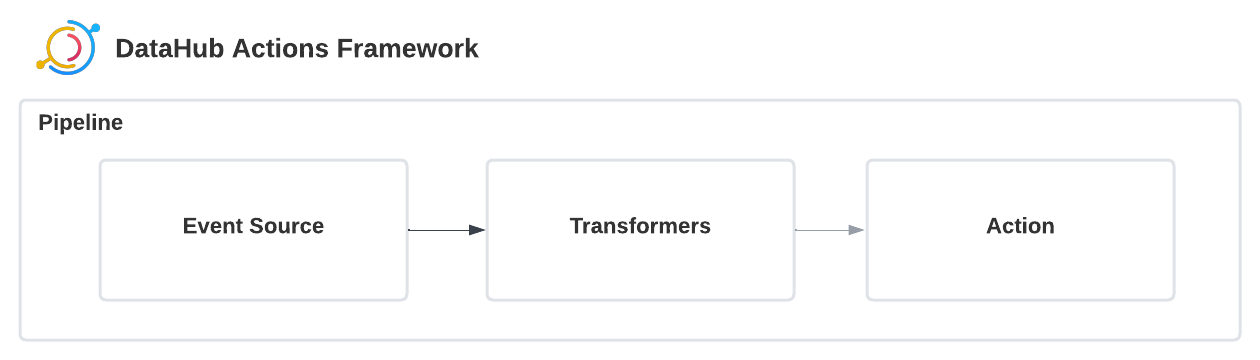

@@ -40,7 +40,11 @@ The Actions Framework consists of a few core concepts--

Each of these will be described in detail below.

-

+

+

+  +

+

+

**In the Actions Framework, Events flow continuously from left-to-right.**

### Pipelines

diff --git a/docs/advanced/no-code-modeling.md b/docs/advanced/no-code-modeling.md

index 9c8f6761a62bc..d76b776d3dddb 100644

--- a/docs/advanced/no-code-modeling.md

+++ b/docs/advanced/no-code-modeling.md

@@ -159,11 +159,19 @@ along with simplifying the number of raw data models that need defined, includin

From an architectural PoV, we will move from a before that looks something like this:

-

+

+

+  +

+

+

to an after that looks like this

-

+

+

+  +

+

+

That is, a move away from patterns of strong-typing-everywhere to a more generic + flexible world.

diff --git a/docs/api/graphql/how-to-set-up-graphql.md b/docs/api/graphql/how-to-set-up-graphql.md

index 562e8edb9f5d9..584bf34ad3f92 100644

--- a/docs/api/graphql/how-to-set-up-graphql.md

+++ b/docs/api/graphql/how-to-set-up-graphql.md

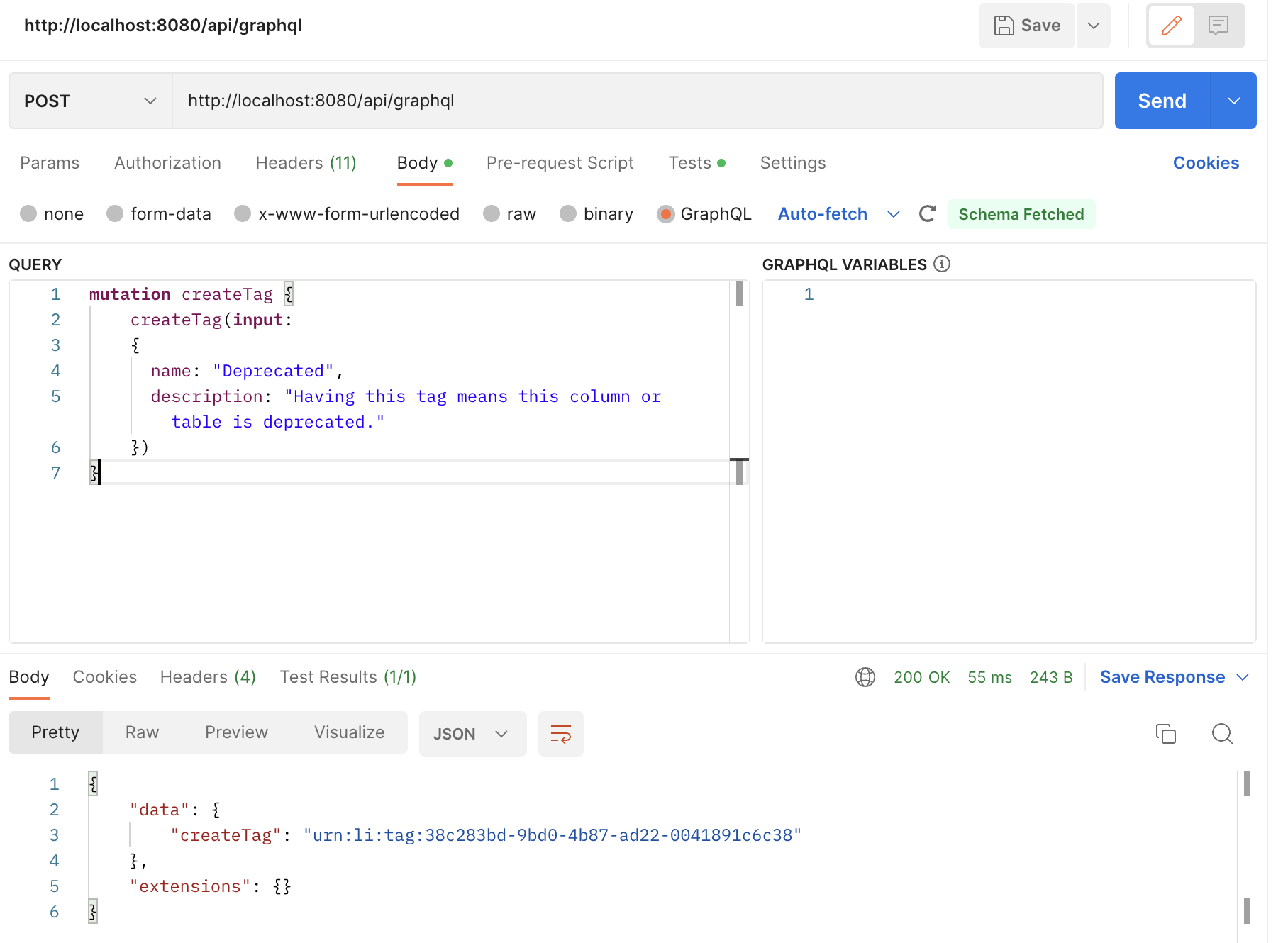

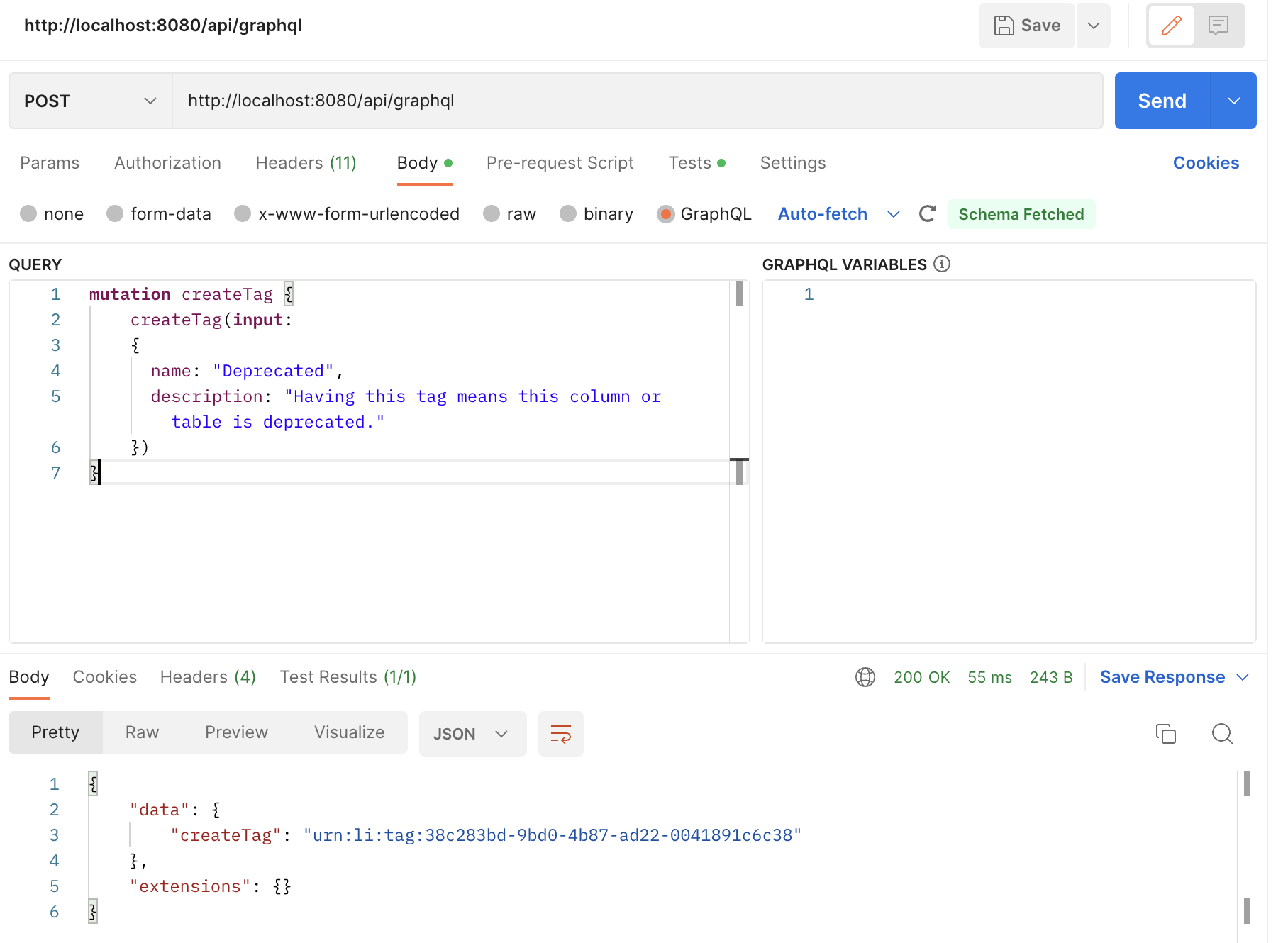

@@ -62,7 +62,11 @@ Postman is a popular API client that provides a graphical user interface for sen

Within Postman, you can create a `POST` request and set the request URL to the `/api/graphql` endpoint.

In the request body, select the `GraphQL` option and enter your GraphQL query in the request body.

-

+

+

+  +

+

+

Please refer to [Querying with GraphQL](https://learning.postman.com/docs/sending-requests/graphql/graphql/) in the Postman documentation for more information.

diff --git a/docs/api/tutorials/custom-properties.md b/docs/api/tutorials/custom-properties.md

index dbc07bfaa712e..fe0d7e62dcde8 100644

--- a/docs/api/tutorials/custom-properties.md

+++ b/docs/api/tutorials/custom-properties.md

@@ -34,7 +34,11 @@ In this example, we will add some custom properties `cluster_name` and `retentio

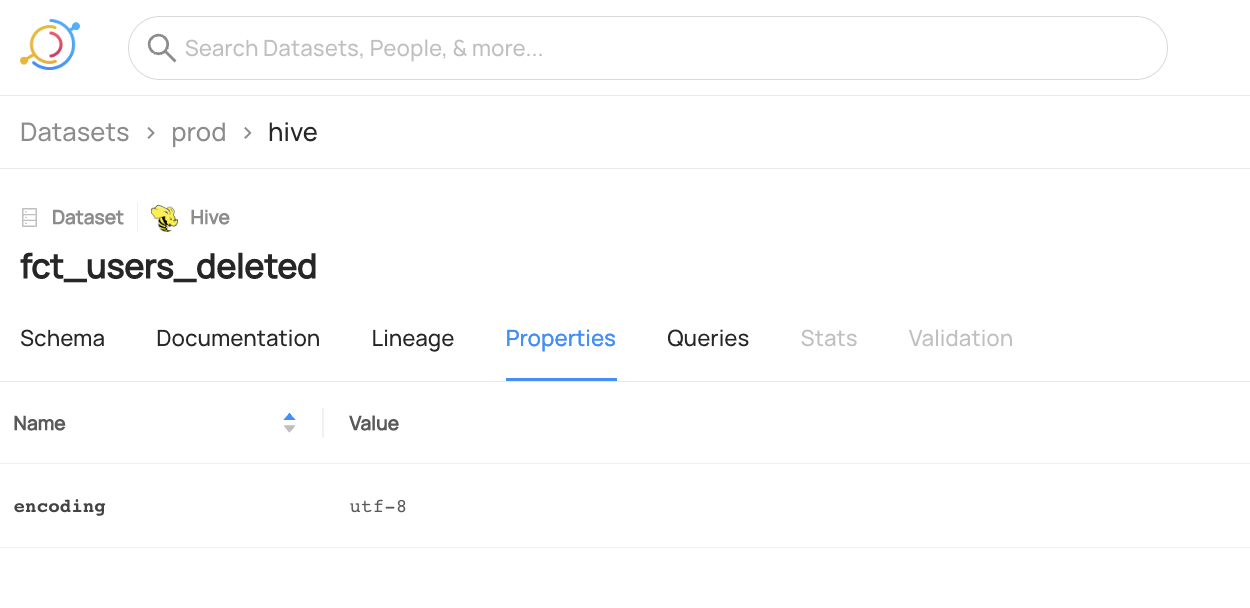

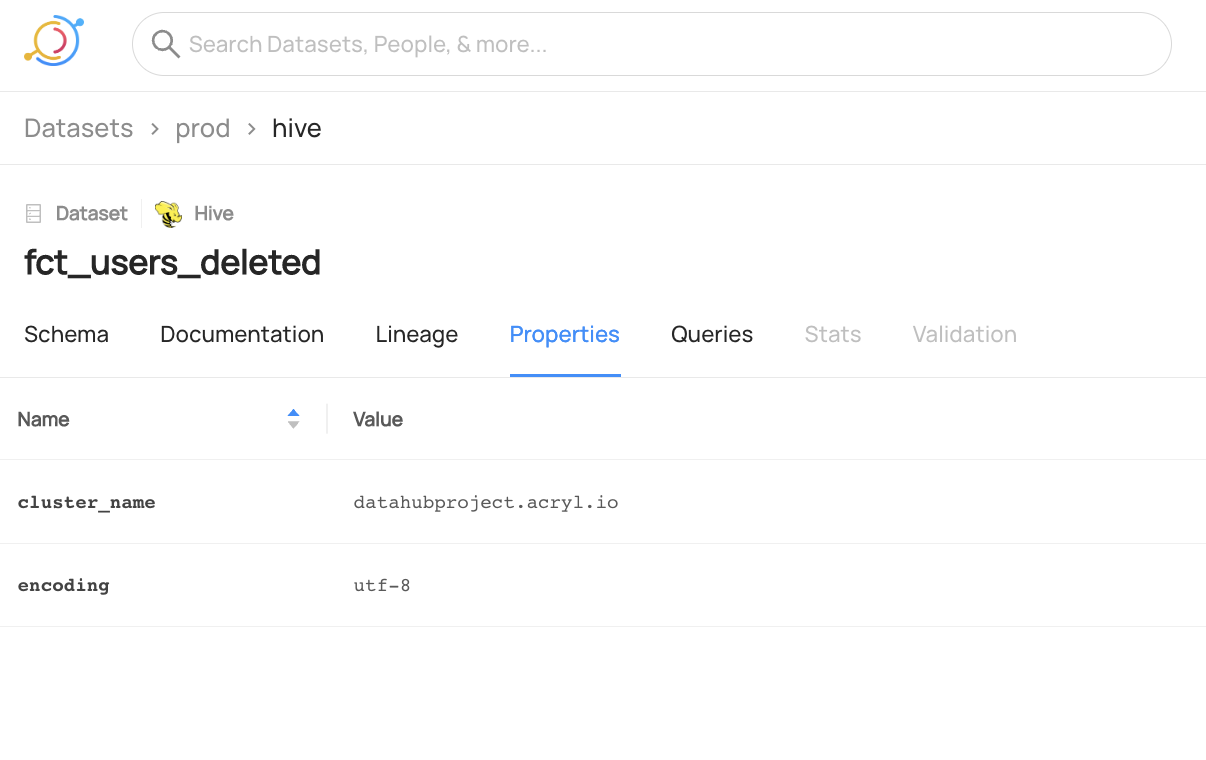

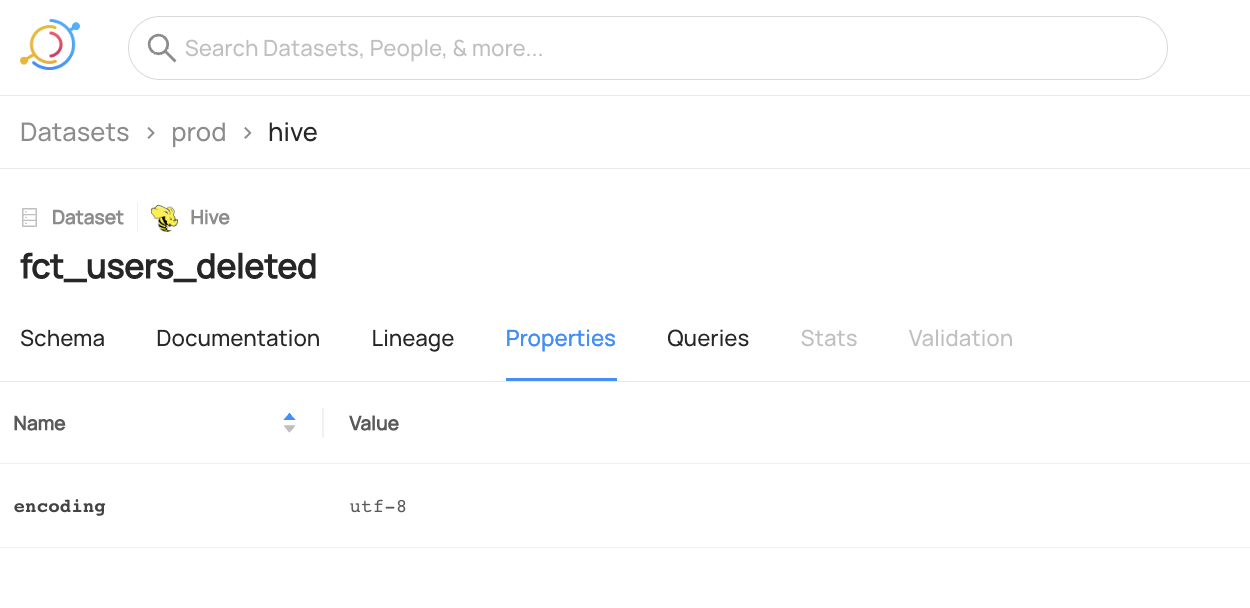

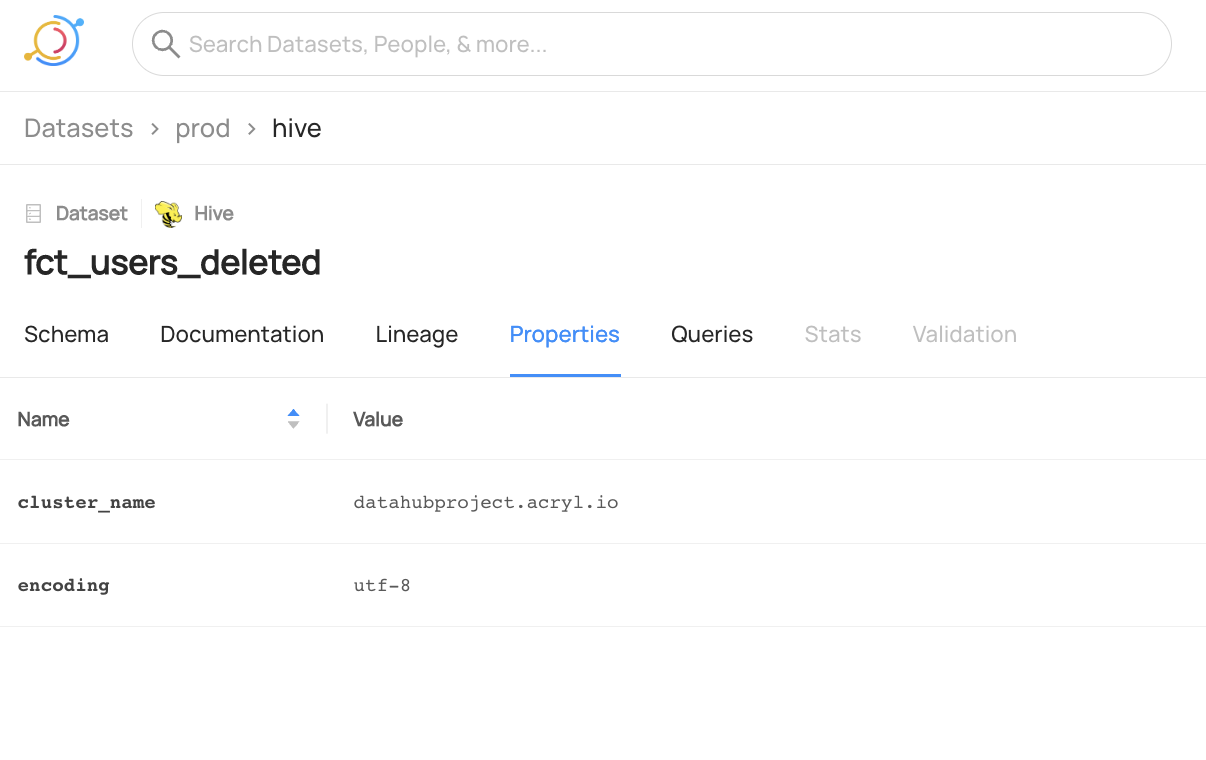

After you have ingested sample data, the dataset `fct_users_deleted` should have a custom properties section with `encoding` set to `utf-8`.

-

+

+

+  +

+

+

```shell

datahub get --urn "urn:li:dataset:(urn:li:dataPlatform:hive,fct_users_deleted,PROD)" --aspect datasetProperties

@@ -80,7 +84,11 @@ The following code adds custom properties `cluster_name` and `retention_time` to

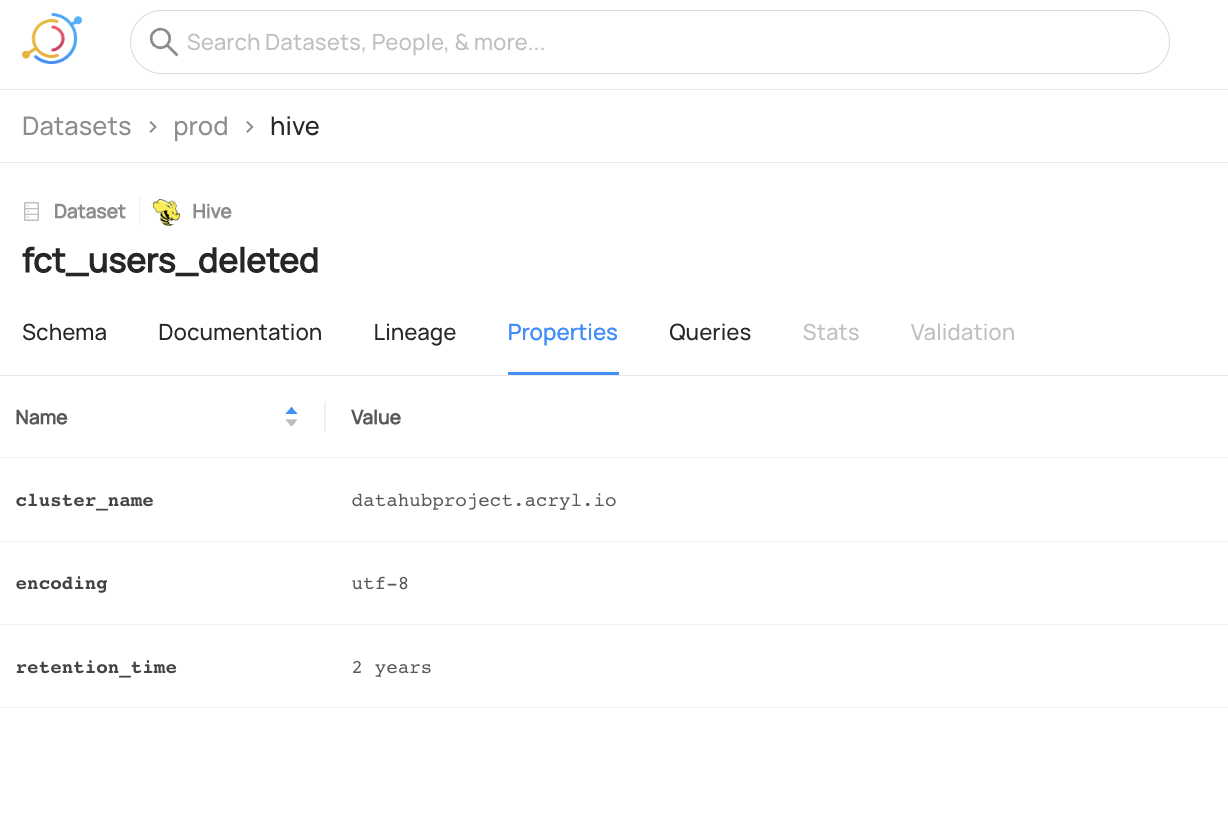

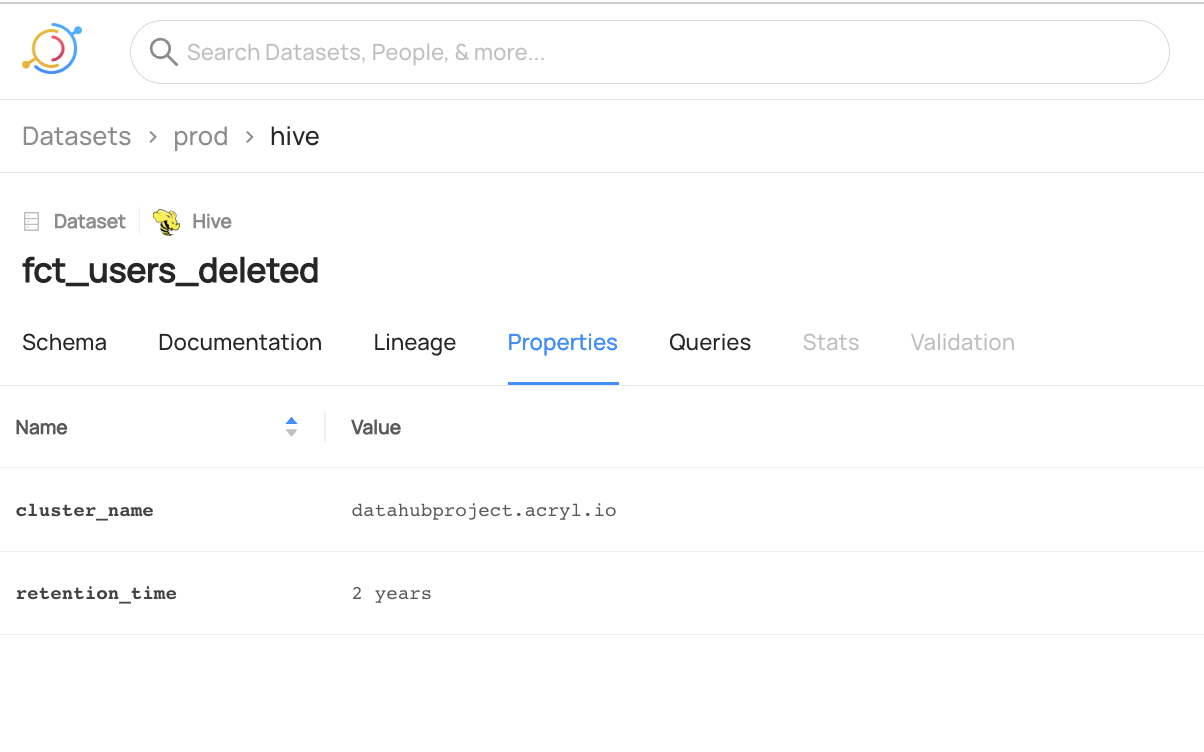

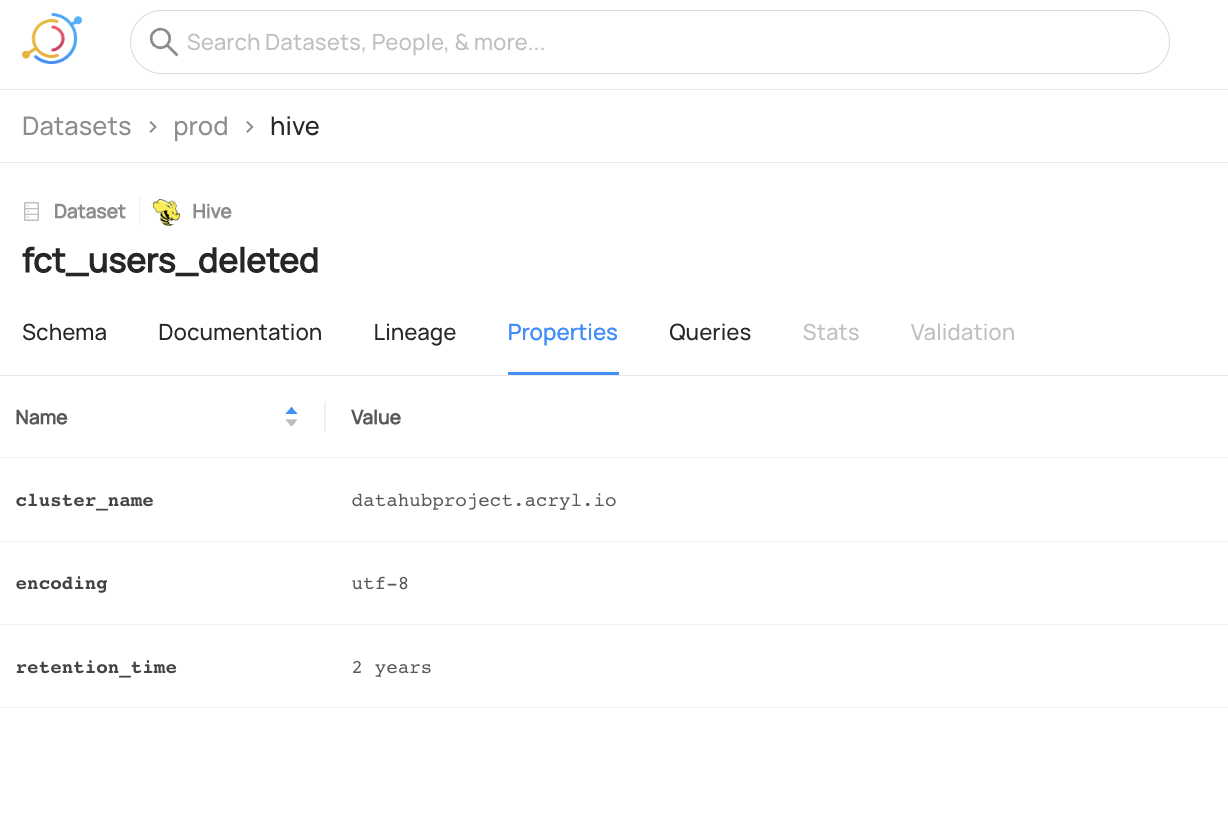

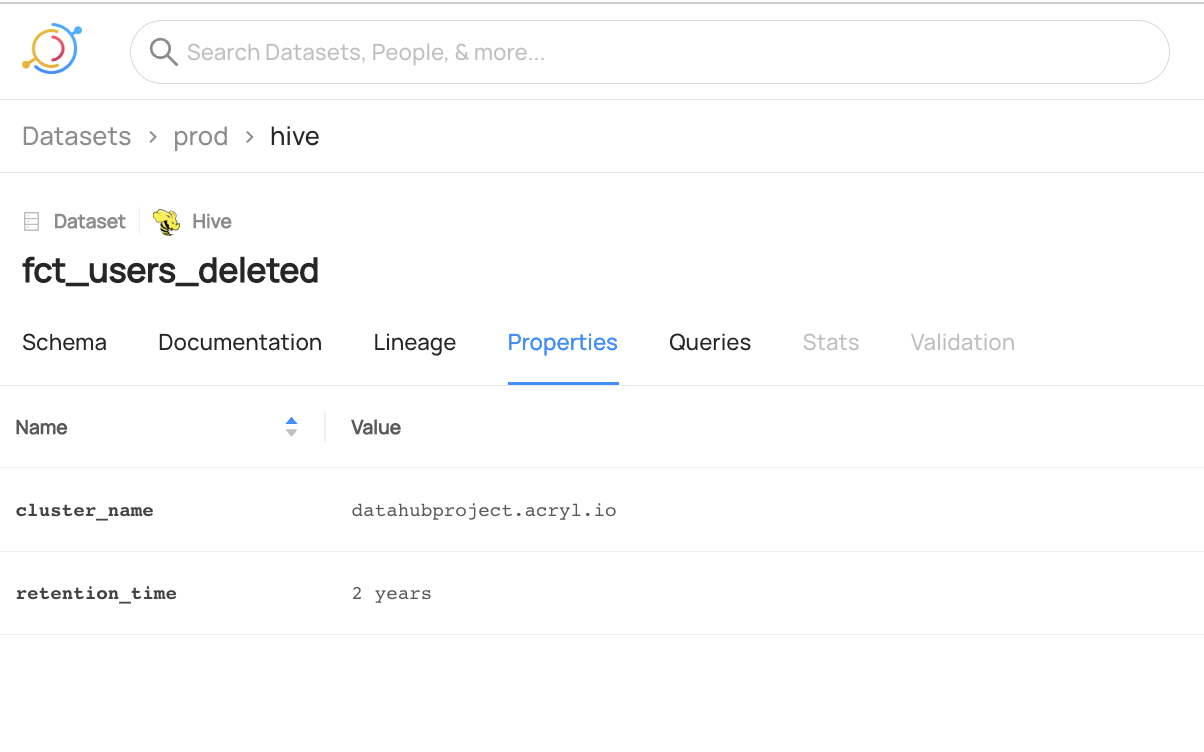

You can now see the two new properties are added to `fct_users_deleted` and the previous property `encoding` is unchanged.

-

+

+

+  +

+

+

We can also verify this operation by programmatically checking the `datasetProperties` aspect after running this code using the `datahub` cli.

@@ -130,7 +138,11 @@ The following code shows you how can add and remove custom properties in the sam

You can now see the `cluster_name` property is added to `fct_users_deleted` and the `retention_time` property is removed.

-

+

+

+  +

+

+

We can also verify this operation programmatically by checking the `datasetProperties` aspect using the `datahub` cli.

@@ -179,7 +191,11 @@ The following code replaces the current custom properties with a new properties

You can now see the `cluster_name` and `retention_time` properties are added to `fct_users_deleted` but the previous `encoding` property is no longer present.

-

+

+

+  +

+

+

We can also verify this operation programmatically by checking the `datasetProperties` aspect using the `datahub` cli.

diff --git a/docs/api/tutorials/datasets.md b/docs/api/tutorials/datasets.md

index 62b30e97c8020..7c6d4a88d4190 100644

--- a/docs/api/tutorials/datasets.md

+++ b/docs/api/tutorials/datasets.md

@@ -42,7 +42,11 @@ For detailed steps, please refer to [Datahub Quickstart Guide](/docs/quickstart.

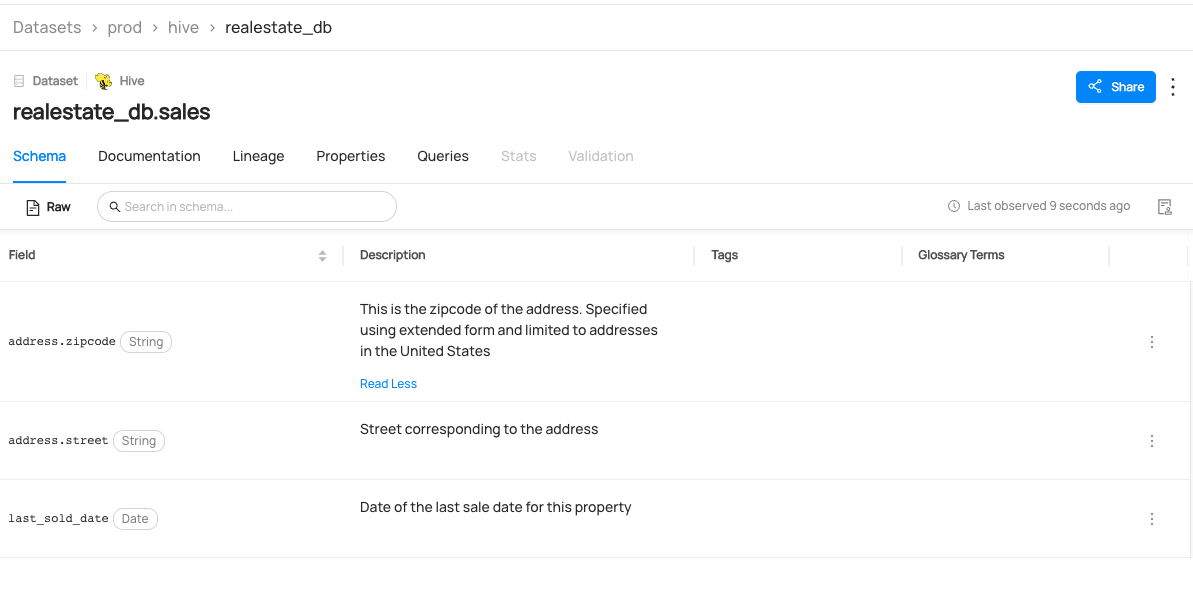

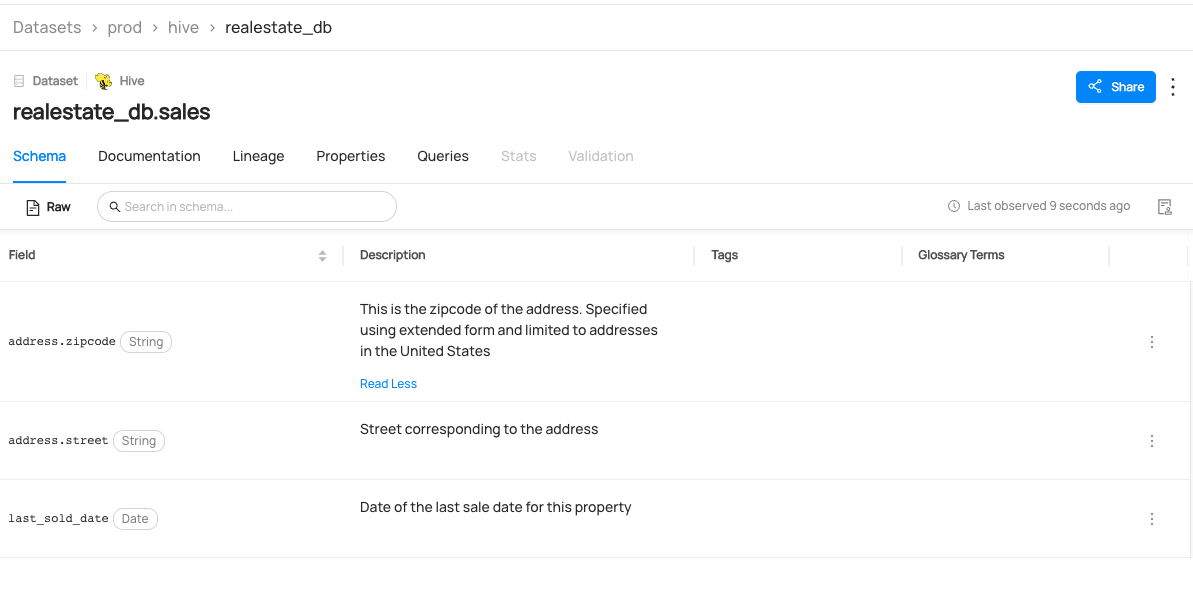

You can now see `realestate_db.sales` dataset has been created.

-

+

+

+  +

+

+

## Delete Dataset

@@ -110,4 +114,8 @@ Expected Response:

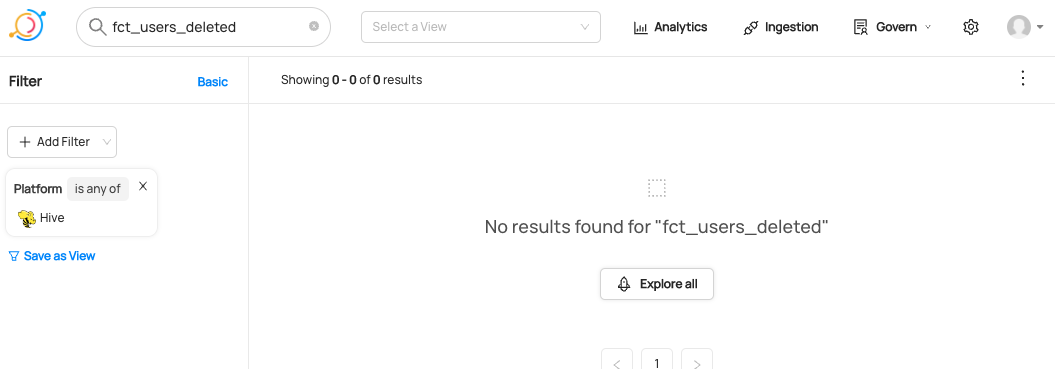

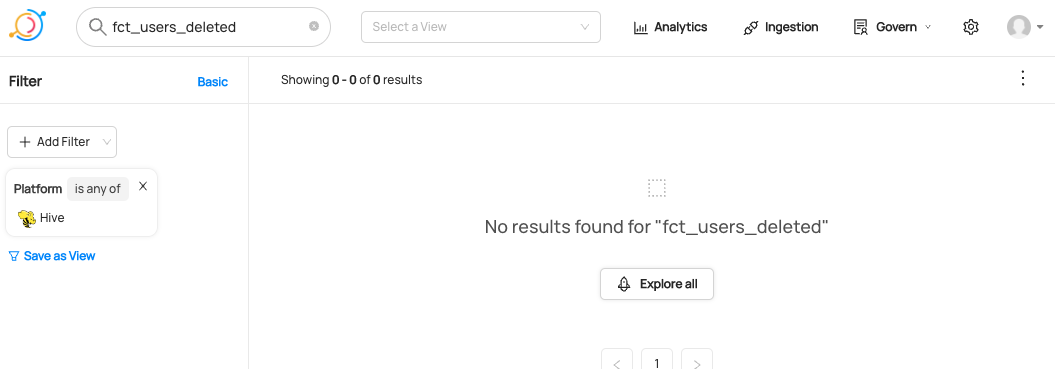

The dataset `fct_users_deleted` has now been deleted, so if you search for a hive dataset named `fct_users_deleted`, you will no longer be able to see it.

-

+

+

+  +

+

+

diff --git a/docs/api/tutorials/deprecation.md b/docs/api/tutorials/deprecation.md

index 6a8f7c8a1d2be..73e73f5224cbc 100644

--- a/docs/api/tutorials/deprecation.md

+++ b/docs/api/tutorials/deprecation.md

@@ -155,4 +155,8 @@ Expected Response:

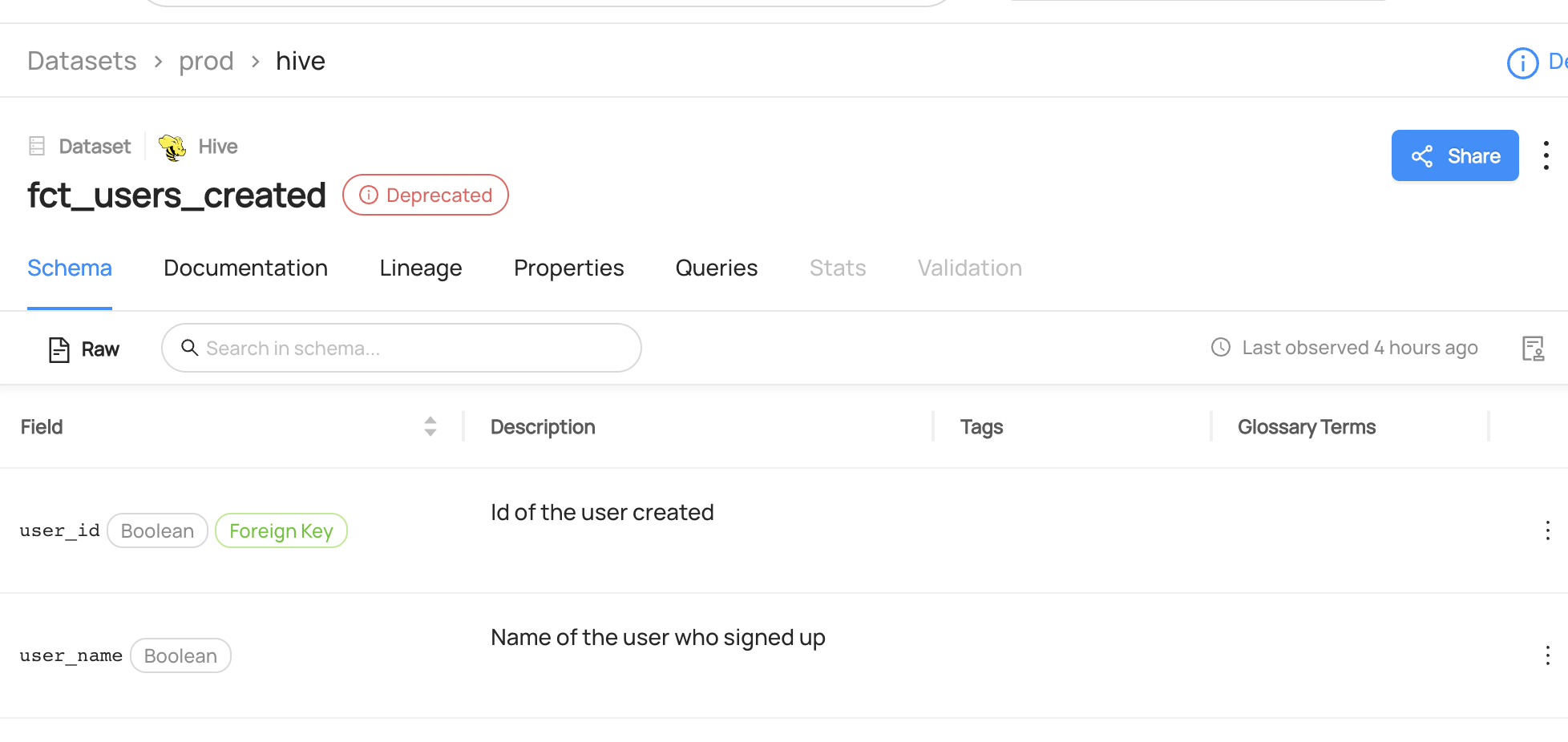

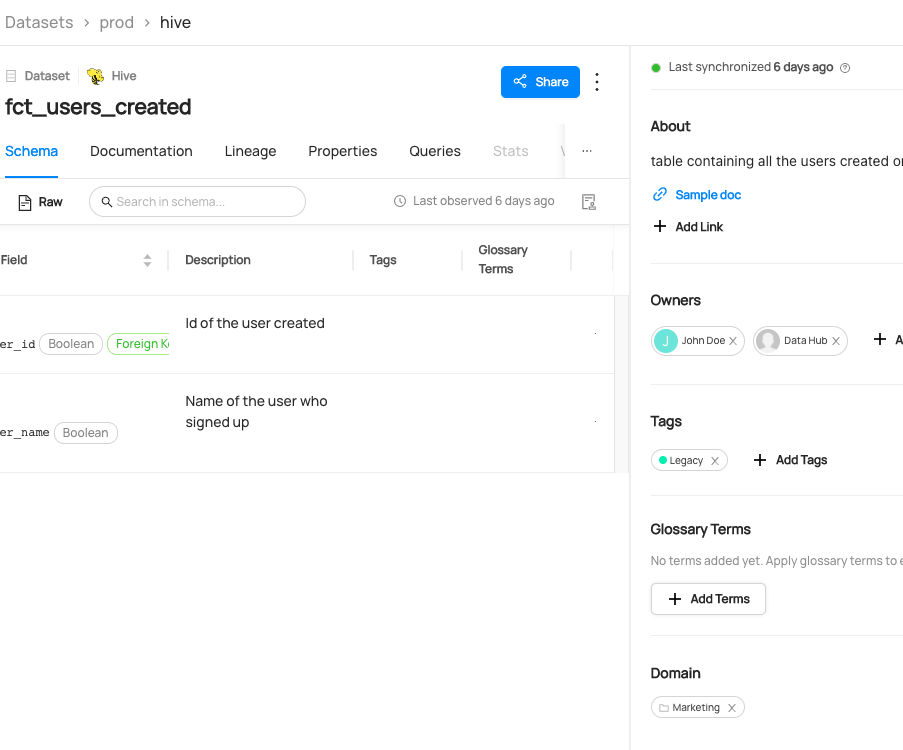

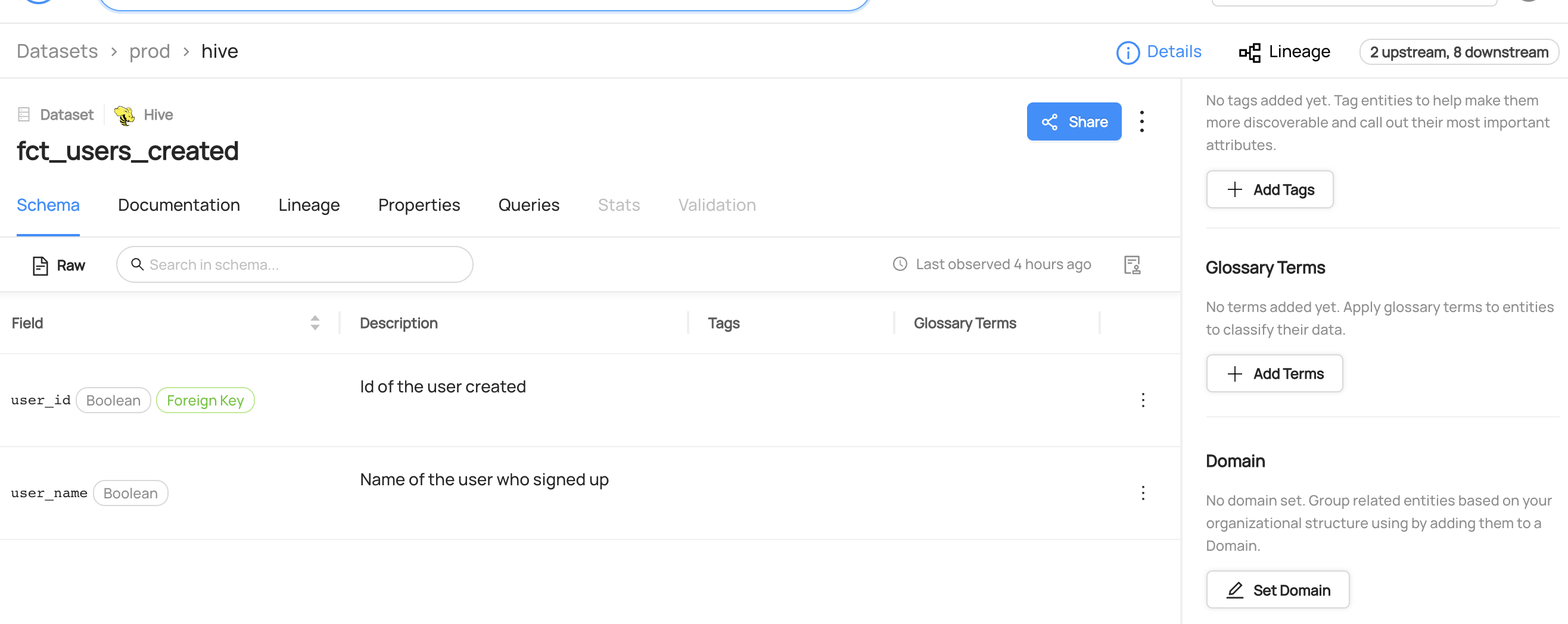

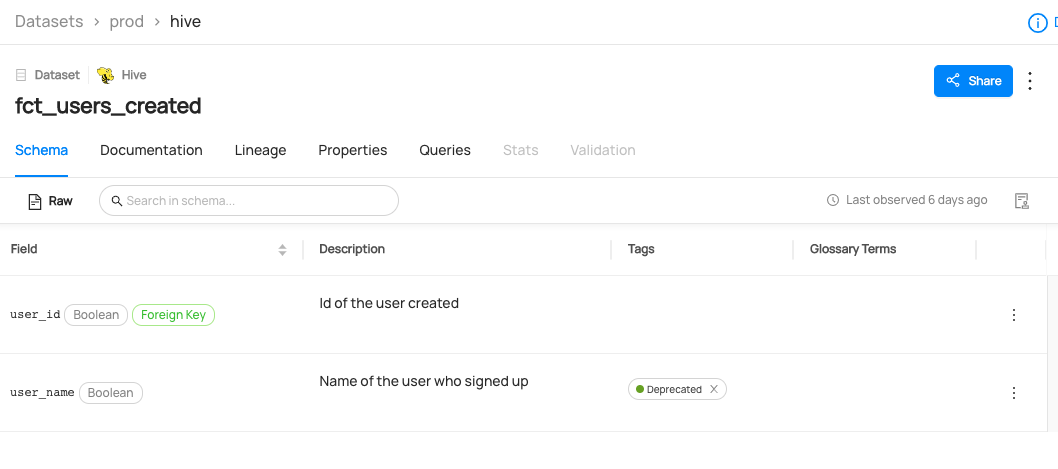

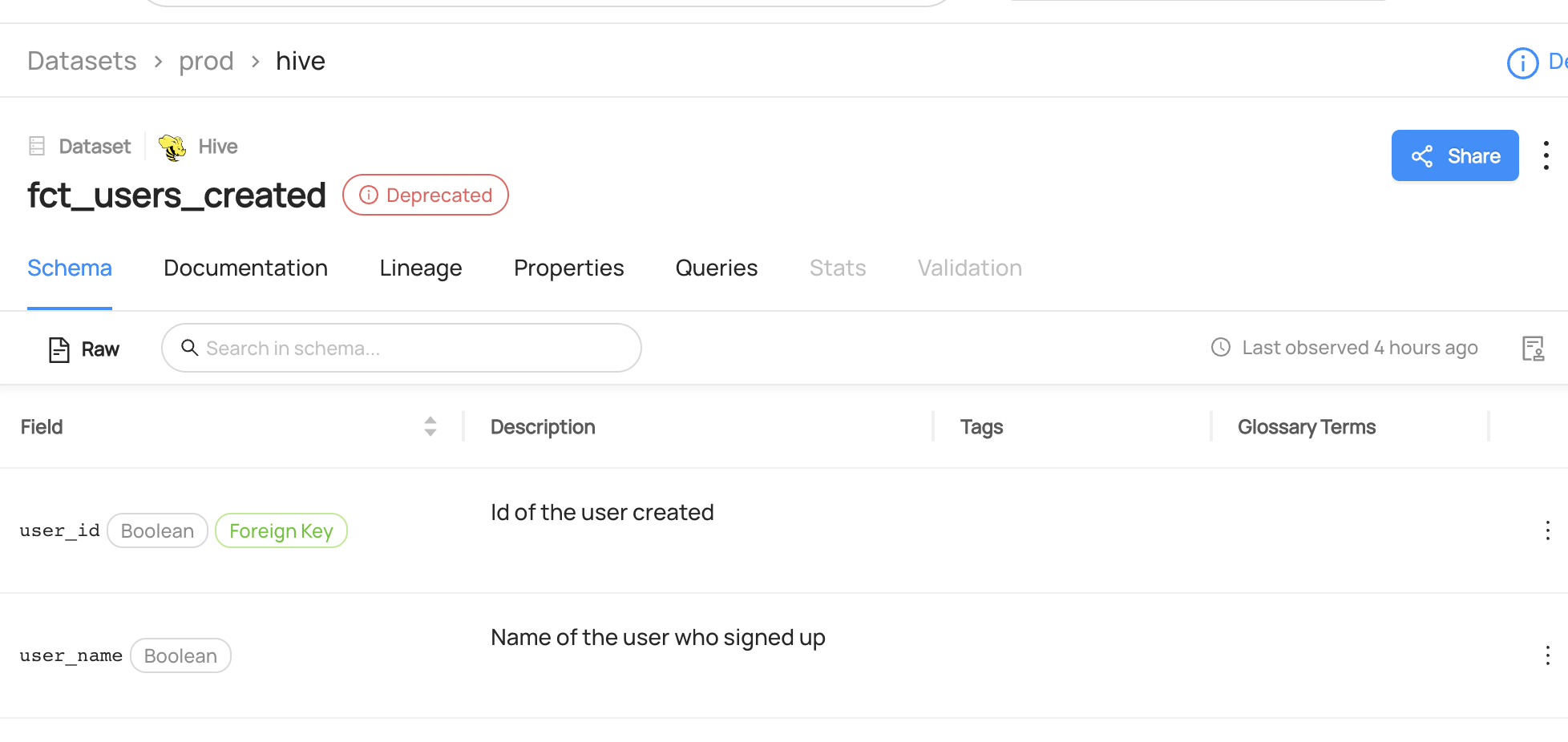

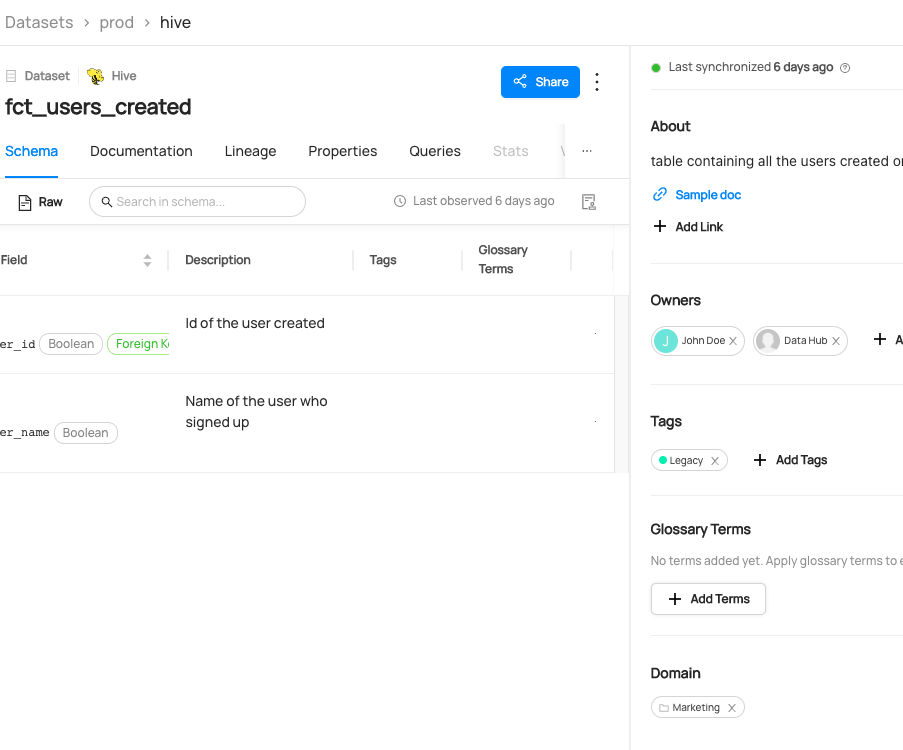

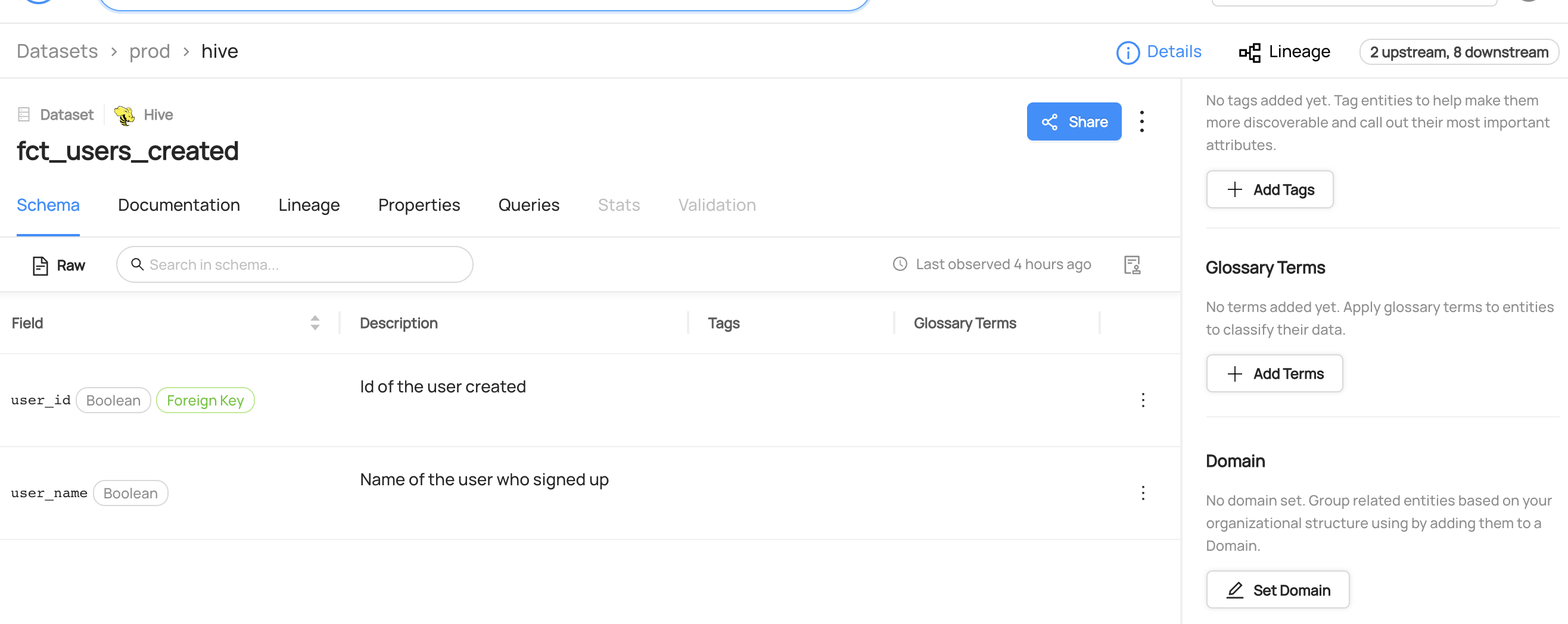

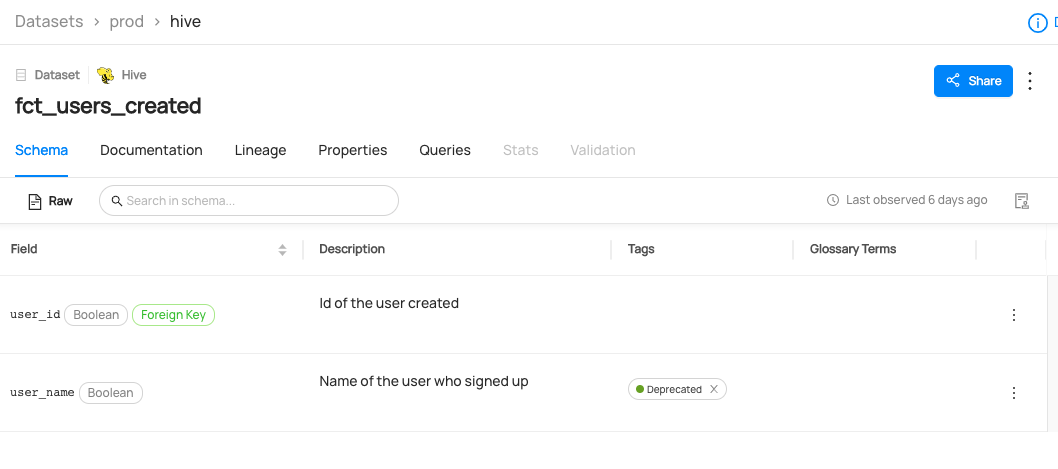

You can now see the dataset `fct_users_created` has been marked as `Deprecated`.

-

+

+

+  +

+

+

diff --git a/docs/api/tutorials/descriptions.md b/docs/api/tutorials/descriptions.md

index 46f42b7a05be6..27c57309ba76a 100644

--- a/docs/api/tutorials/descriptions.md

+++ b/docs/api/tutorials/descriptions.md

@@ -275,7 +275,11 @@ Expected Response:

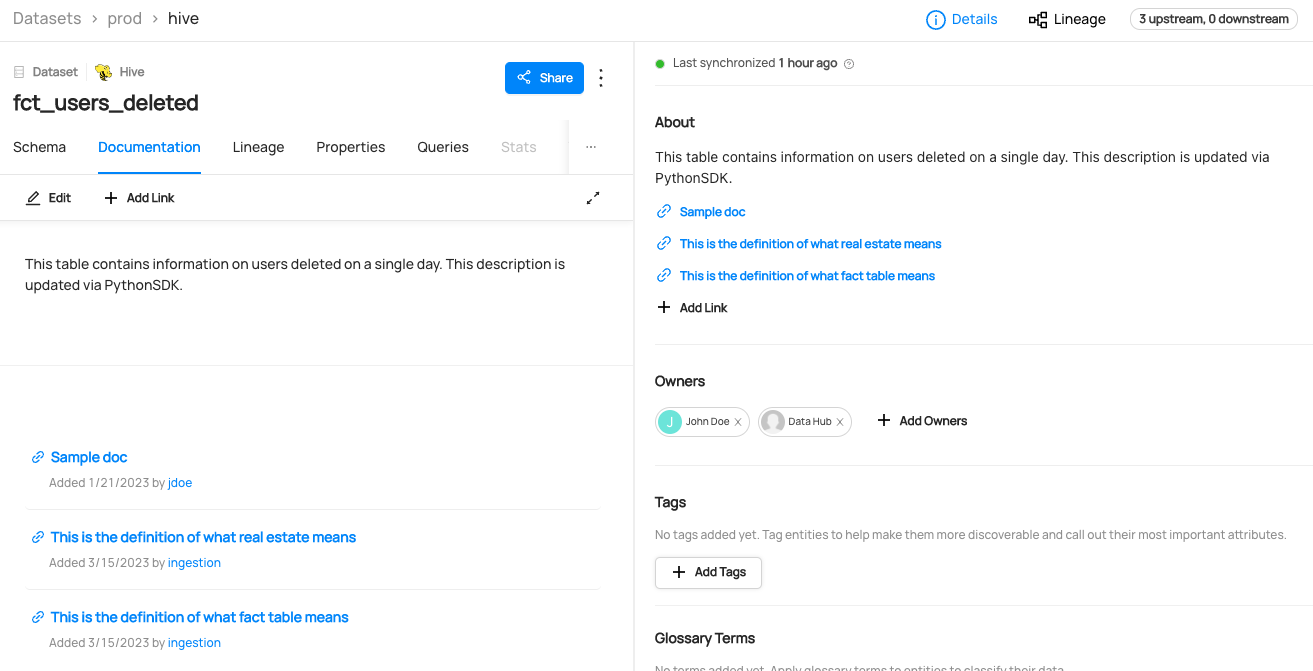

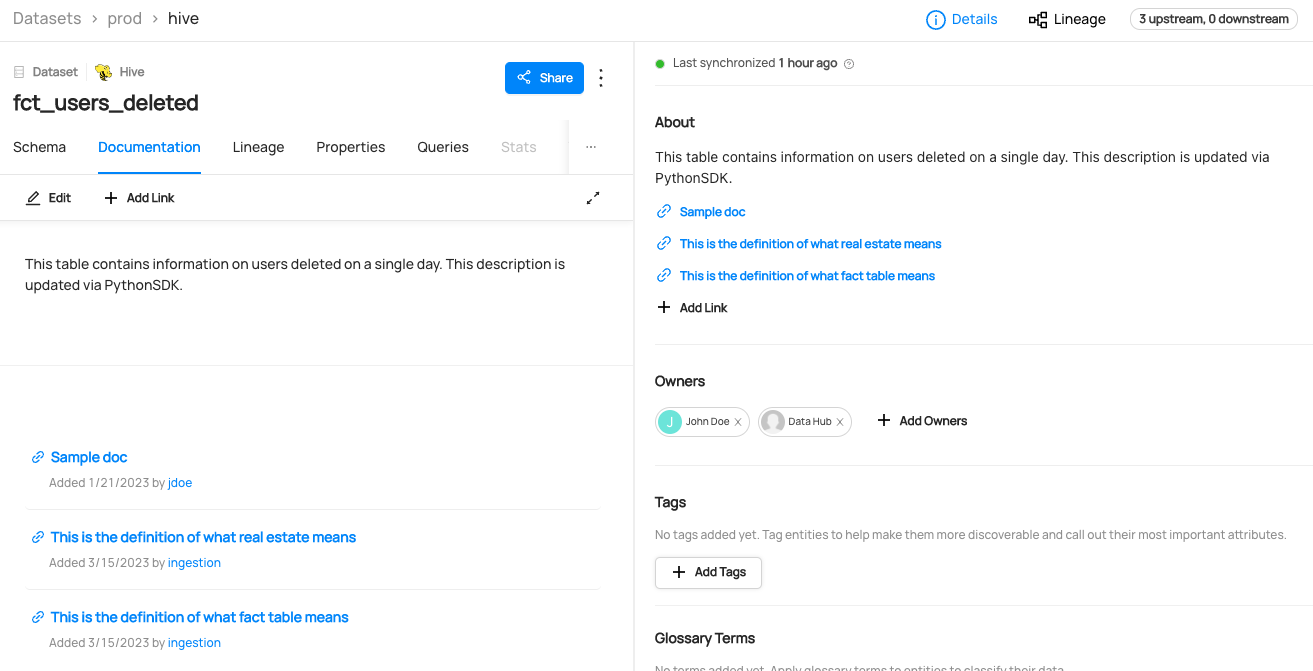

You can now see the description is added to `fct_users_deleted`.

-

+

+

+  +

+

+

## Add Description on Column

@@ -357,4 +361,8 @@ Expected Response:

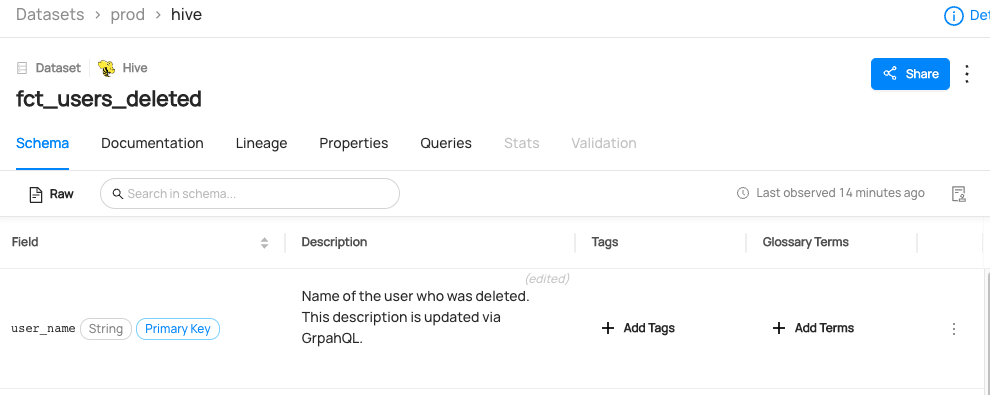

You can now see column description is added to `user_name` column of `fct_users_deleted`.

-

+

+

+  +

+

+

diff --git a/docs/api/tutorials/domains.md b/docs/api/tutorials/domains.md

index c8c47f85c570f..617864d233b7a 100644

--- a/docs/api/tutorials/domains.md

+++ b/docs/api/tutorials/domains.md

@@ -74,7 +74,11 @@ Expected Response:

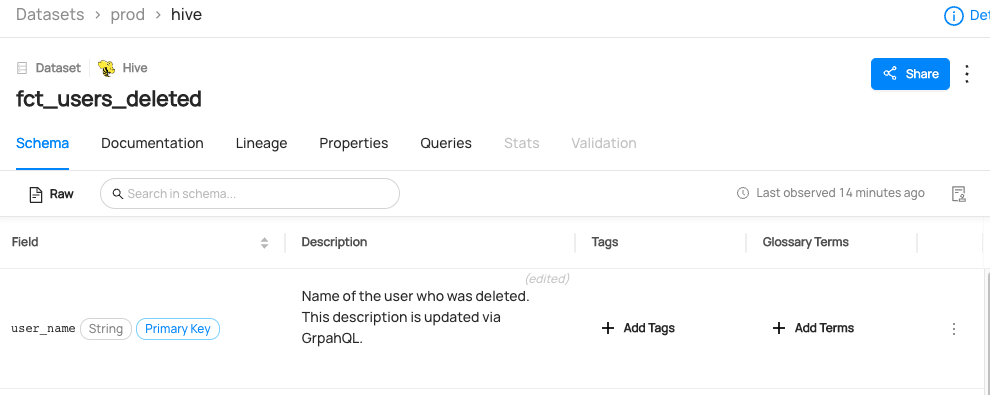

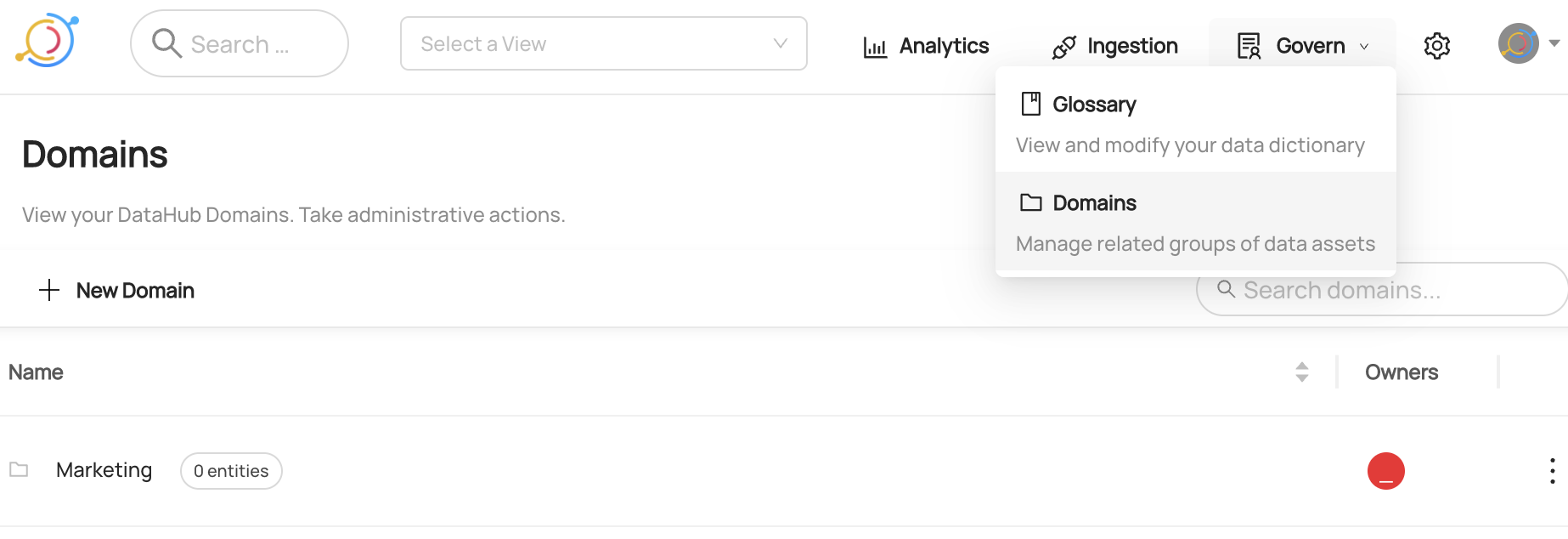

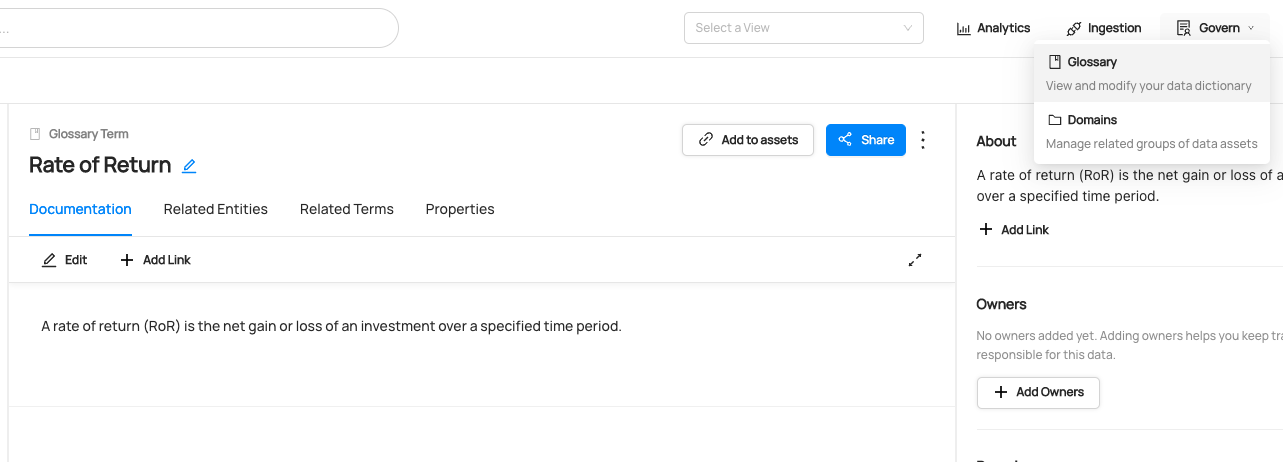

You can now see `Marketing` domain has been created under `Govern > Domains`.

-

+

+

+  +

+

+

## Read Domains

@@ -209,7 +213,11 @@ Expected Response:

You can now see `Marketing` domain has been added to the dataset.

-

+

+

+  +

+

+

## Remove Domains

@@ -259,4 +267,8 @@ curl --location --request POST 'http://localhost:8080/api/graphql' \

You can now see a domain `Marketing` has been removed from the `fct_users_created` dataset.

-

+

+

+  +

+

+

diff --git a/docs/api/tutorials/lineage.md b/docs/api/tutorials/lineage.md

index e37986af7bbbd..ce23a4d274e8e 100644

--- a/docs/api/tutorials/lineage.md

+++ b/docs/api/tutorials/lineage.md

@@ -112,7 +112,11 @@ Expected Response:

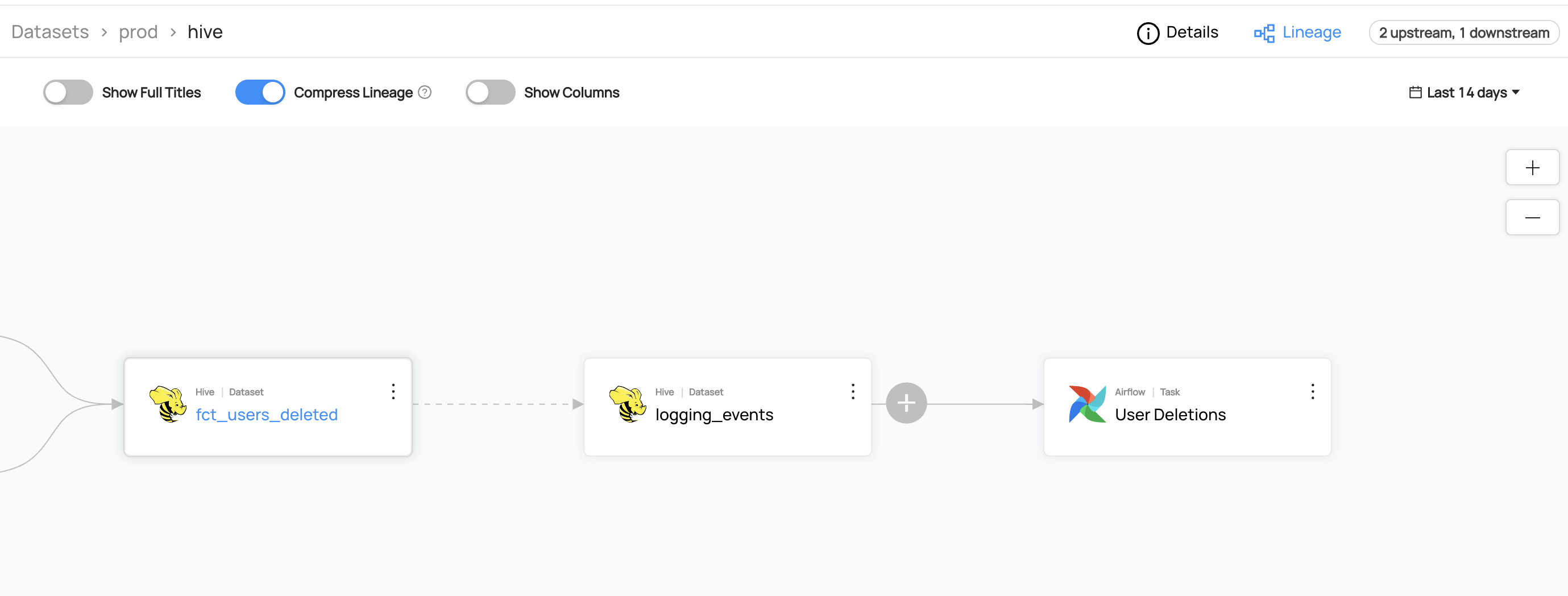

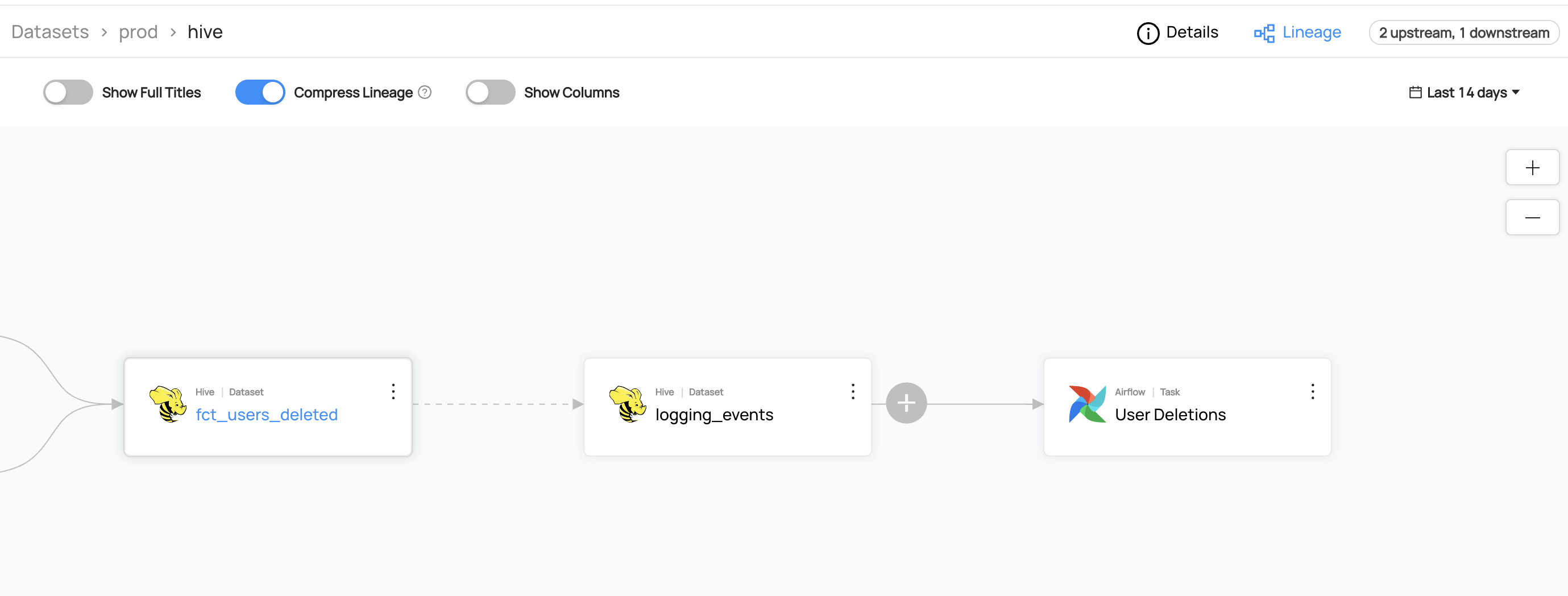

You can now see the lineage between `fct_users_deleted` and `logging_events`.

-

+

+

+  +

+

+

## Add Column-level Lineage

@@ -130,7 +134,11 @@ You can now see the lineage between `fct_users_deleted` and `logging_events`.

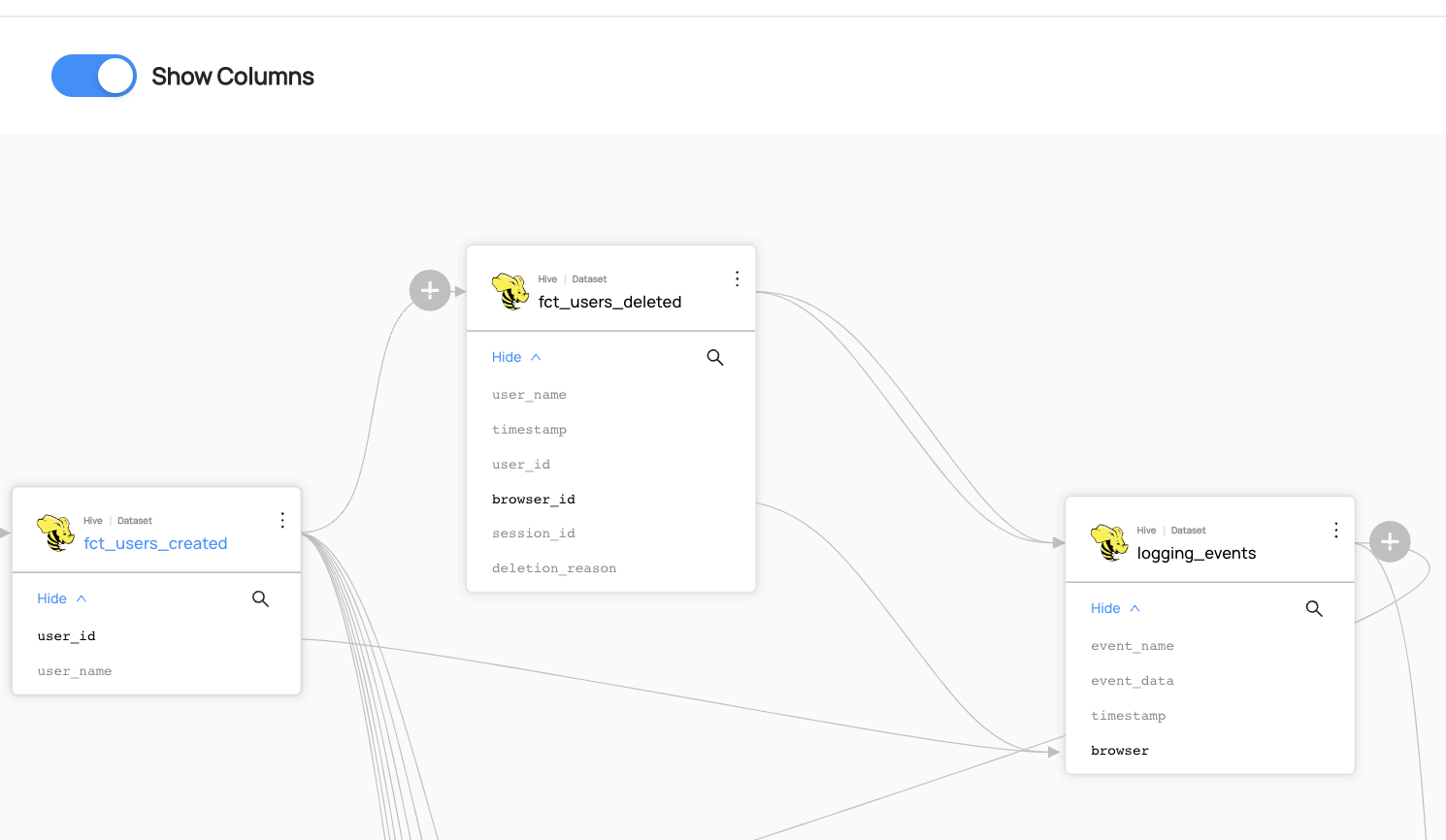

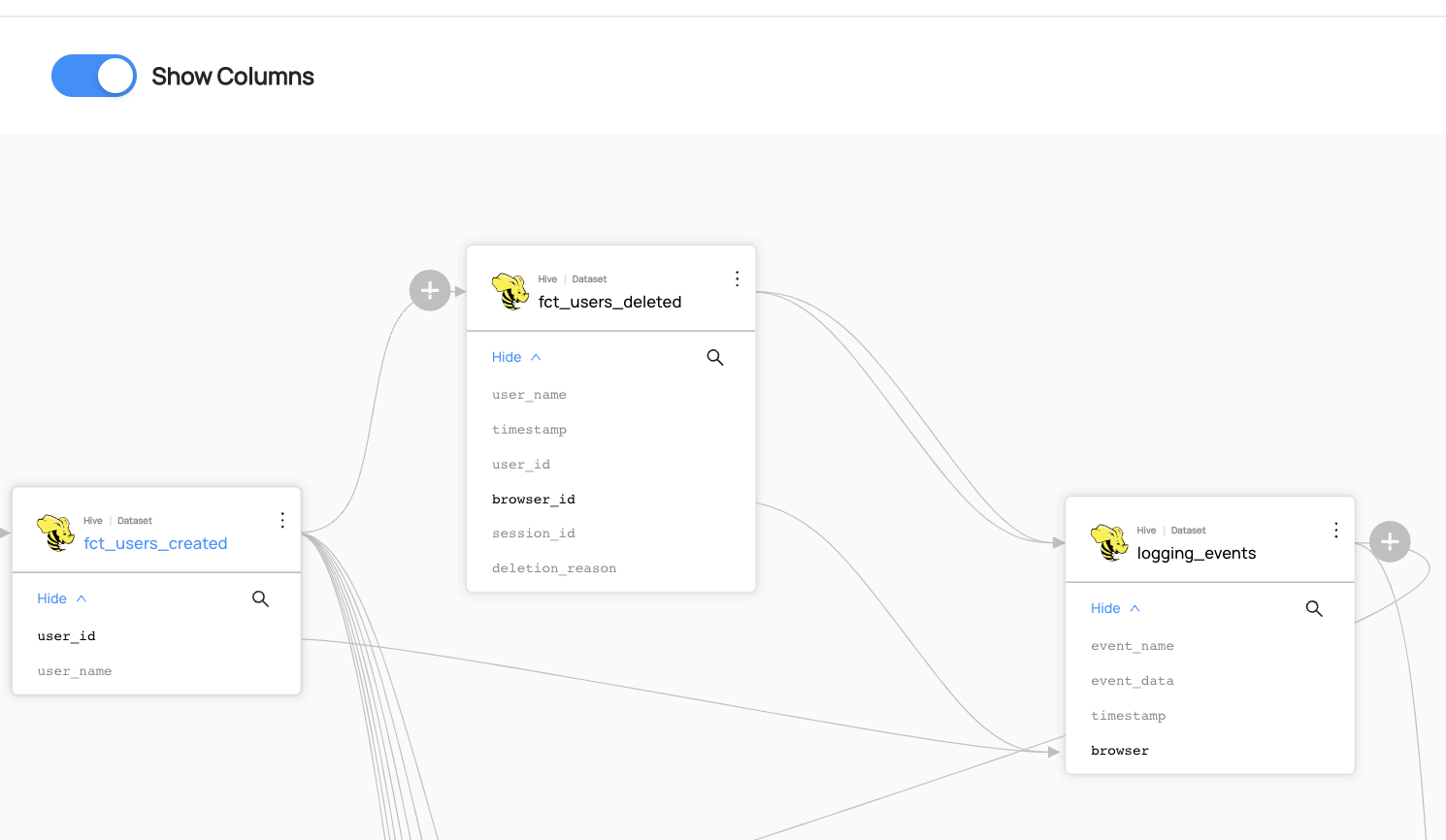

You can now see the column-level lineage between datasets. Note that you have to enable `Show Columns` to be able to see the column-level lineage.

-

+

+

+  +

+

+

## Read Lineage

diff --git a/docs/api/tutorials/ml.md b/docs/api/tutorials/ml.md

index b16f2669b30c7..cb77556d48ebf 100644

--- a/docs/api/tutorials/ml.md

+++ b/docs/api/tutorials/ml.md

@@ -94,9 +94,17 @@ Please note that an MlModelGroup serves as a container for all the runs of a sin

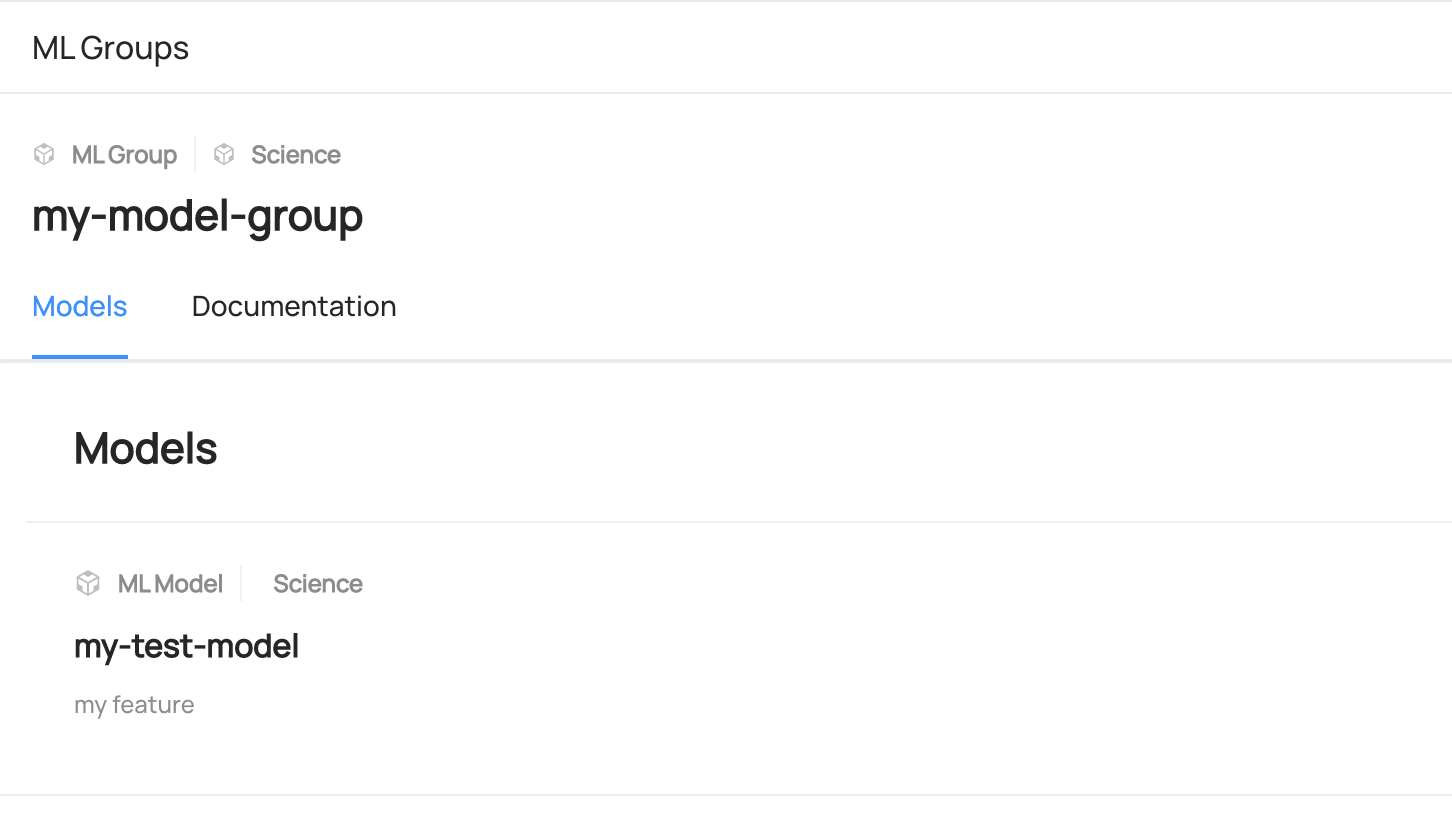

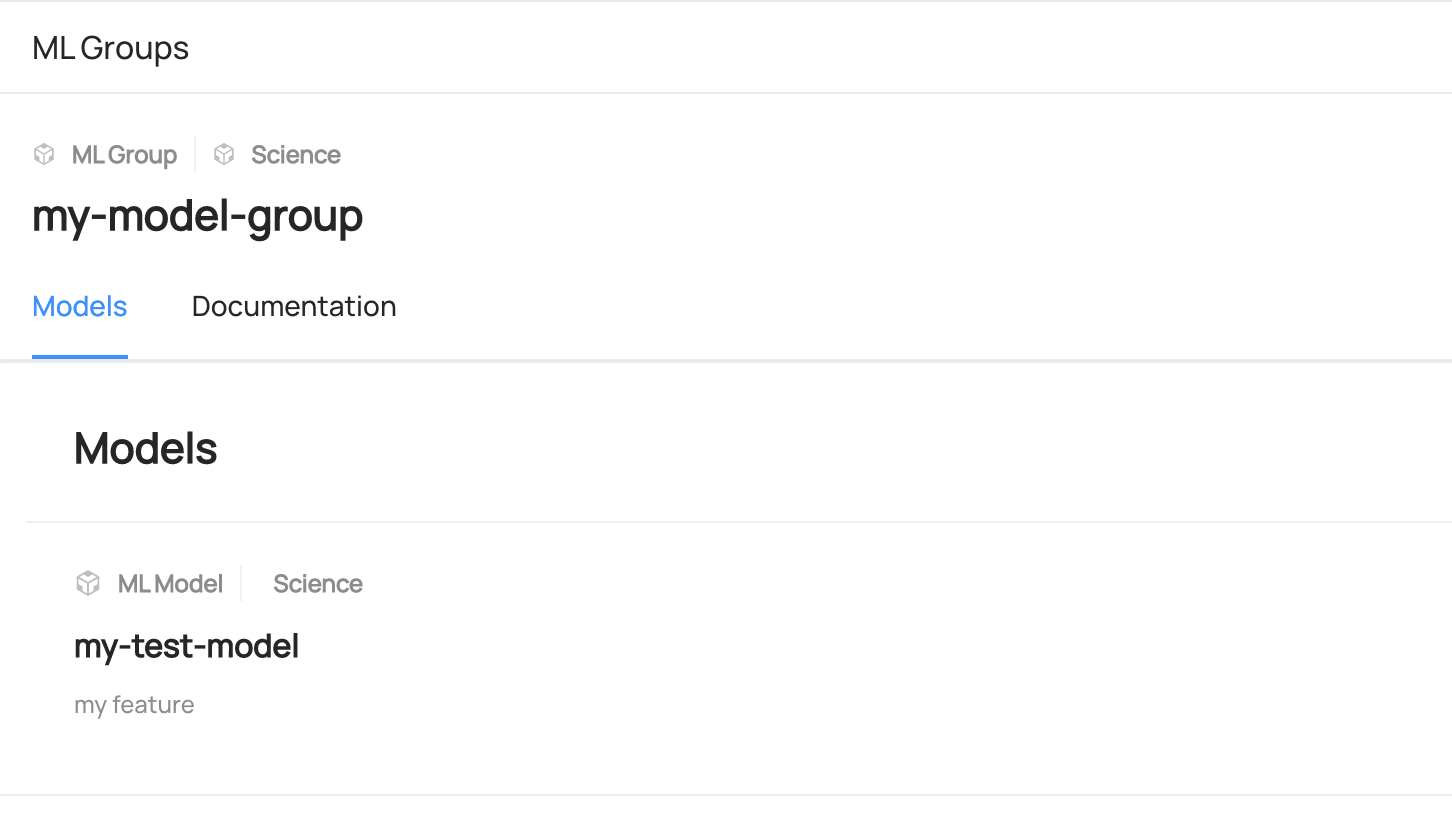

You can search the entities in DataHub UI.

-

-

+

+  +

+

+

+

+

+

+  +

+

+

## Read ML Entities

@@ -499,6 +507,14 @@ Expected Response: (Note that this entity does not exist in the sample ingestion

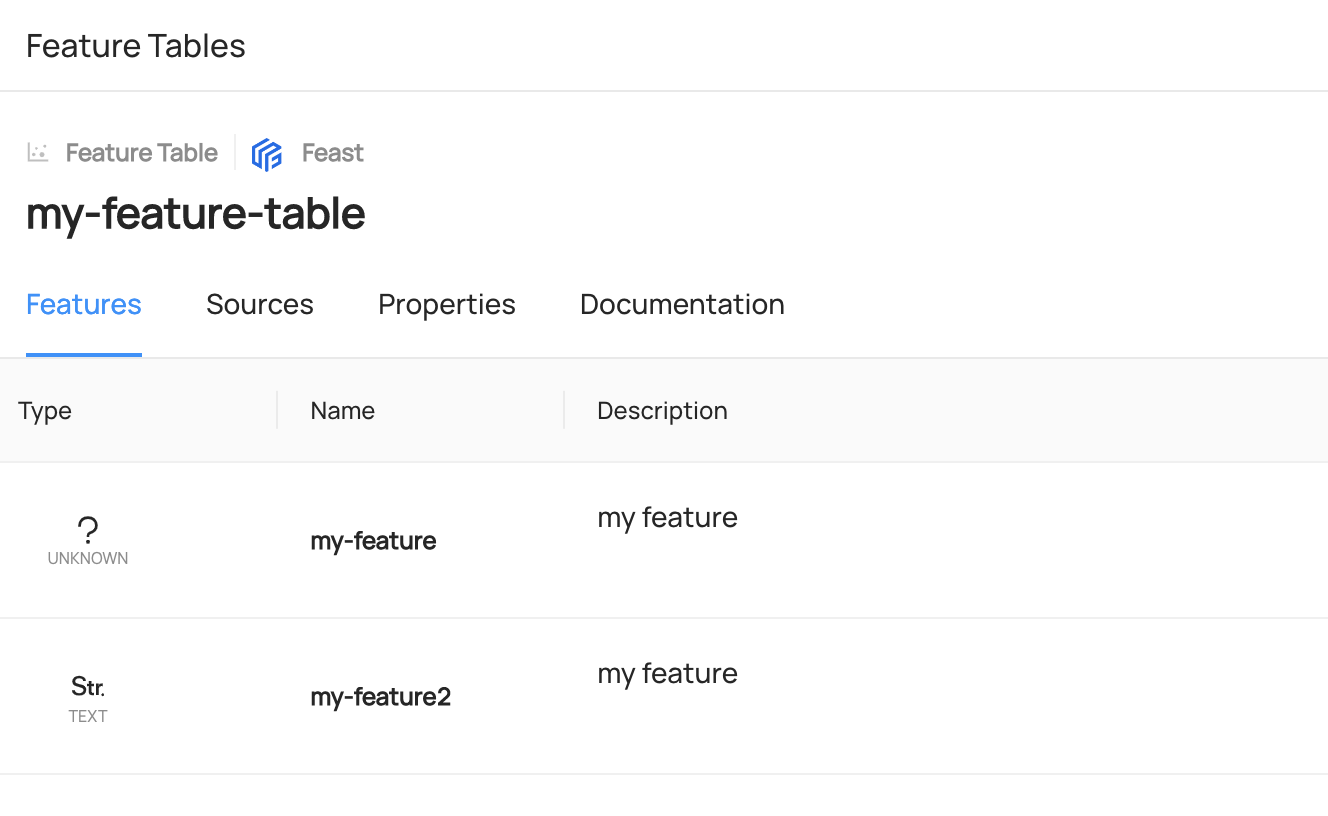

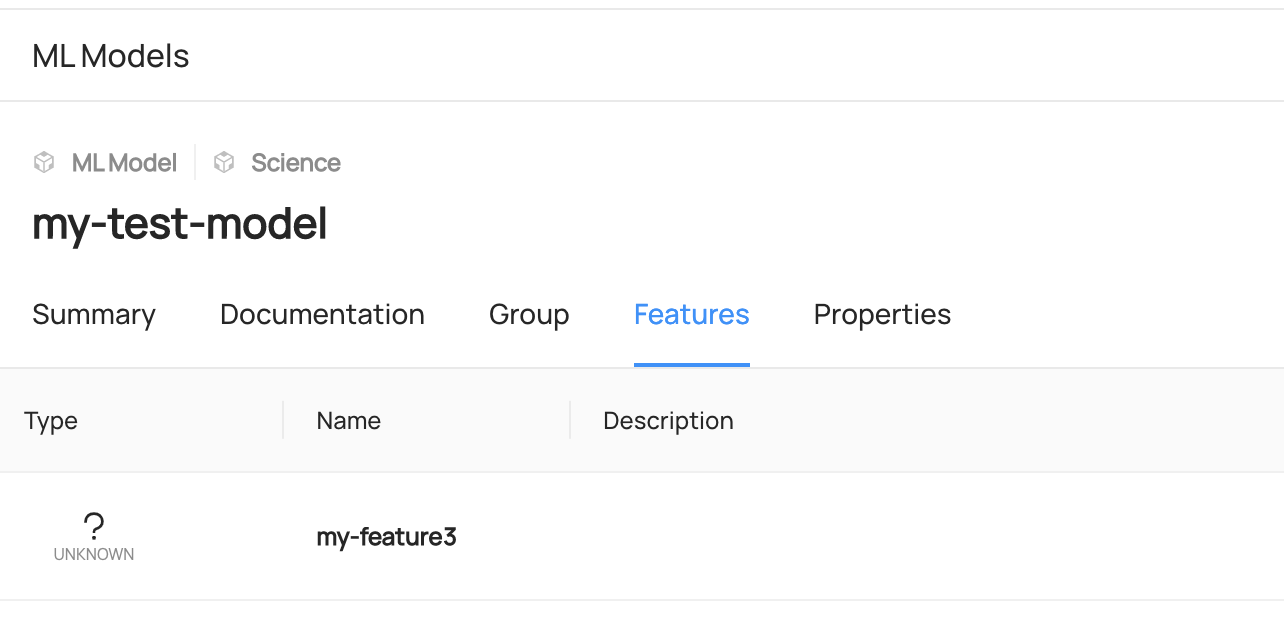

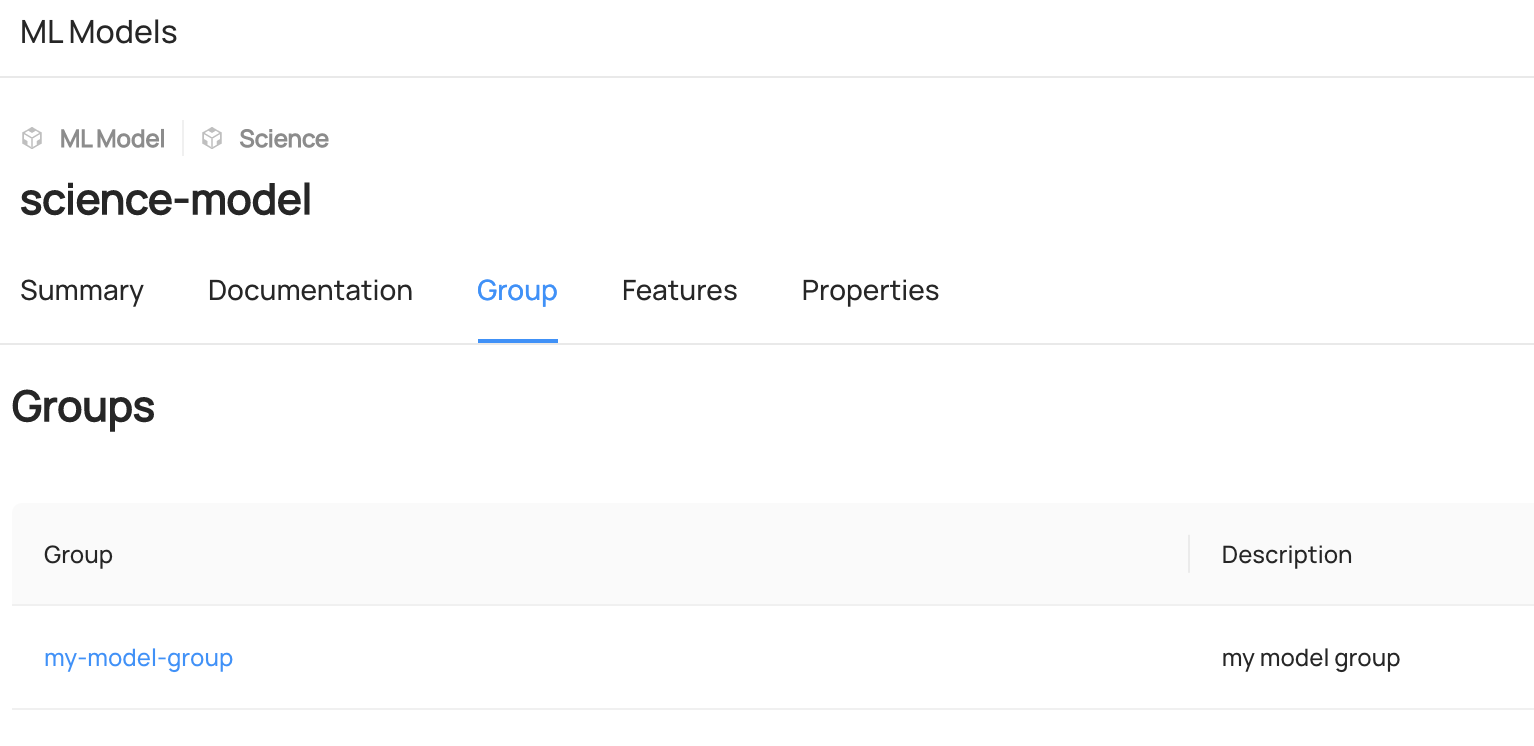

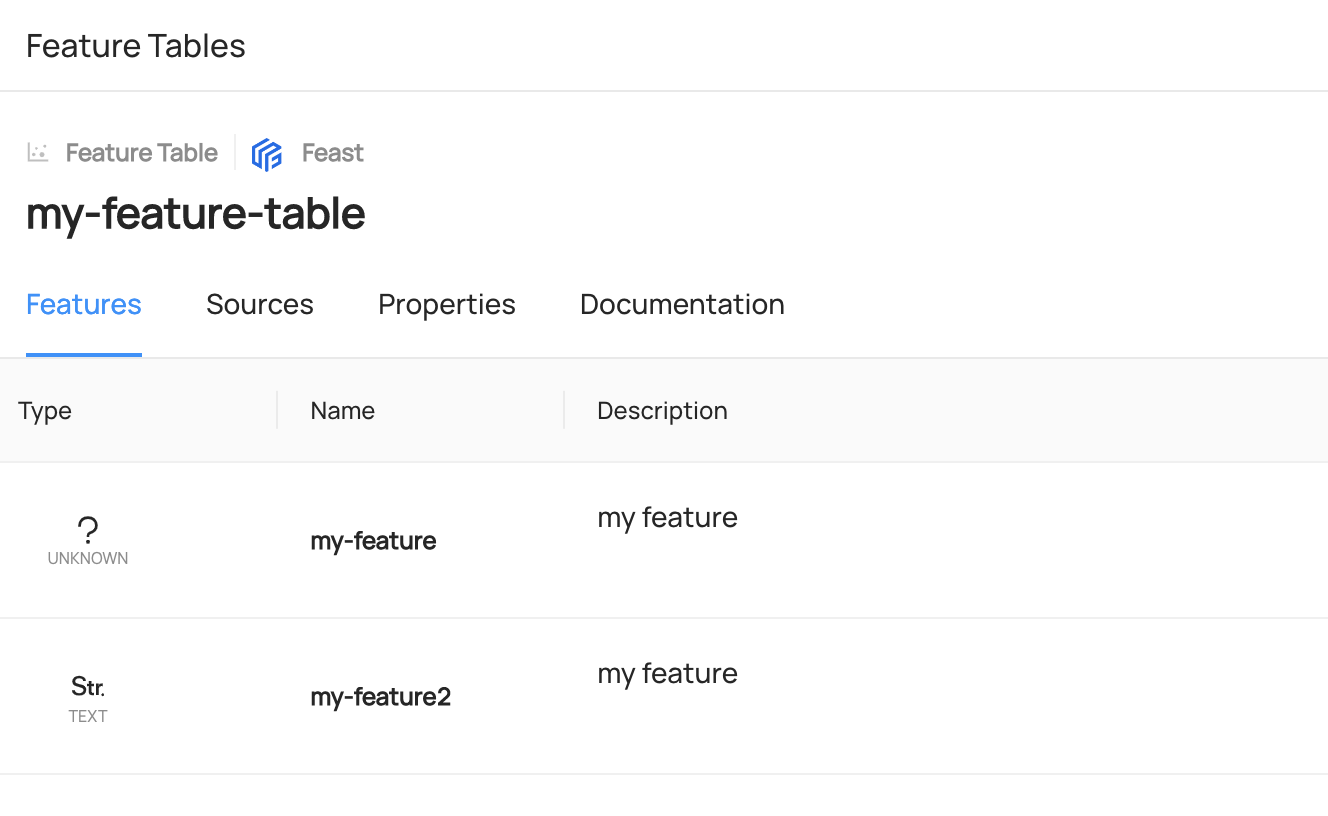

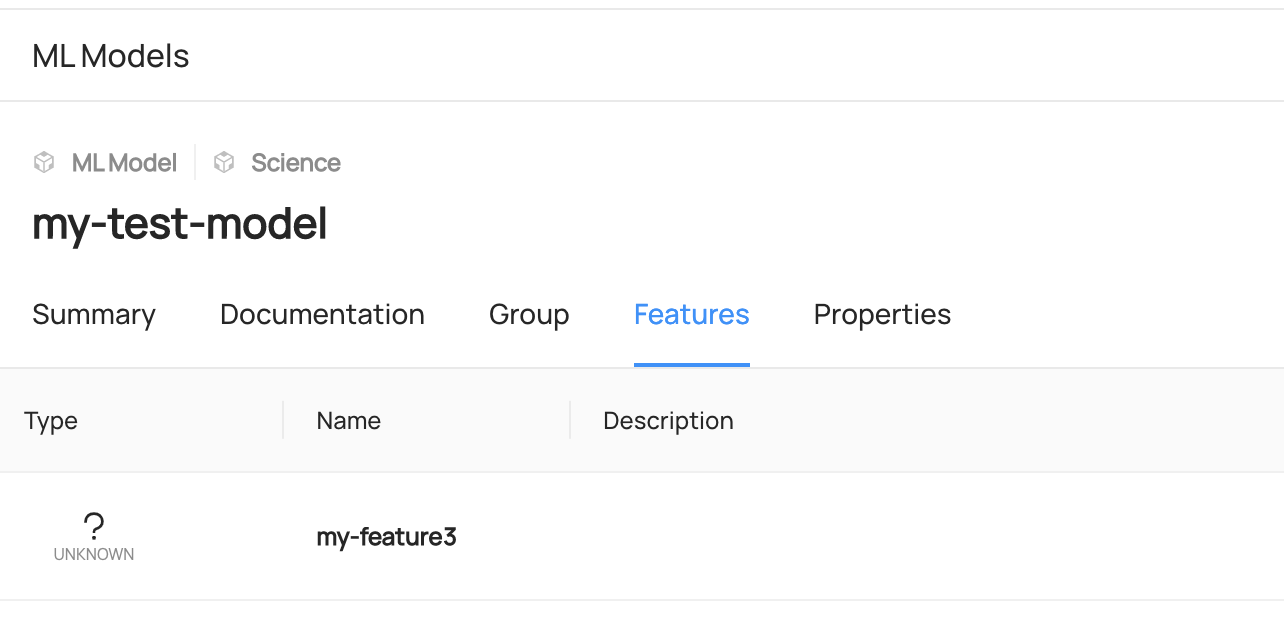

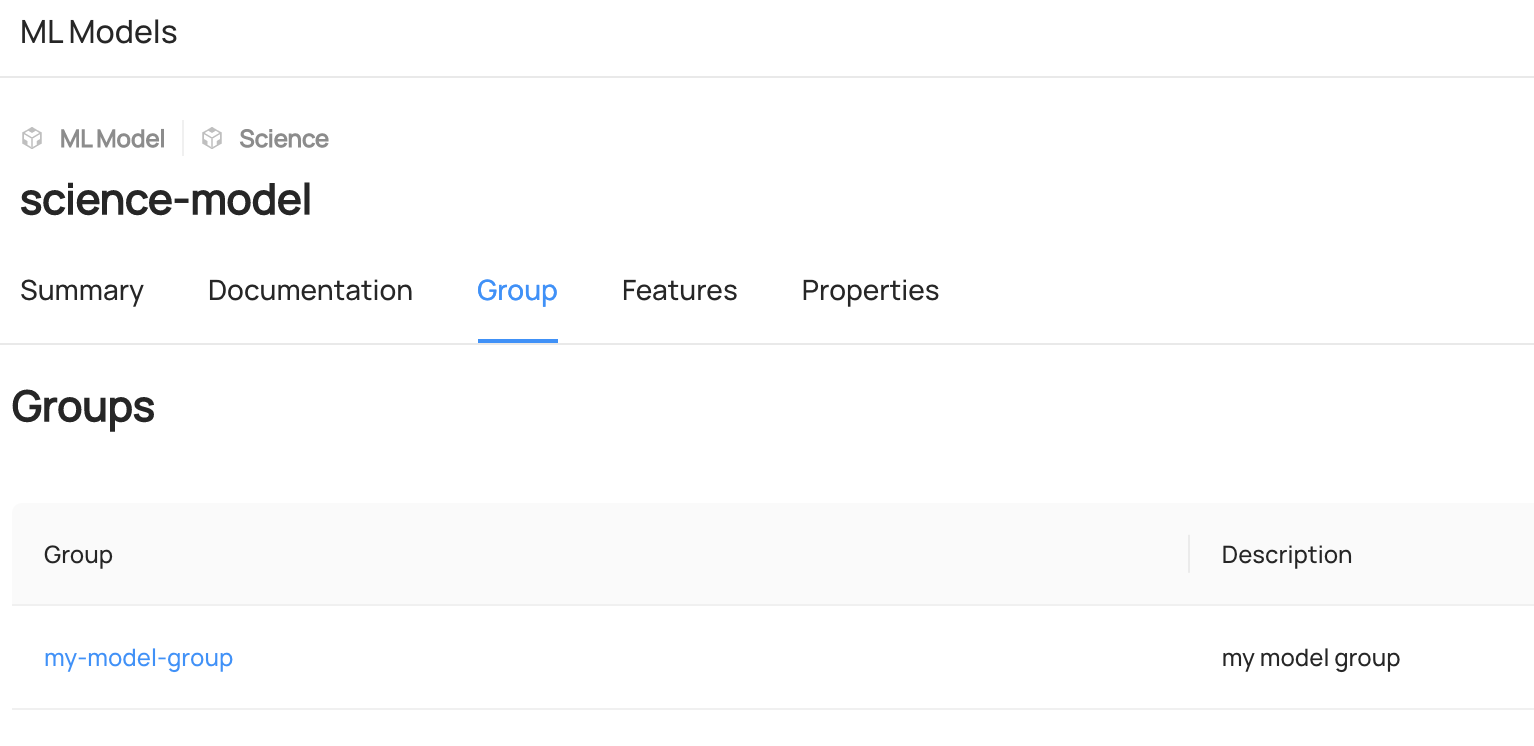

You can open the `Features` or `Group` tab of each entity to view the added entities.

-

-

+

+  +

+

+

+

+

+

+  +

+

+

diff --git a/docs/api/tutorials/owners.md b/docs/api/tutorials/owners.md

index 3c7a46b136d76..5bc3b95cb5631 100644

--- a/docs/api/tutorials/owners.md

+++ b/docs/api/tutorials/owners.md

@@ -77,7 +77,11 @@ Update succeeded for urn urn:li:corpuser:datahub.

### Expected Outcomes of Upserting User

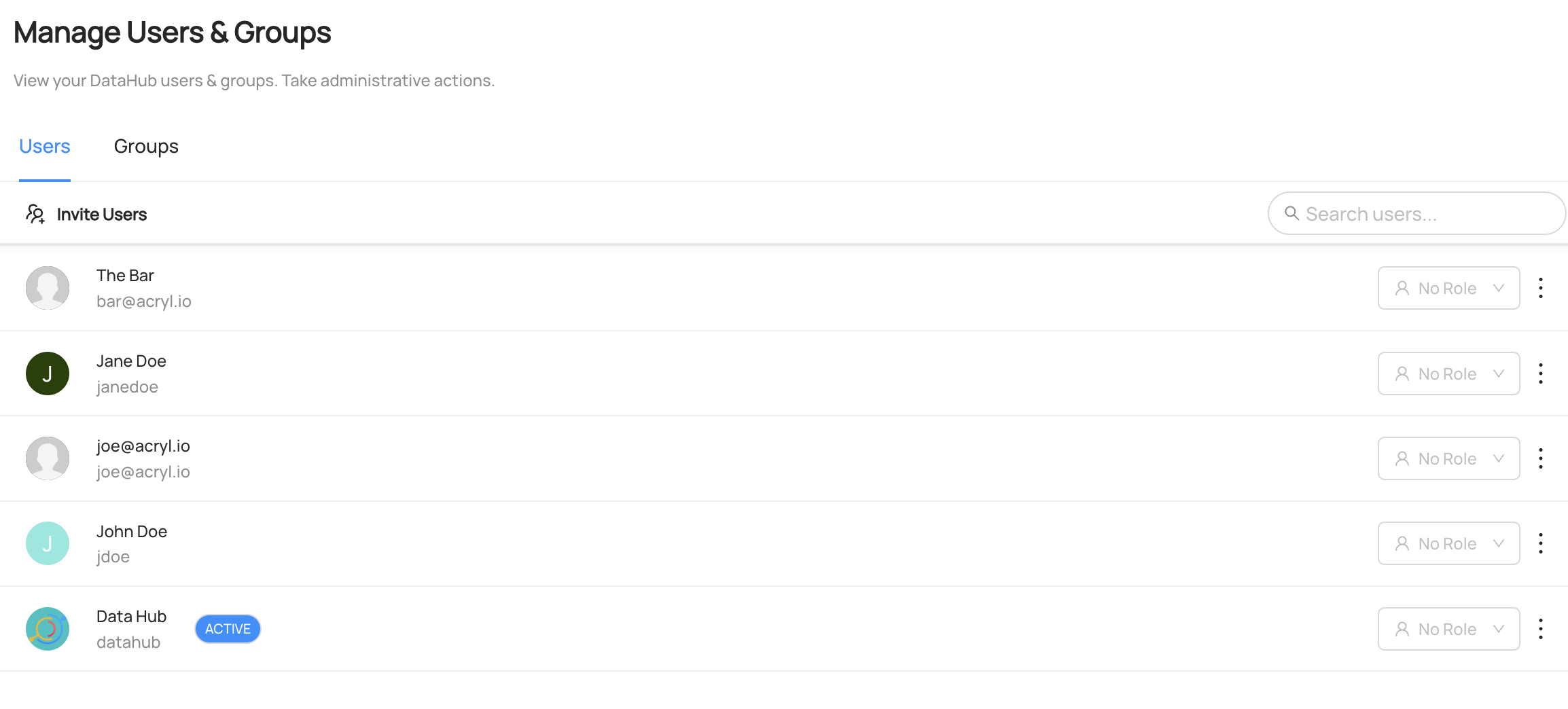

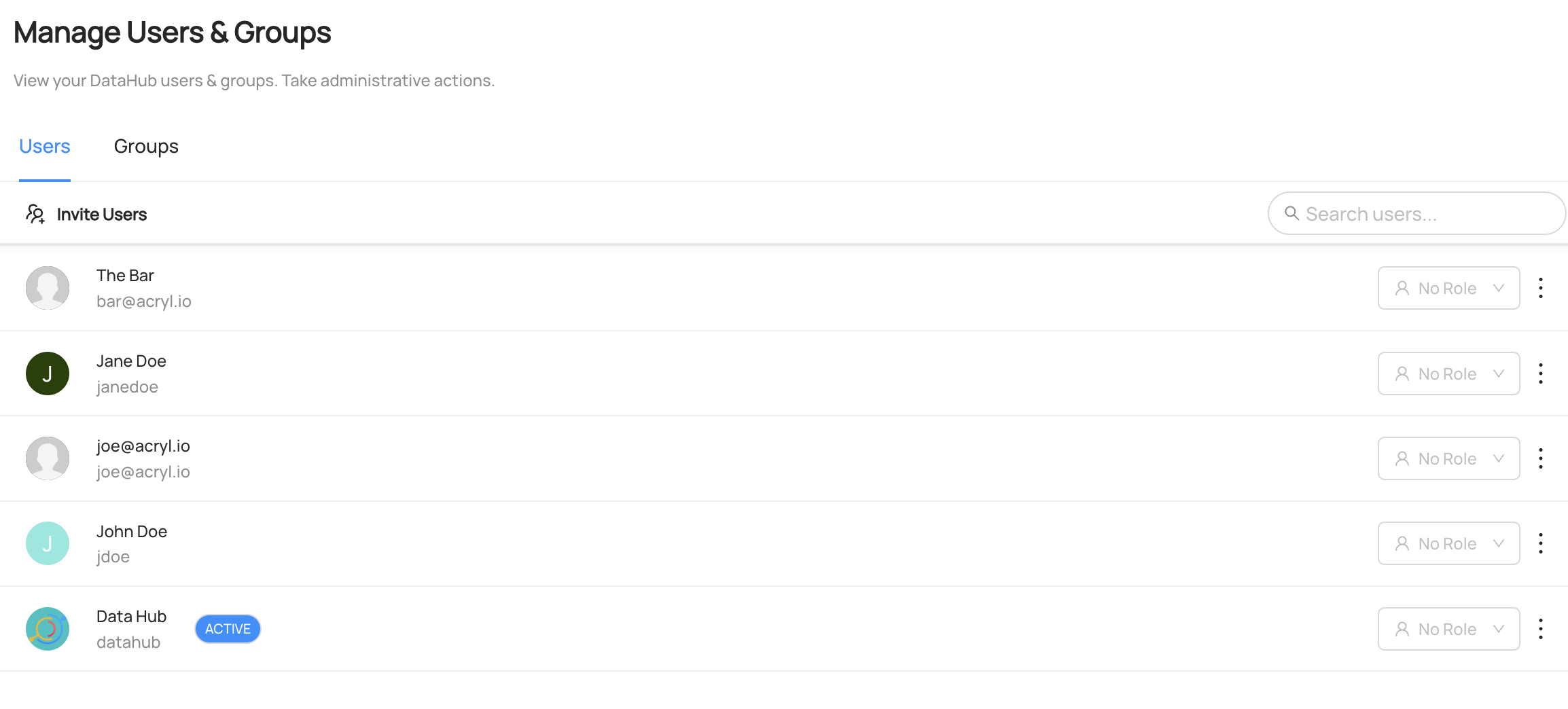

You can see the user `The bar` has been created and the user `Datahub` has been updated under `Settings > Access > Users & Groups`

-

+

+

+  +

+

+

## Upsert Group

@@ -125,7 +129,11 @@ Update succeeded for group urn:li:corpGroup:foogroup@acryl.io.

### Expected Outcomes of Upserting Group

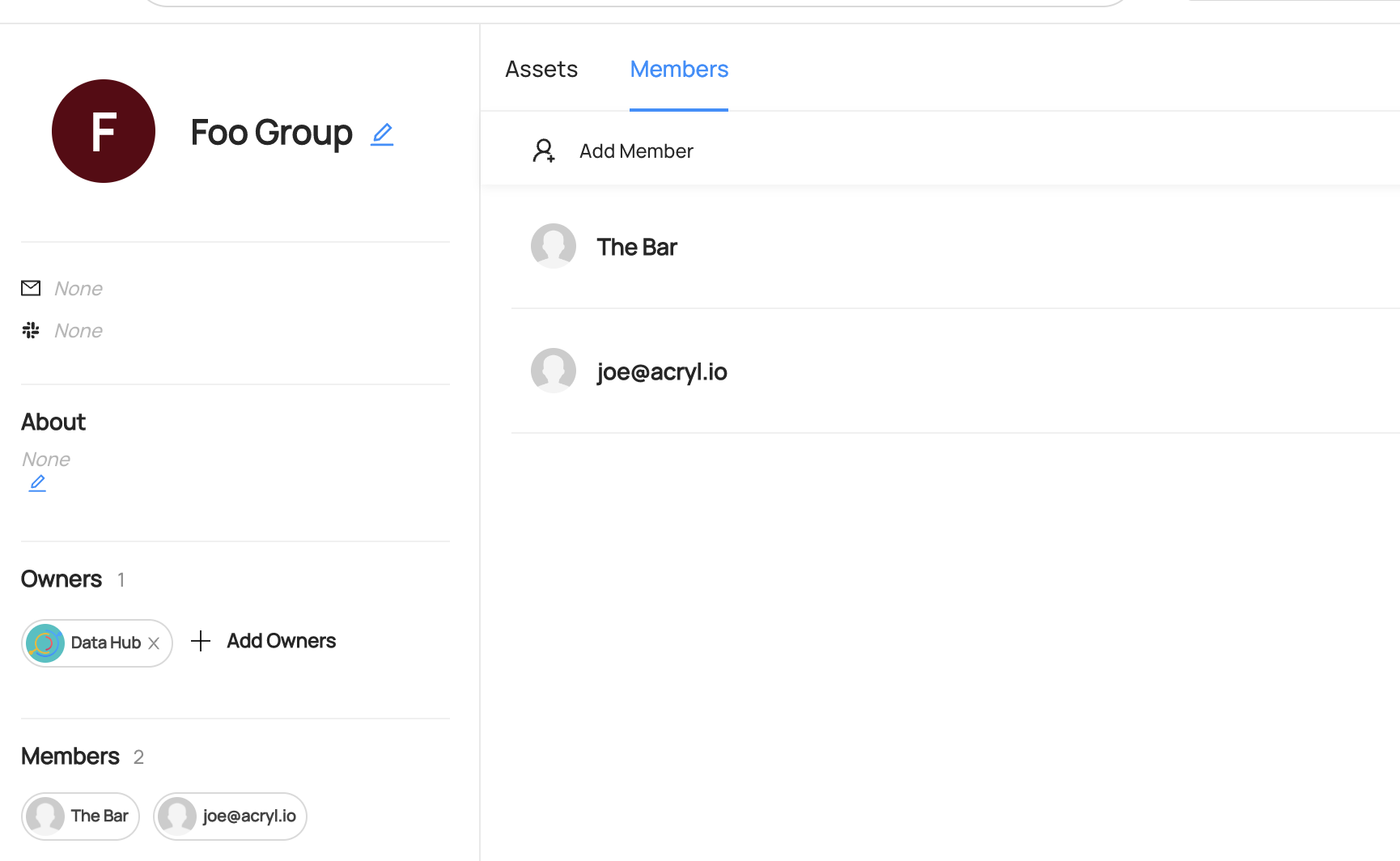

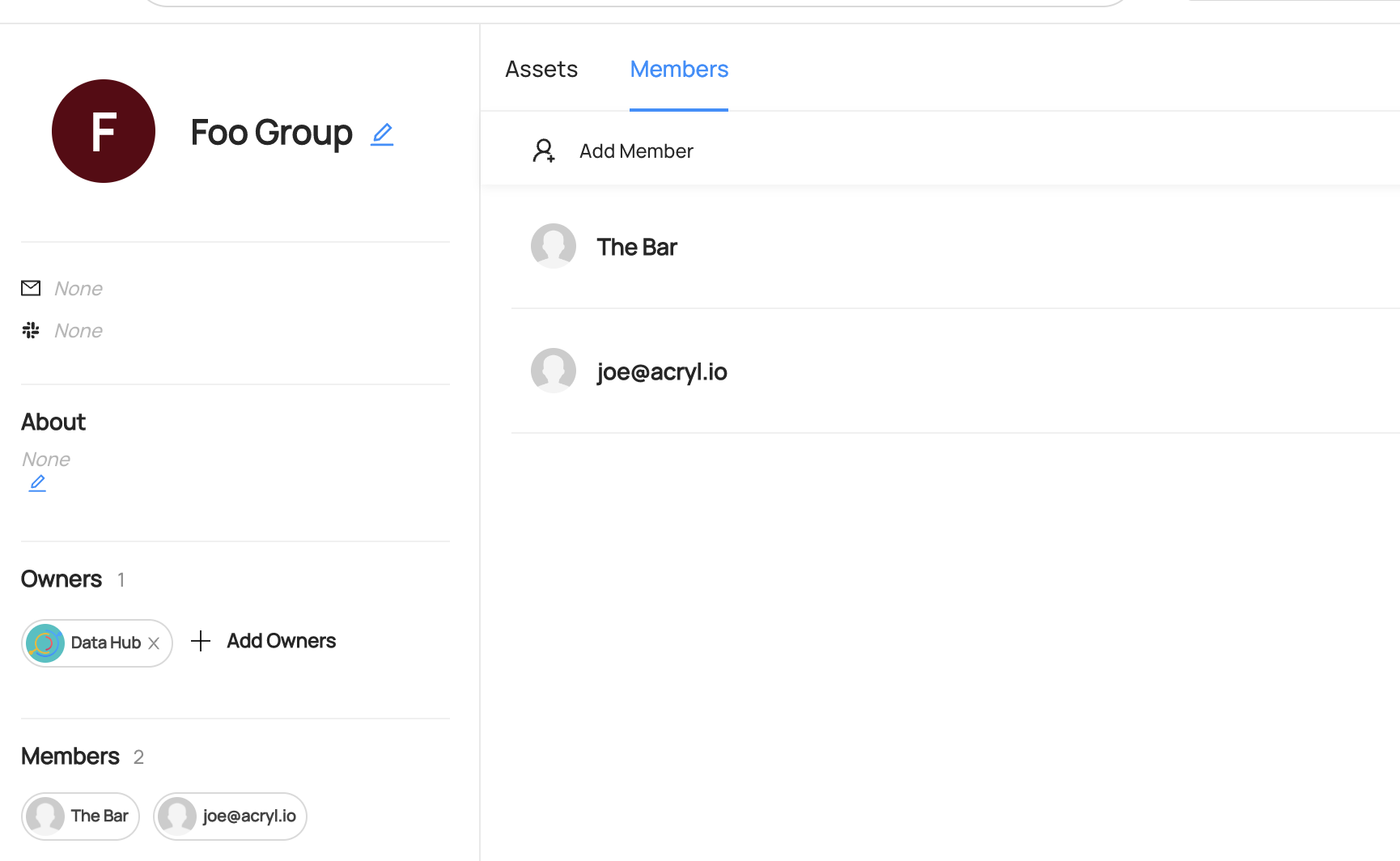

You can see the group `Foo Group` has been created under `Settings > Access > Users & Groups`

-

+

+

+  +

+

+

## Read Owners

@@ -272,7 +280,11 @@ curl --location --request POST 'http://localhost:8080/api/graphql' \

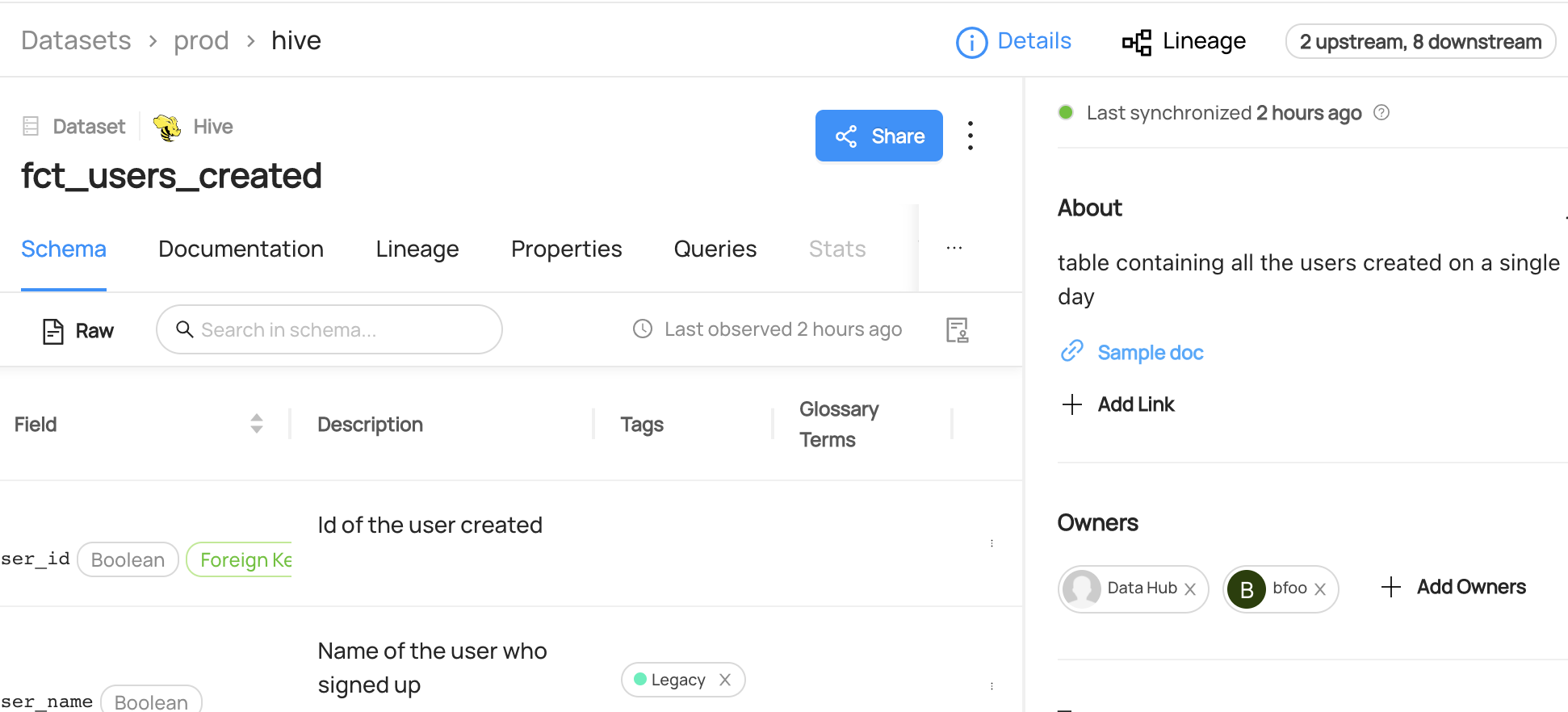

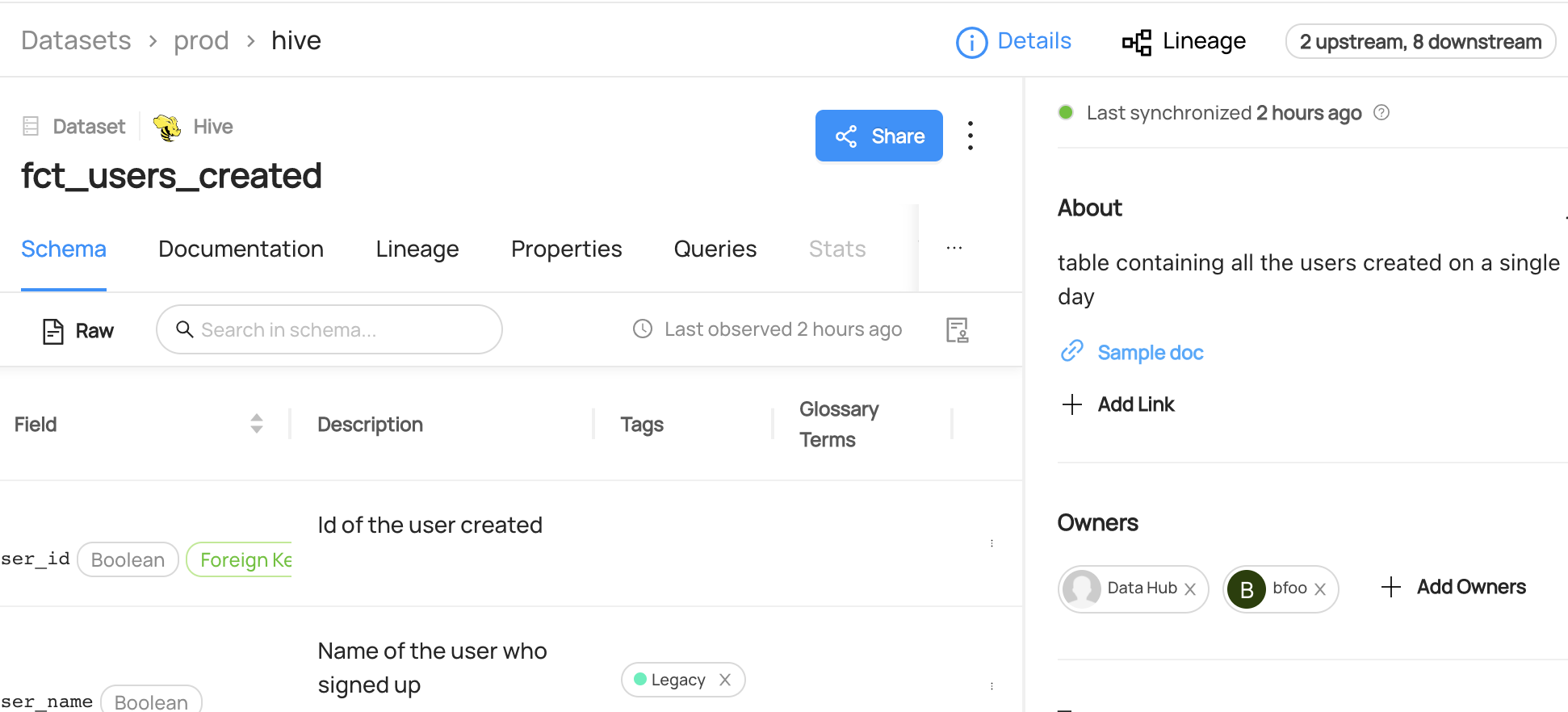

You can now see `bfoo` has been added as an owner to the `fct_users_created` dataset.

-

+

+

+  +

+

+

## Remove Owners

@@ -340,4 +352,8 @@ curl --location --request POST 'http://localhost:8080/api/graphql' \

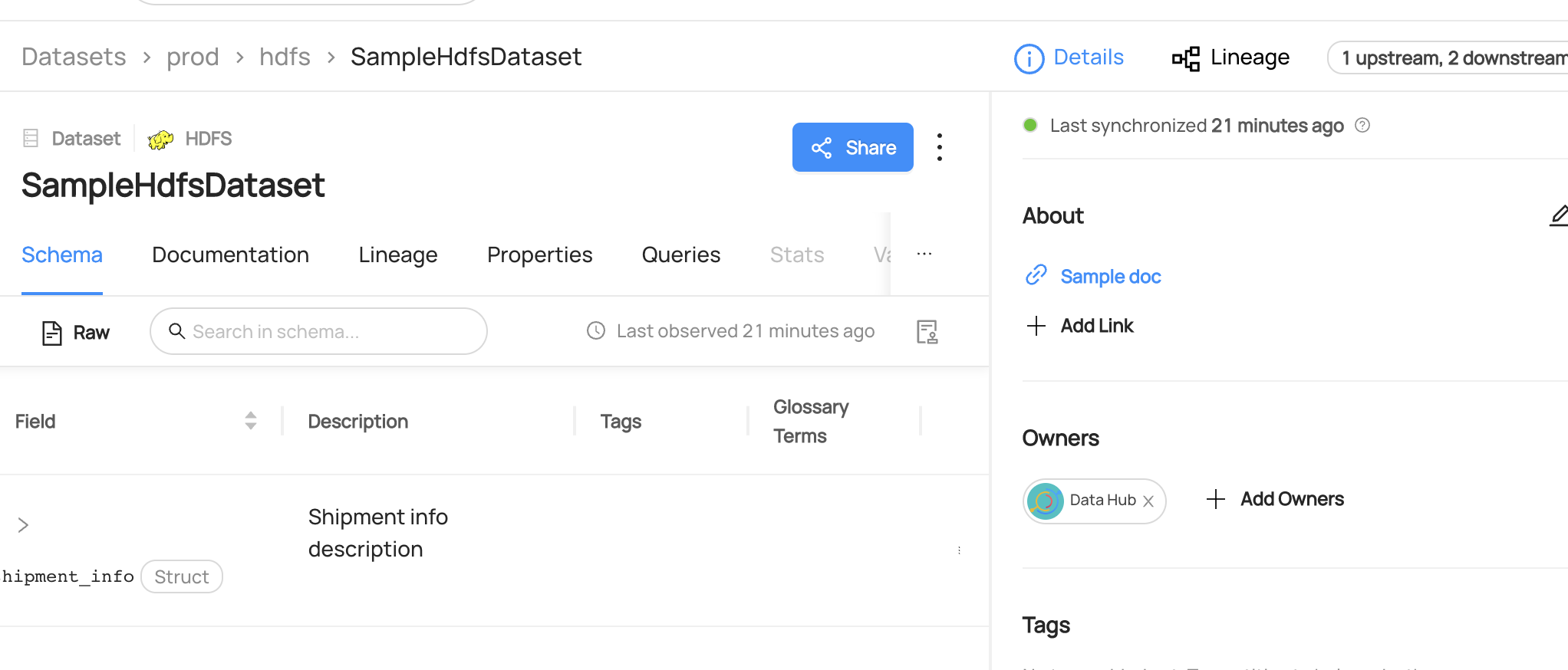

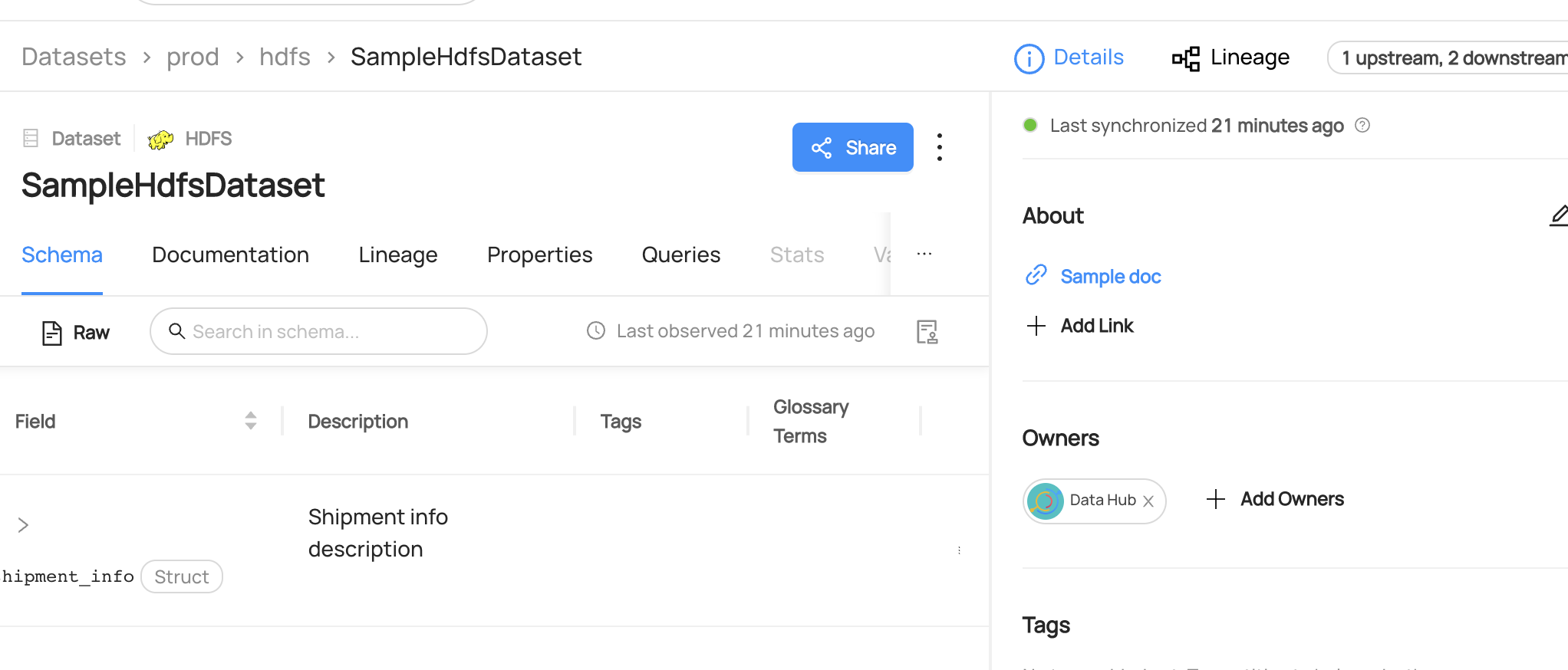

You can now see `John Doe` has been removed as an owner from the `fct_users_created` dataset.

-

+

+

+  +

+

+

diff --git a/docs/api/tutorials/tags.md b/docs/api/tutorials/tags.md

index 2f80a833136c1..b2234bf00bcb9 100644

--- a/docs/api/tutorials/tags.md

+++ b/docs/api/tutorials/tags.md

@@ -91,7 +91,11 @@ Expected Response:

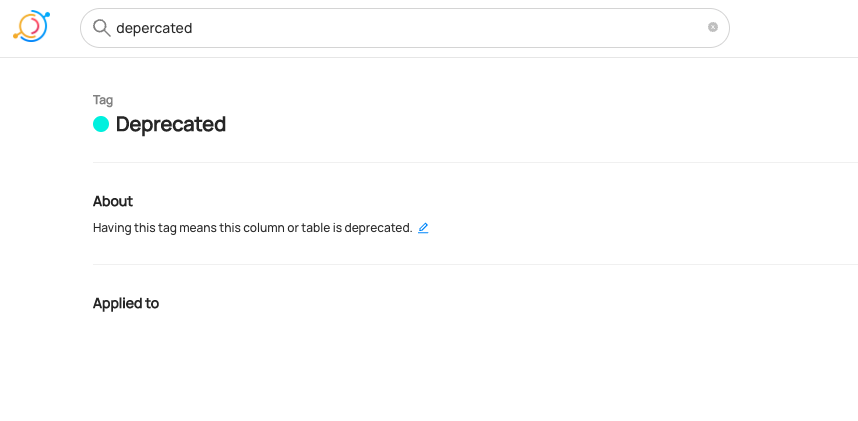

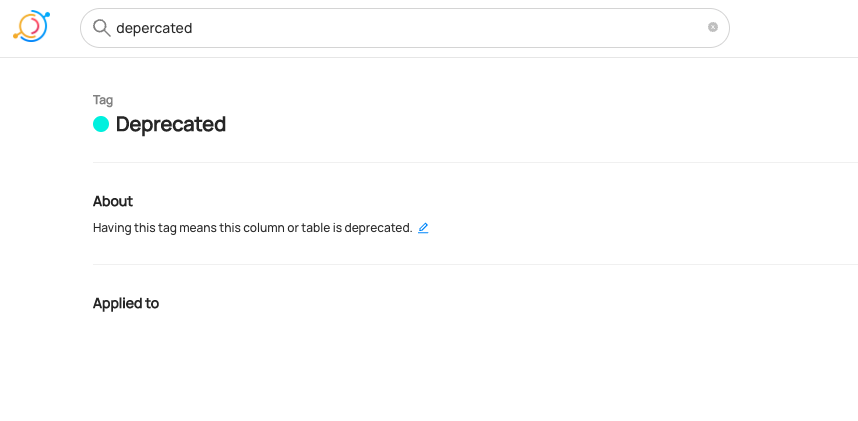

You can now see the new tag `Deprecated` has been created.

-

+

+

+  +

+

+

We can also verify this operation by programmatically searching for the `Deprecated` tag after running this code using the `datahub` cli.

@@ -307,7 +311,11 @@ Expected Response:

You can now see `Deprecated` tag has been added to `user_name` column.

-

+

+

+  +

+

+

We can also verify this operation programmatically by checking the `globalTags` aspect using the `datahub` cli.

@@ -359,7 +367,11 @@ curl --location --request POST 'http://localhost:8080/api/graphql' \

You can now see `Deprecated` tag has been removed from the `user_name` column.

-

+

+

+  +

+

+

We can also verify this operation programmatically by checking the `globalTags` aspect using the `datahub` cli.

diff --git a/docs/api/tutorials/terms.md b/docs/api/tutorials/terms.md

index 207e14ea4afe8..99acf77d26ab0 100644

--- a/docs/api/tutorials/terms.md

+++ b/docs/api/tutorials/terms.md

@@ -95,7 +95,11 @@ Expected Response:

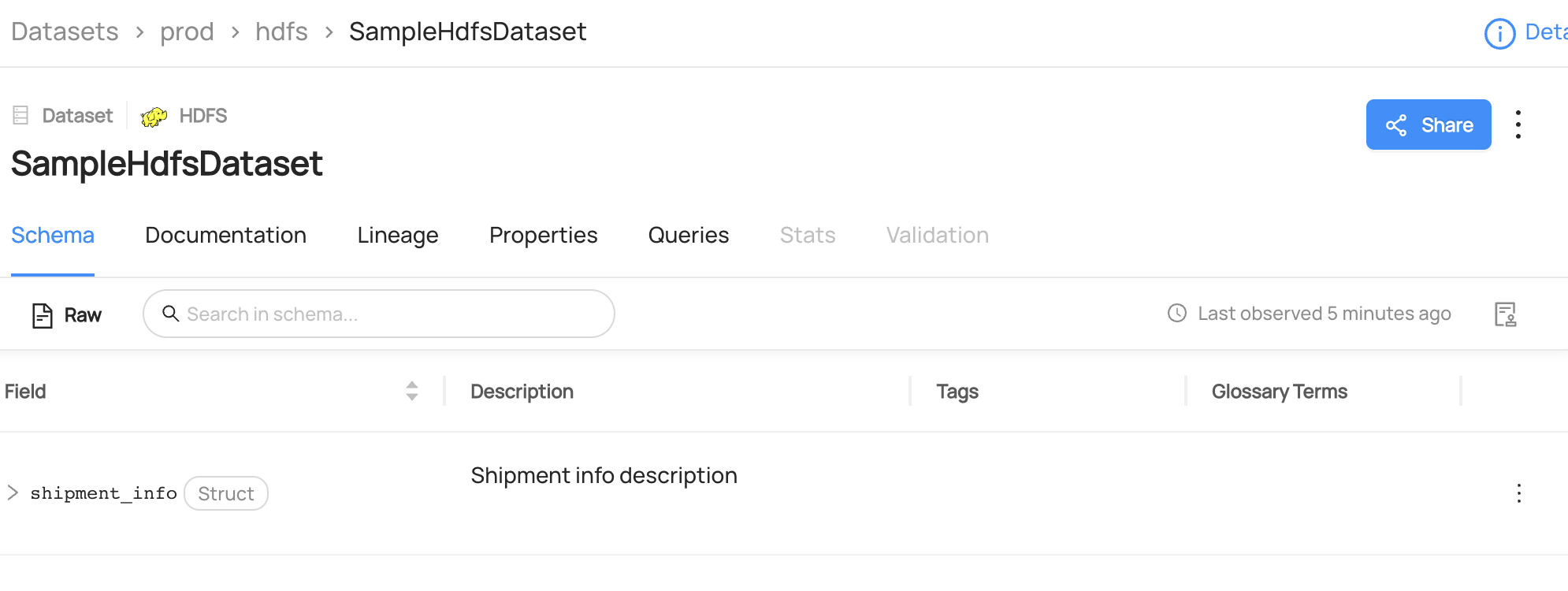

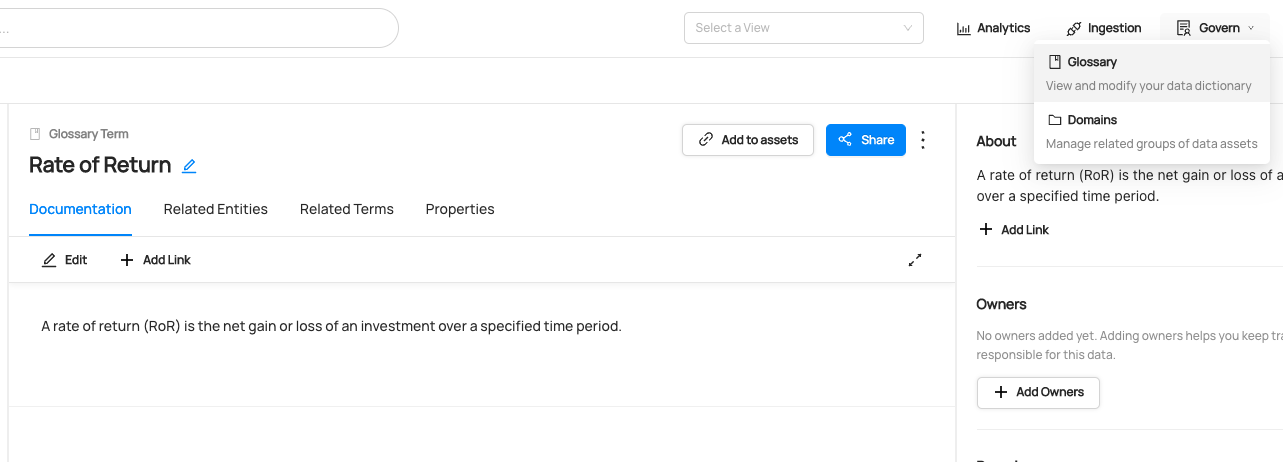

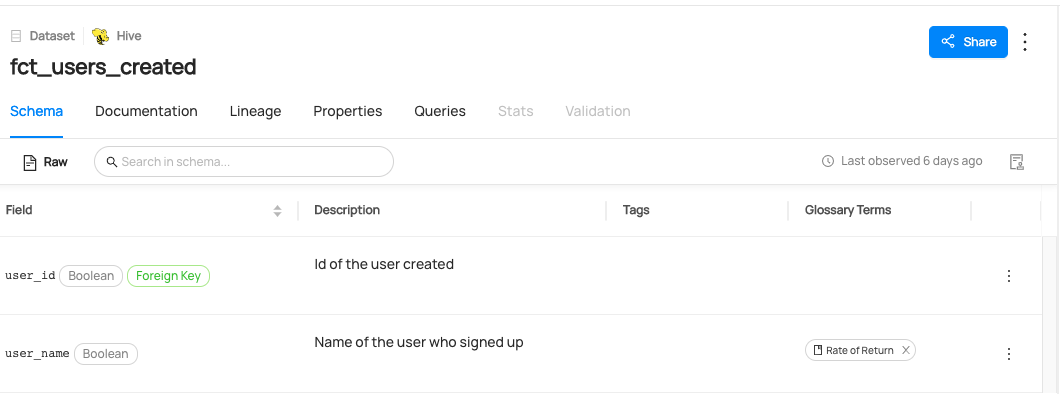

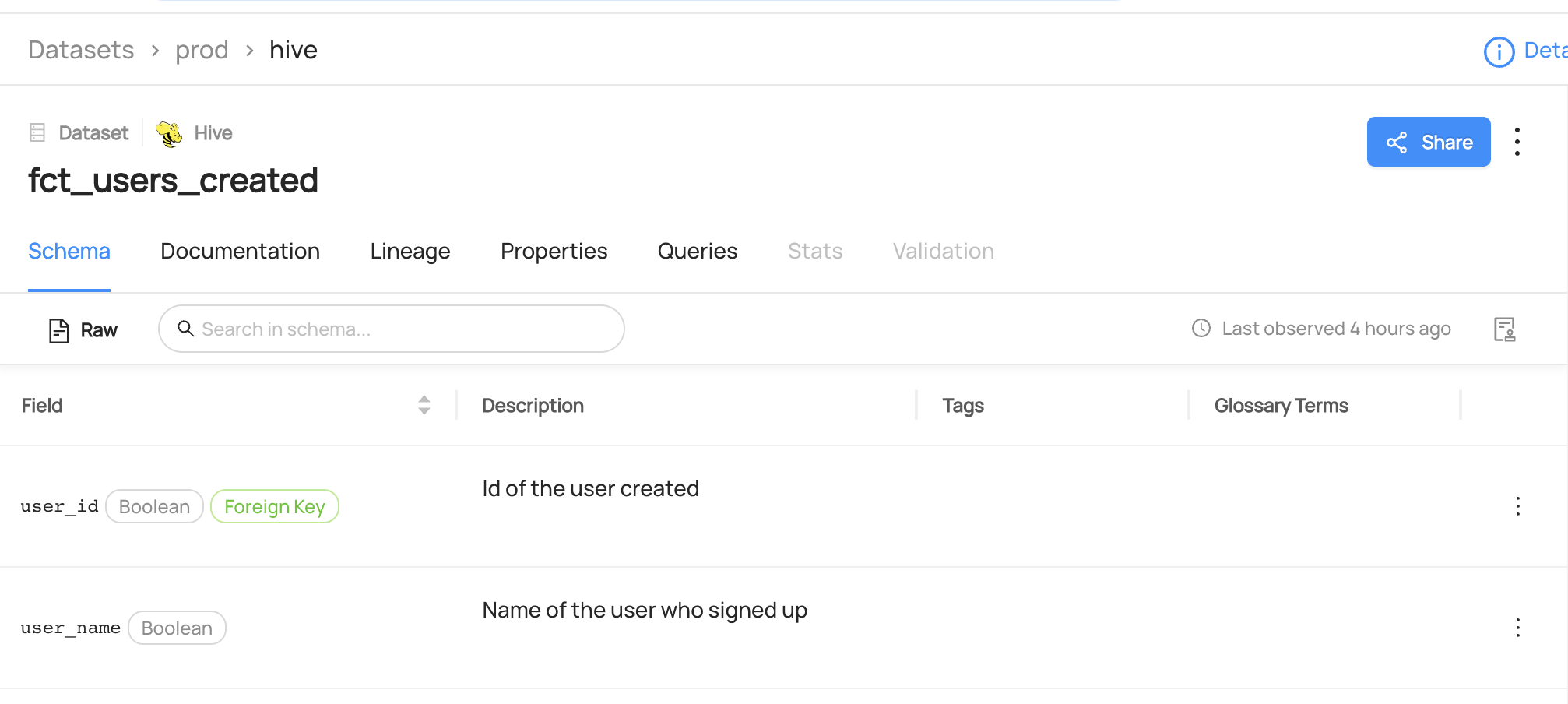

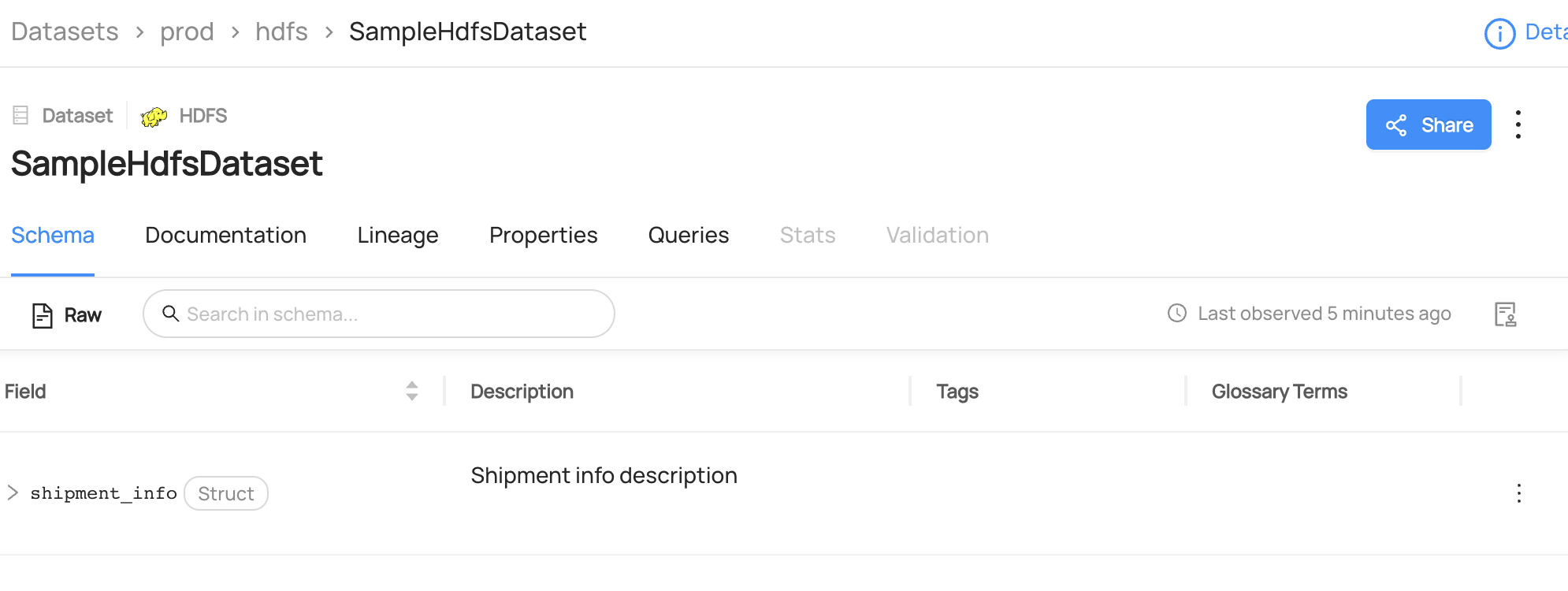

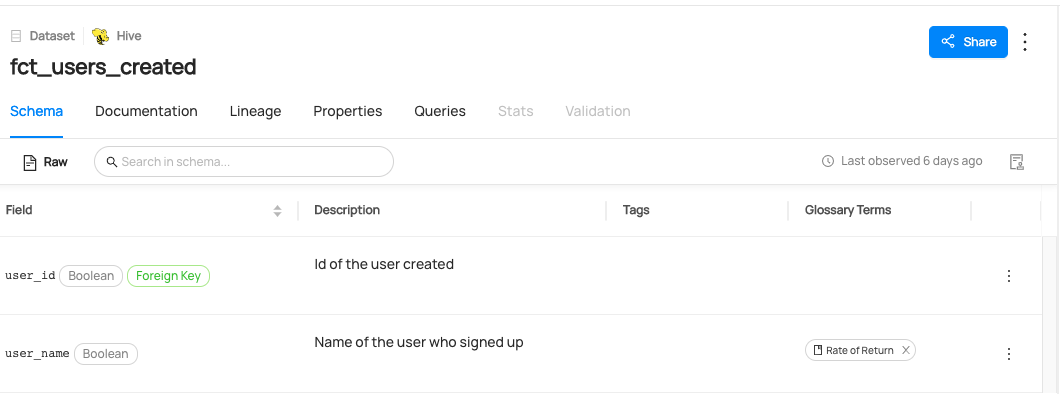

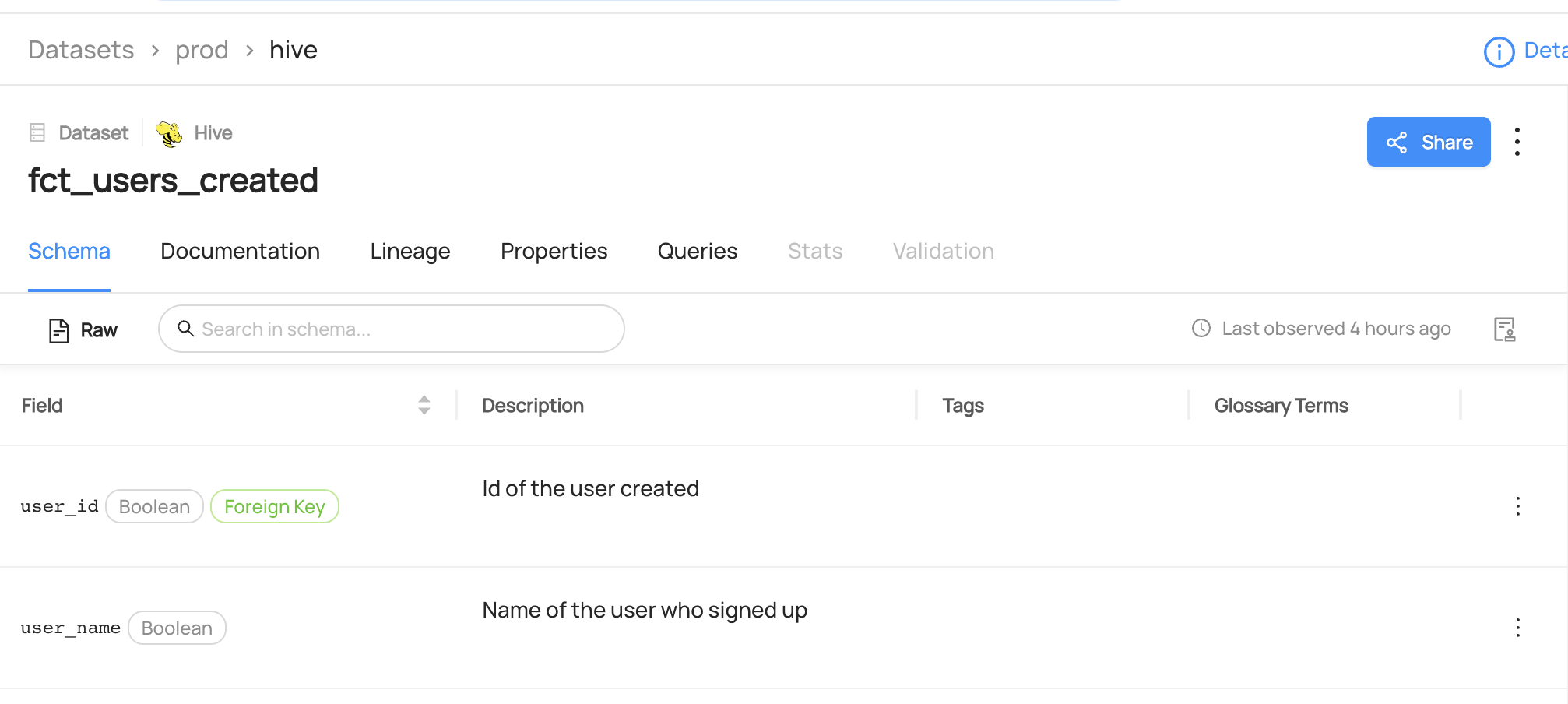

You can now see the new term `Rate of Return` has been created.

-

+

+

+  +

+

+

We can also verify this operation by programmatically searching for the `Rate of Return` term after running this code using the `datahub` cli.

@@ -289,7 +293,11 @@ Expected Response:

You can now see `Rate of Return` term has been added to `user_name` column.

-

+

+

+  +

+

+

## Remove Terms

@@ -361,4 +369,8 @@ curl --location --request POST 'http://localhost:8080/api/graphql' \

You can now see `Rate of Return` term has been removed from the `user_name` column.

-

+

+

+  +

+

+

diff --git a/docs/architecture/architecture.md b/docs/architecture/architecture.md

index 6b76b995cc427..6a9c1860d71b0 100644

--- a/docs/architecture/architecture.md

+++ b/docs/architecture/architecture.md

@@ -10,8 +10,16 @@ disparate tools & systems.

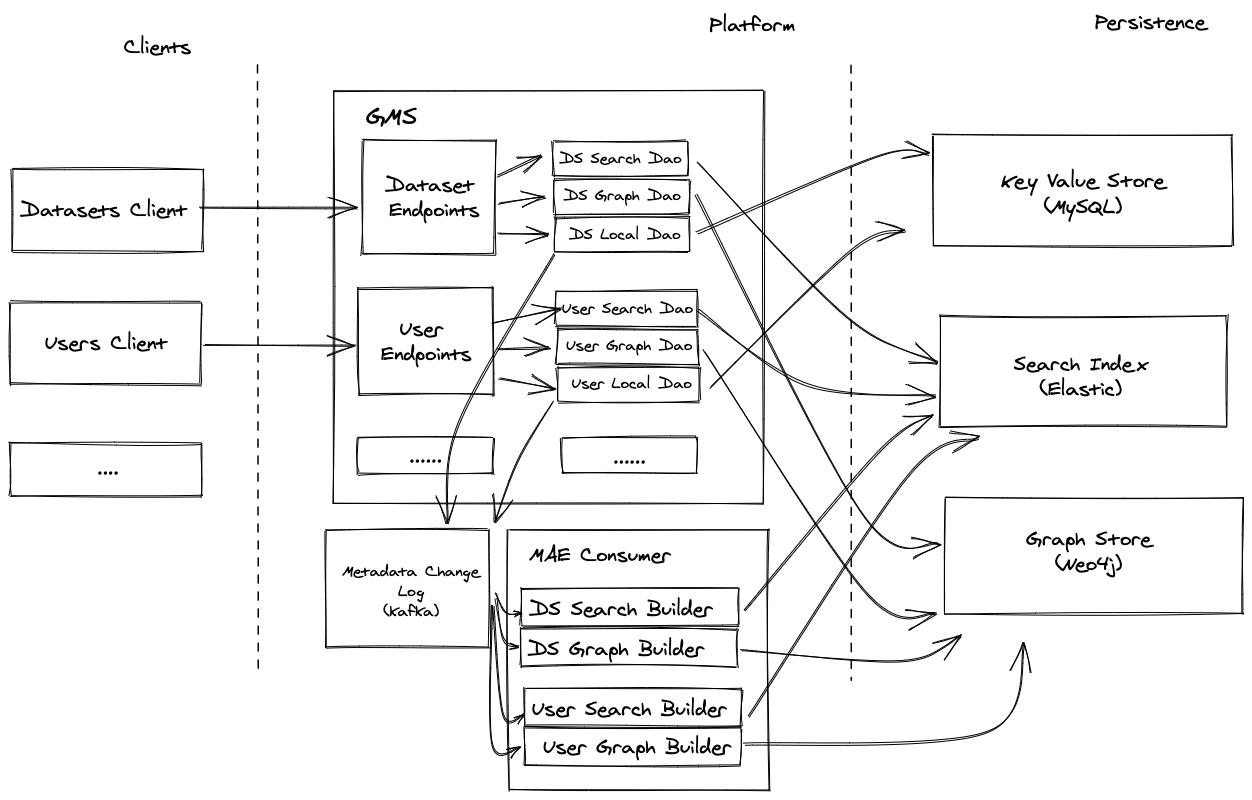

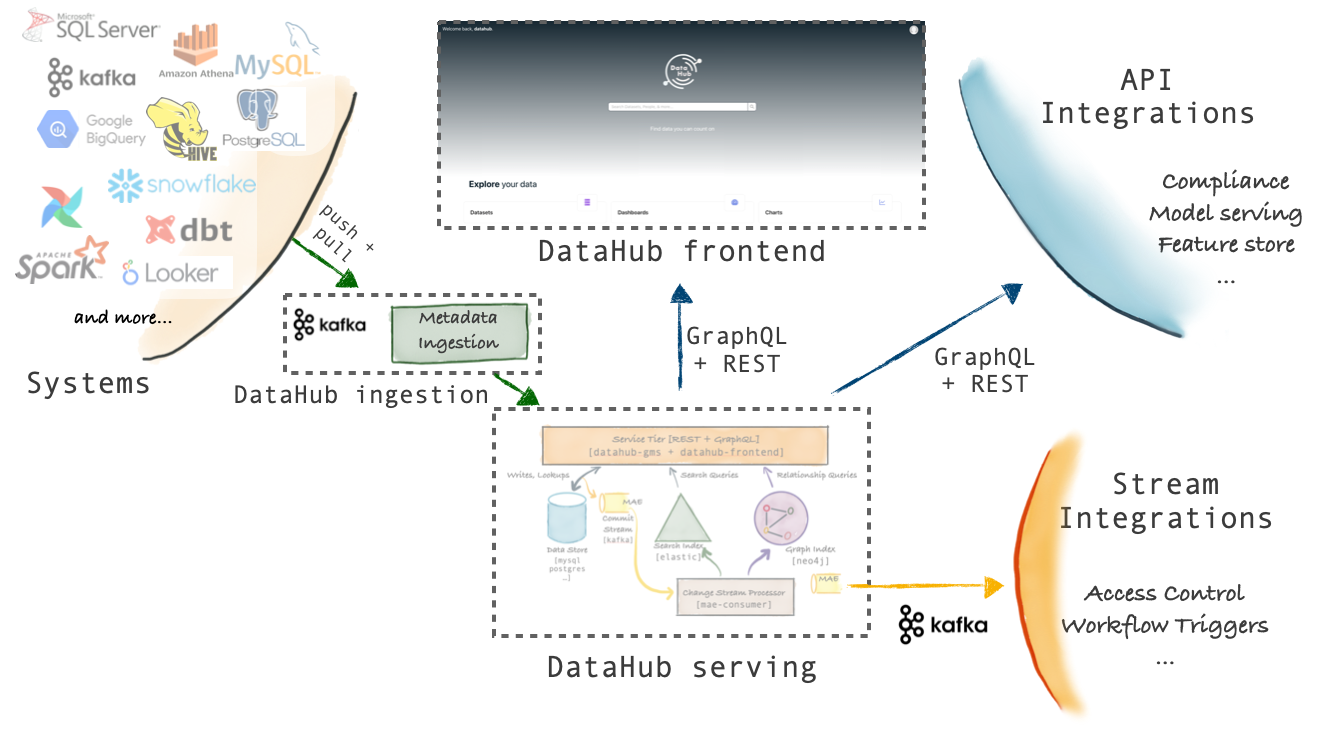

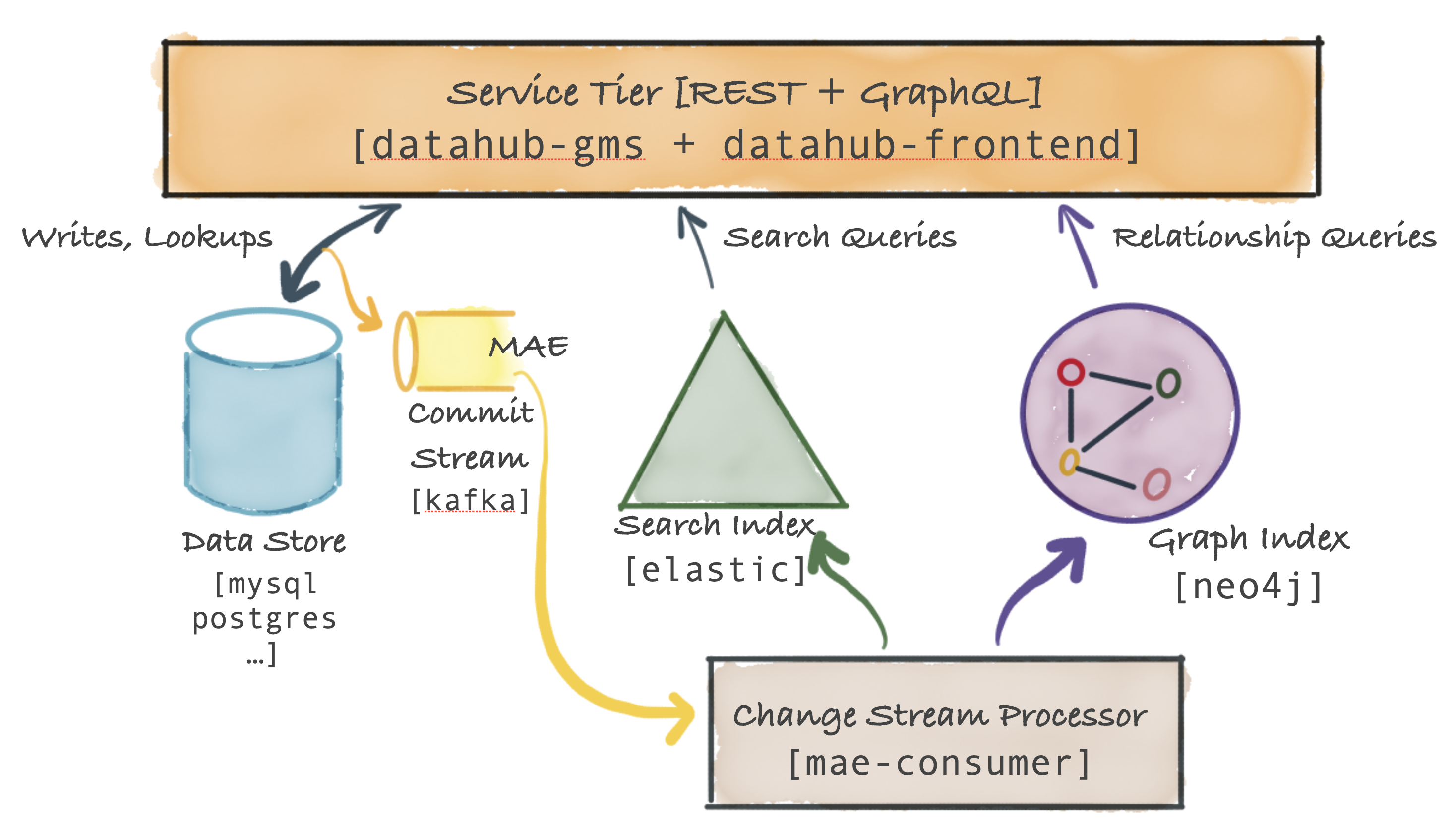

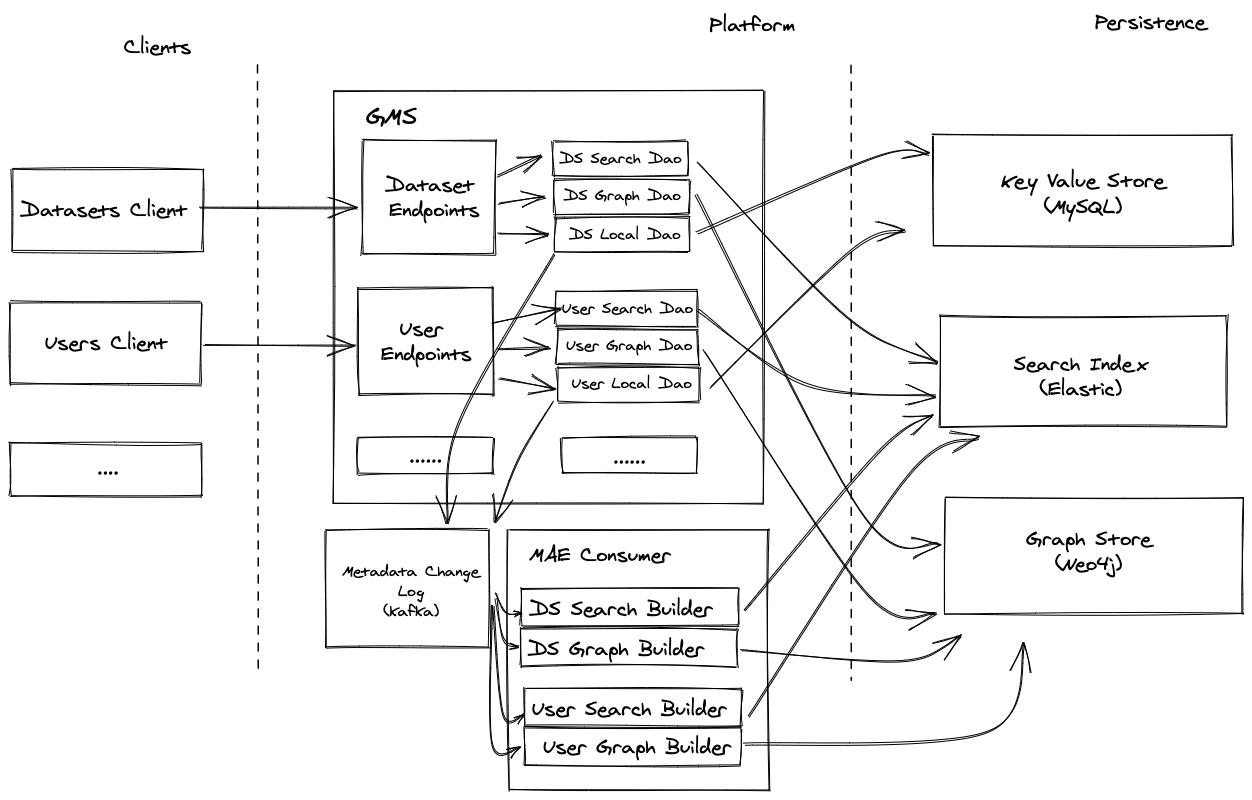

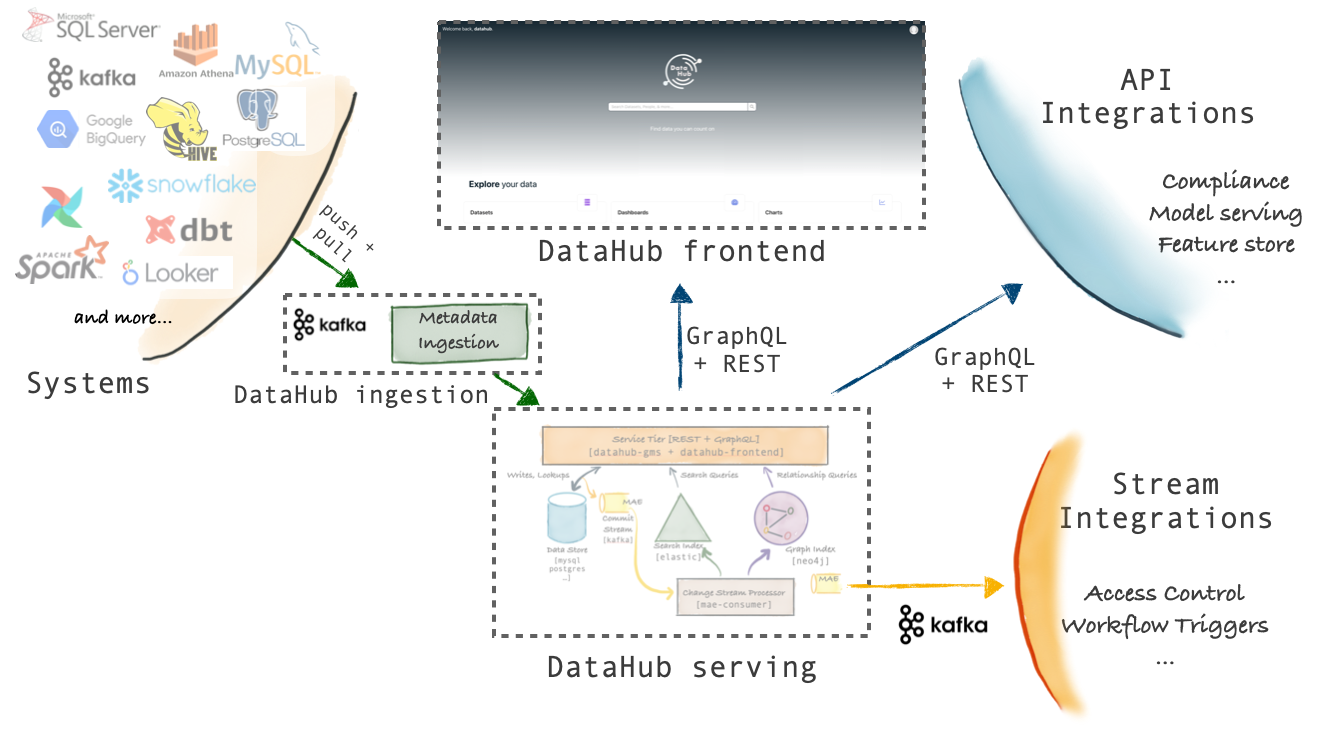

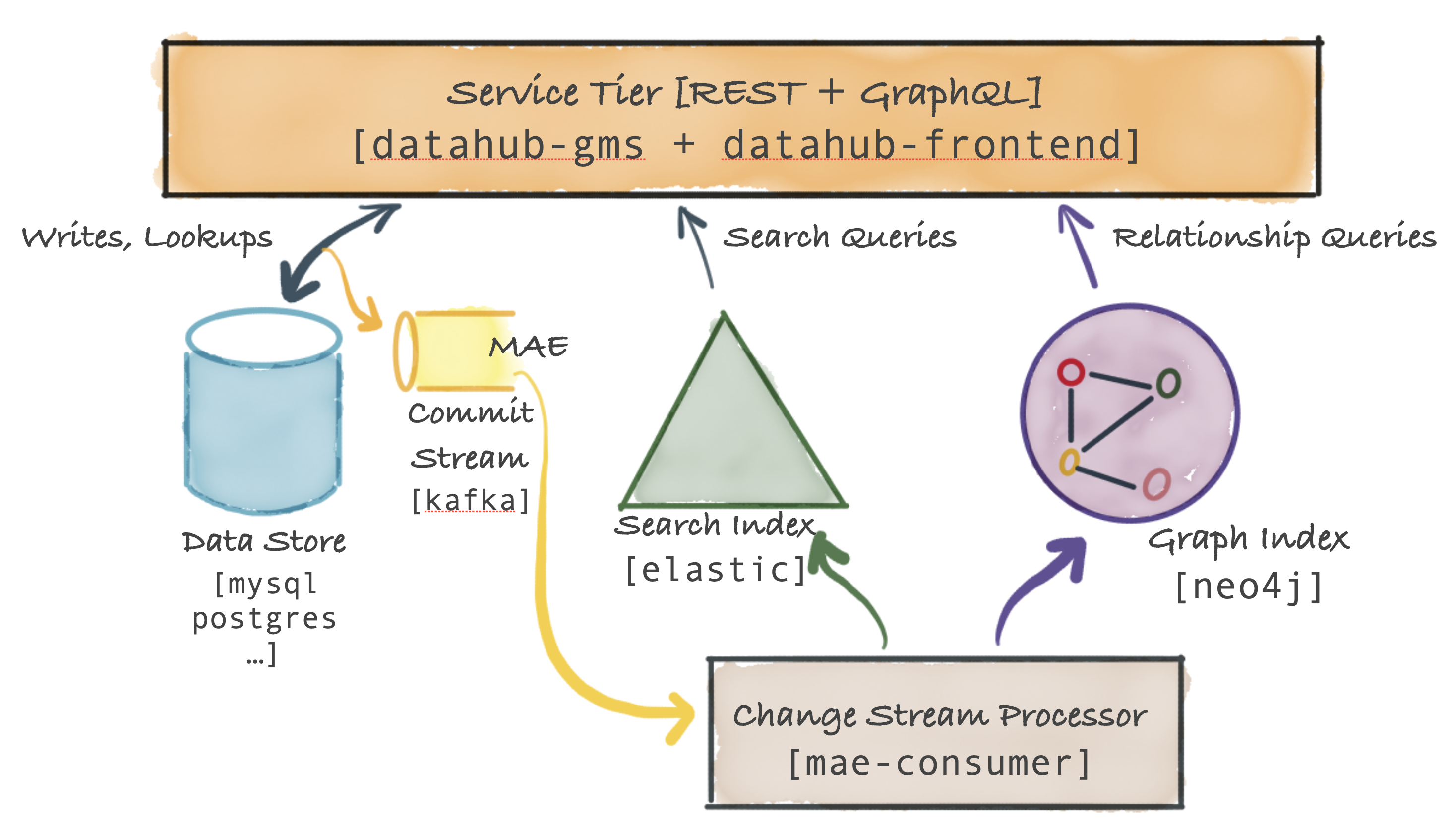

The figures below describe the high-level architecture of DataHub.

-

-

+

+

+  +

+

+

+

+

+  +

+

+

For a more detailed look at the components that make up the Architecture, check out [Components](../components.md).

diff --git a/docs/architecture/metadata-ingestion.md b/docs/architecture/metadata-ingestion.md

index 2b60383319c68..abf8fc24d1385 100644

--- a/docs/architecture/metadata-ingestion.md

+++ b/docs/architecture/metadata-ingestion.md

@@ -6,7 +6,11 @@ title: "Ingestion Framework"

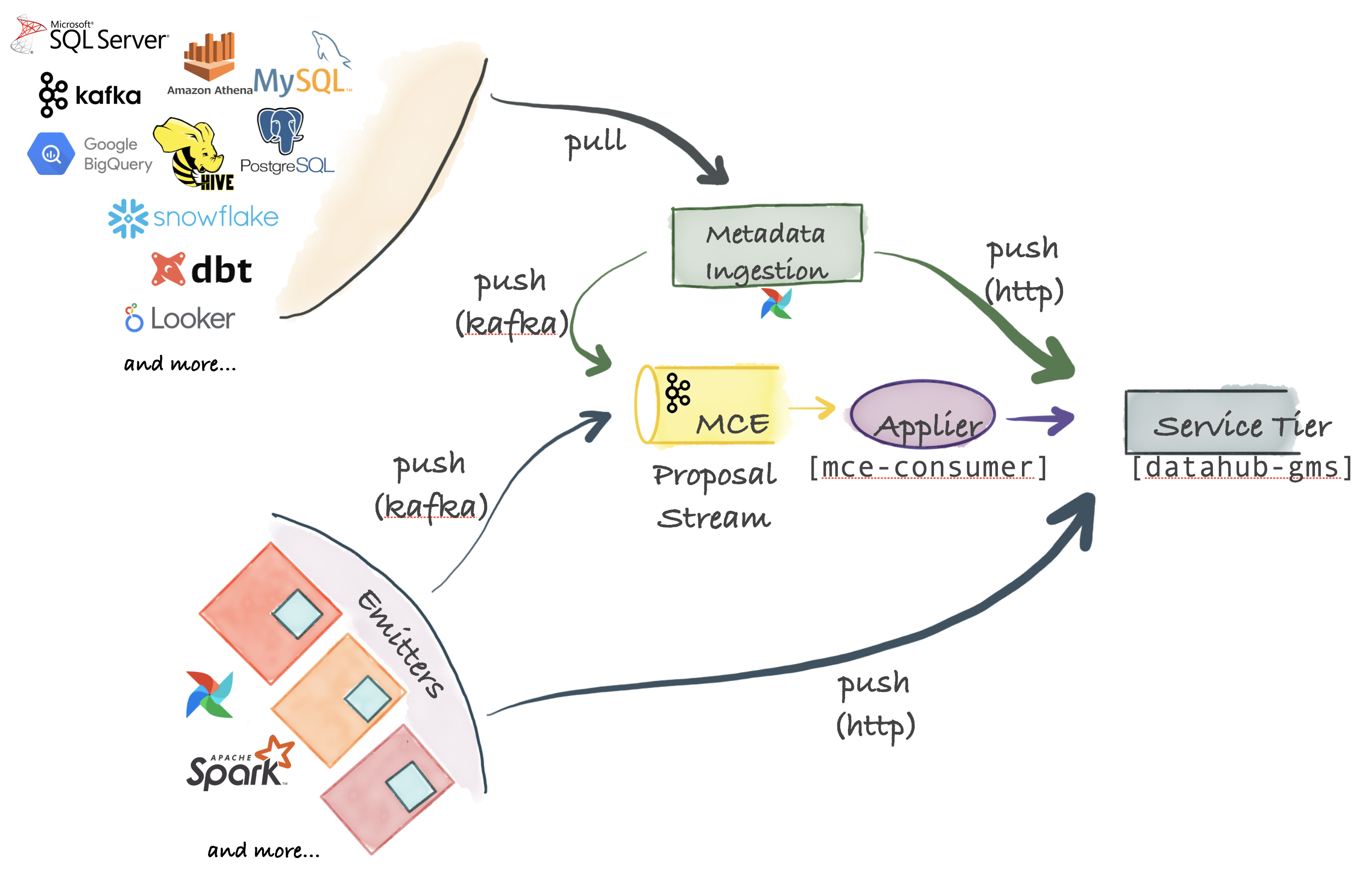

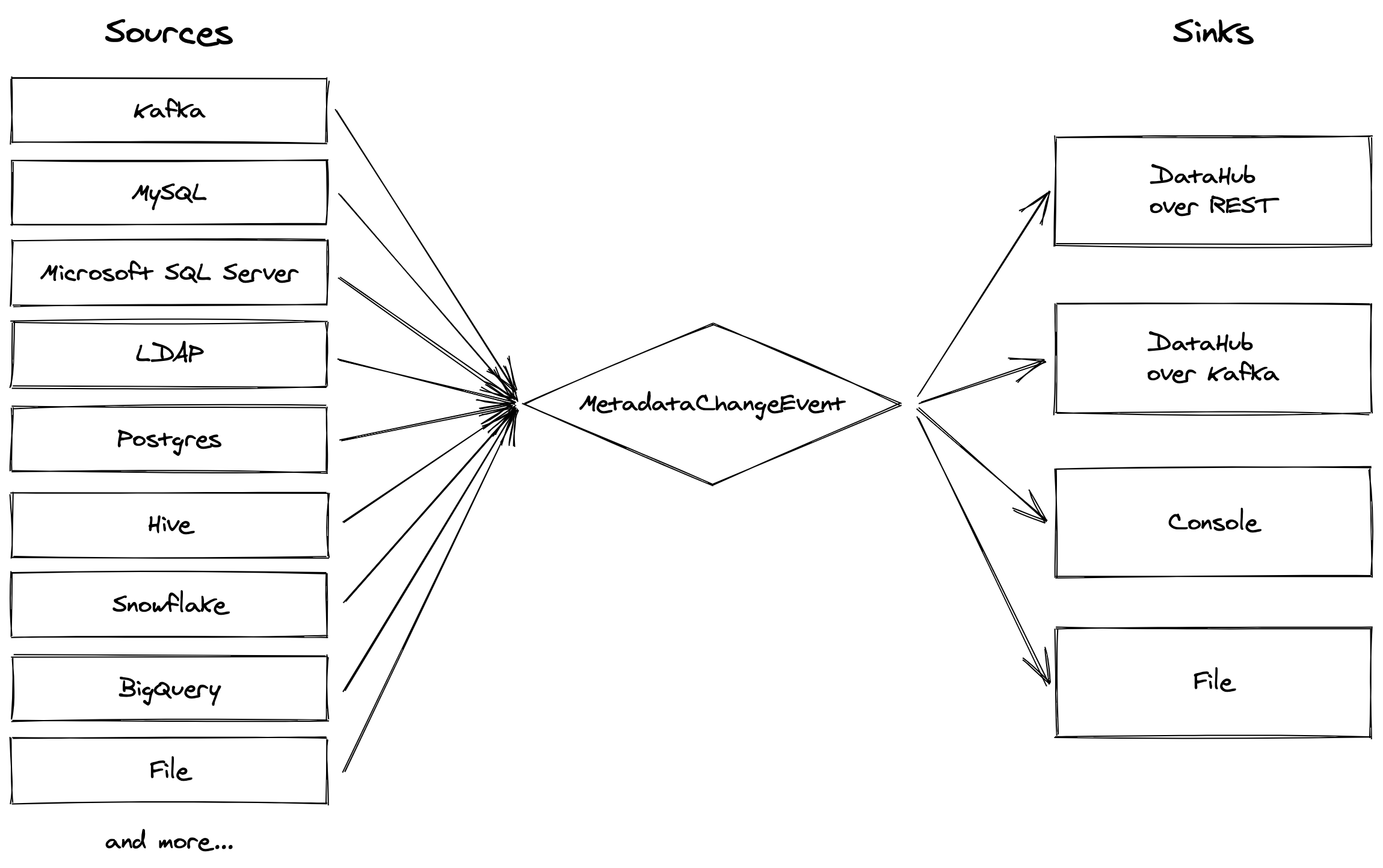

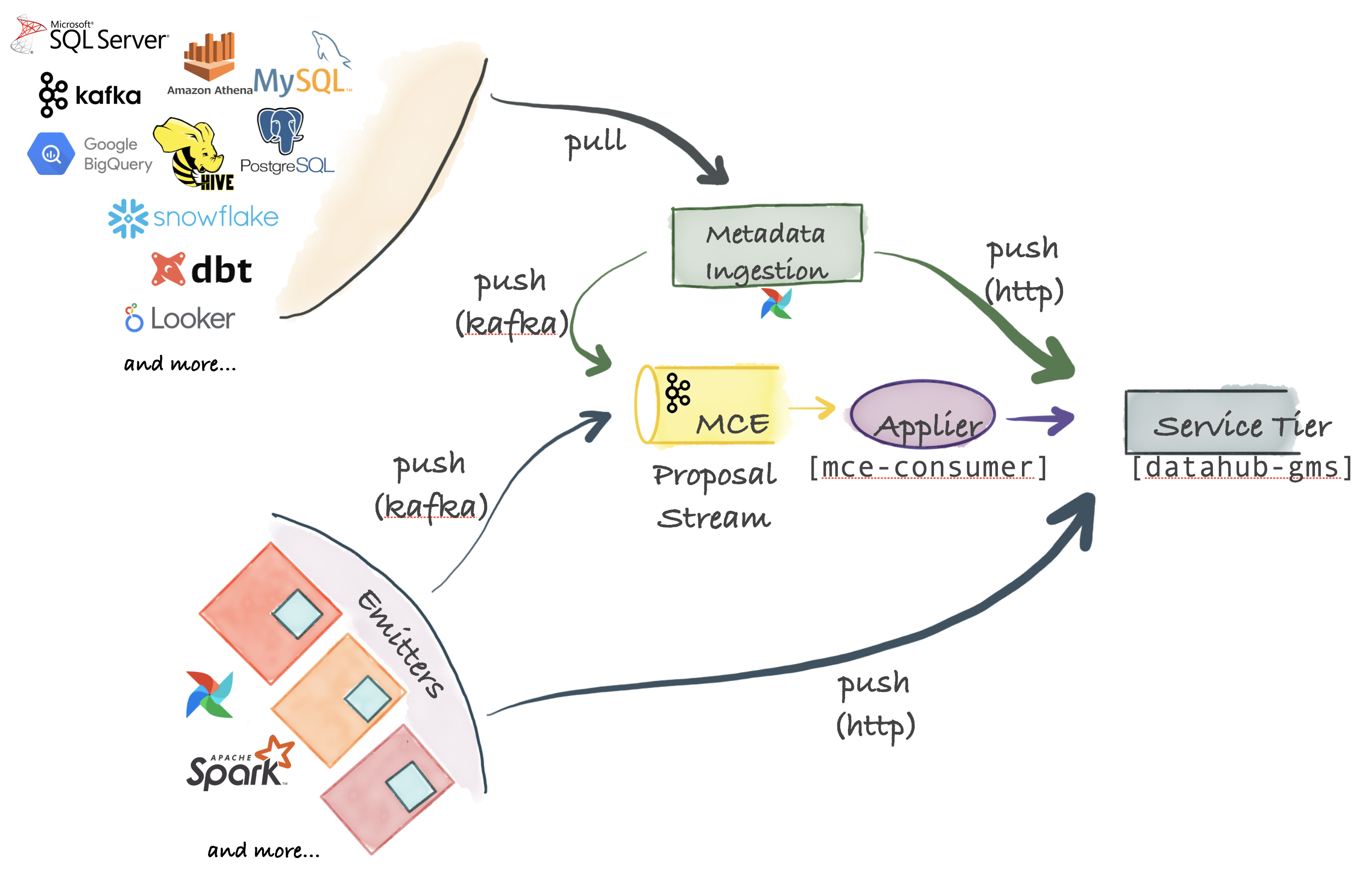

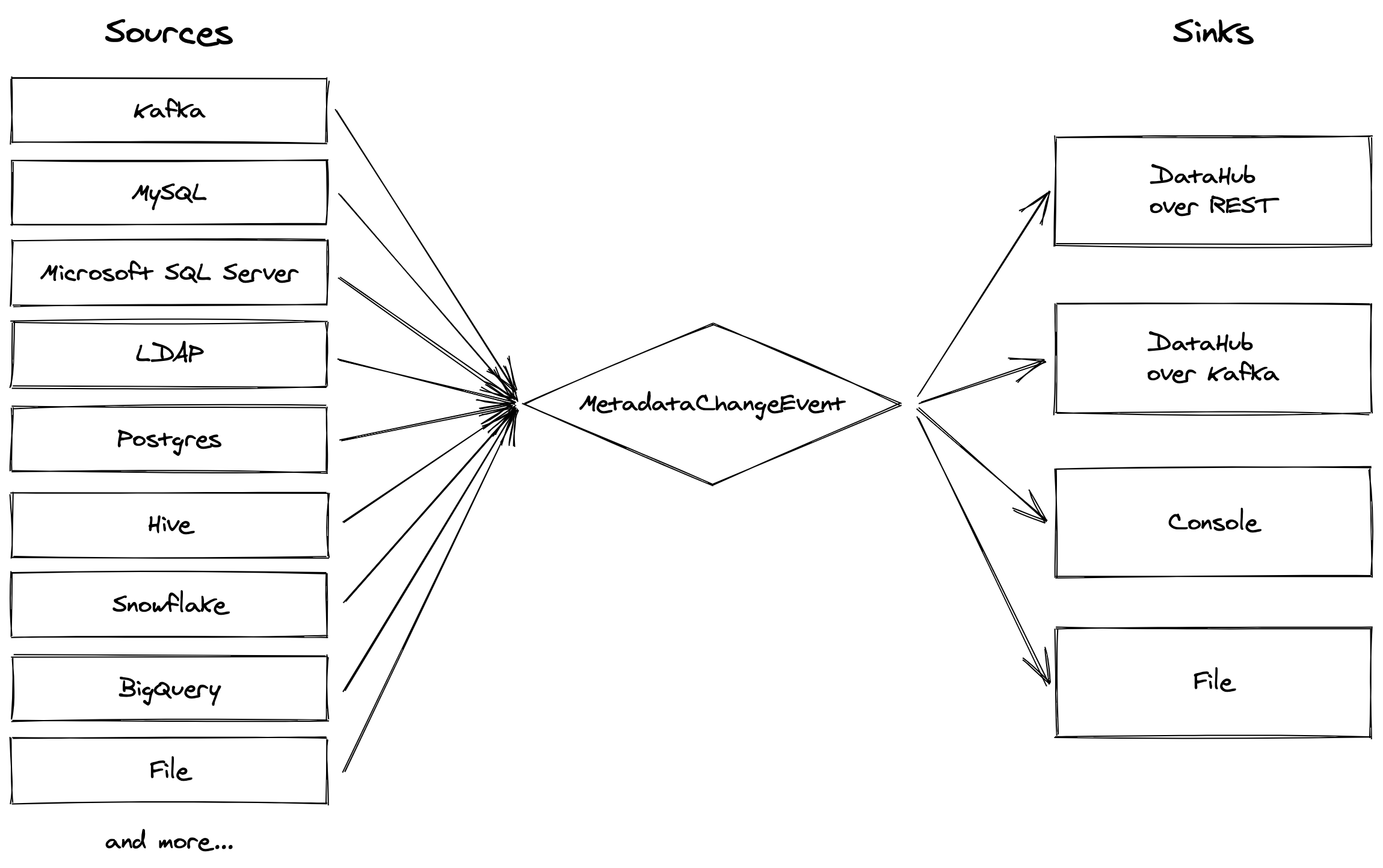

DataHub supports an extremely flexible ingestion architecture that can support push, pull, asynchronous and synchronous models.

The figure below describes all the options possible for connecting your favorite system to DataHub.

-

+

+

+  +

+

+

## Metadata Change Proposal: The Center Piece

diff --git a/docs/architecture/metadata-serving.md b/docs/architecture/metadata-serving.md

index ada41179af4e0..57194f49d5ea4 100644

--- a/docs/architecture/metadata-serving.md

+++ b/docs/architecture/metadata-serving.md

@@ -6,7 +6,11 @@ title: "Serving Tier"

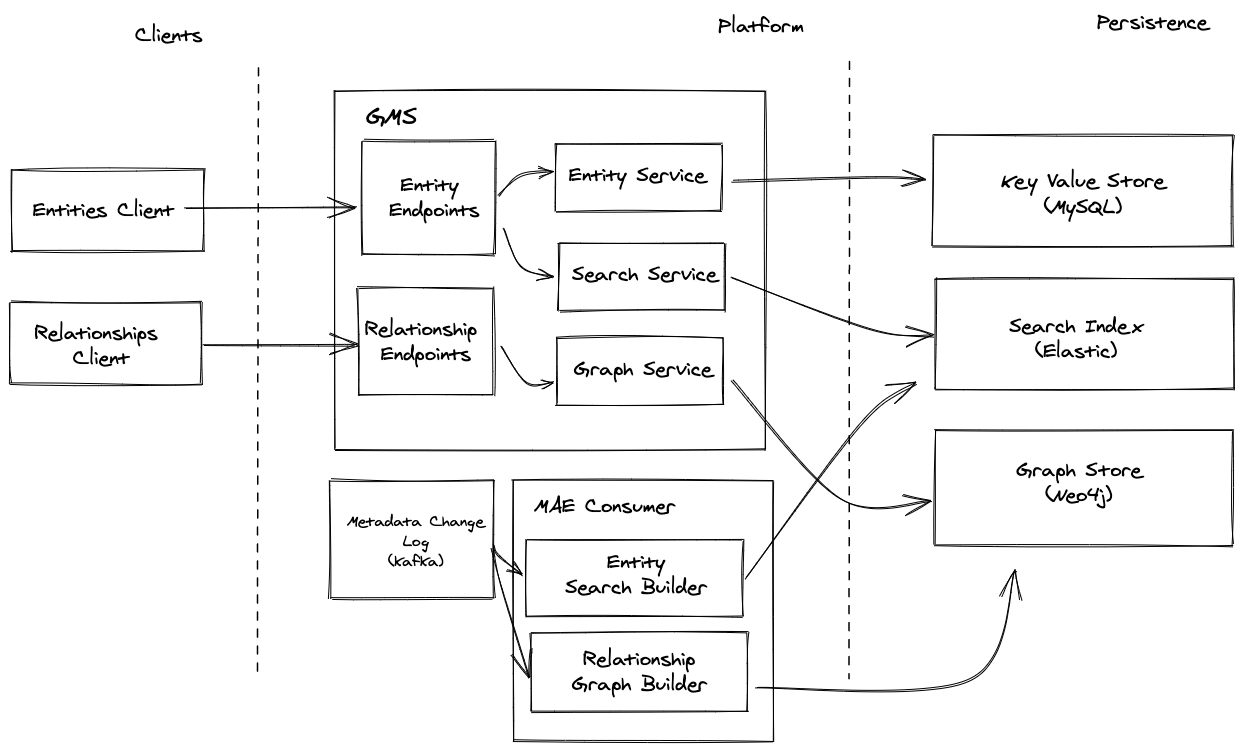

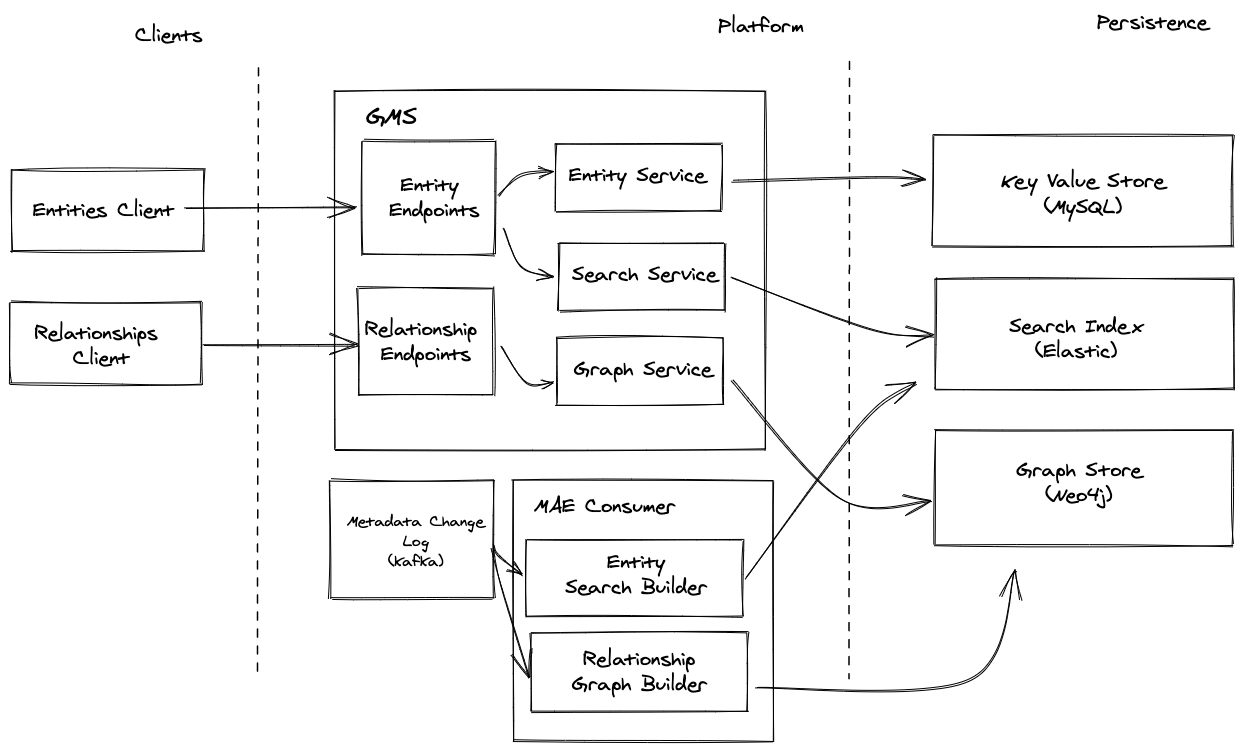

The figure below shows the high-level system diagram for DataHub's Serving Tier.

-

+

+

+  +

+

+

The primary component is called [the Metadata Service](../../metadata-service) and exposes a REST API and a GraphQL API for performing CRUD operations on metadata. The service also exposes search and graph query APIs to support secondary-index style queries, full-text search queries as well as relationship queries like lineage. In addition, the [datahub-frontend](../../datahub-frontend) service exposes a GraphQL API on top of the metadata graph.

diff --git a/docs/authentication/concepts.md b/docs/authentication/concepts.md

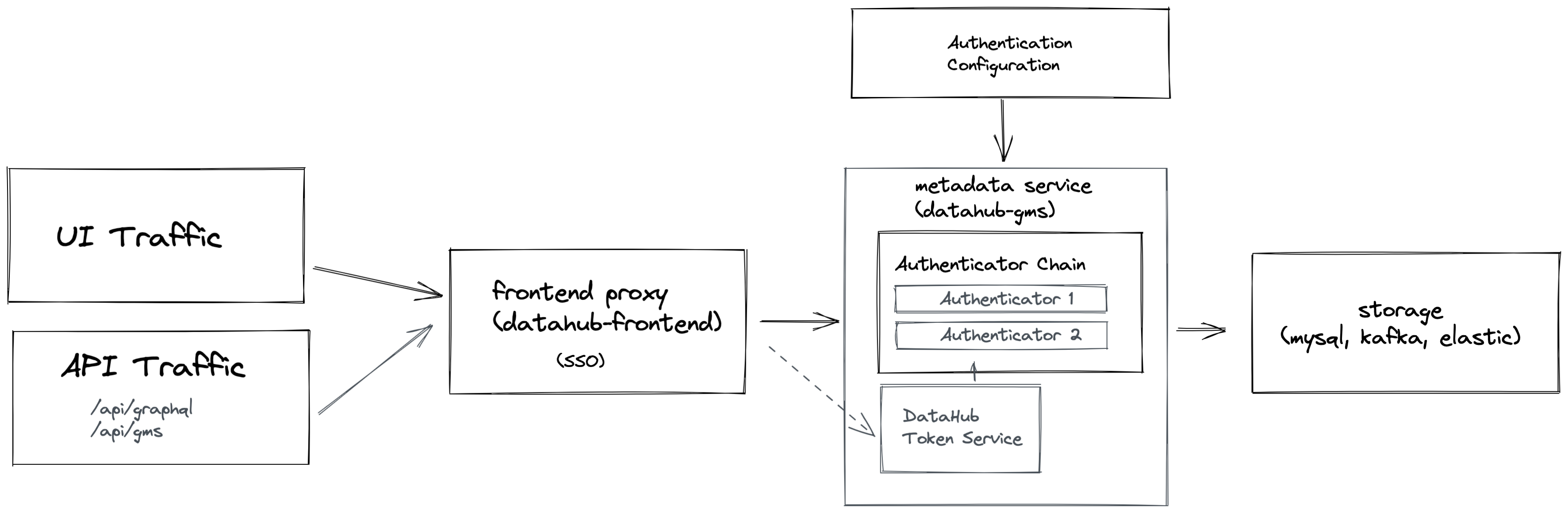

index 715e94c7e0380..0940f86a805f1 100644

--- a/docs/authentication/concepts.md

+++ b/docs/authentication/concepts.md

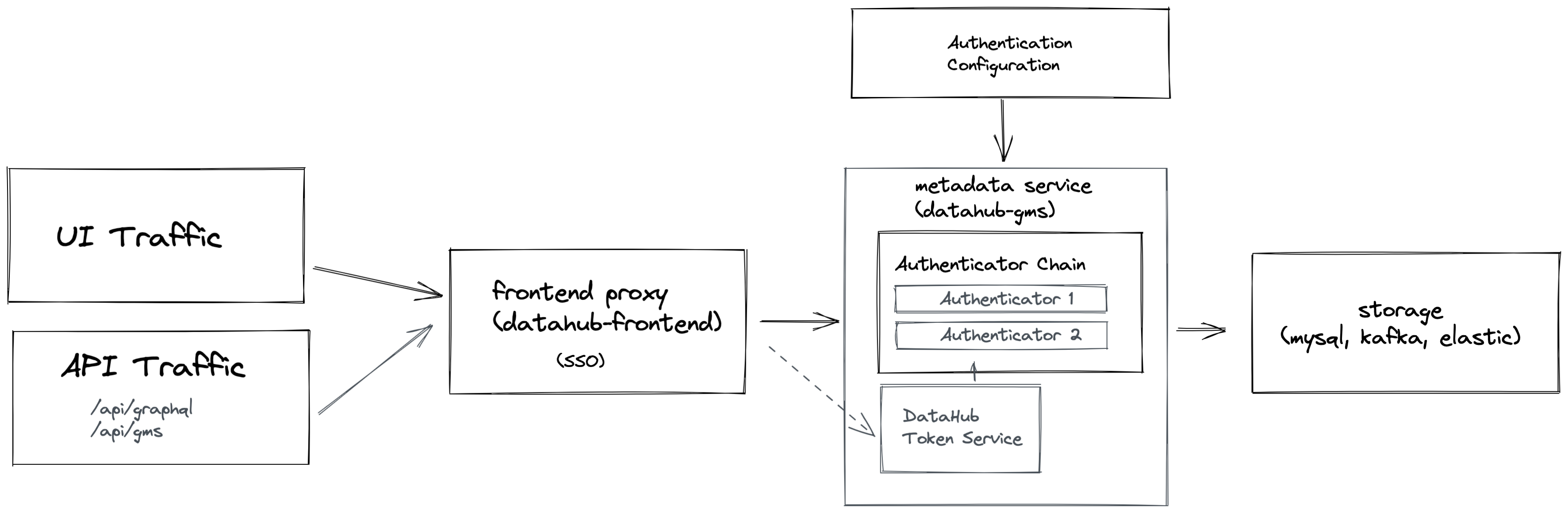

@@ -11,7 +11,11 @@ We introduced a few important concepts to the Metadata Service to make authentic

In the following sections, we'll take a closer look at each individually.

-

+

+

+  +

+

+

*High level overview of Metadata Service Authentication*

## What is an Actor?

diff --git a/docs/authentication/guides/sso/configure-oidc-react-azure.md b/docs/authentication/guides/sso/configure-oidc-react-azure.md

index d185957967882..177387327c0e8 100644

--- a/docs/authentication/guides/sso/configure-oidc-react-azure.md

+++ b/docs/authentication/guides/sso/configure-oidc-react-azure.md

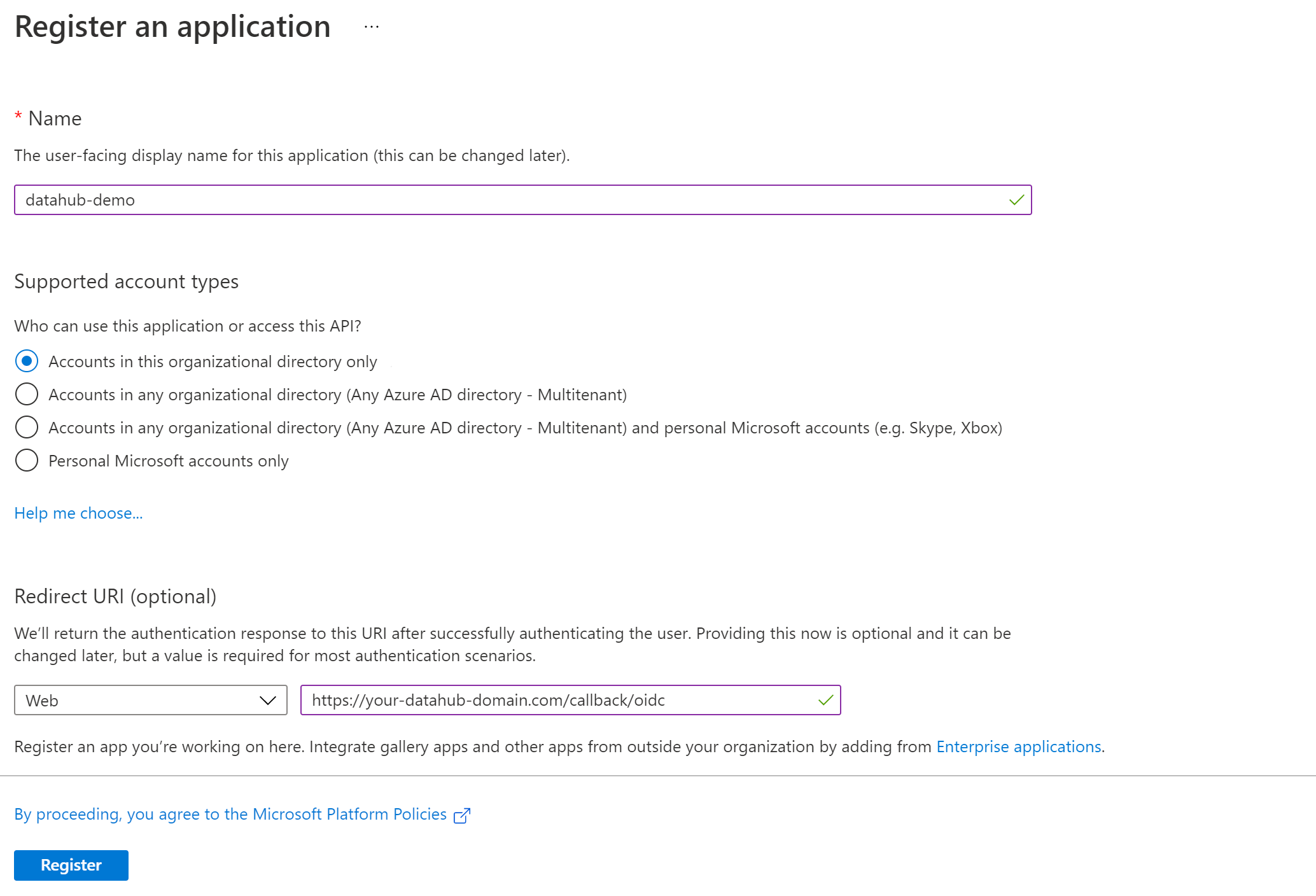

@@ -32,7 +32,11 @@ Azure supports more than one redirect URI, so both can be configured at the same

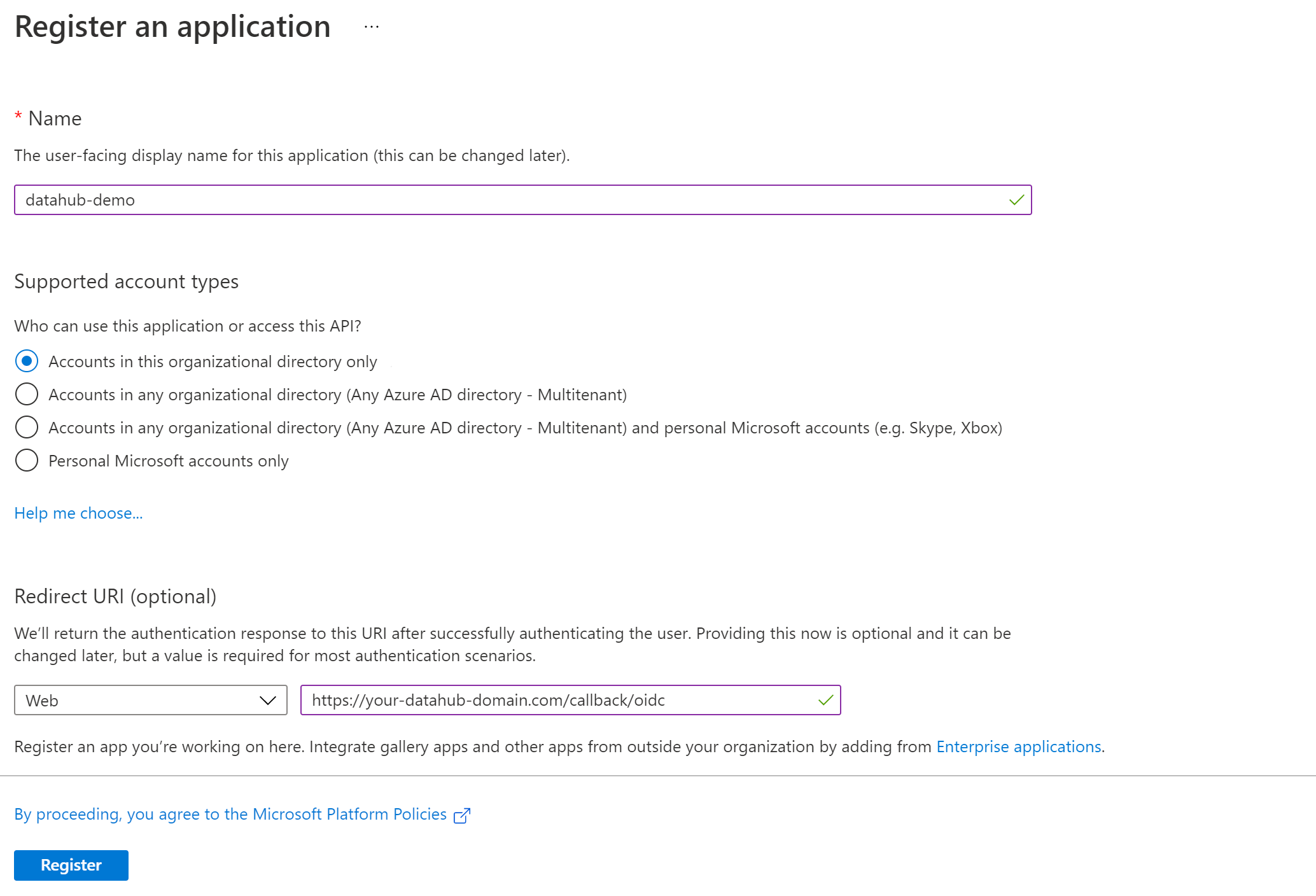

At this point, your app registration should look like the following:

-

+

+

+  +

+

+

e. Click **Register**.

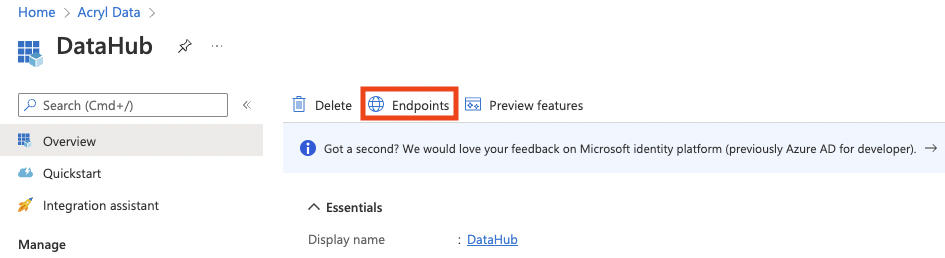

@@ -40,7 +44,11 @@ e. Click **Register**.

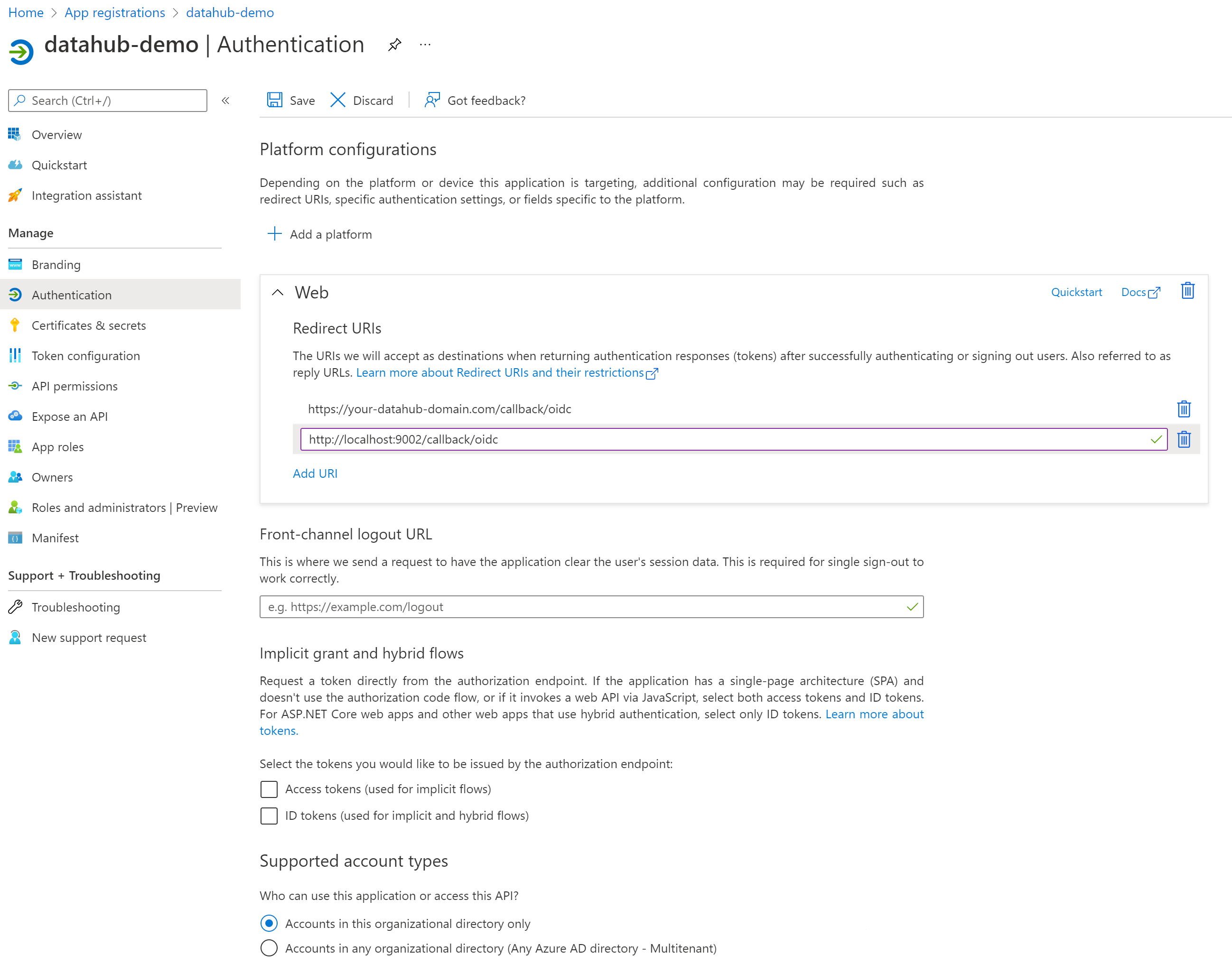

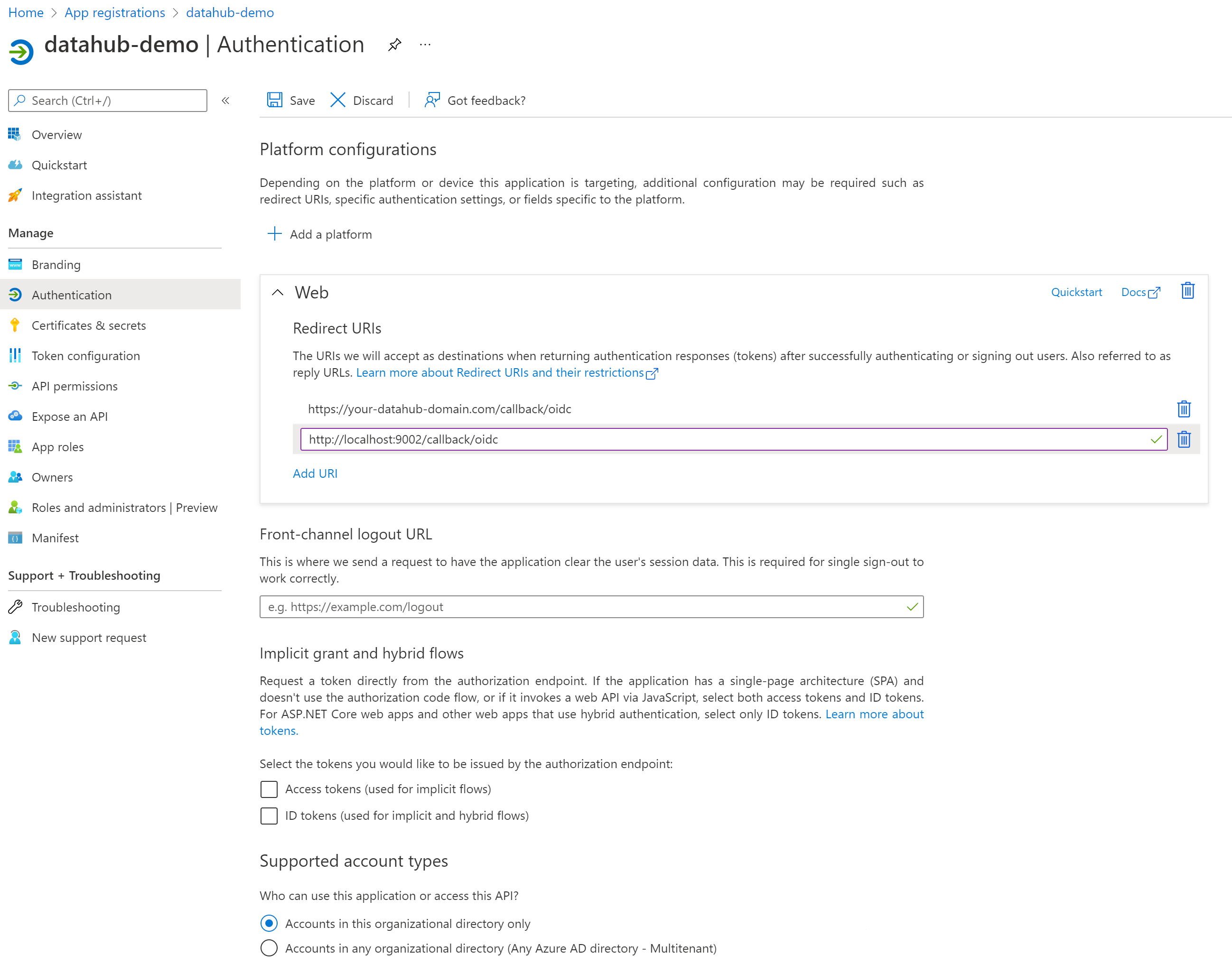

Once registration is done, you will land on the app registration **Overview** tab. On the left-side navigation bar, click on **Authentication** under **Manage** and add extra redirect URIs if need be (if you want to support both local testing and Azure deployments).

-

+

+

+  +

+

+

Click **Save**.

@@ -51,7 +59,11 @@ Select **Client secrets**, then **New client secret**. Type in a meaningful des

**IMPORTANT:** Copy the `value` of your newly created secret since Azure will never display its value afterwards.

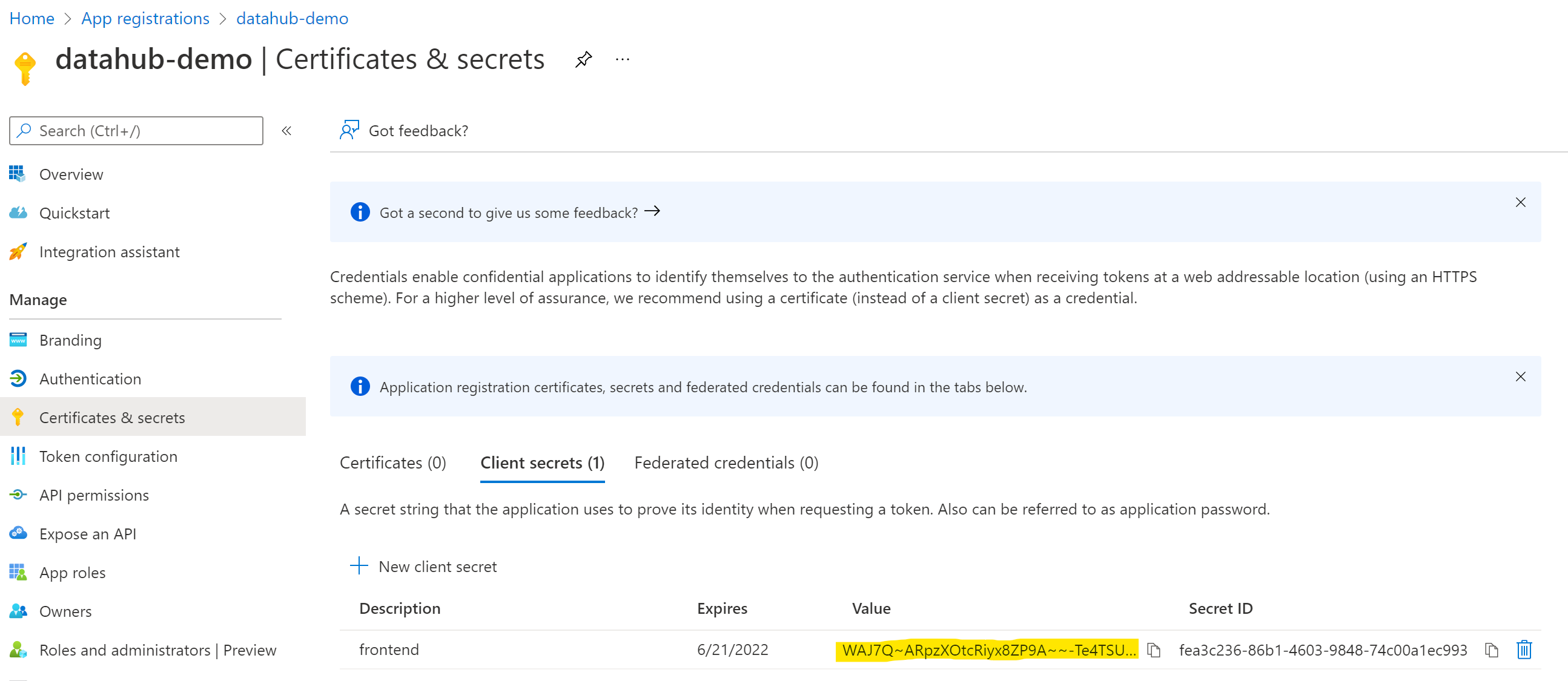

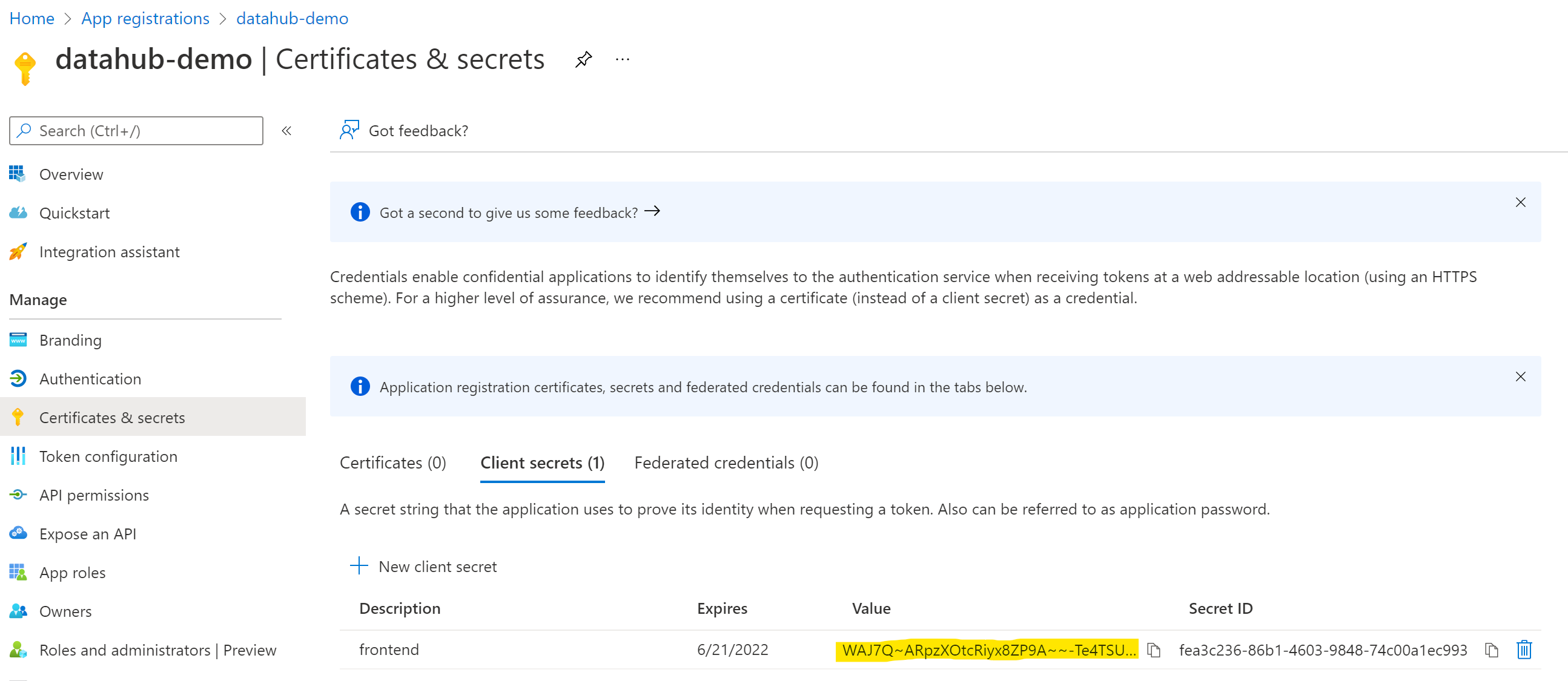

-

+

+

+  +

+

+

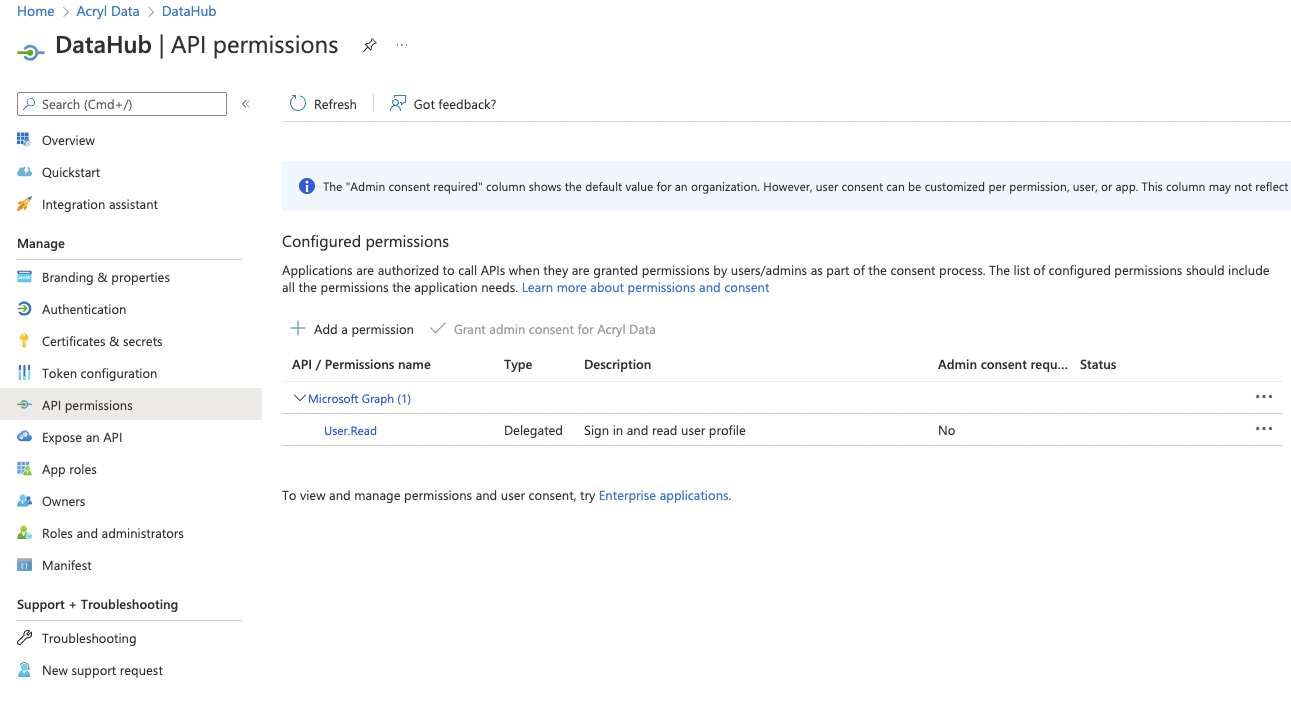

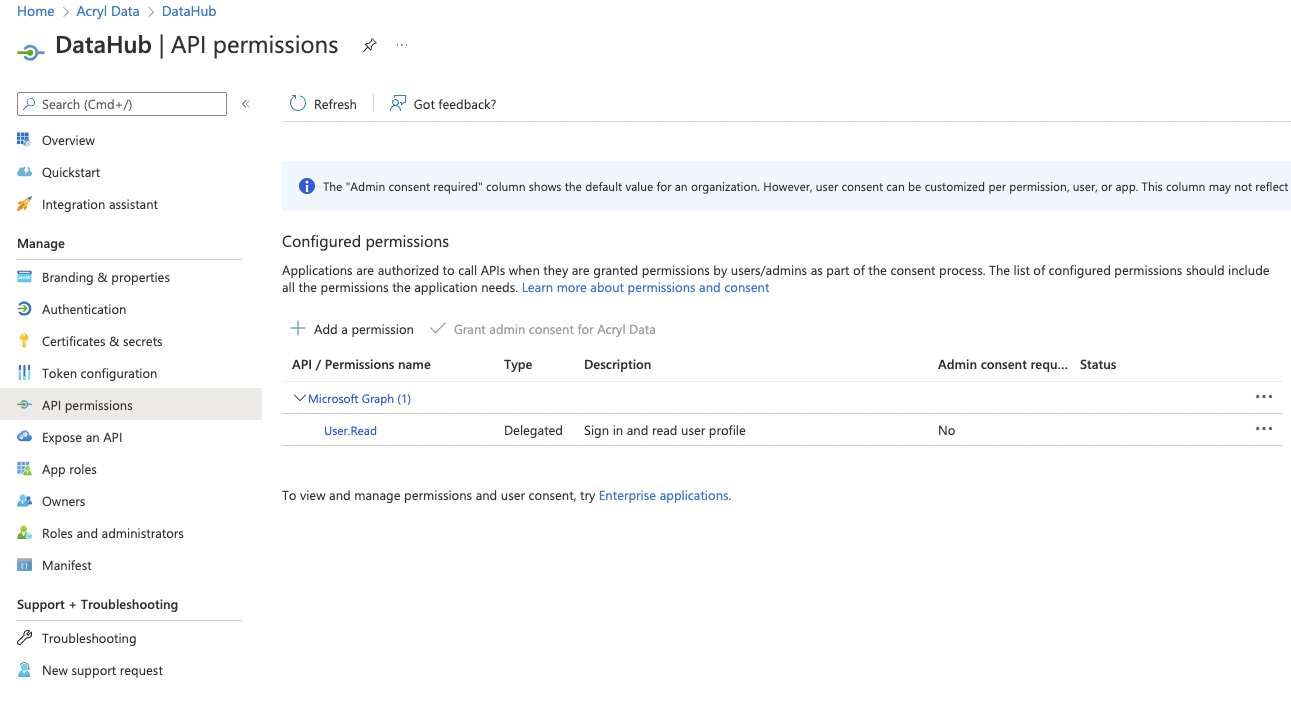

### 4. Configure API permissions

@@ -66,7 +78,11 @@ Click on **Add a permission**, then from the **Microsoft APIs** tab select **Mic

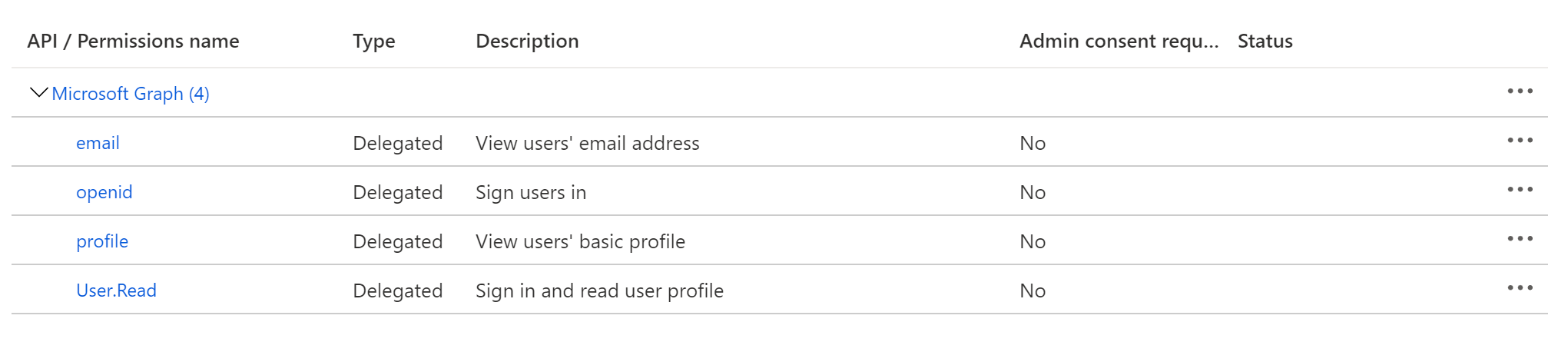

At this point, you should be looking at a screen like the following:

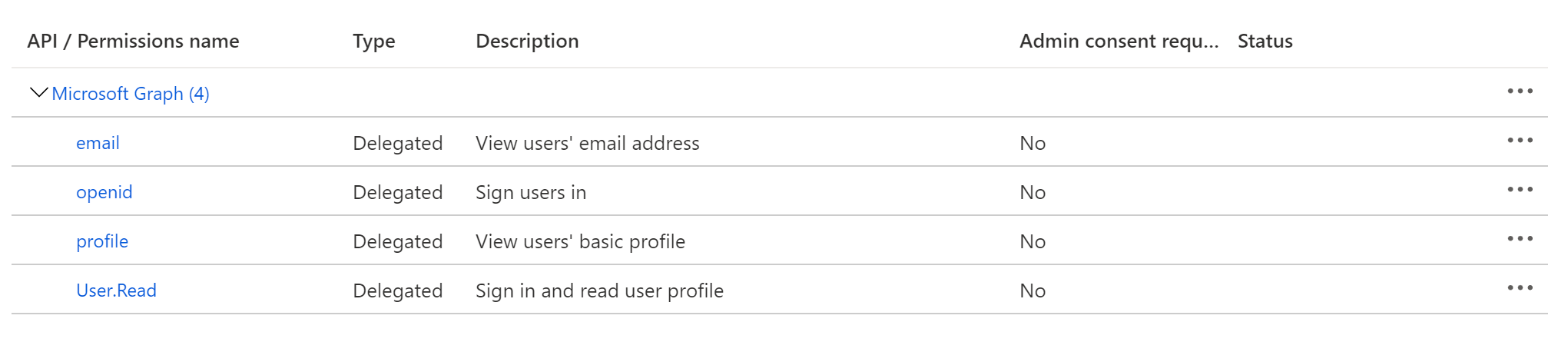

-

+

+

+  +

+

+

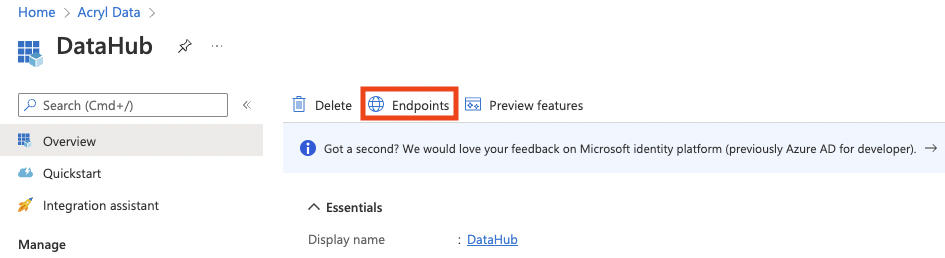

### 5. Obtain Application (Client) ID

diff --git a/docs/authentication/guides/sso/configure-oidc-react-google.md b/docs/authentication/guides/sso/configure-oidc-react-google.md

index 474538097aae2..af62185e6e787 100644

--- a/docs/authentication/guides/sso/configure-oidc-react-google.md

+++ b/docs/authentication/guides/sso/configure-oidc-react-google.md

@@ -31,7 +31,11 @@ Note that in order to complete this step you should be logged into a Google acco

c. Fill out the details in the App Information & Domain sections. Make sure the 'Application Home Page' provided matches where DataHub is deployed

at your organization.

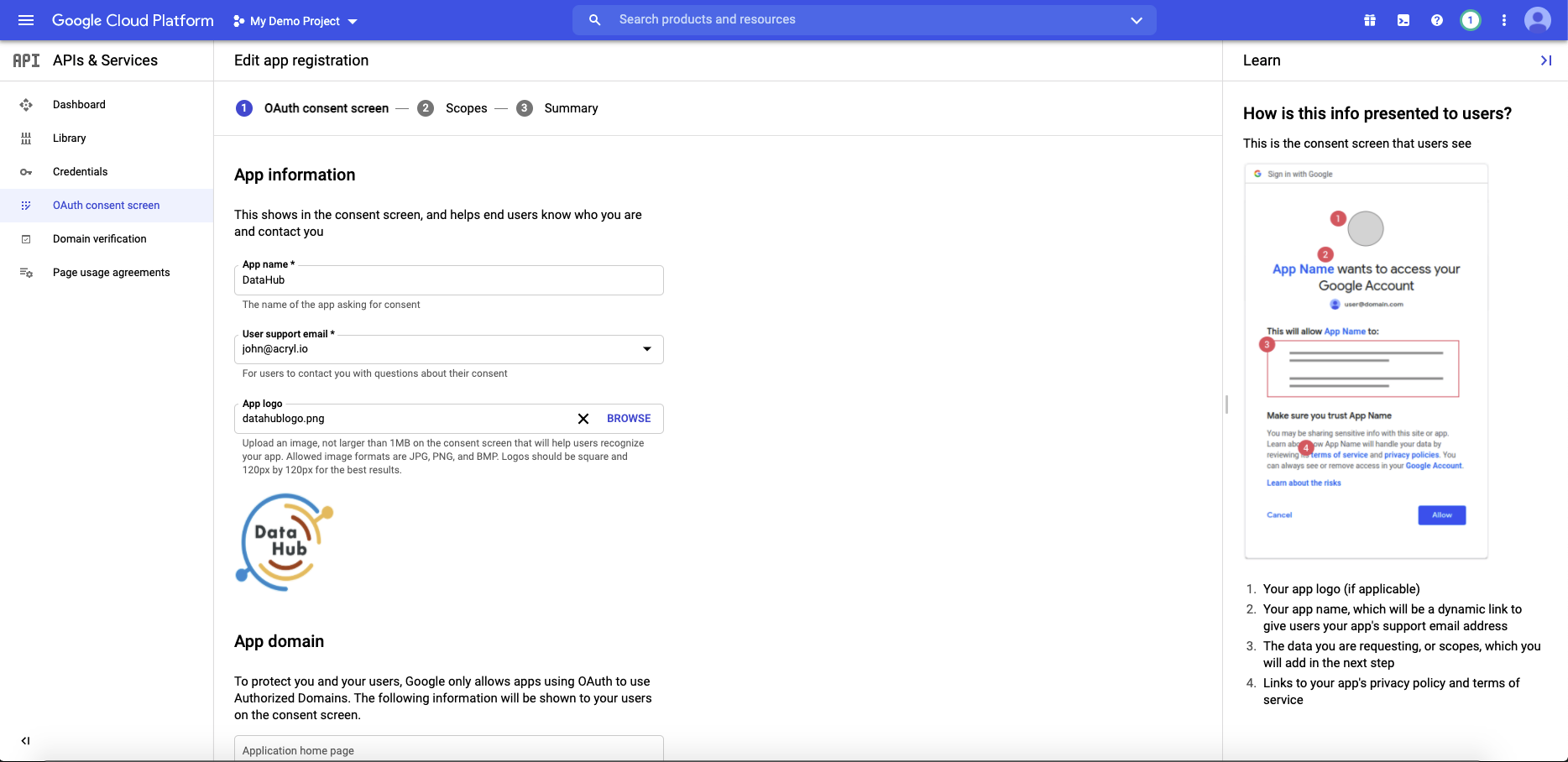

-

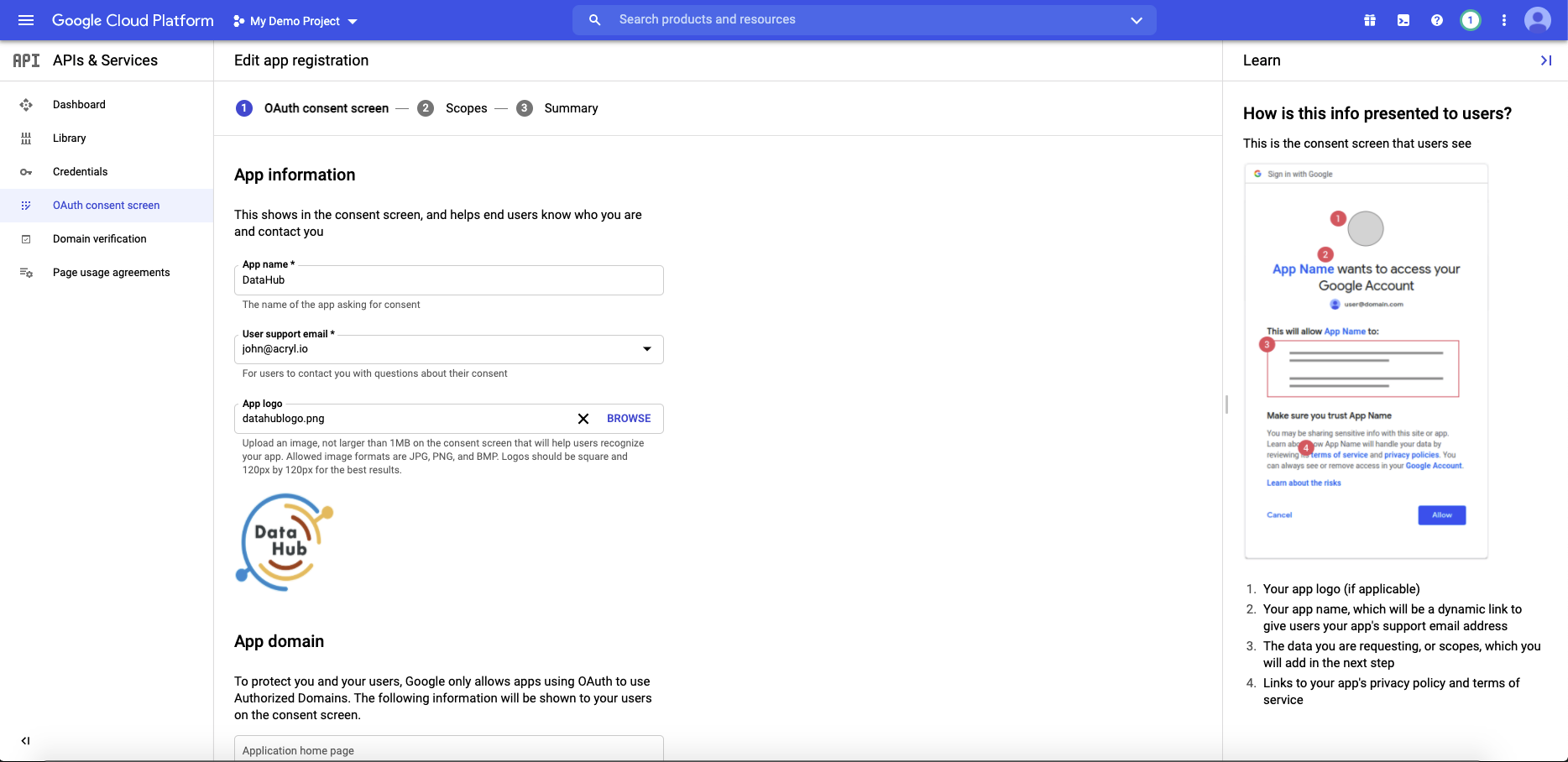

+

+

+  +

+

+

Once you've completed this, **Save & Continue**.

@@ -70,7 +74,11 @@ f. You will now receive a pair of values, a client id and a client secret. Bookm

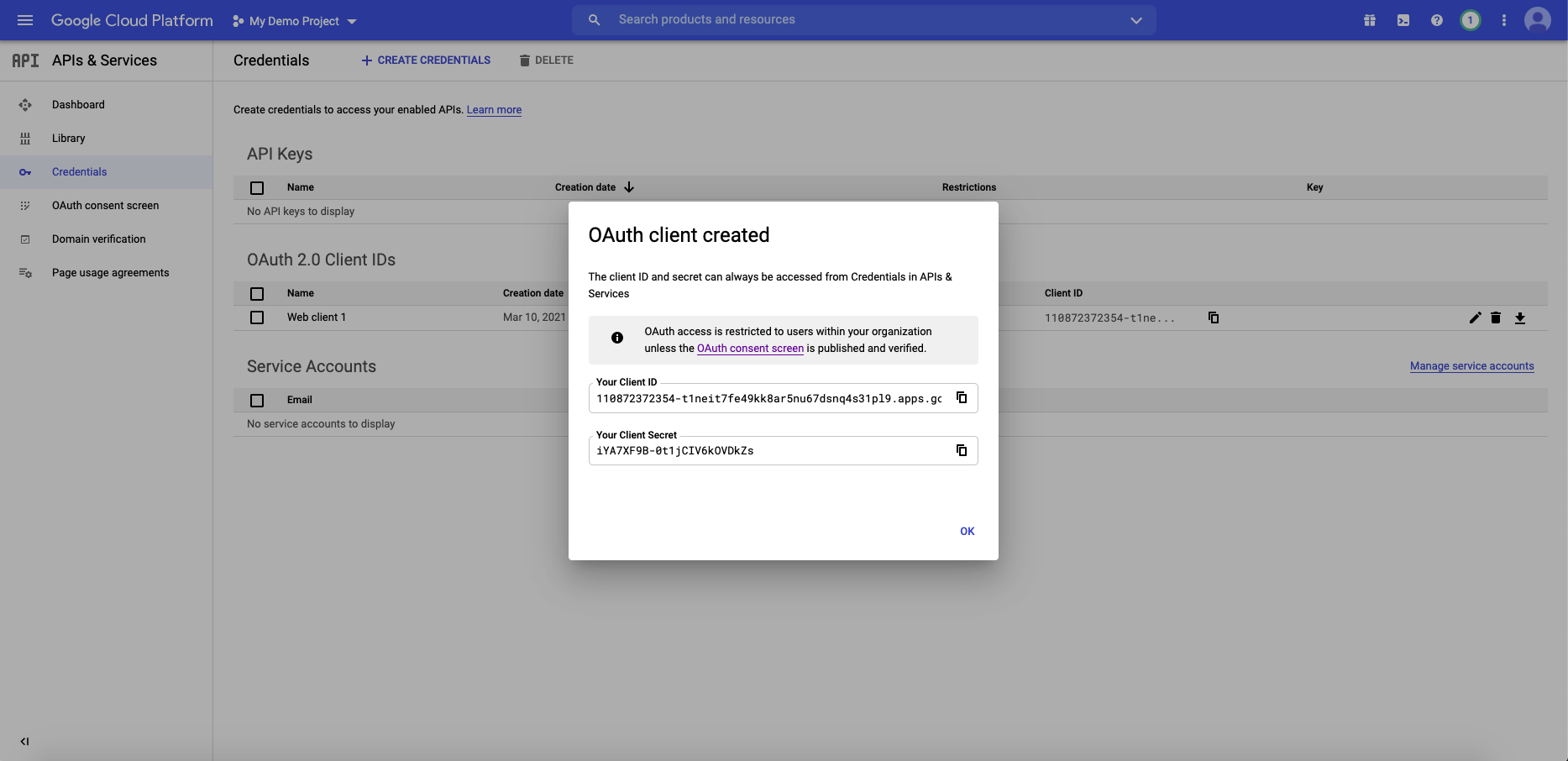

At this point, you should be looking at a screen like the following:

-

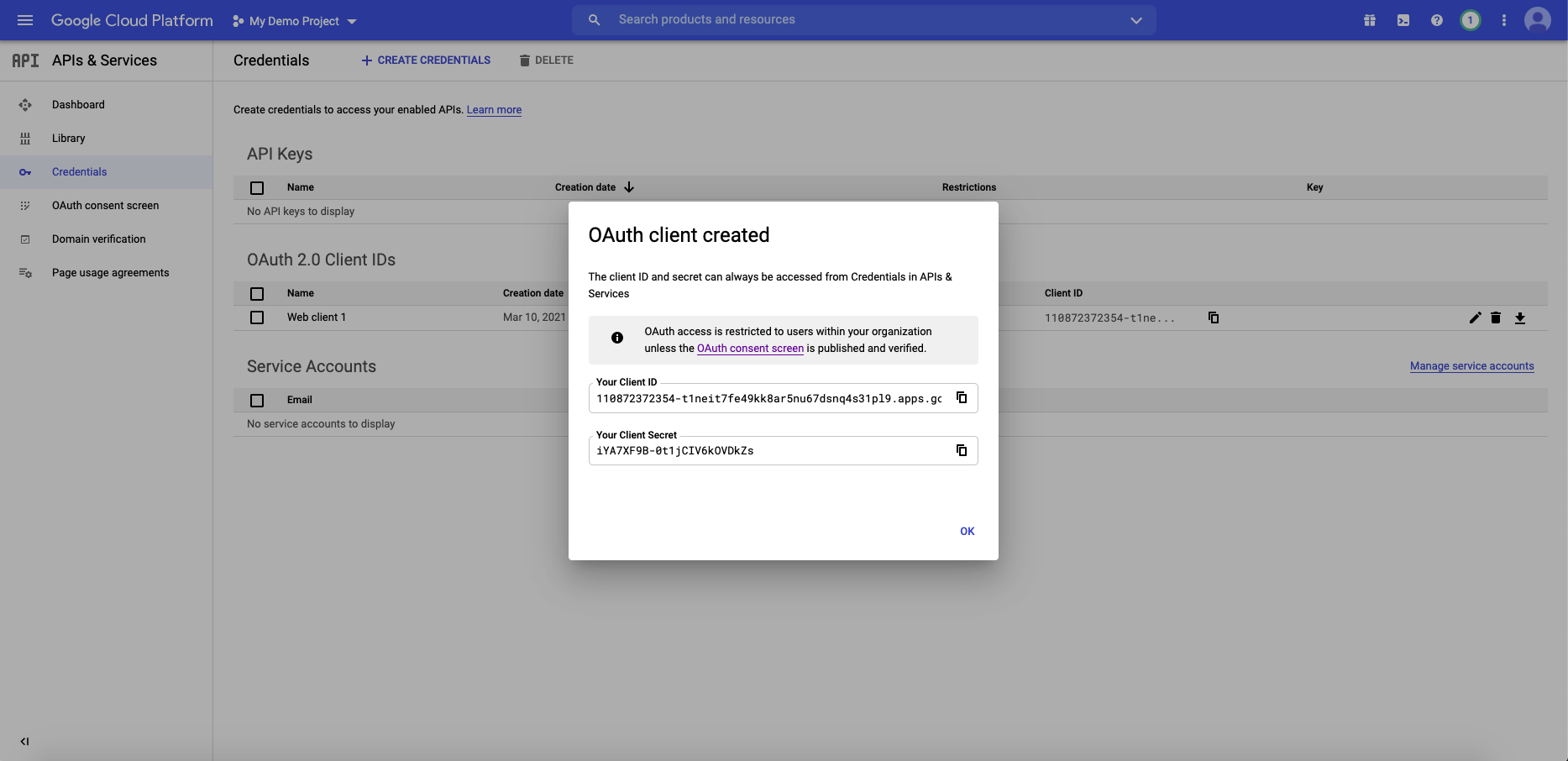

+

+

+  +

+

+

Success!

diff --git a/docs/authentication/guides/sso/configure-oidc-react-okta.md b/docs/authentication/guides/sso/configure-oidc-react-okta.md

index cfede999f1e70..320b887a28f16 100644

--- a/docs/authentication/guides/sso/configure-oidc-react-okta.md

+++ b/docs/authentication/guides/sso/configure-oidc-react-okta.md

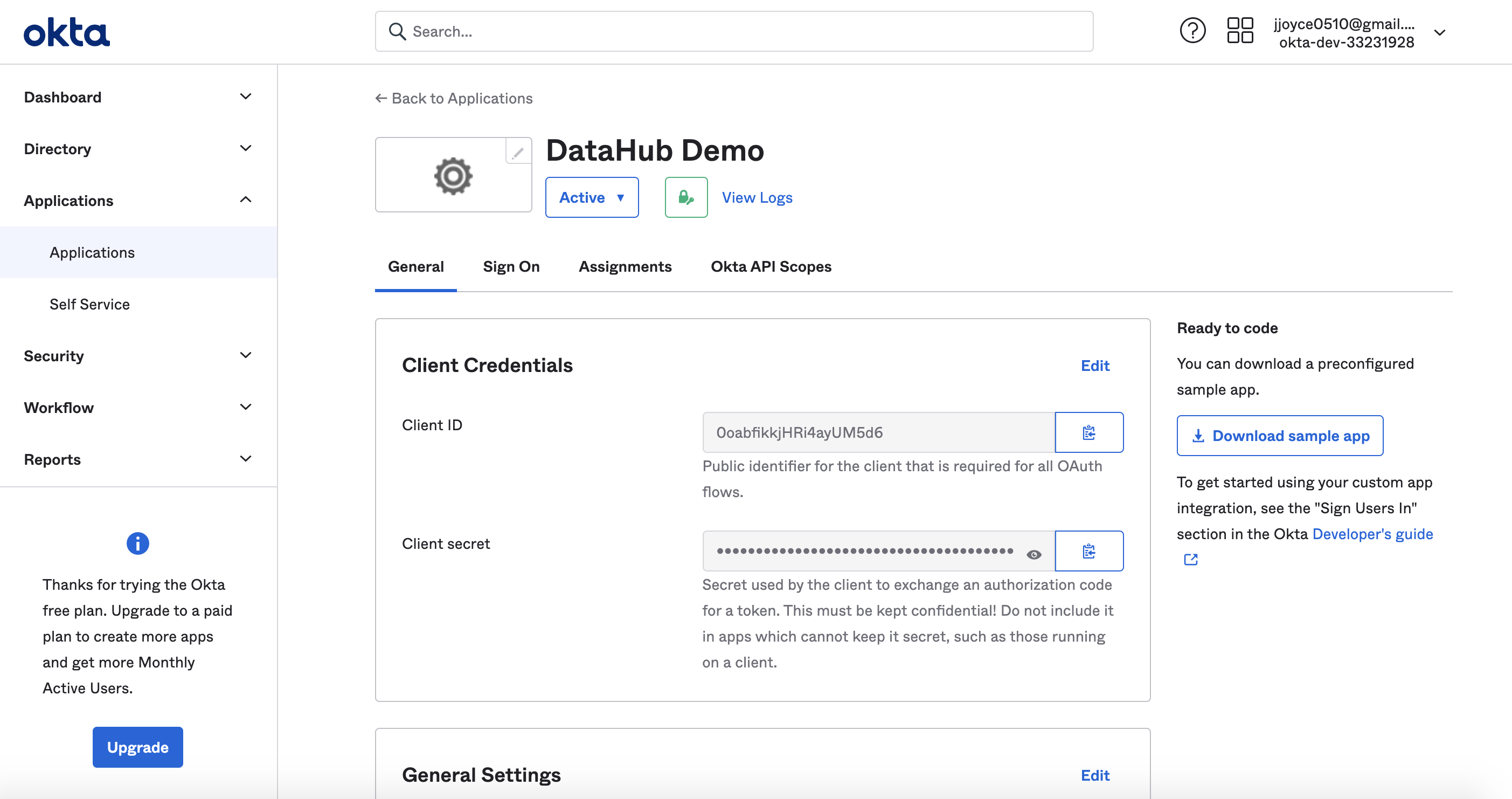

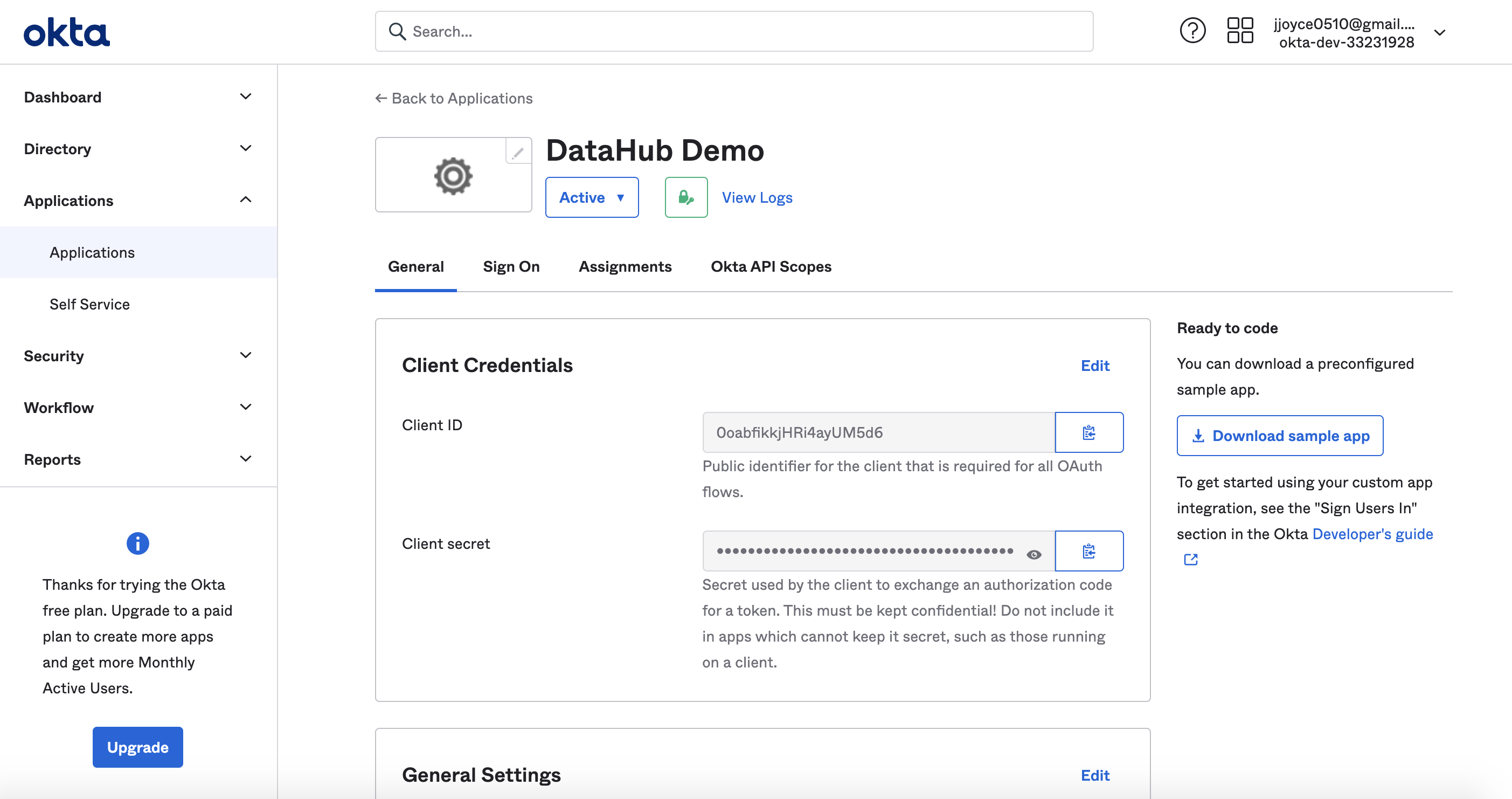

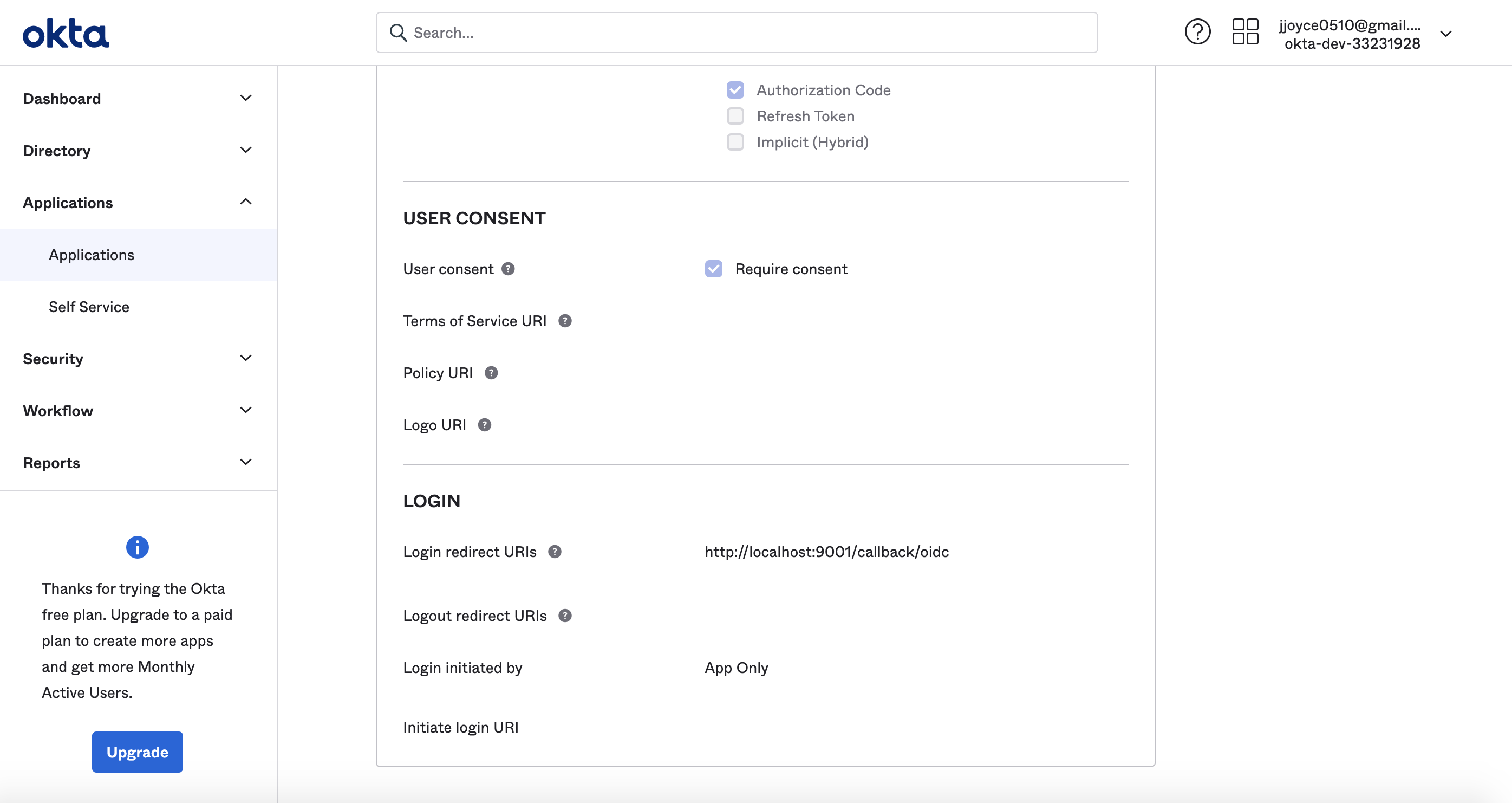

@@ -69,8 +69,16 @@ for example, `https://dev-33231928.okta.com/.well-known/openid-configuration`.

At this point, you should be looking at a screen like the following:

-

-

+

+

+  +

+

+

+

+

+  +

+

+

Success!

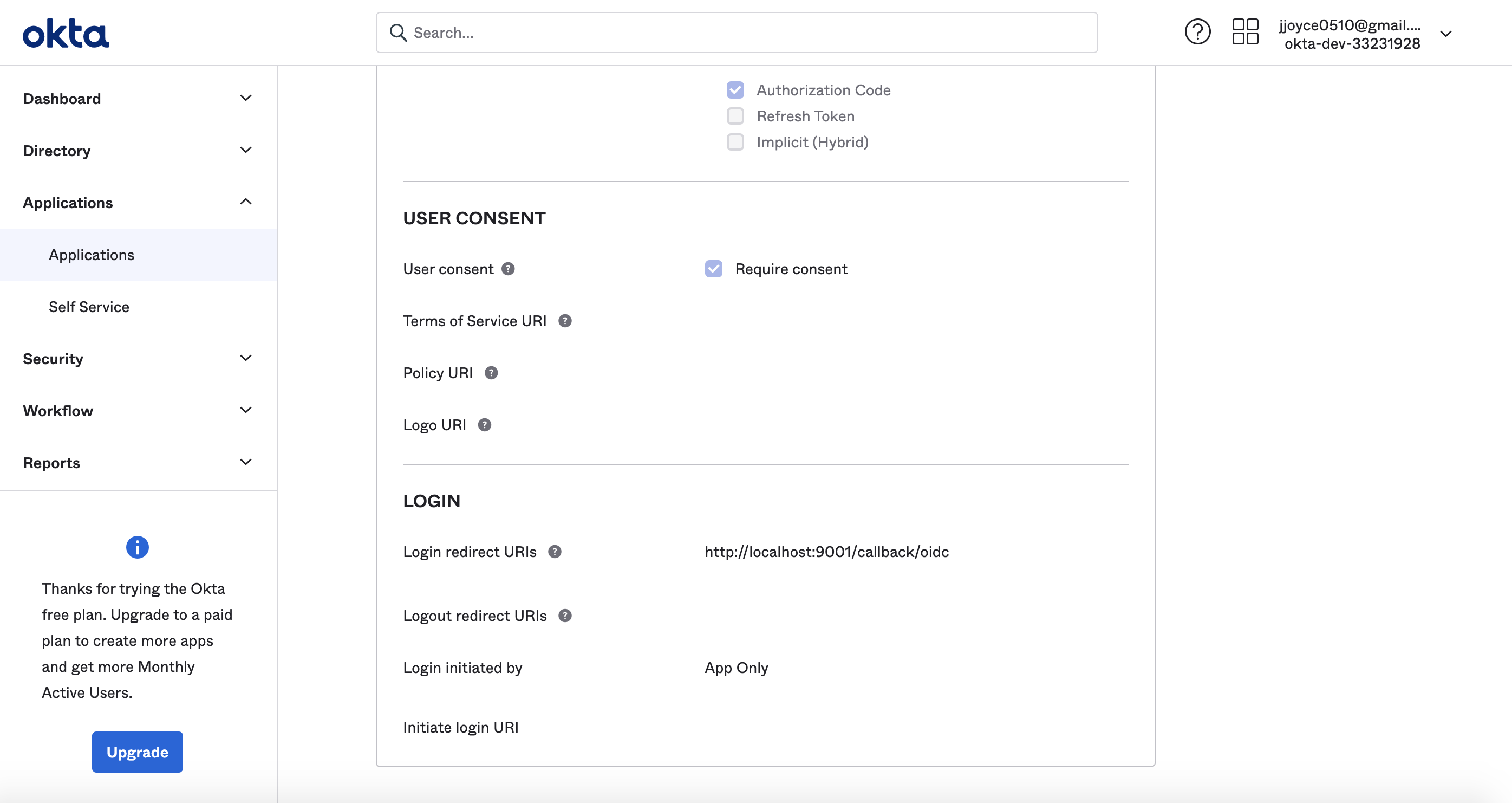

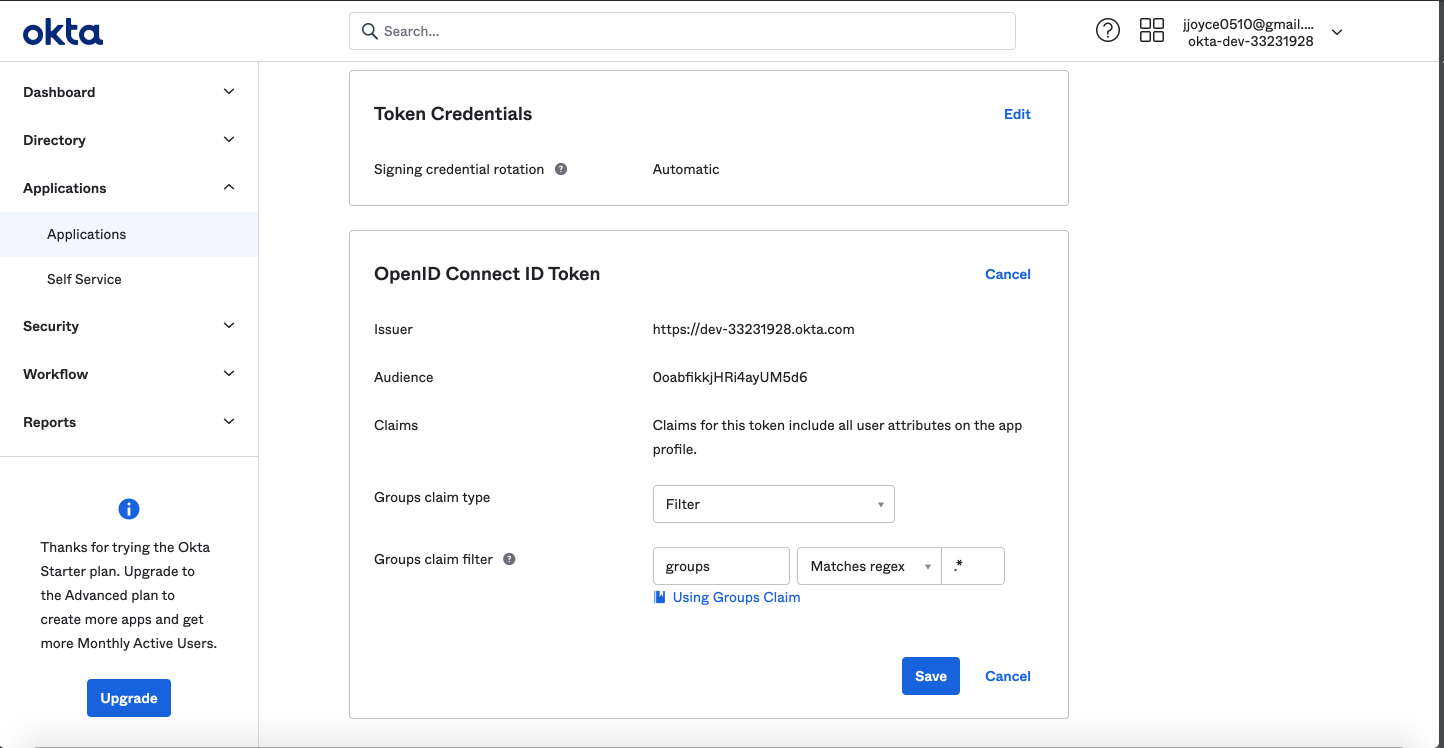

@@ -96,7 +104,11 @@ Replacing the placeholders above with the client id & client secret received fro

>

> By default, we assume that the groups will appear in a claim named "groups". This can be customized using the `AUTH_OIDC_GROUPS_CLAIM` container configuration.

>

->

+>

+

+  +

+

+

### 5. Restart `datahub-frontend-react` docker container

diff --git a/docs/authentication/guides/sso/img/azure-setup-api-permissions.png b/docs/authentication/guides/sso/img/azure-setup-api-permissions.png

deleted file mode 100755

index 4964b7d48ffec..0000000000000

Binary files a/docs/authentication/guides/sso/img/azure-setup-api-permissions.png and /dev/null differ

diff --git a/docs/authentication/guides/sso/img/azure-setup-app-registration.png b/docs/authentication/guides/sso/img/azure-setup-app-registration.png

deleted file mode 100755

index ffb23a7e3ddec..0000000000000

Binary files a/docs/authentication/guides/sso/img/azure-setup-app-registration.png and /dev/null differ

diff --git a/docs/authentication/guides/sso/img/azure-setup-authentication.png b/docs/authentication/guides/sso/img/azure-setup-authentication.png

deleted file mode 100755

index 2d27ec88fb40b..0000000000000

Binary files a/docs/authentication/guides/sso/img/azure-setup-authentication.png and /dev/null differ

diff --git a/docs/authentication/guides/sso/img/azure-setup-certificates-secrets.png b/docs/authentication/guides/sso/img/azure-setup-certificates-secrets.png

deleted file mode 100755

index db6585d84d8ee..0000000000000

Binary files a/docs/authentication/guides/sso/img/azure-setup-certificates-secrets.png and /dev/null differ

diff --git a/docs/authentication/guides/sso/img/google-setup-1.png b/docs/authentication/guides/sso/img/google-setup-1.png

deleted file mode 100644

index 88c674146f1e4..0000000000000

Binary files a/docs/authentication/guides/sso/img/google-setup-1.png and /dev/null differ

diff --git a/docs/authentication/guides/sso/img/google-setup-2.png b/docs/authentication/guides/sso/img/google-setup-2.png

deleted file mode 100644

index 850512b891d5f..0000000000000

Binary files a/docs/authentication/guides/sso/img/google-setup-2.png and /dev/null differ

diff --git a/docs/authentication/guides/sso/img/okta-setup-1.png b/docs/authentication/guides/sso/img/okta-setup-1.png

deleted file mode 100644

index 3949f18657c5e..0000000000000

Binary files a/docs/authentication/guides/sso/img/okta-setup-1.png and /dev/null differ

diff --git a/docs/authentication/guides/sso/img/okta-setup-2.png b/docs/authentication/guides/sso/img/okta-setup-2.png

deleted file mode 100644

index fa6ea4d991894..0000000000000

Binary files a/docs/authentication/guides/sso/img/okta-setup-2.png and /dev/null differ

diff --git a/docs/authentication/guides/sso/img/okta-setup-groups-claim.png b/docs/authentication/guides/sso/img/okta-setup-groups-claim.png

deleted file mode 100644

index ed35426685e46..0000000000000

Binary files a/docs/authentication/guides/sso/img/okta-setup-groups-claim.png and /dev/null differ

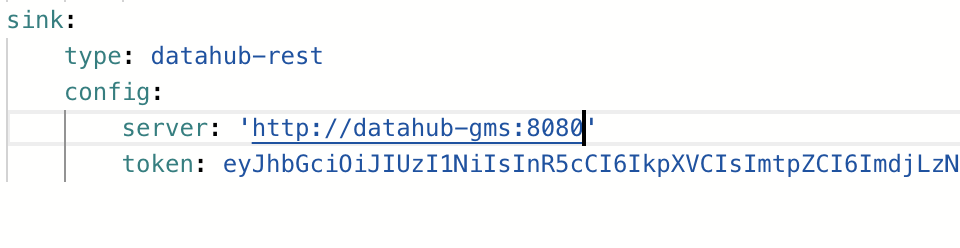

diff --git a/docs/authentication/personal-access-tokens.md b/docs/authentication/personal-access-tokens.md

index 0188aab49444e..dc57a989a4e0c 100644

--- a/docs/authentication/personal-access-tokens.md

+++ b/docs/authentication/personal-access-tokens.md

@@ -71,7 +71,11 @@ curl 'http://localhost:8080/entities/urn:li:corpuser:datahub' -H 'Authorization:

Since authorization happens at the GMS level, ingestion is also protected behind access tokens. To use them, simply add a `token` to the sink config property as seen below:

-

+

+

+  +

+

+

:::note

diff --git a/docs/authorization/access-policies-guide.md b/docs/authorization/access-policies-guide.md

index 5820e513a83e3..1eabb64d2878f 100644

--- a/docs/authorization/access-policies-guide.md

+++ b/docs/authorization/access-policies-guide.md

@@ -110,10 +110,13 @@ In the second step, we can simply select the Privileges that this Platform Polic

| Manage Tags | Allow the actor to create and remove any Tags |

| Manage Public Views | Allow the actor to create, edit, and remove any public (shared) Views. |

| Manage Ownership Types | Allow the actor to create, edit, and remove any Ownership Types. |

+| Manage Platform Settings | (Acryl DataHub only) Allow the actor to manage global integrations and notification settings |

+| Manage Monitors | (Acryl DataHub only) Allow the actor to create, remove, start, or stop any entity assertion monitors |

| Restore Indices API[^1] | Allow the actor to restore indices for a set of entities via API |

| Enable/Disable Writeability API[^1] | Allow the actor to enable or disable GMS writeability for use in data migrations |

| Apply Retention API[^1] | Allow the actor to apply aspect retention via API |

+

[^1]: Only active if REST_API_AUTHORIZATION_ENABLED environment flag is enabled

#### Step 3: Choose Policy Actors

@@ -204,8 +207,15 @@ The common Metadata Privileges, which span across entity types, include:

| Edit Status | Allow actor to edit the status of an entity (soft deleted or not). |

| Edit Domain | Allow actor to edit the Domain of an entity. |

| Edit Deprecation | Allow actor to edit the Deprecation status of an entity. |

-| Edit Assertions | Allow actor to add and remove assertions from an entity. |

-| Edit All | Allow actor to edit any information about an entity. Super user privileges. Controls the ability to ingest using API when REST API Authorization is enabled. |

+| Edit Lineage | Allow actor to edit custom lineage edges for the entity. |

+| Edit Data Product | Allow actor to edit the data product that an entity is part of |

+| Propose Tags | (Acryl DataHub only) Allow actor to propose new Tags for the entity. |

+| Propose Glossary Terms | (Acryl DataHub only) Allow actor to propose new Glossary Terms for the entity. |

+| Propose Documentation | (Acryl DataHub only) Allow actor to propose new Documentation for the entity. |

+| Manage Tag Proposals | (Acryl DataHub only) Allow actor to accept or reject proposed Tags for the entity. |

+| Manage Glossary Terms Proposals | (Acryl DataHub only) Allow actor to accept or reject proposed Glossary Terms for the entity. |

+| Manage Documentation Proposals | (Acryl DataHub only) Allow actor to accept or reject proposed Documentation for the entity |

+| Edit Entity | Allow actor to edit any information about an entity. Super user privileges. Controls the ability to ingest using API when REST API Authorization is enabled. |

| Get Timeline API[^1] | Allow actor to get the timeline of an entity via API. |

| Get Entity API[^1] | Allow actor to get an entity via API. |

| Get Timeseries Aspect API[^1] | Allow actor to get a timeseries aspect via API. |

@@ -225,10 +235,19 @@ The common Metadata Privileges, which span across entity types, include:

| Dataset | Edit Dataset Queries | Allow actor to edit the Highlighted Queries on the Queries tab of the dataset. |

| Dataset | View Dataset Usage | Allow actor to access usage metadata about a dataset both in the UI and in the GraphQL API. This includes example queries, number of queries, etc. Also applies to REST APIs when REST API Authorization is enabled. |

| Dataset | View Dataset Profile | Allow actor to access a dataset's profile both in the UI and in the GraphQL API. This includes snapshot statistics like #rows, #columns, null percentage per field, etc. |

+| Dataset | Edit Assertions | Allow actor to change the assertions associated with a dataset. |

+| Dataset | Edit Incidents | (Acryl DataHub only) Allow actor to change the incidents associated with a dataset. |

+| Dataset | Edit Monitors | (Acryl DataHub only) Allow actor to change the assertion monitors associated with a dataset. |

| Tag | Edit Tag Color | Allow actor to change the color of a Tag. |

| Group | Edit Group Members | Allow actor to add and remove members to a group. |

+| Group | Edit Contact Information | Allow actor to change email, slack handle associated with the group. |

+| Group | Manage Group Subscriptions | (Acryl DataHub only) Allow actor to subscribe the group to entities. |

+| Group | Manage Group Notifications | (Acryl DataHub only) Allow actor to change notification settings for the group. |

| User | Edit User Profile | Allow actor to change the user's profile including display name, bio, title, profile image, etc. |

| User + Group | Edit Contact Information | Allow actor to change the contact information such as email & chat handles. |

+| Term Group | Manage Direct Glossary Children | Allow actor to change the direct child Term Groups or Terms of the group. |

+| Term Group | Manage All Glossary Children | Allow actor to change any direct or indirect child Term Groups or Terms of the group. |

+

> **Still have questions about Privileges?** Let us know in [Slack](https://slack.datahubproject.io)!

diff --git a/docs/components.md b/docs/components.md

index ef76729bb37fb..b59dabcf999cc 100644

--- a/docs/components.md

+++ b/docs/components.md

@@ -6,7 +6,11 @@ title: "Components"

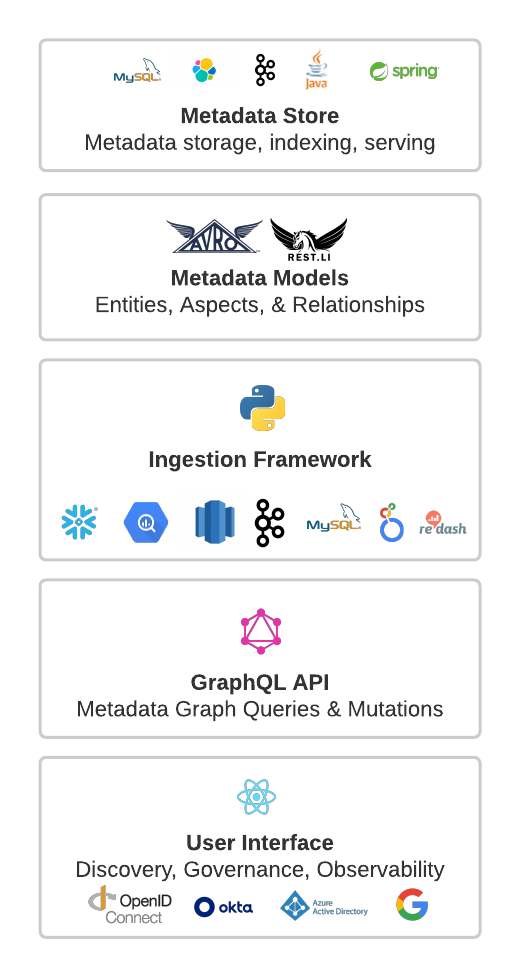

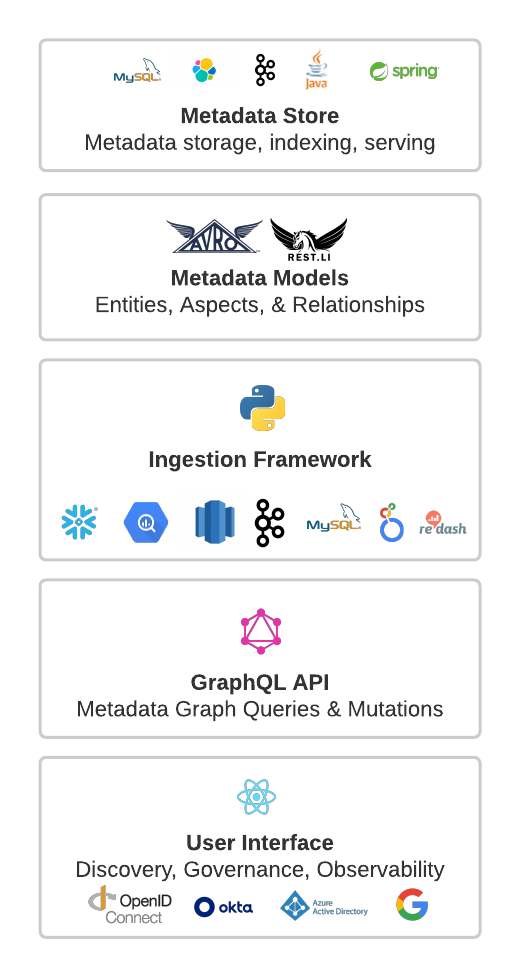

The DataHub platform consists of the components shown in the following diagram.

-

+

+

+  +

+

+

## Metadata Store

diff --git a/docs/demo/DataHub-UIOverview.pdf b/docs/demo/DataHub-UIOverview.pdf

deleted file mode 100644

index cd6106e84ac23..0000000000000

Binary files a/docs/demo/DataHub-UIOverview.pdf and /dev/null differ

diff --git a/docs/demo/DataHub_-_Powering_LinkedIn_Metadata.pdf b/docs/demo/DataHub_-_Powering_LinkedIn_Metadata.pdf

deleted file mode 100644

index 71498045f9b5b..0000000000000

Binary files a/docs/demo/DataHub_-_Powering_LinkedIn_Metadata.pdf and /dev/null differ

diff --git a/docs/demo/Data_Discoverability_at_SpotHero.pdf b/docs/demo/Data_Discoverability_at_SpotHero.pdf

deleted file mode 100644

index 83e37d8606428..0000000000000

Binary files a/docs/demo/Data_Discoverability_at_SpotHero.pdf and /dev/null differ

diff --git a/docs/demo/Datahub_-_Strongly_Consistent_Secondary_Indexing.pdf b/docs/demo/Datahub_-_Strongly_Consistent_Secondary_Indexing.pdf

deleted file mode 100644

index 2d6a33a464650..0000000000000

Binary files a/docs/demo/Datahub_-_Strongly_Consistent_Secondary_Indexing.pdf and /dev/null differ

diff --git a/docs/demo/Datahub_at_Grofers.pdf b/docs/demo/Datahub_at_Grofers.pdf

deleted file mode 100644

index c29cece9e250a..0000000000000

Binary files a/docs/demo/Datahub_at_Grofers.pdf and /dev/null differ

diff --git a/docs/demo/Designing_the_next_generation_of_metadata_events_for_scale.pdf b/docs/demo/Designing_the_next_generation_of_metadata_events_for_scale.pdf

deleted file mode 100644

index 0d067eef28d03..0000000000000

Binary files a/docs/demo/Designing_the_next_generation_of_metadata_events_for_scale.pdf and /dev/null differ

diff --git a/docs/demo/Metadata_Use-Cases_at_LinkedIn_-_Lightning_Talk.pdf b/docs/demo/Metadata_Use-Cases_at_LinkedIn_-_Lightning_Talk.pdf

deleted file mode 100644

index 382754f863c8a..0000000000000

Binary files a/docs/demo/Metadata_Use-Cases_at_LinkedIn_-_Lightning_Talk.pdf and /dev/null differ

diff --git a/docs/demo/Saxo Bank Data Workbench.pdf b/docs/demo/Saxo Bank Data Workbench.pdf

deleted file mode 100644

index c43480d32b8f2..0000000000000

Binary files a/docs/demo/Saxo Bank Data Workbench.pdf and /dev/null differ

diff --git a/docs/demo/Taming the Data Beast Using DataHub.pdf b/docs/demo/Taming the Data Beast Using DataHub.pdf

deleted file mode 100644

index d0062465d9220..0000000000000

Binary files a/docs/demo/Taming the Data Beast Using DataHub.pdf and /dev/null differ

diff --git a/docs/demo/Town_Hall_Presentation_-_12-2020_-_UI_Development_Part_2.pdf b/docs/demo/Town_Hall_Presentation_-_12-2020_-_UI_Development_Part_2.pdf

deleted file mode 100644

index fb7bd2b693e87..0000000000000

Binary files a/docs/demo/Town_Hall_Presentation_-_12-2020_-_UI_Development_Part_2.pdf and /dev/null differ

diff --git a/docs/demo/ViasatMetadataJourney.pdf b/docs/demo/ViasatMetadataJourney.pdf

deleted file mode 100644

index ccffd18a06d18..0000000000000

Binary files a/docs/demo/ViasatMetadataJourney.pdf and /dev/null differ

diff --git a/docs/deploy/aws.md b/docs/deploy/aws.md

index 7b01ffa02a744..228fcb51d1a28 100644

--- a/docs/deploy/aws.md

+++ b/docs/deploy/aws.md

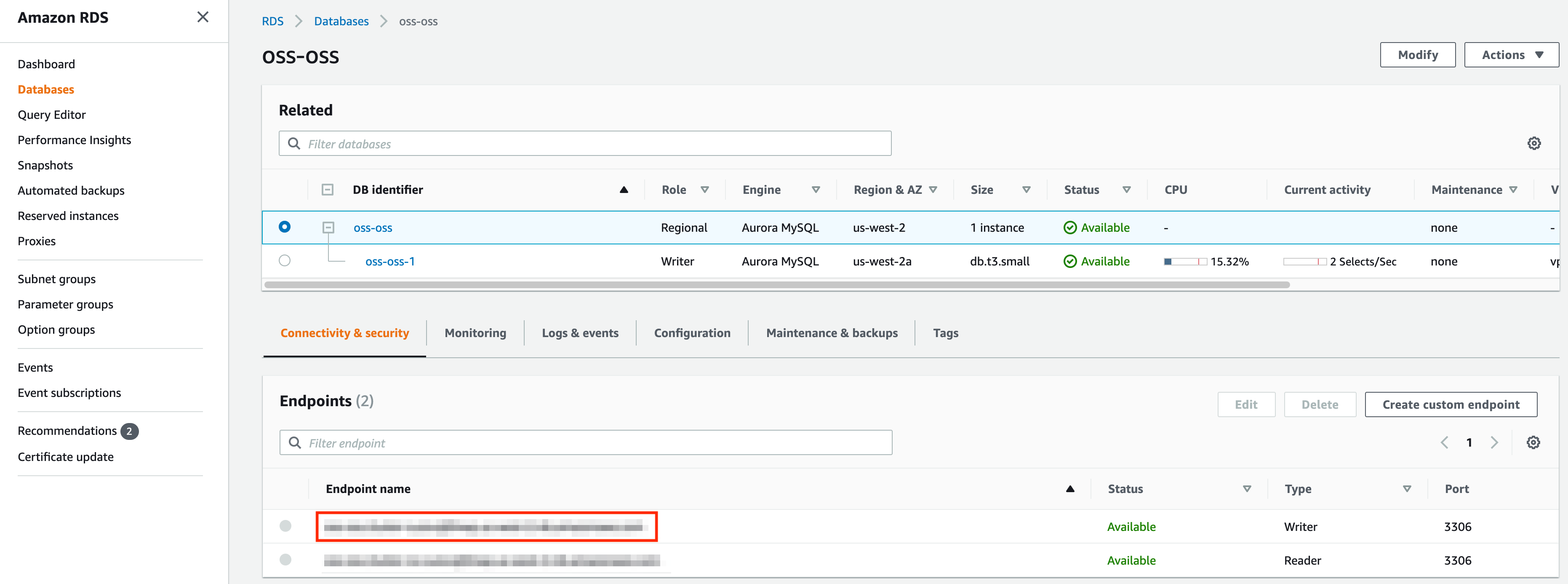

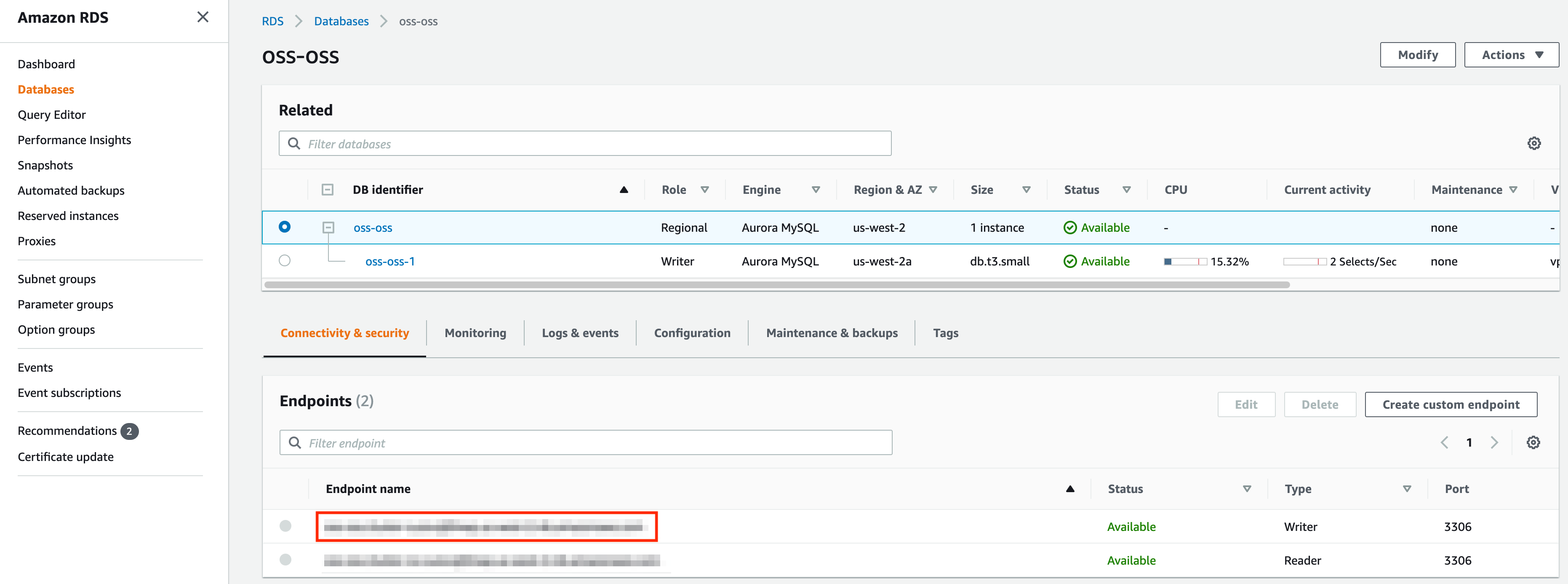

@@ -201,7 +201,11 @@ Provision a MySQL database in AWS RDS that shares the VPC with the kubernetes cl

the VPC of the kubernetes cluster. Once the database is provisioned, you should be able to see the following page. Take

a note of the endpoint marked by the red box.

-

+

+

+  +

+

+

First, add the DB password to kubernetes by running the following.

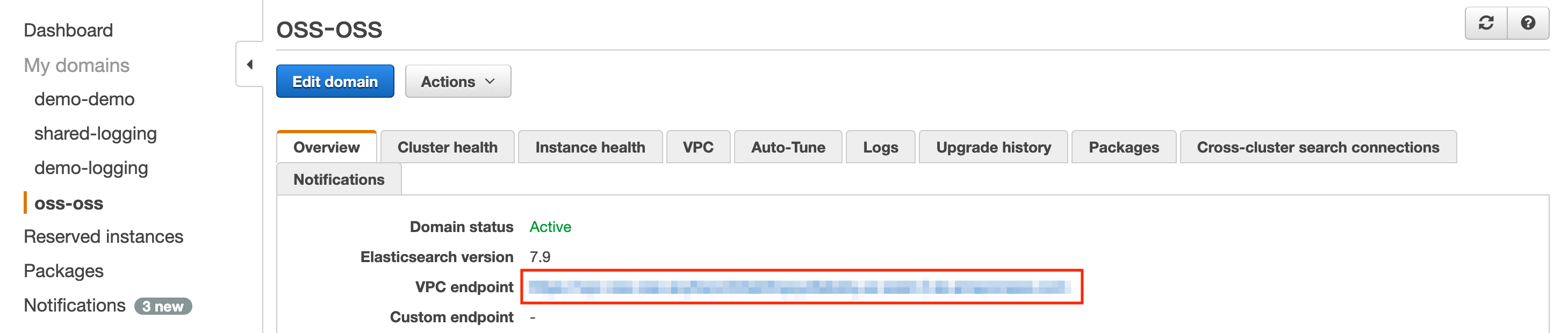

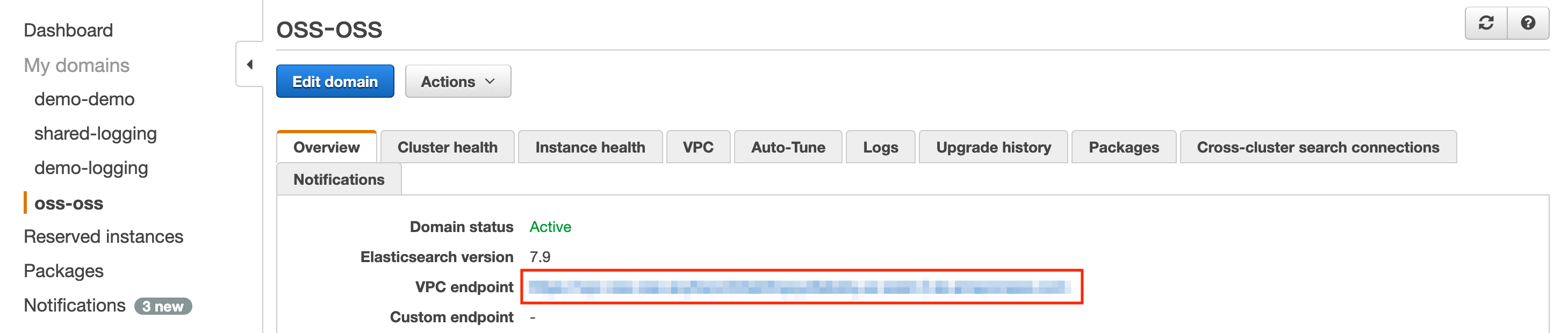

@@ -234,7 +238,11 @@ Provision an elasticsearch domain running elasticsearch version 7.10 or above th

cluster or has VPC peering set up between the VPC of the kubernetes cluster. Once the domain is provisioned, you should

be able to see the following page. Take a note of the endpoint marked by the red box.

-

+

+

+  +

+

+

Update the elasticsearch settings under global in the values.yaml as follows.

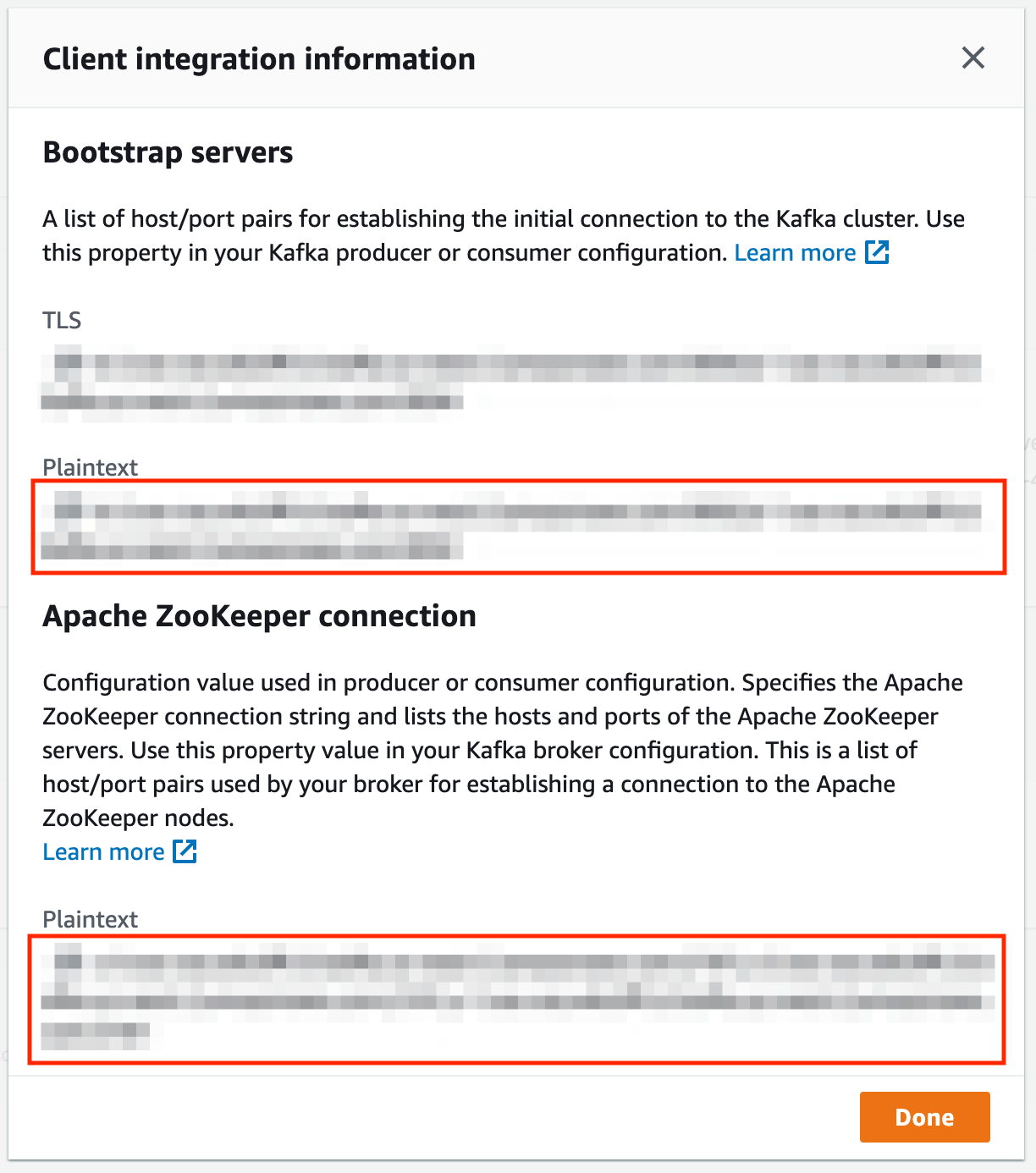

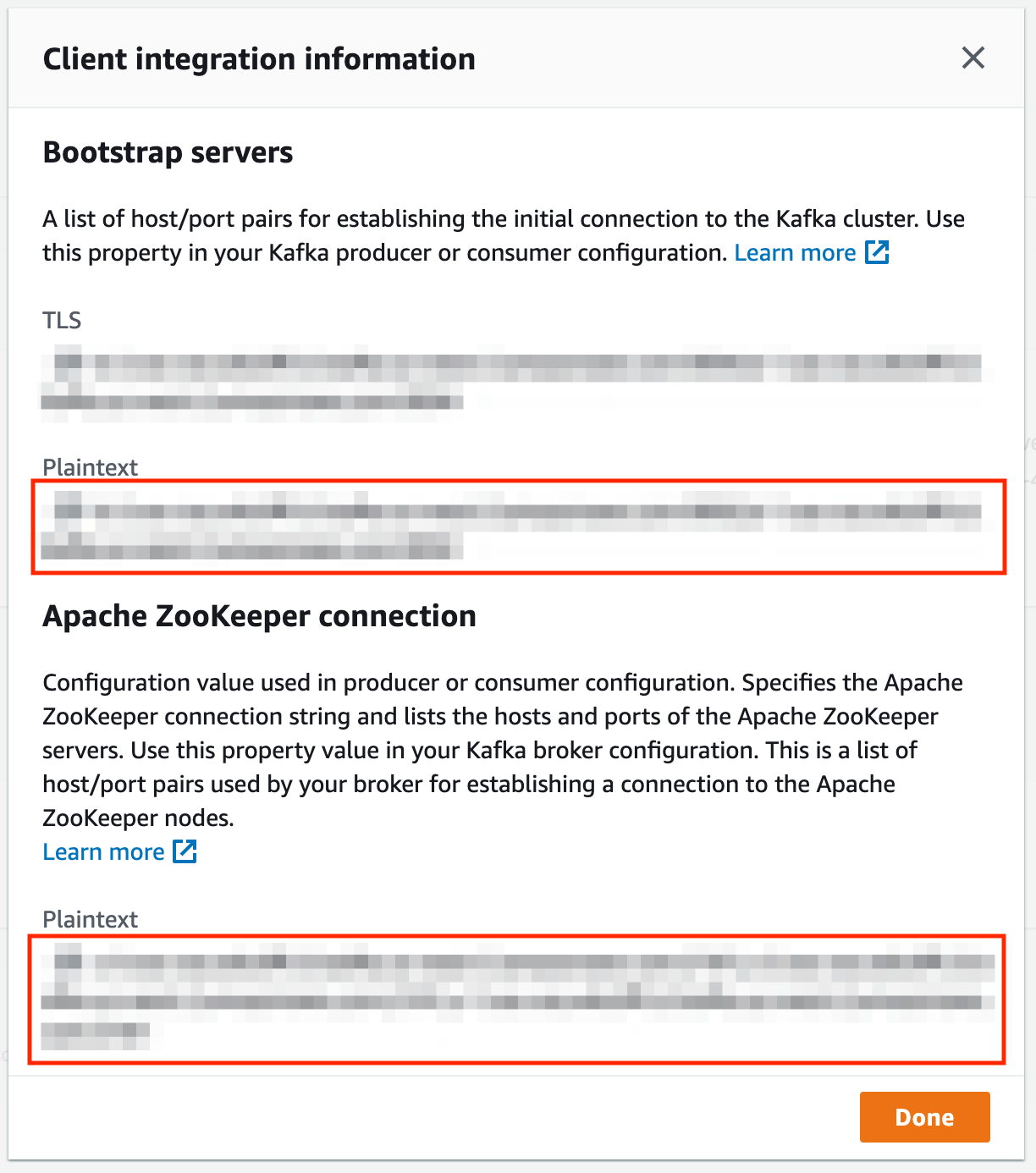

@@ -330,7 +338,11 @@ Provision an MSK cluster that shares the VPC with the kubernetes cluster or has

the kubernetes cluster. Once the domain is provisioned, click on the “View client information” button in the “Cluster

Summary” section. You should see a page like below. Take a note of the endpoints marked by the red boxes.

-

+

+

+  +

+

+

Update the kafka settings under global in the values.yaml as follows.

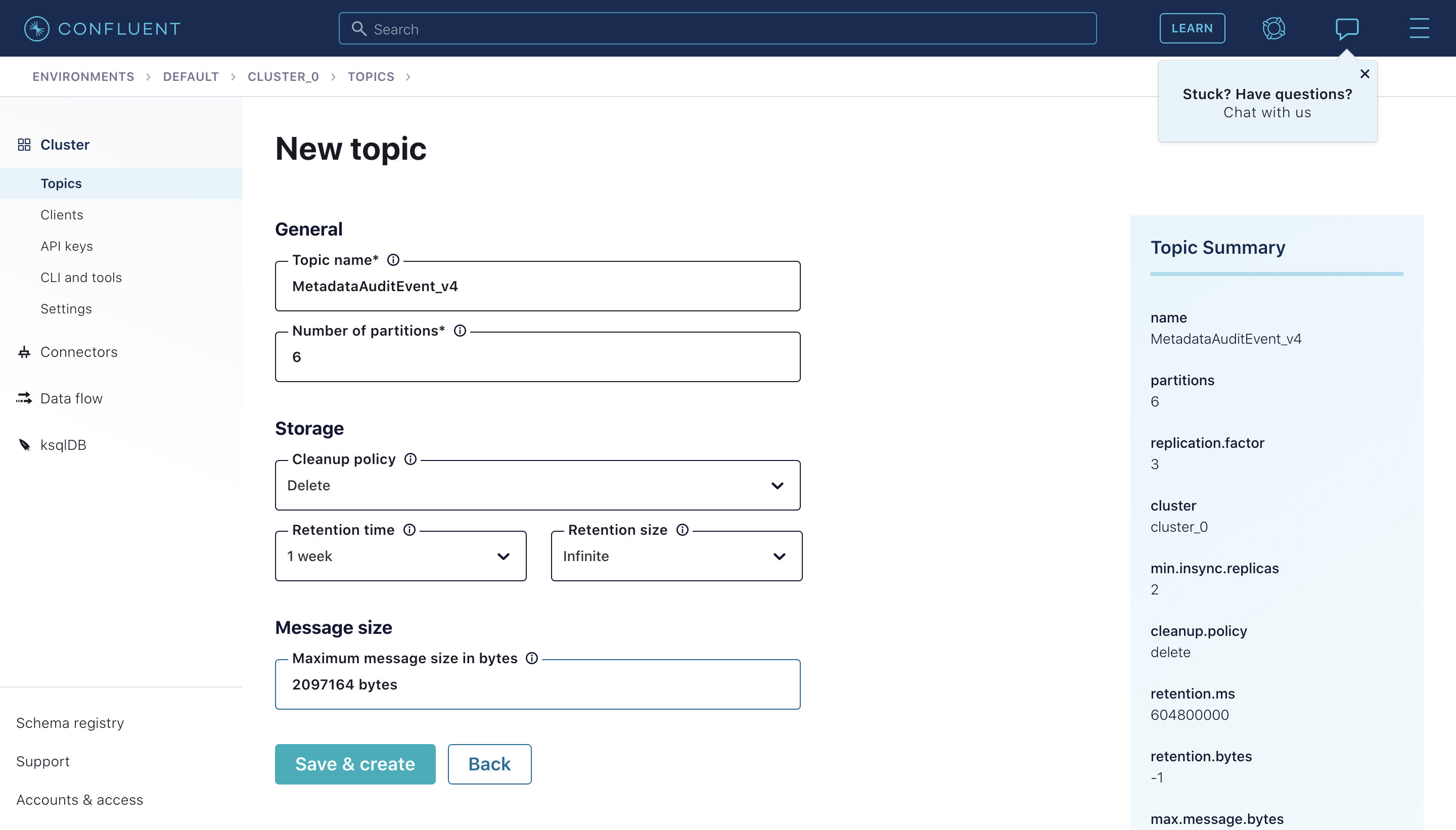

diff --git a/docs/deploy/confluent-cloud.md b/docs/deploy/confluent-cloud.md

index d93ffcceaecee..794b55d4686bf 100644

--- a/docs/deploy/confluent-cloud.md

+++ b/docs/deploy/confluent-cloud.md

@@ -24,7 +24,11 @@ decommissioned.

To create the topics, navigate to your **Cluster** and click "Create Topic". Feel free to tweak the default topic configurations to

match your preferences.

-

+

+

+  +

+

+

## Step 2: Configure DataHub Container to use Confluent Cloud Topics

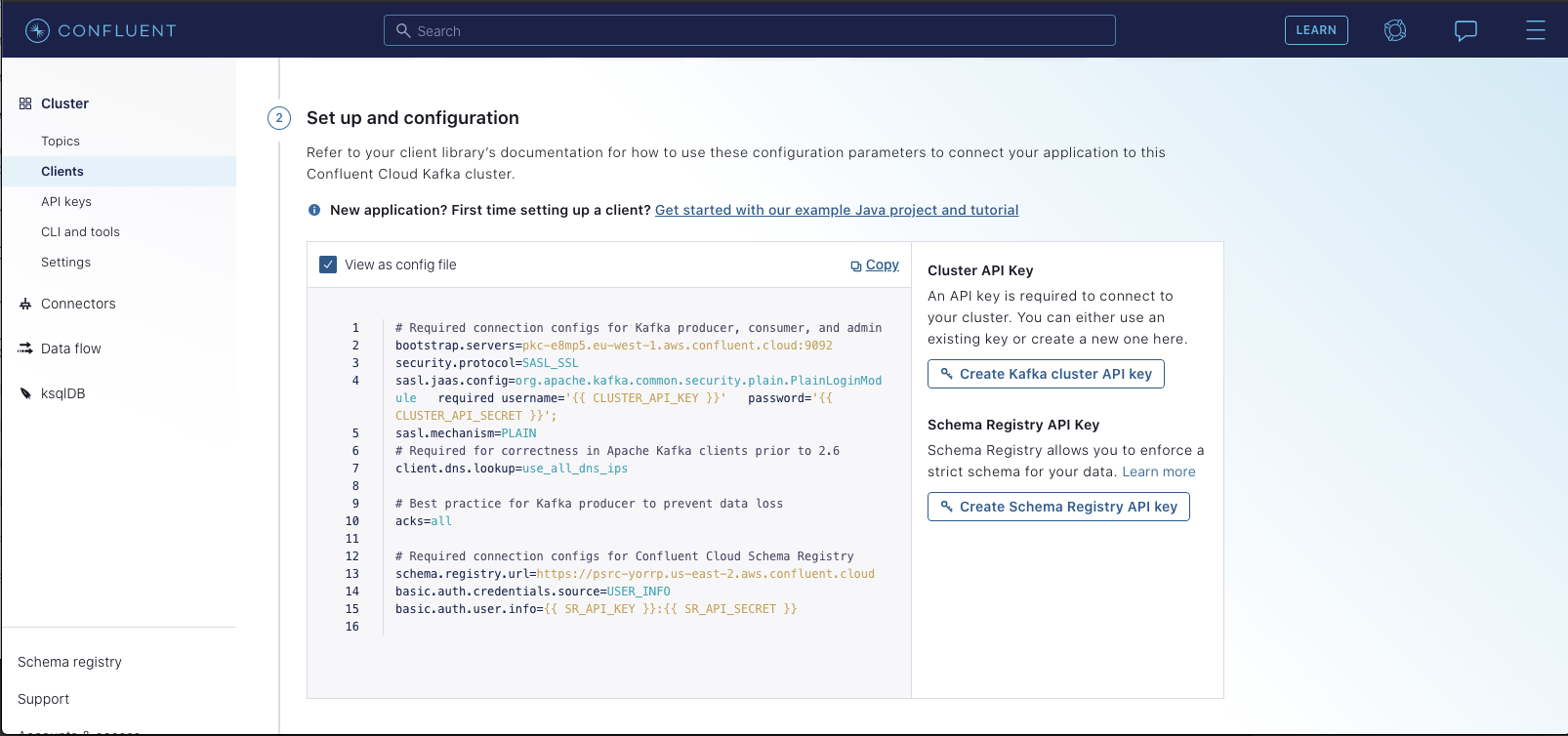

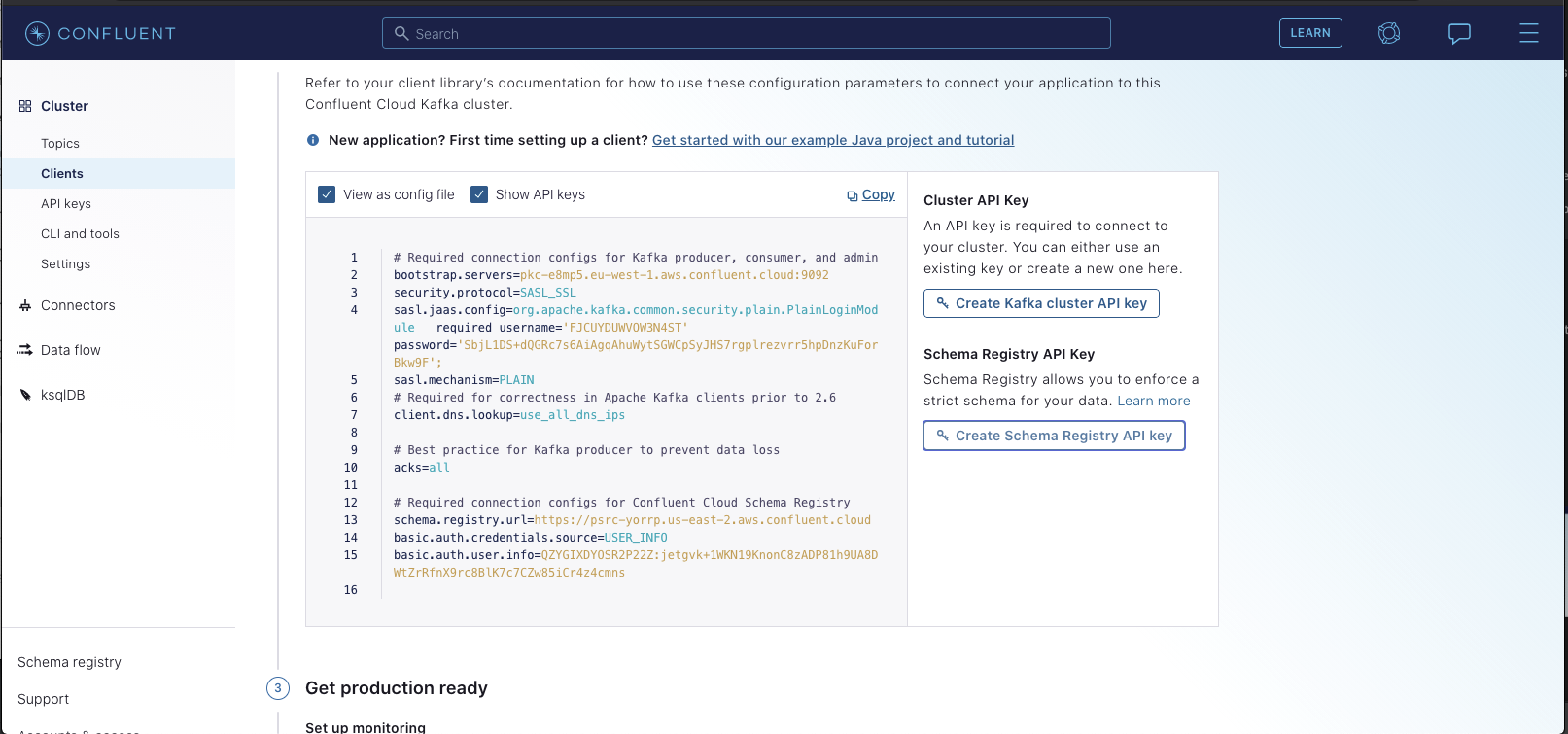

@@ -140,12 +144,20 @@ and another for the user info used for connecting to the schema registry. You'll

select "Clients" -> "Configure new Java Client". You should see a page like the following:

-

+

+

+  +

+

+

You'll want to generate both a Kafka Cluster API Key & a Schema Registry key. Once you do so, you should see the config

automatically populate with your new secrets:

-

+

+

+  +

+

+

You'll need to copy the values of `sasl.jaas.config` and `basic.auth.user.info`

for the next step.

diff --git a/docs/deploy/gcp.md b/docs/deploy/gcp.md

index 3713d69f90636..0cd3d92a8f3cd 100644

--- a/docs/deploy/gcp.md

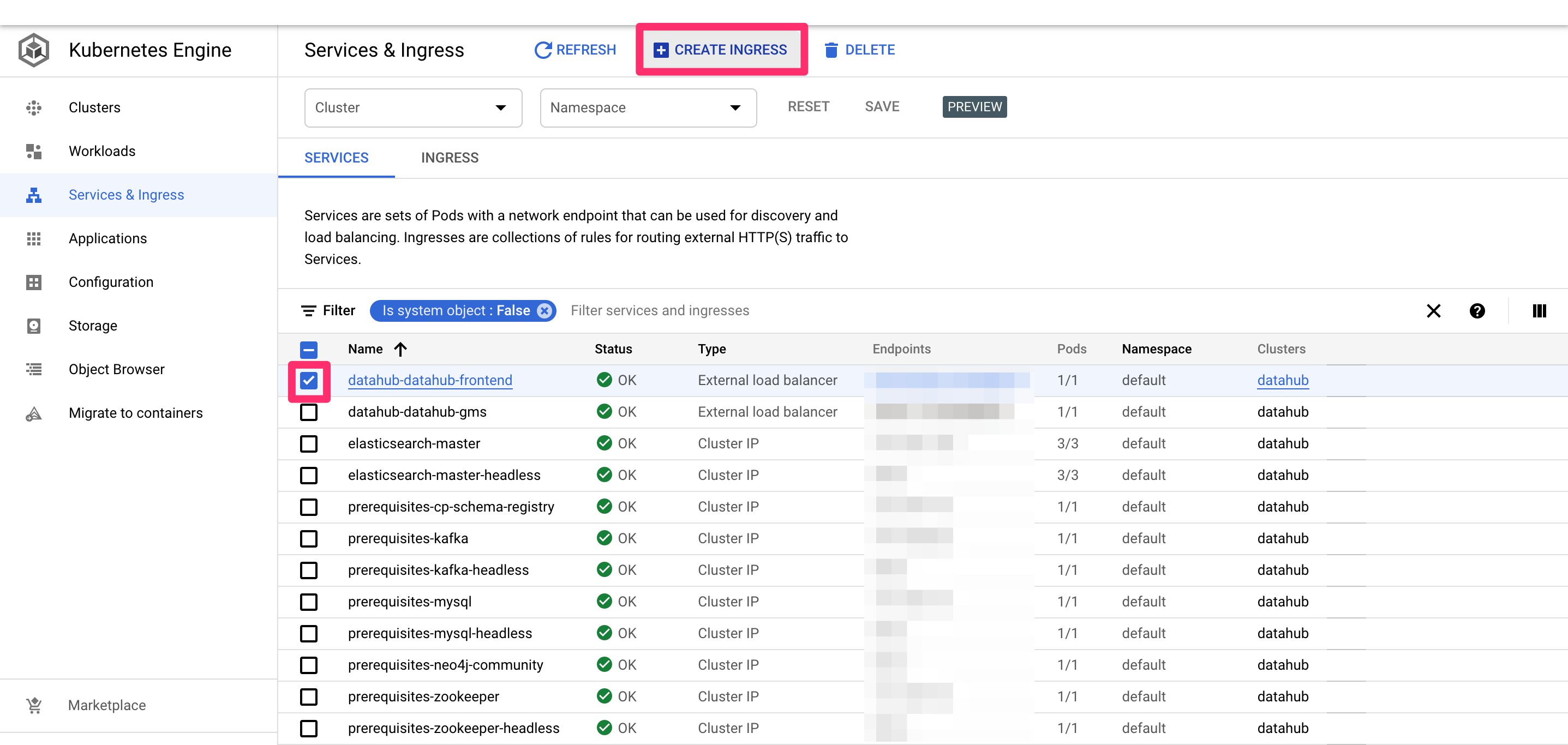

+++ b/docs/deploy/gcp.md

@@ -65,16 +65,28 @@ the GKE page on [GCP website](https://console.cloud.google.com/kubernetes/discov

Once the deployment is successful, you should see a page like below in the "Services & Ingress" tab on the left.

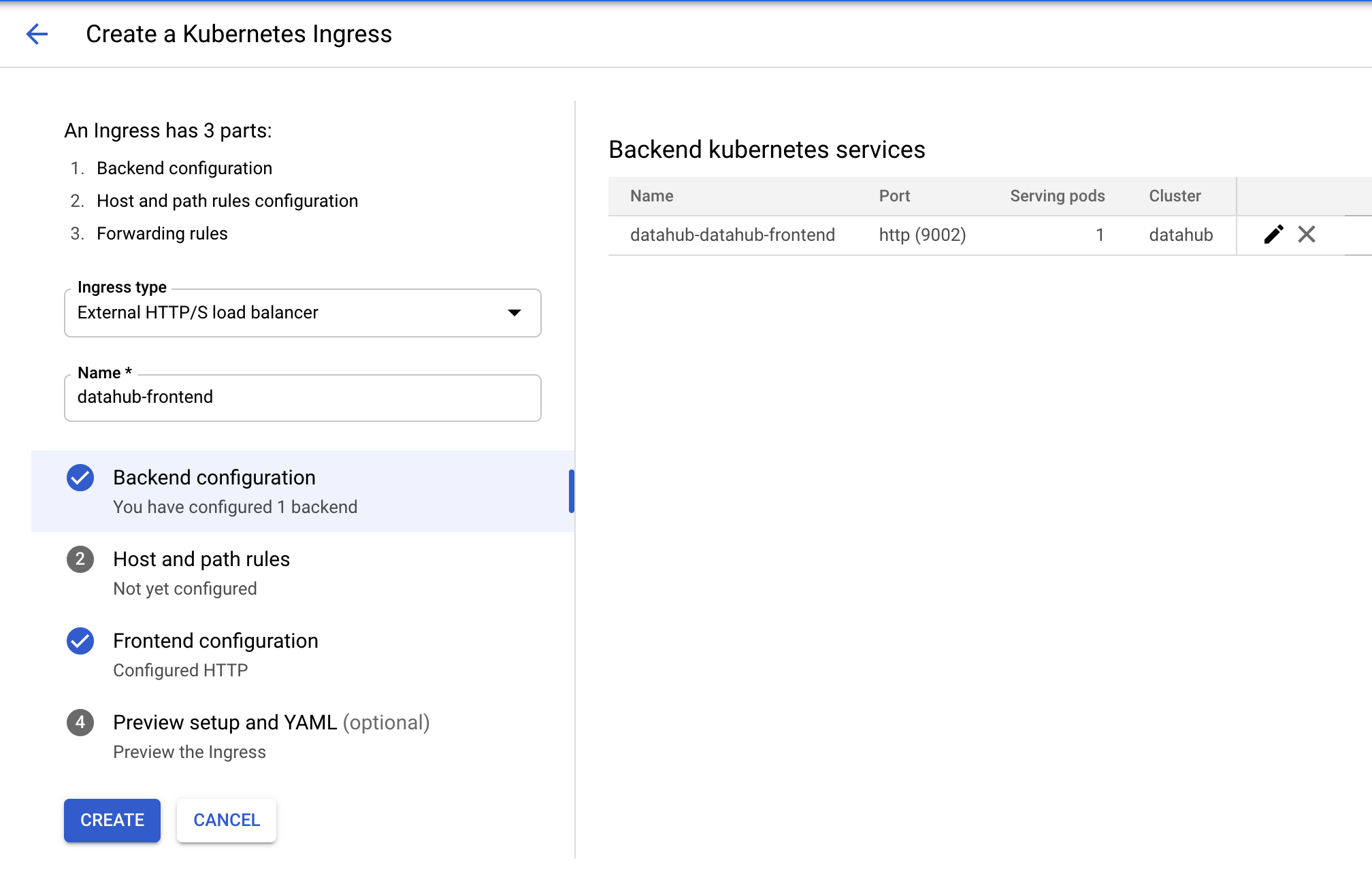

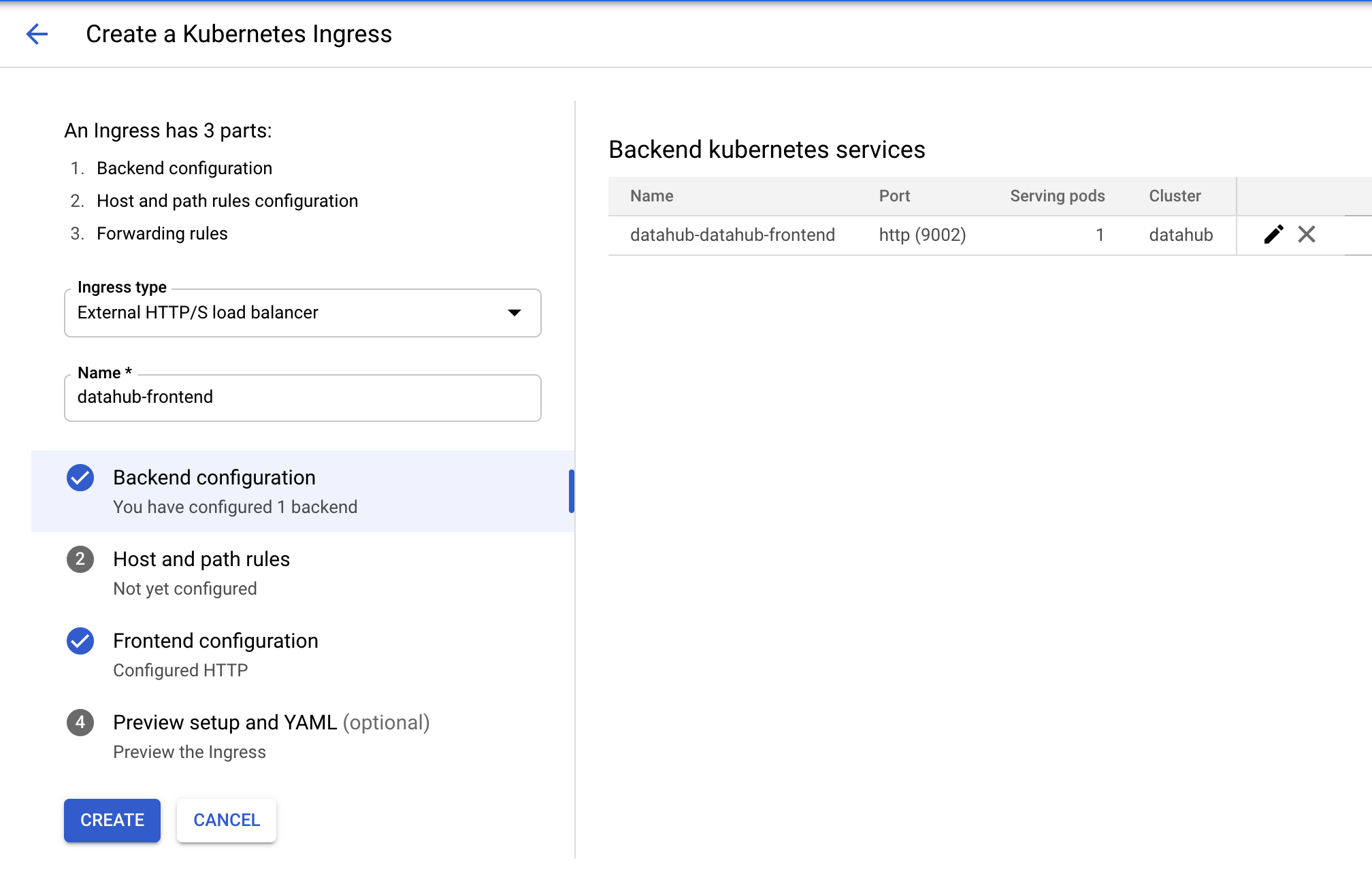

-

+

+

+  +

+

+

Tick the checkbox for datahub-datahub-frontend and click "CREATE INGRESS" button. You should land on the following page.

-

+

+

+  +

+

+

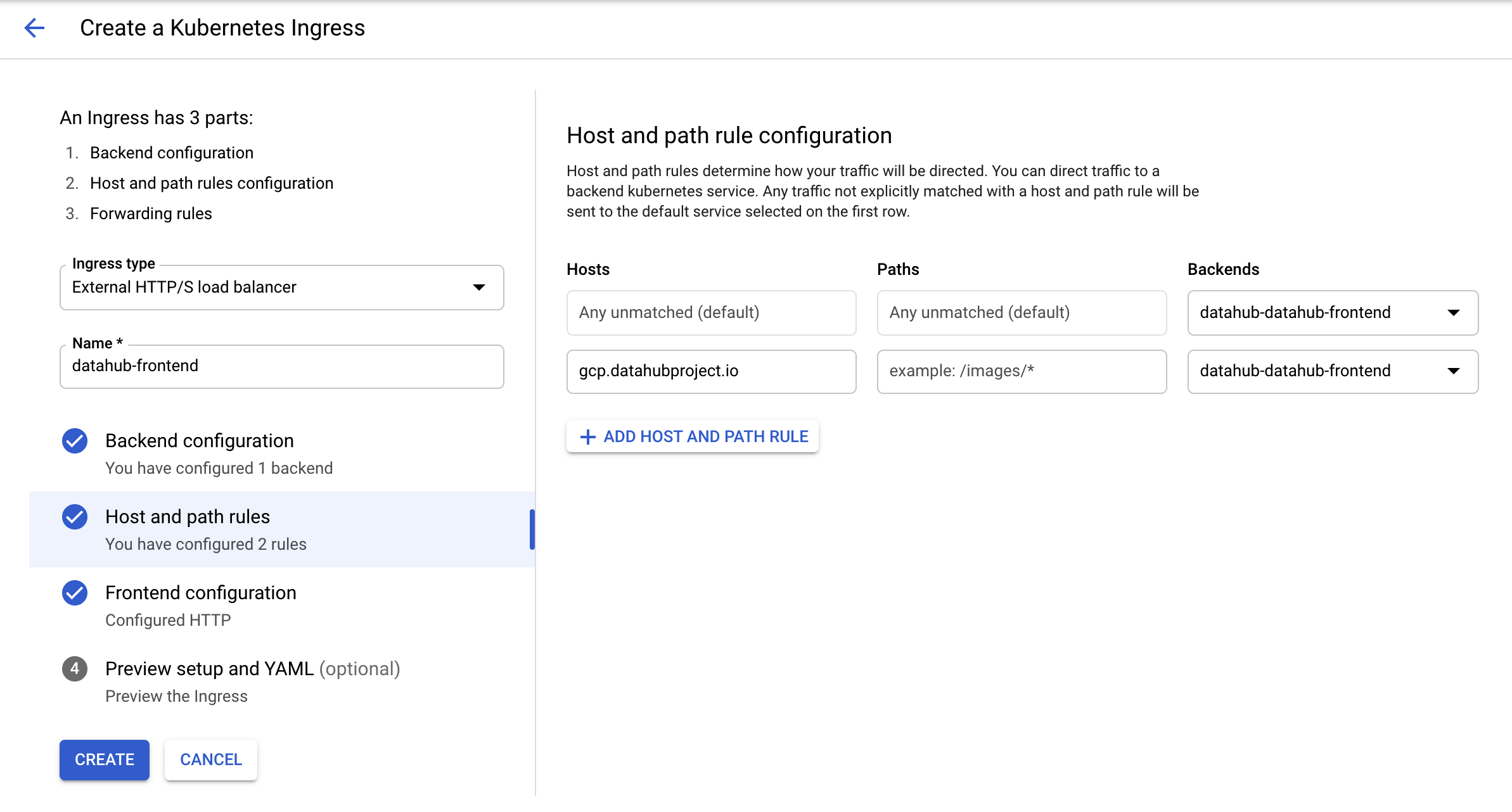

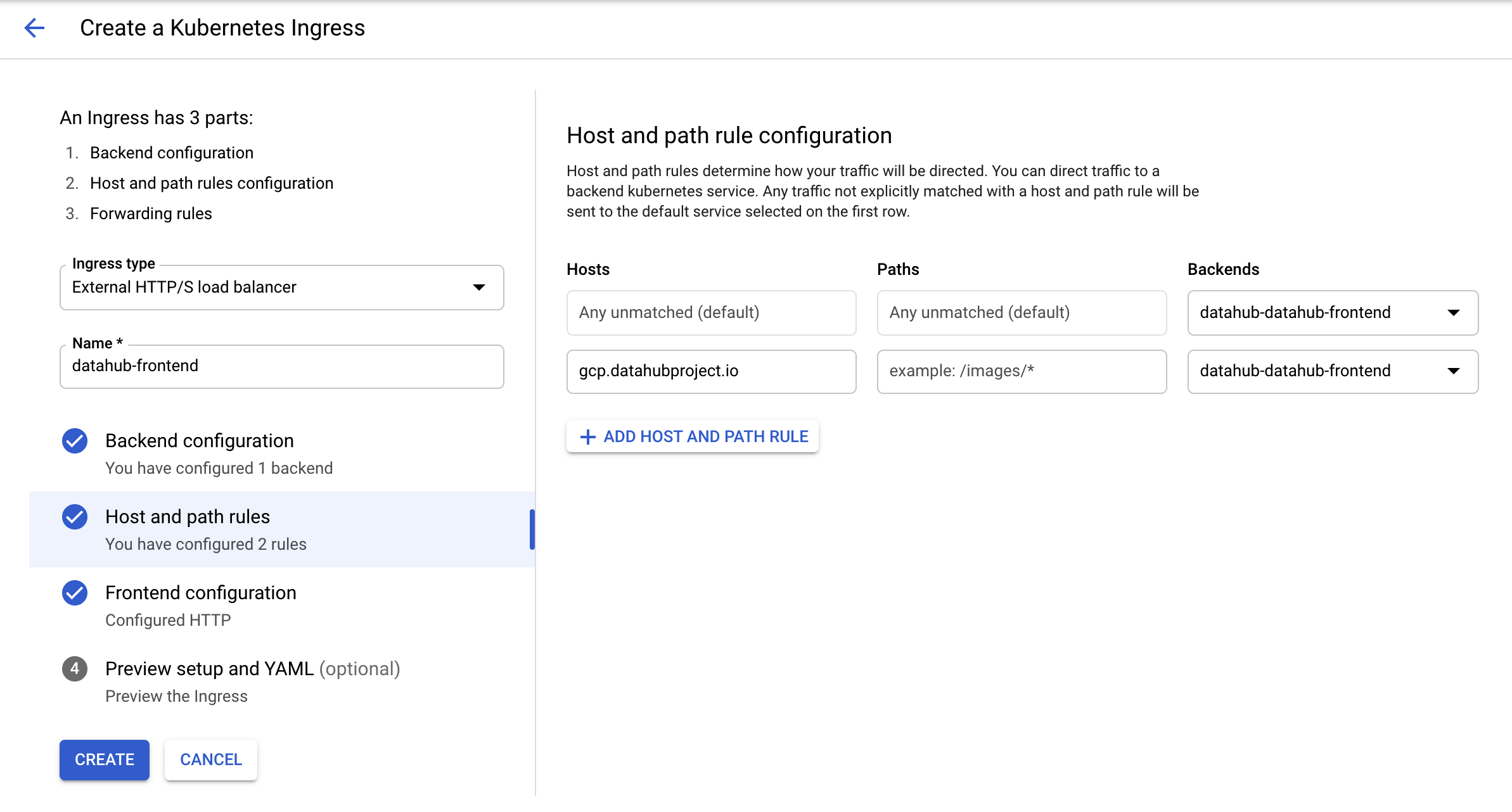

Type in an arbitrary name for the ingress and click on the second step "Host and path rules". You should land on the

following page.

-

+

+

+  +

+

+

Select "datahub-datahub-frontend" in the dropdown menu for backends, and then click on "ADD HOST AND PATH RULE" button.

In the second row that got created, add in the host name of choice (here gcp.datahubproject.io) and select

@@ -83,14 +95,22 @@ In the second row that got created, add in the host name of choice (here gcp.dat

This step adds the rule allowing requests from the host name of choice to get routed to datahub-frontend service. Click

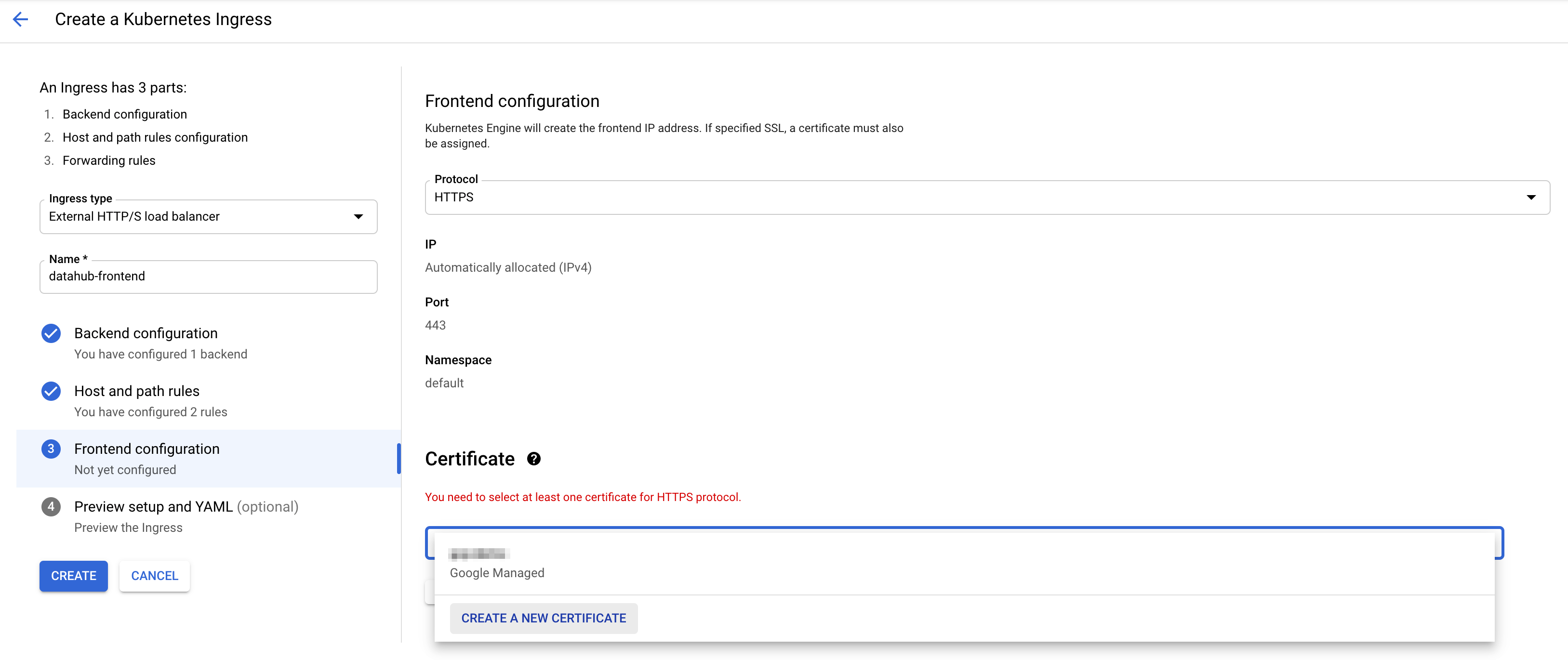

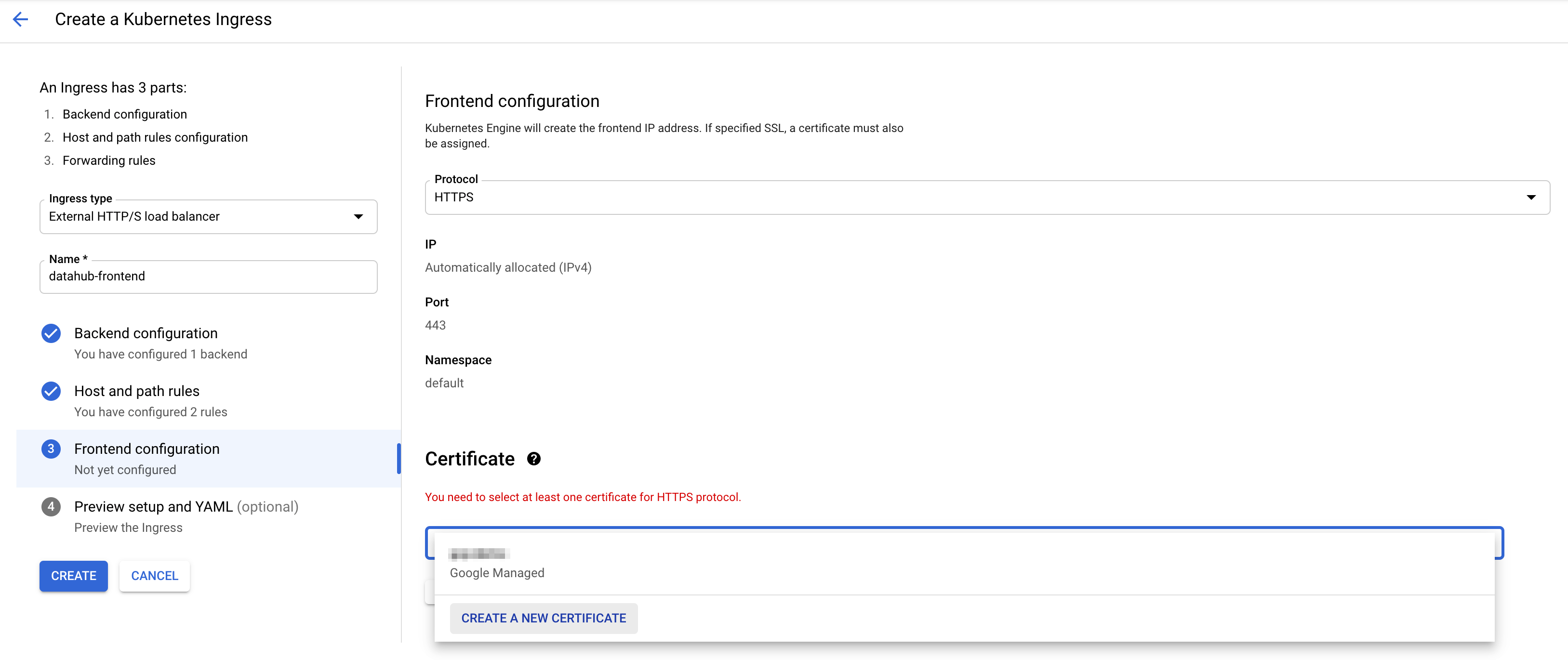

on step 3 "Frontend configuration". You should land on the following page.

-

+

+

+  +

+

+

Choose HTTPS in the dropdown menu for protocol. To enable SSL, you need to add a certificate. If you do not have one,

you can click "CREATE A NEW CERTIFICATE" and input the host name of choice. GCP will create a certificate for you.

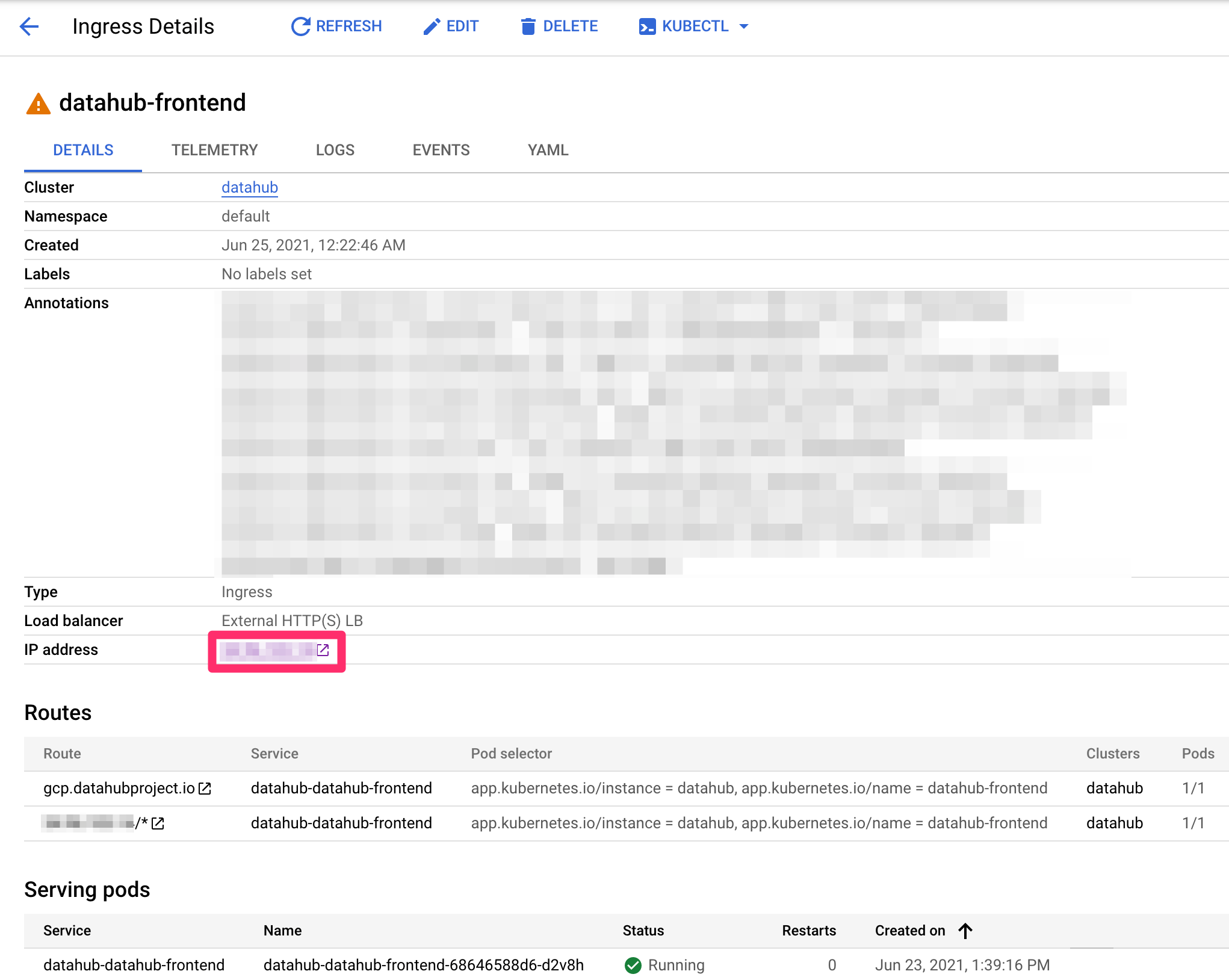

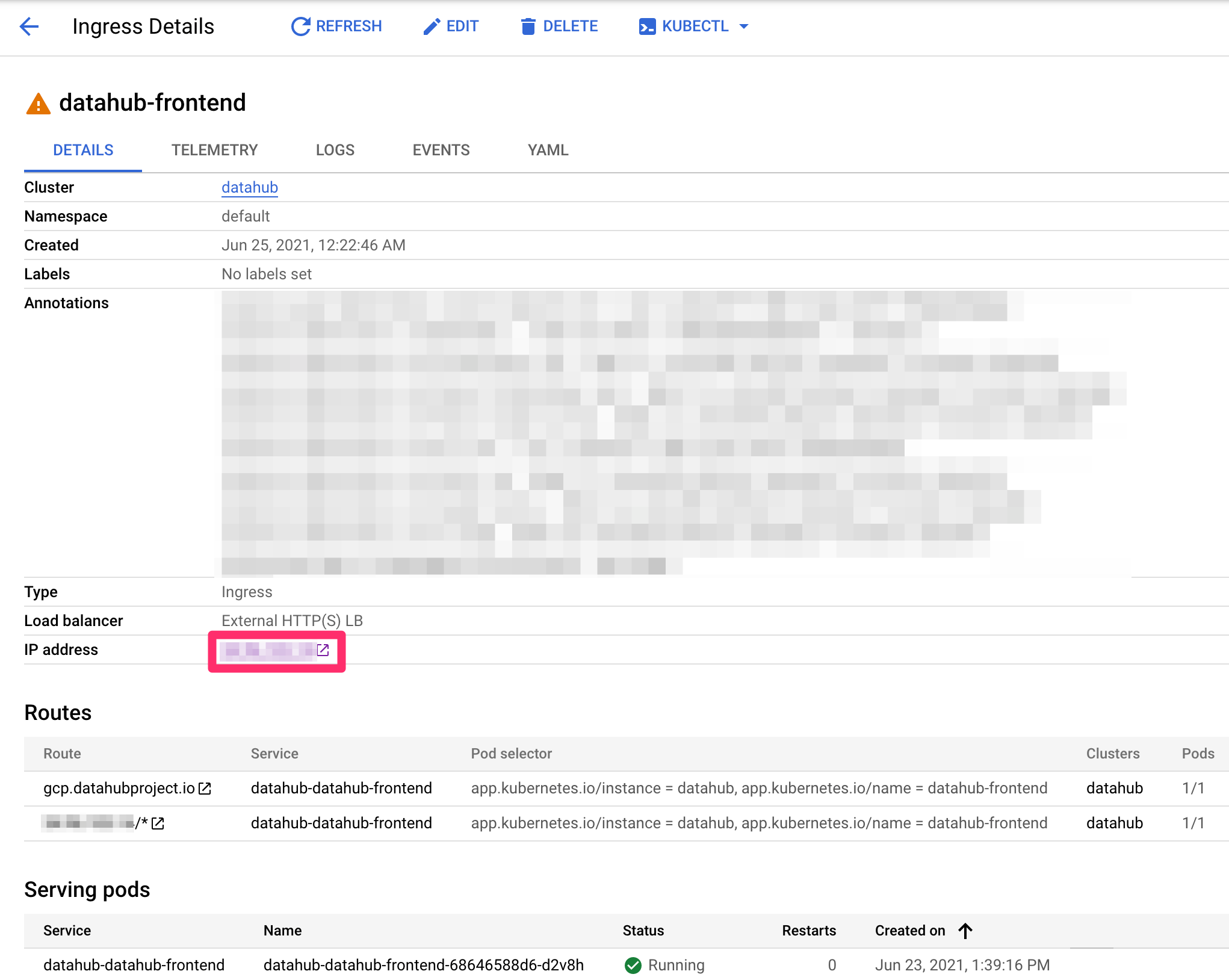

Now press "CREATE" button on the left to create ingress! After around 5 minutes, you should see the following.

-

+

+

+  +

+

+

In your domain provider, add an A record for the host name set above using the IP address on the ingress page (noted

with the red box). Once DNS updates, you should be able to access DataHub through the host name!!

@@ -98,5 +118,9 @@ with the red box). Once DNS updates, you should be able to access DataHub throug

Note, ignore the warning icon next to ingress. It takes about ten minutes for ingress to check that the backend service

is ready and show a check mark as follows. However, ingress is fully functional once you see the above page.

-

+

+

+  +

+

+

diff --git a/docs/dev-guides/timeline.md b/docs/dev-guides/timeline.md

index 966e659b90991..829aef1d3eefa 100644

--- a/docs/dev-guides/timeline.md

+++ b/docs/dev-guides/timeline.md

@@ -14,7 +14,11 @@ The Timeline API is available in server versions `0.8.28` and higher. The `cli`

## Entity Timeline Conceptually

For the visually inclined, here is a conceptual diagram that illustrates how to think about the entity timeline with categorical changes overlaid on it.

-

+

+

+  +

+

+

## Change Event

Each modification is modeled as a

@@ -228,8 +232,16 @@ http://localhost:8080/openapi/timeline/v1/urn%3Ali%3Adataset%3A%28urn%3Ali%3Adat

The API is browsable via the UI through the dropdown.

Here are a few screenshots showing how to navigate to it. You can try out the API and send example requests.

-

-

+

+

+  +

+

+

+

+

+  +

+

+

# Future Work

diff --git a/docs/docker/development.md b/docs/docker/development.md

index 2153aa9dc613f..91a303744a03b 100644

--- a/docs/docker/development.md

+++ b/docs/docker/development.md

@@ -92,7 +92,11 @@ Environment variables control the debugging ports for GMS and the frontend.

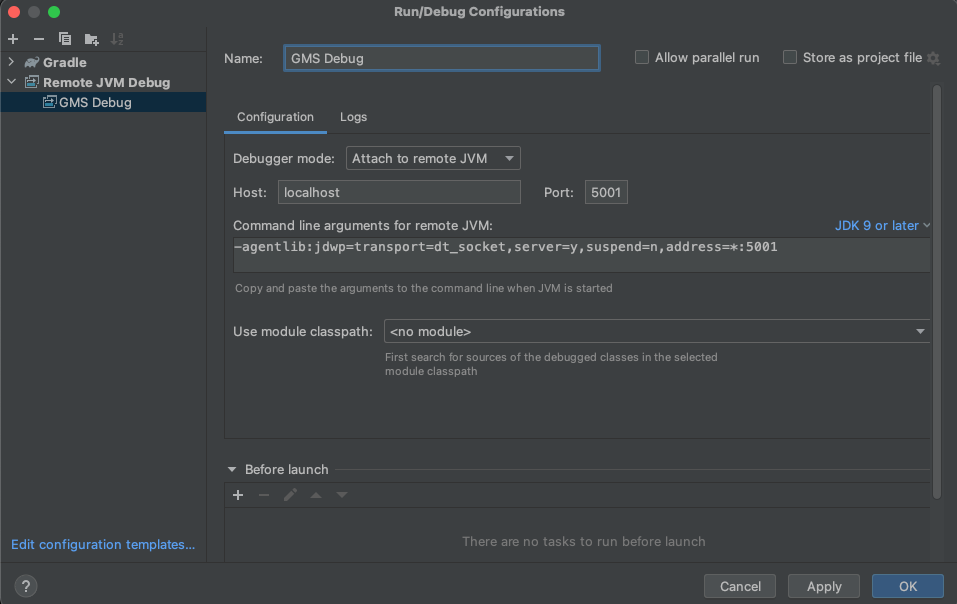

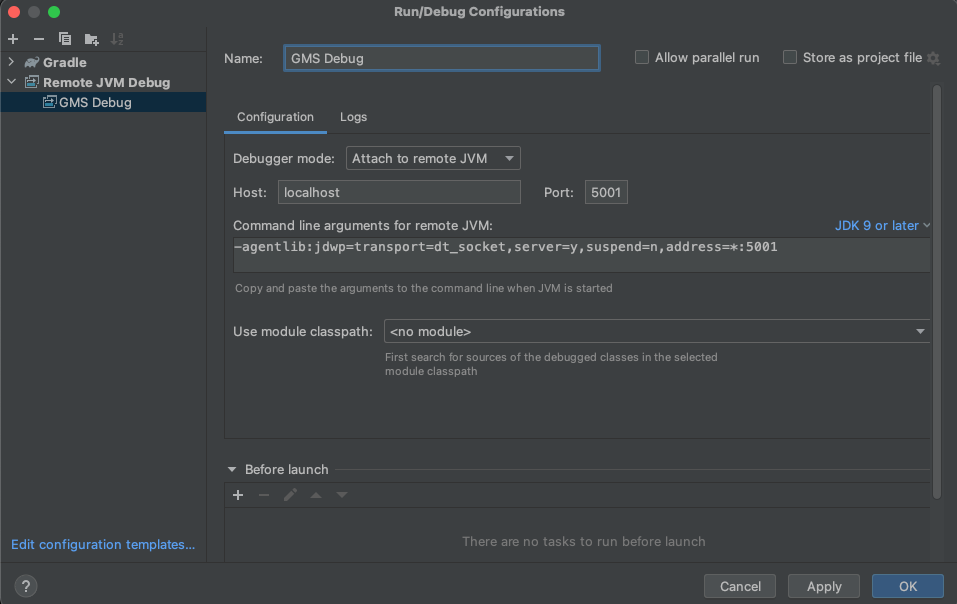

The screenshot shows an example configuration for IntelliJ using the default GMS debugging port of 5001.

-

+

+

+  +

+

+

## Tips for People New To Docker

diff --git a/docs/glossary/business-glossary.md b/docs/glossary/business-glossary.md

index faab6f12fc55e..e10cbed30b913 100644

--- a/docs/glossary/business-glossary.md

+++ b/docs/glossary/business-glossary.md

@@ -31,59 +31,103 @@ In order to view a Business Glossary, users must have the Platform Privilege cal

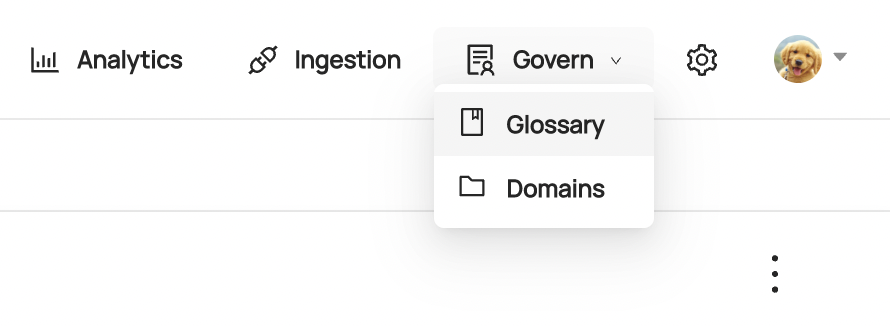

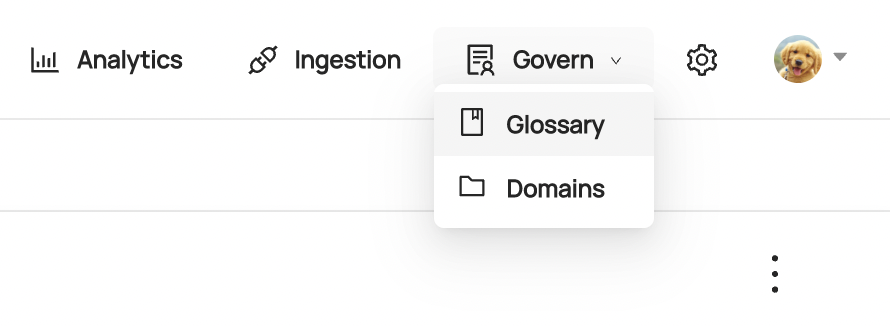

Once granted this privilege, you can access your Glossary by clicking the dropdown at the top of the page called **Govern** and then click **Glossary**:

-

+

+

+  +

+

+

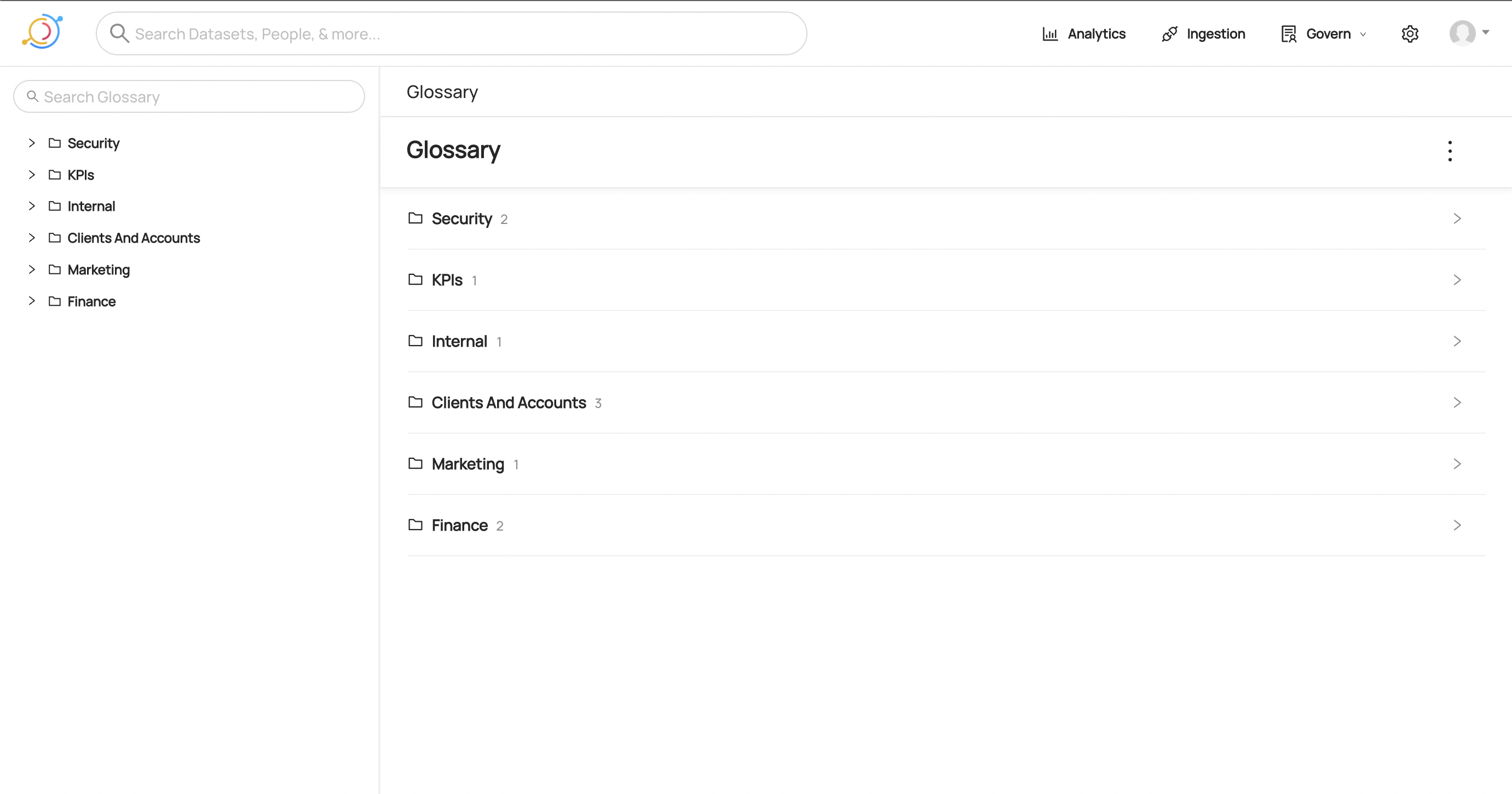

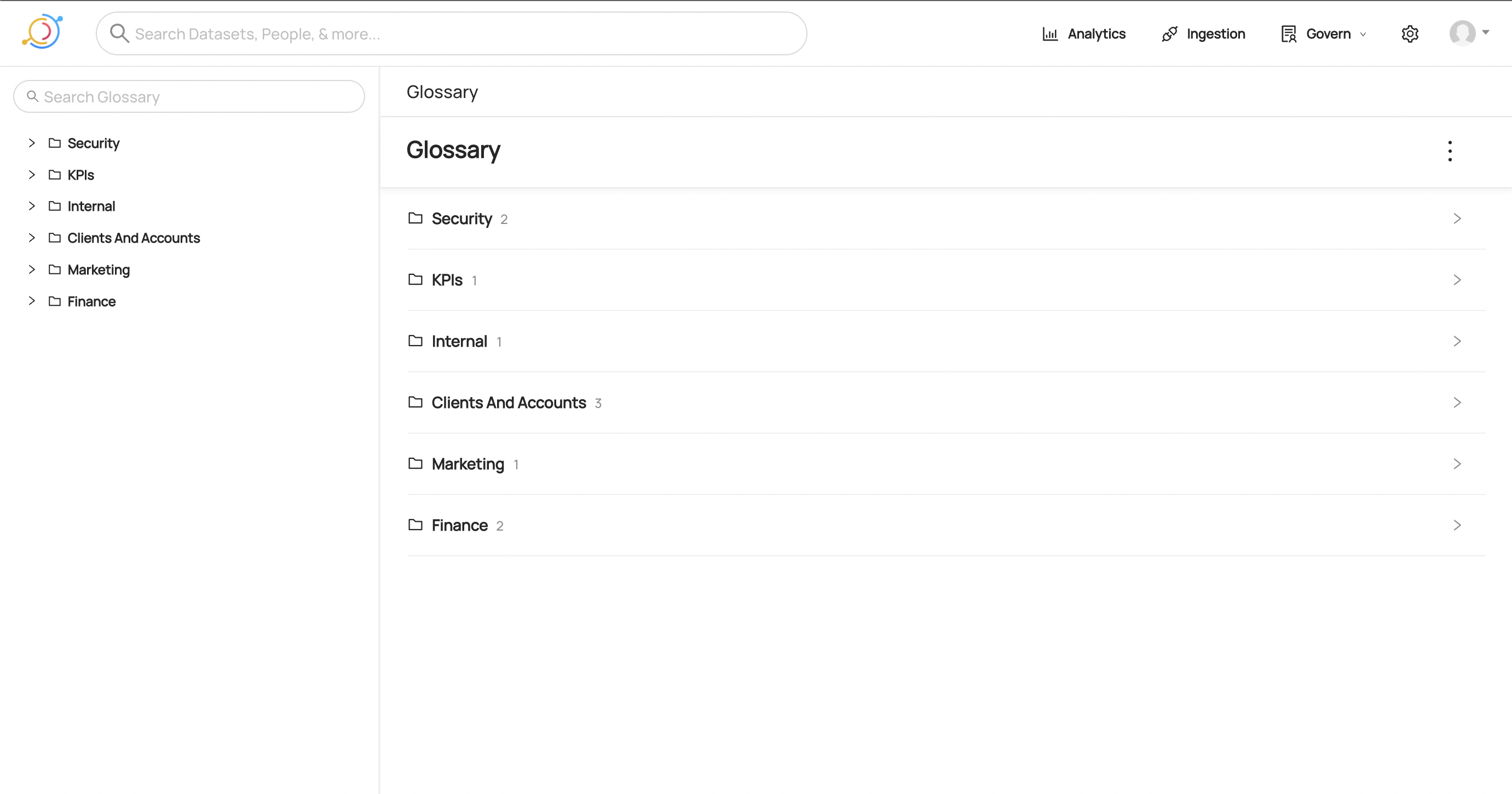

You are now at the root of your Glossary and should see all Terms and Term Groups with no parents assigned to them. You should also notice a hierarchy navigator on the left where you can easily check out the structure of your Glossary!

-

+

+

+  +

+

+

## Creating a Term or Term Group

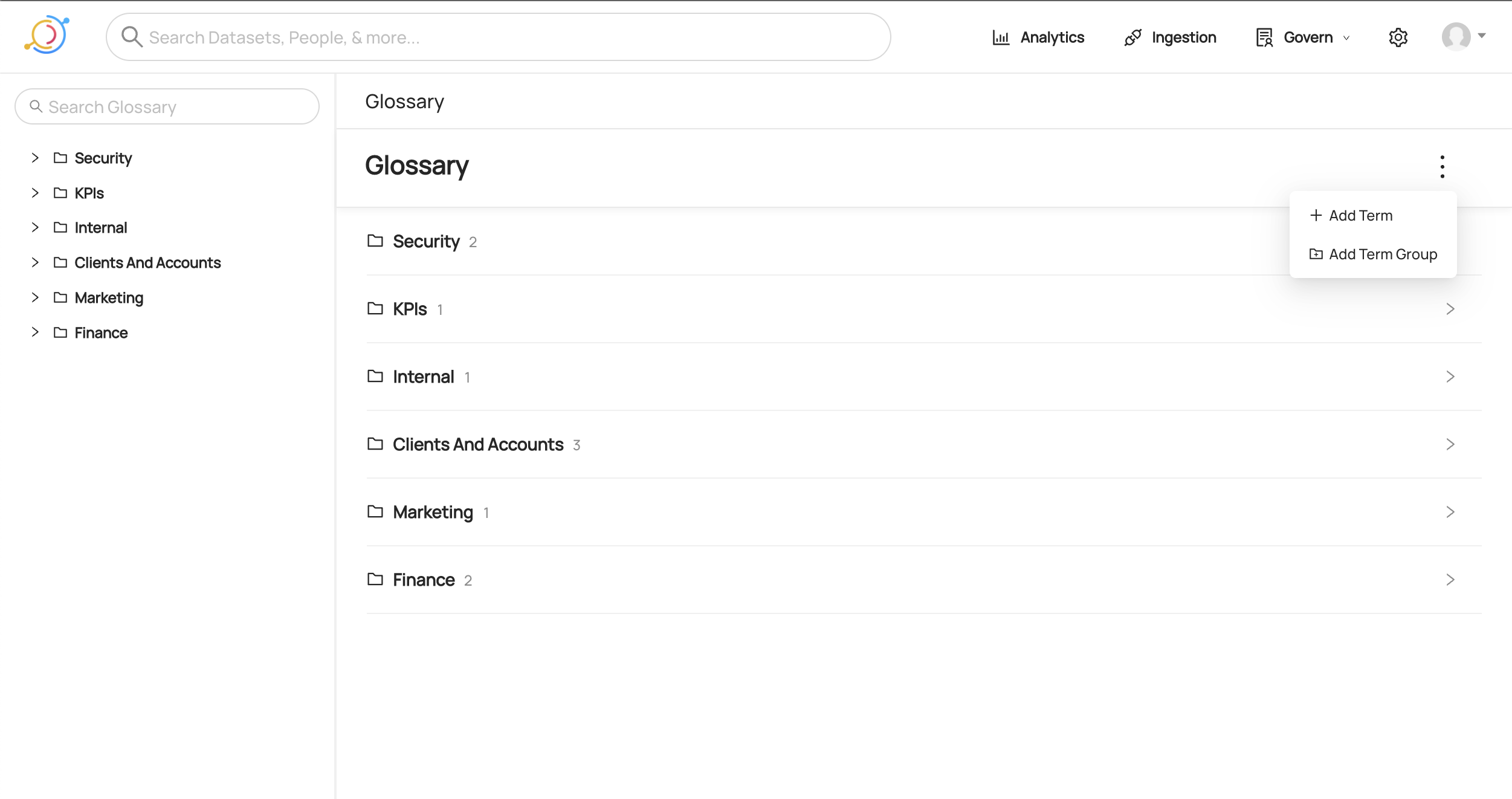

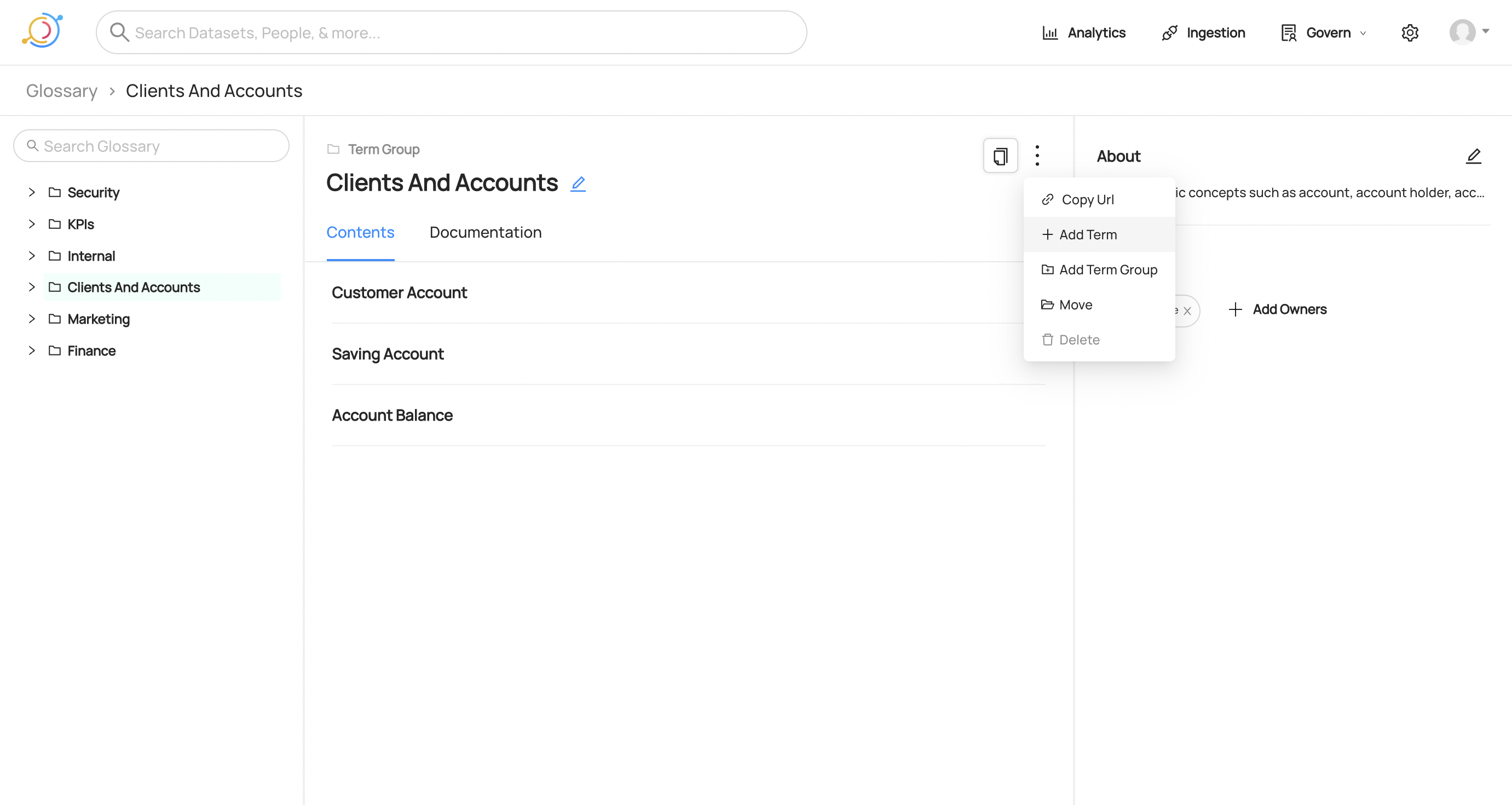

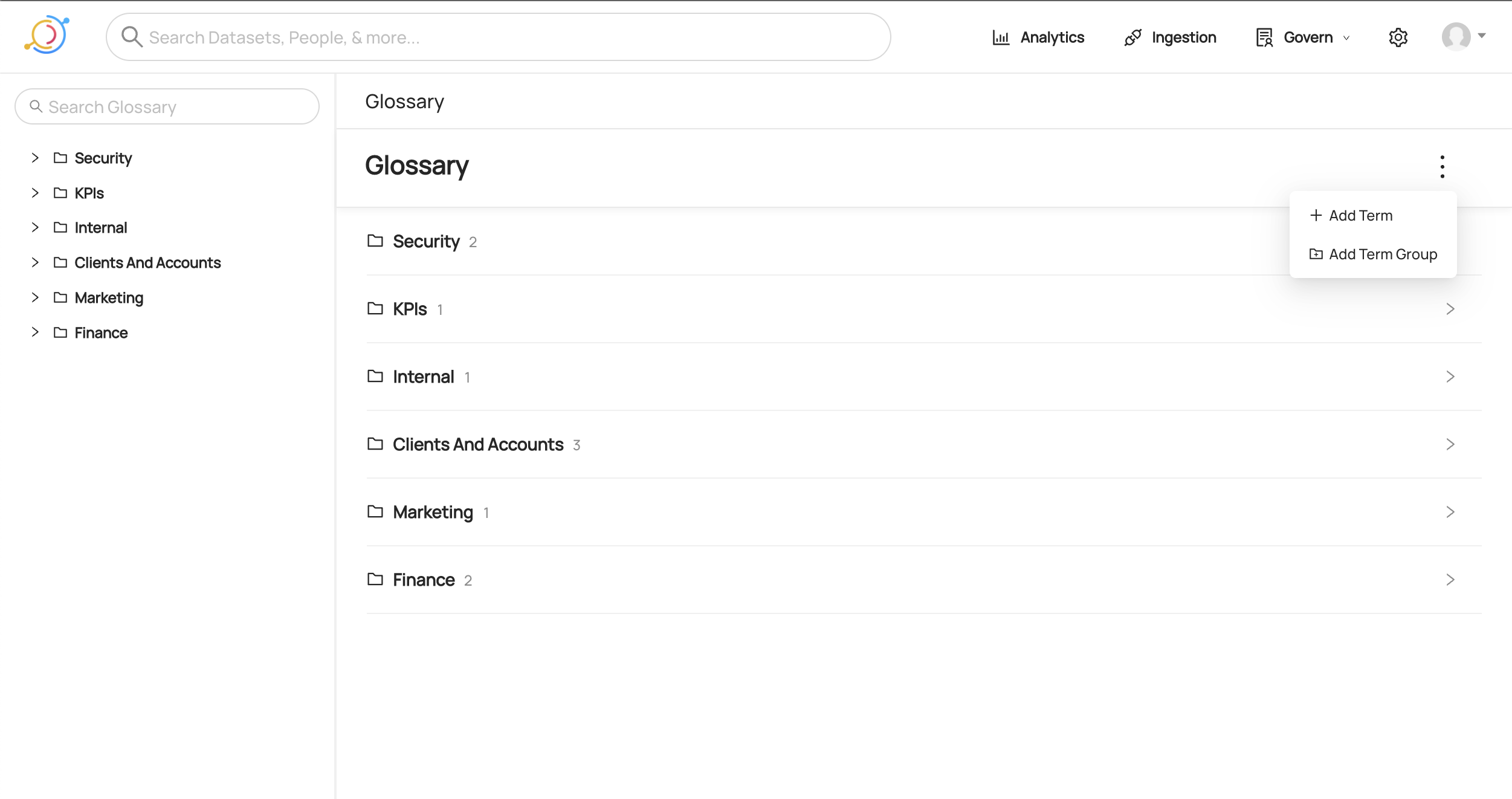

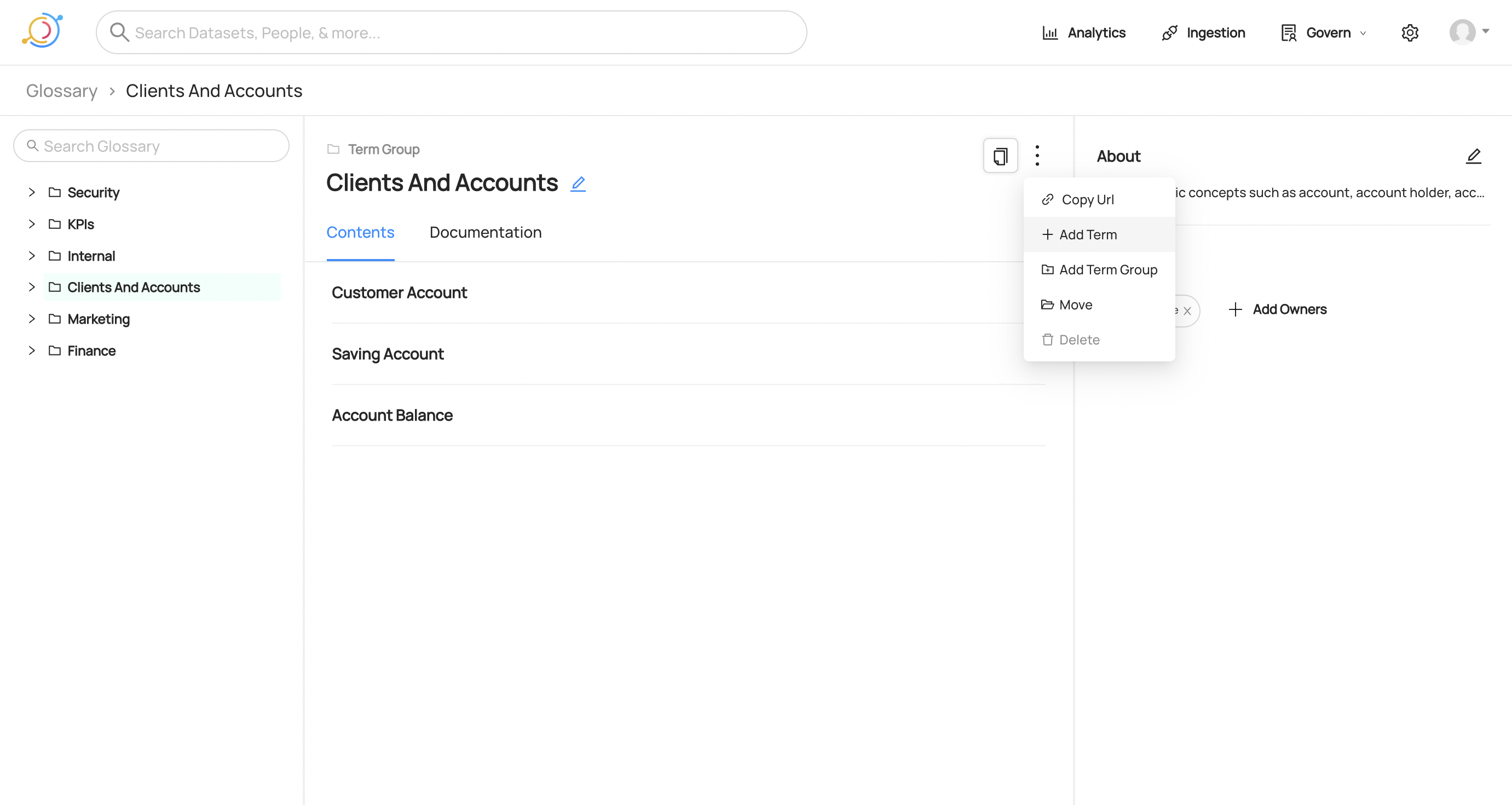

There are two ways to create Terms and Term Groups through the UI. First, you can create directly from the Glossary home page by clicking the menu dots on the top right and selecting your desired option:

-

+

+

+  +

+

+

You can also create Terms or Term Groups directly from a Term Group's page. In order to do that you need to click the menu dots on the top right and select what you want:

-

+

+

+  +

+

+

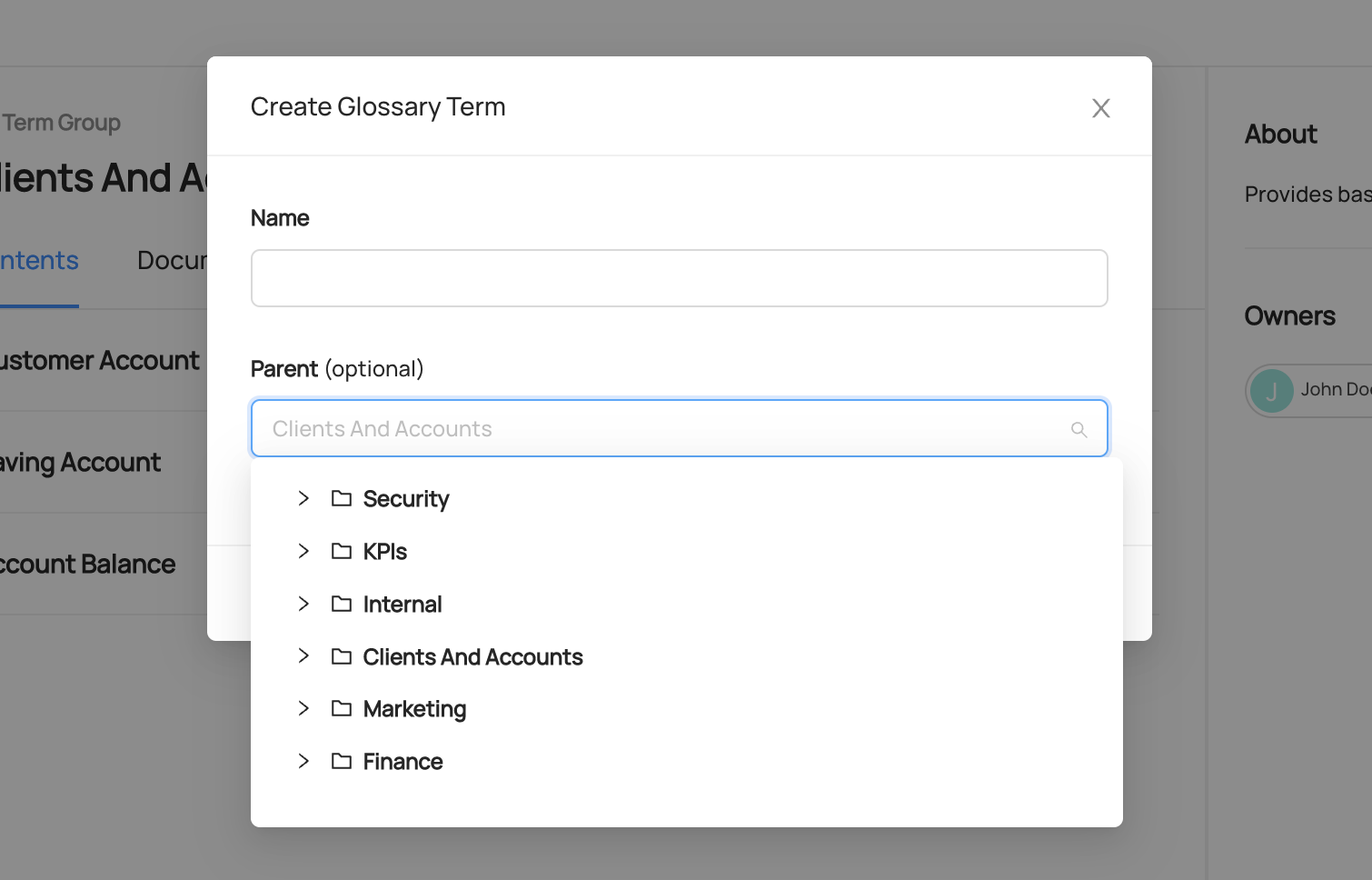

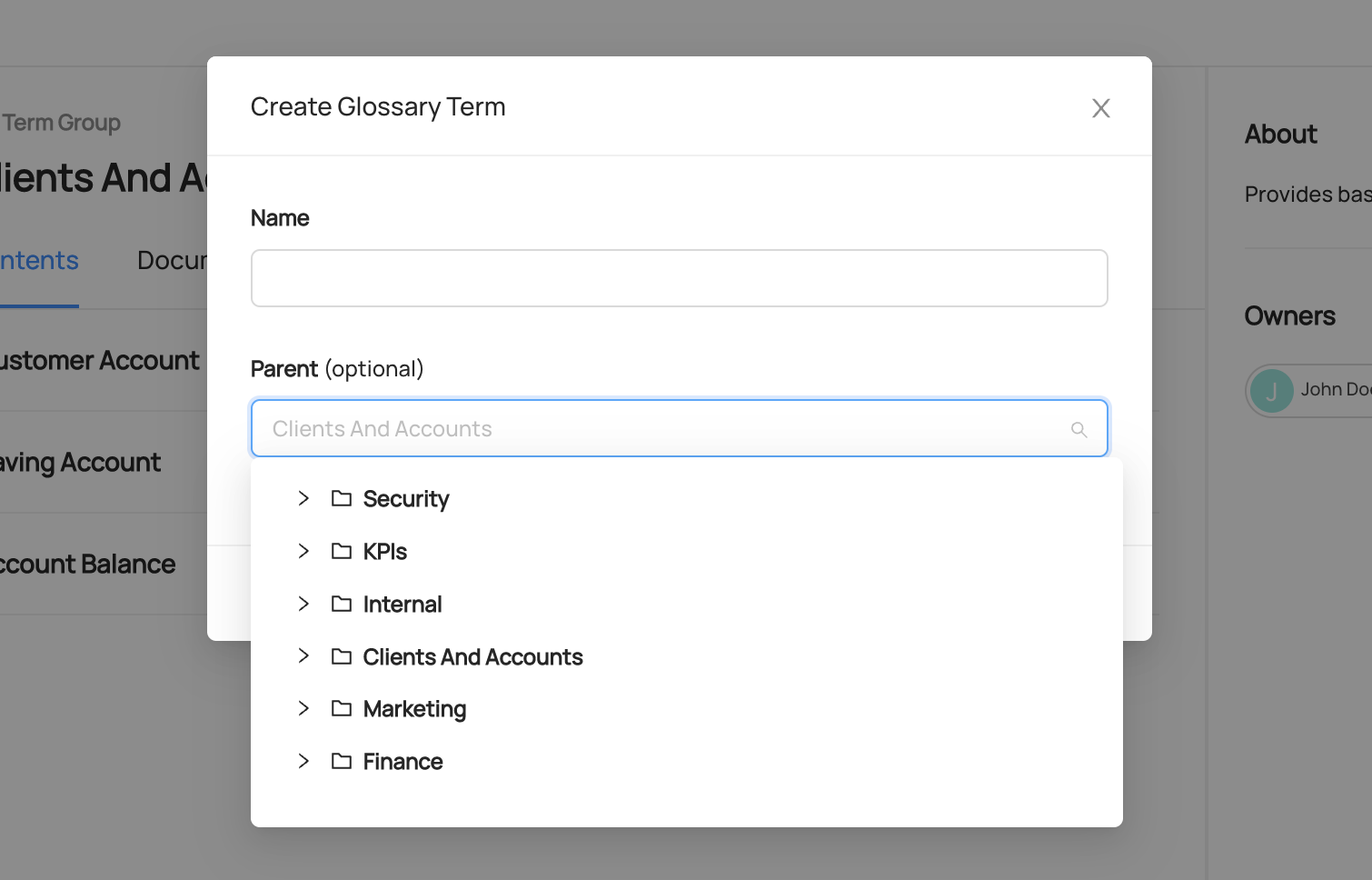

Note that the modal that pops up will automatically set the current Term Group you are in as the **Parent**. You can easily change this by selecting the input and navigating through your Glossary to find your desired Term Group. In addition, you could start typing the name of a Term Group to see it appear by searching. You can also leave this input blank in order to create a Term or Term Group with no parent.

-

+

+

+  +

+

+

## Editing a Term or Term Group

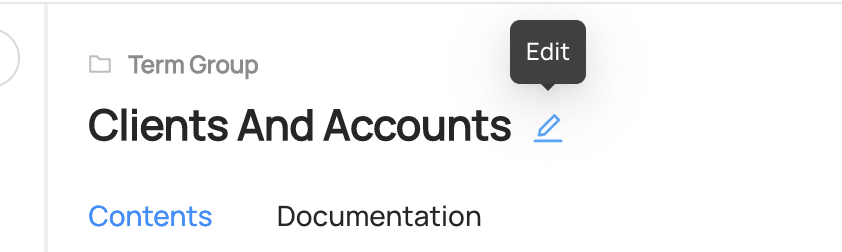

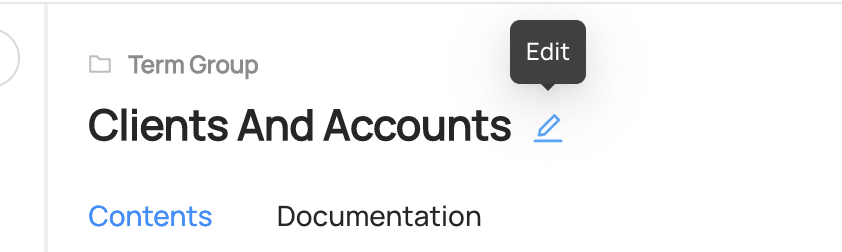

In order to edit a Term or Term Group, you first need to go to the page of the Term or Term Group you want to edit. Then simply click the edit icon right next to the name to open up an inline editor. Change the text and it will save when you click outside or hit Enter.

-

+

+

+  +

+

+

## Moving a Term or Term Group

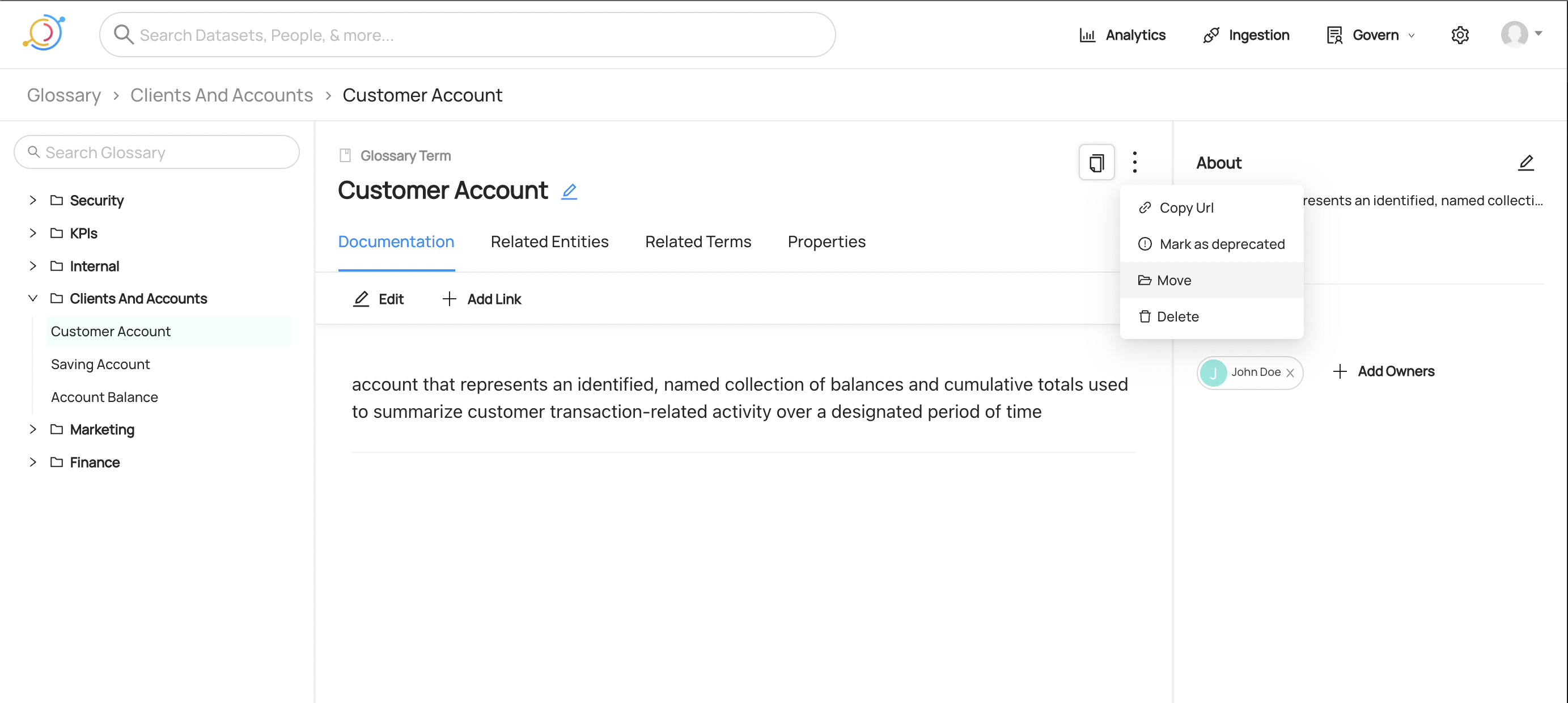

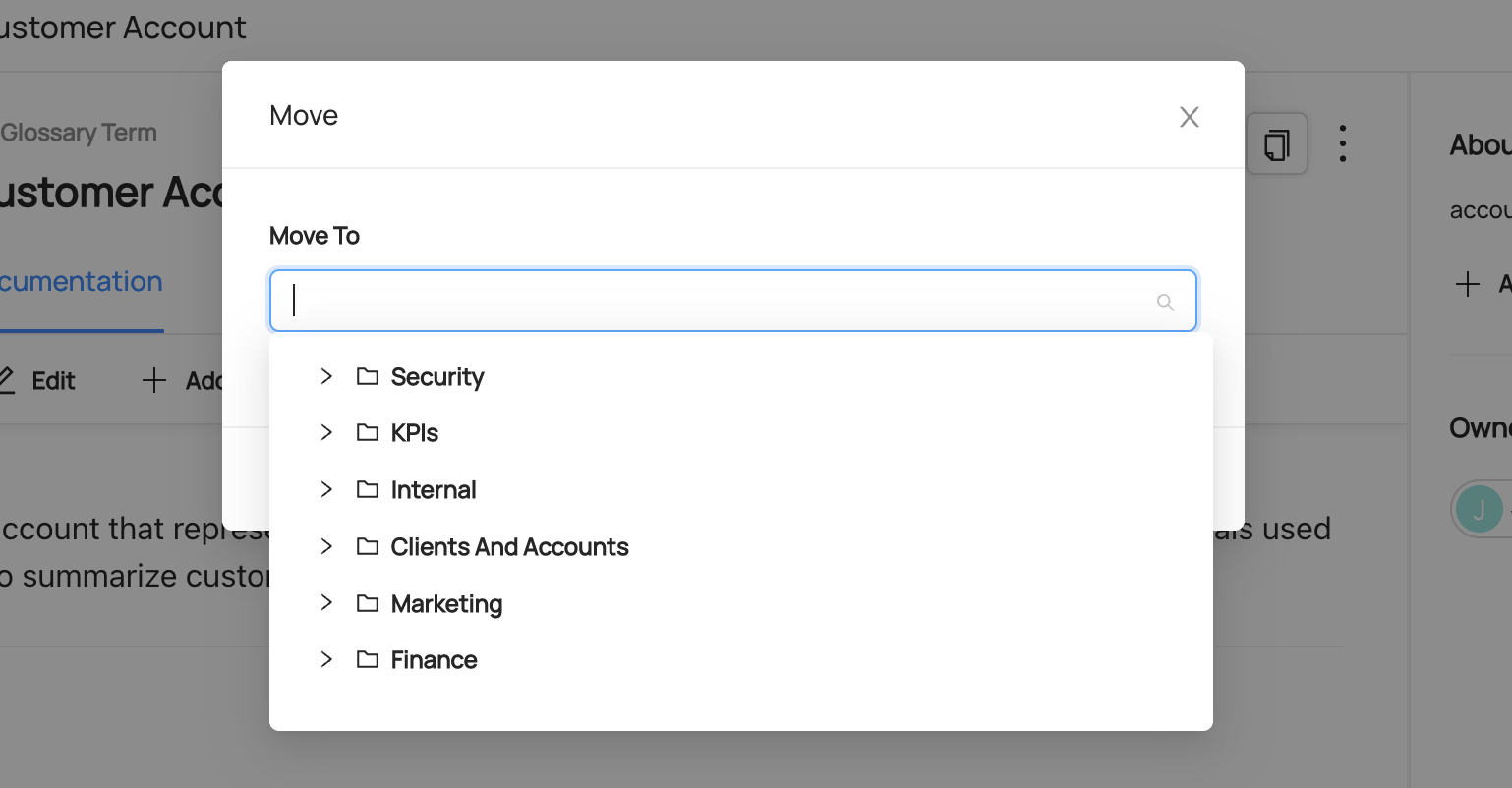

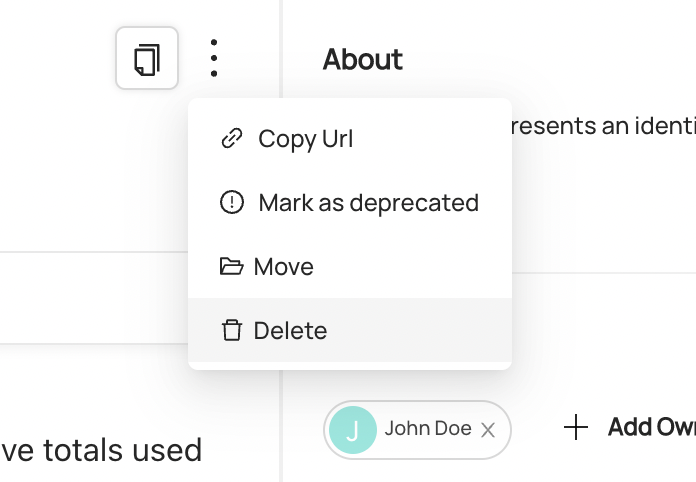

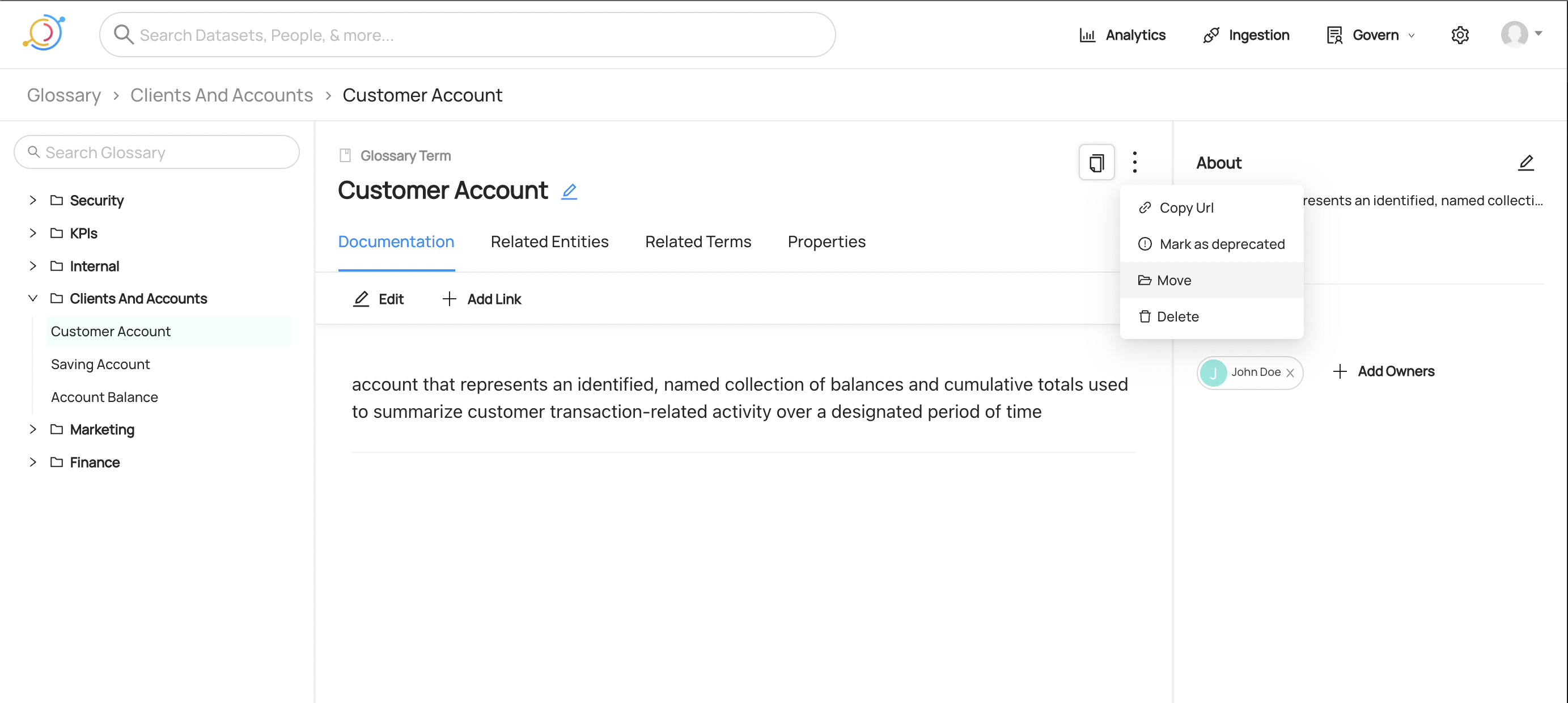

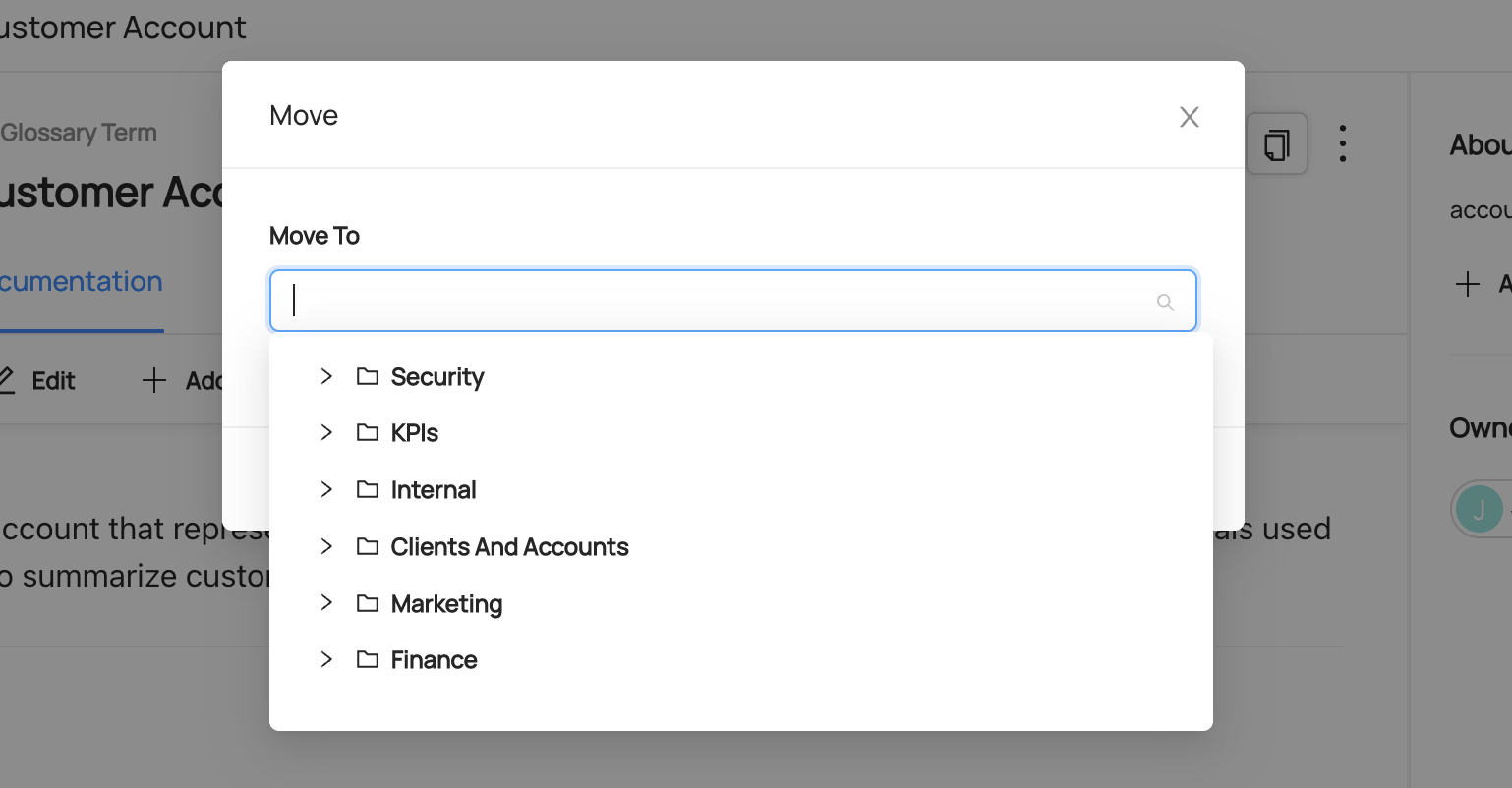

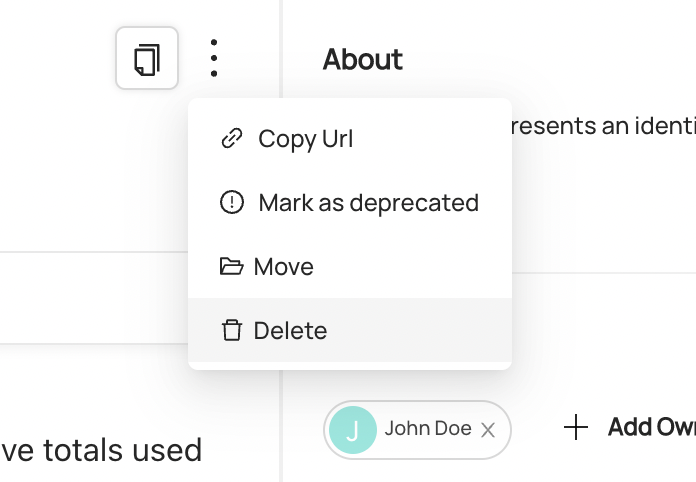

Once a Term or Term Group has been created, you can always move it to be under a different Term Group parent. In order to do this, click the menu dots on the top right of either entity and select **Move**.

-

+

+

+  +

+

+

This will open a modal where you can navigate through your Glossary to find your desired Term Group.

-

+

+

+  +

+

+

## Deleting a Term or Term Group

To delete a Term or Term Group, go to the entity page of the item you want to delete, then click the menu dots on the top right. From here you can select **Delete** and confirm in a separate modal. **Note**: at the moment we only support deleting Term Groups that do not have any children. Until cascade deleting is supported, you will have to delete all children first, then delete the Term Group.

-

+

+

+  +

+

+

## Adding a Term to an Entity

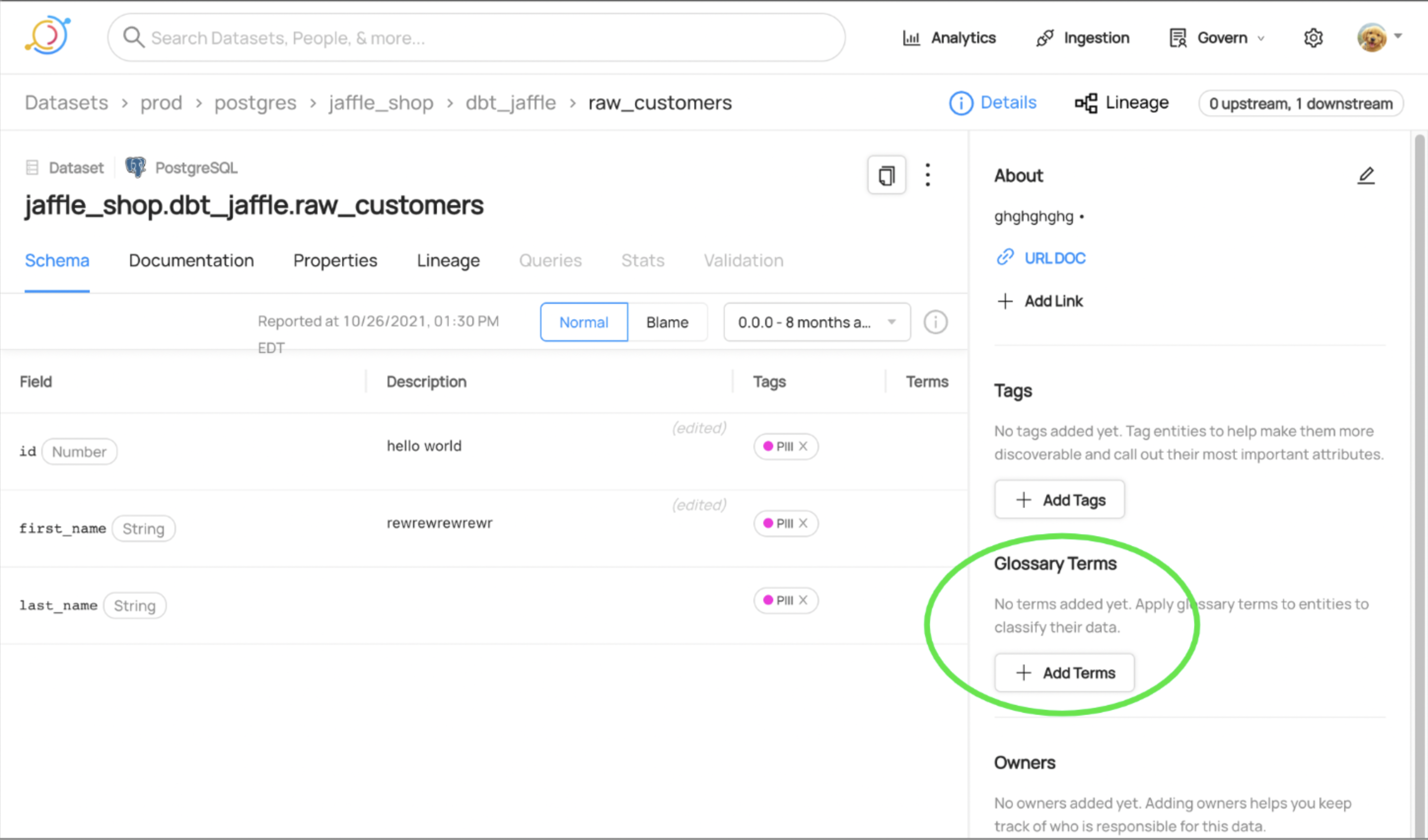

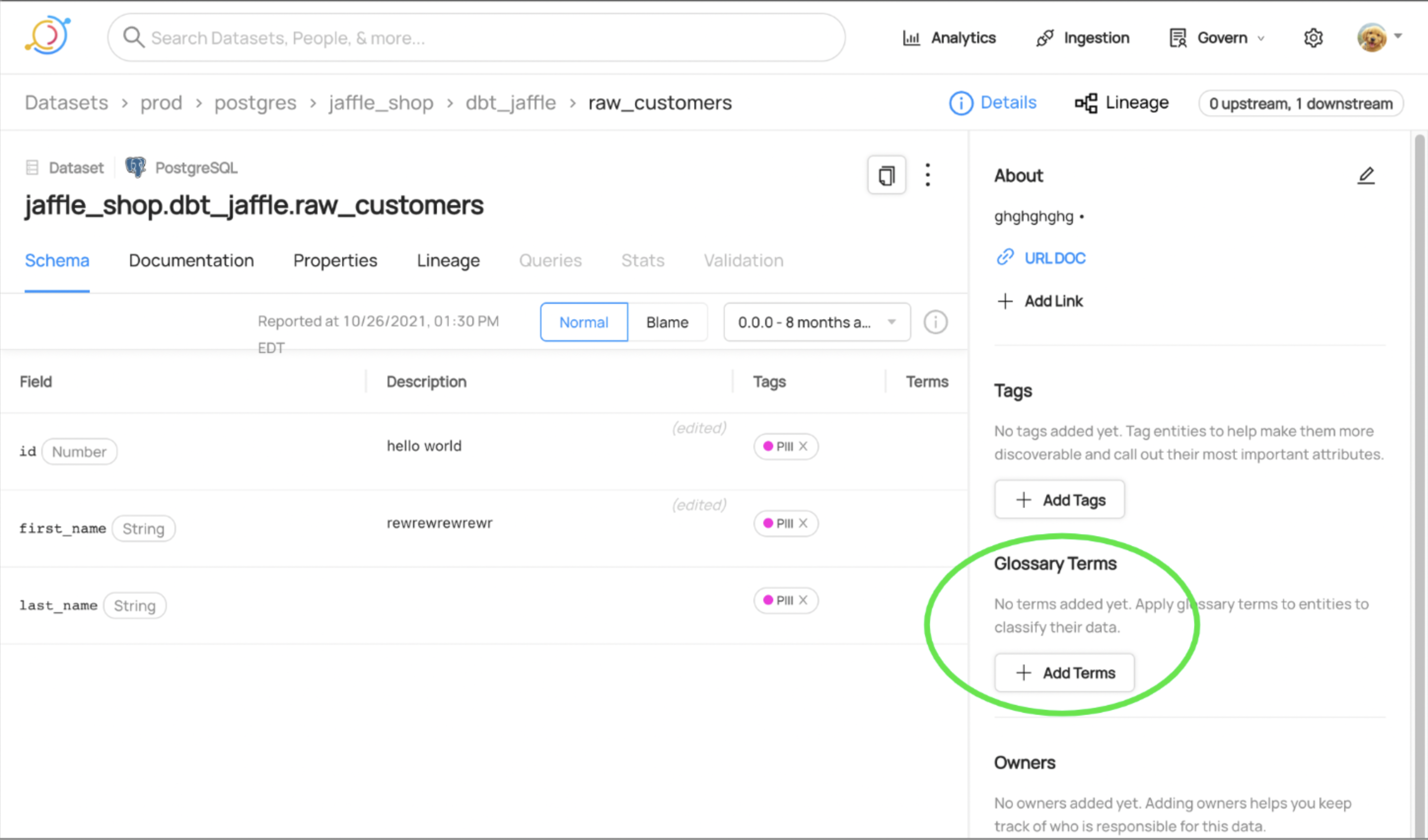

Once you've defined your Glossary, you can begin attaching terms to data assets. To add a Glossary Term to an asset, go to the entity page of your asset and find the **Add Terms** button on the right sidebar.

-

+

+

+  +

+

+

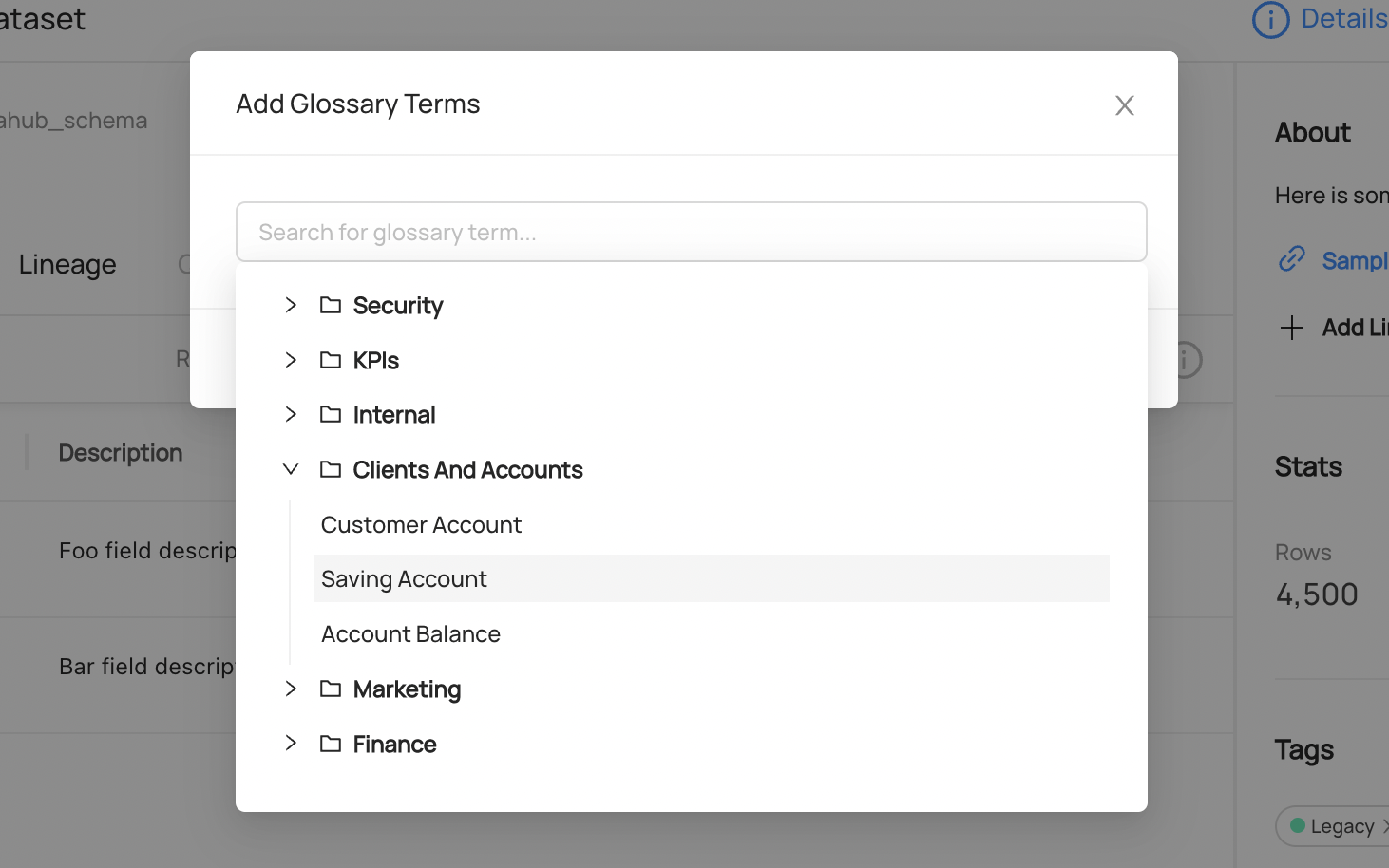

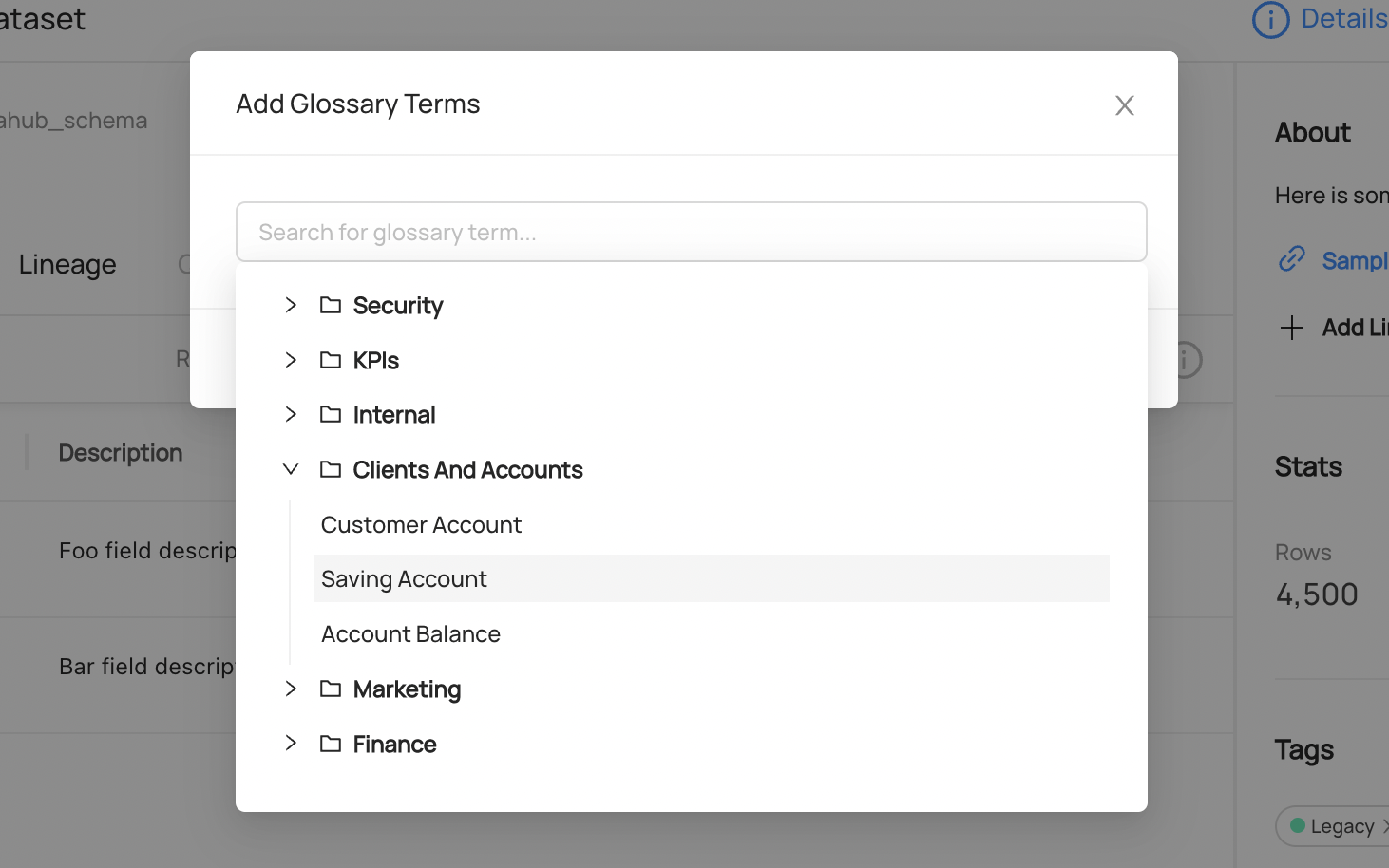

In the modal that pops up, you can select the Term you want in one of two ways:

- Search for the Term by name in the input

- Navigate through the Glossary dropdown that appears after clicking into the input

-

+

+

+  +

+

+

## Privileges

diff --git a/docs/how/configuring-authorization-with-apache-ranger.md b/docs/how/configuring-authorization-with-apache-ranger.md

index 26d3be6d358b2..46f9432e6c18a 100644

--- a/docs/how/configuring-authorization-with-apache-ranger.md

+++ b/docs/how/configuring-authorization-with-apache-ranger.md

@@ -67,7 +67,11 @@ Now, you should have the DataHub plugin registered with Apache Ranger. Next, we'

The **DATAHUB** plugin and **ranger_datahub** service are shown in the screenshot below:

-

+

+

+  +

+

+

4. Create a new policy under service **ranger_datahub** - this will be used to control DataHub authorization.

5. Create a test user & assign them to a policy. We'll use the `datahub` user, which is the default root user inside DataHub.

@@ -80,7 +84,11 @@ Now, you should have the DataHub plugin registered with Apache Ranger. Next, we'

DataHub platform access policy screenshot:

-

+

+

+  +

+

+

Once we've created our first policy, we can set up DataHub to start authorizing requests using Ranger policies.

@@ -178,7 +186,11 @@ then follow the below sections to undo the configuration steps you have performe

The **ranger_datahub** service is shown in the screenshot below:

-

+

+

+  +

+

+

2. Delete the **datahub** plugin: execute the curl command below to delete the **datahub** plugin

Replace the variables in the curl command with the corresponding values

diff --git a/docs/how/updating-datahub.md b/docs/how/updating-datahub.md

index 2b6fd5571cc9e..7ba516c82cf1b 100644

--- a/docs/how/updating-datahub.md

+++ b/docs/how/updating-datahub.md

@@ -15,6 +15,9 @@ This file documents any backwards-incompatible changes in DataHub and assists pe

- #8300: Clickhouse source now inherited from TwoTierSQLAlchemy. In old way we have platform_instance -> container -> co

container db (None) -> container schema and now we have platform_instance -> container database.

- #8300: Added `uri_opts` argument; now we can add any options for clickhouse client.

+- #8659: BigQuery ingestion no longer creates DataPlatformInstance aspects by default.

+ This will only affect users that were depending on this aspect for custom functionality,

+ and can be enabled via the `include_data_platform_instance` config option.

## 0.10.5

diff --git a/docs/imgs/add-schema-tag.png b/docs/imgs/add-schema-tag.png

deleted file mode 100644

index b6fd273389c90..0000000000000

Binary files a/docs/imgs/add-schema-tag.png and /dev/null differ

diff --git a/docs/imgs/add-tag-search.png b/docs/imgs/add-tag-search.png

deleted file mode 100644

index a129f5eba4271..0000000000000

Binary files a/docs/imgs/add-tag-search.png and /dev/null differ

diff --git a/docs/imgs/add-tag.png b/docs/imgs/add-tag.png

deleted file mode 100644

index 386b4cdcd9911..0000000000000

Binary files a/docs/imgs/add-tag.png and /dev/null differ

diff --git a/docs/imgs/added-tag.png b/docs/imgs/added-tag.png

deleted file mode 100644

index 96ae48318a35a..0000000000000

Binary files a/docs/imgs/added-tag.png and /dev/null differ

diff --git a/docs/imgs/airflow/connection_error.png b/docs/imgs/airflow/connection_error.png

deleted file mode 100644

index c2f3344b8cc45..0000000000000

Binary files a/docs/imgs/airflow/connection_error.png and /dev/null differ

diff --git a/docs/imgs/airflow/datahub_lineage_view.png b/docs/imgs/airflow/datahub_lineage_view.png

deleted file mode 100644

index c7c774c203d2f..0000000000000

Binary files a/docs/imgs/airflow/datahub_lineage_view.png and /dev/null differ

diff --git a/docs/imgs/airflow/datahub_pipeline_entity.png b/docs/imgs/airflow/datahub_pipeline_entity.png

deleted file mode 100644

index 715baefd784ca..0000000000000

Binary files a/docs/imgs/airflow/datahub_pipeline_entity.png and /dev/null differ

diff --git a/docs/imgs/airflow/datahub_pipeline_view.png b/docs/imgs/airflow/datahub_pipeline_view.png

deleted file mode 100644

index 5b3afd13c4ce6..0000000000000

Binary files a/docs/imgs/airflow/datahub_pipeline_view.png and /dev/null differ

diff --git a/docs/imgs/airflow/datahub_task_view.png b/docs/imgs/airflow/datahub_task_view.png

deleted file mode 100644

index 66b3487d87319..0000000000000

Binary files a/docs/imgs/airflow/datahub_task_view.png and /dev/null differ

diff --git a/docs/imgs/airflow/entity_page_screenshot.png b/docs/imgs/airflow/entity_page_screenshot.png

deleted file mode 100644

index a782969a1f17b..0000000000000

Binary files a/docs/imgs/airflow/entity_page_screenshot.png and /dev/null differ

diff --git a/docs/imgs/airflow/find_the_dag.png b/docs/imgs/airflow/find_the_dag.png

deleted file mode 100644

index 37cda041e4b75..0000000000000

Binary files a/docs/imgs/airflow/find_the_dag.png and /dev/null differ

diff --git a/docs/imgs/airflow/finding_failed_log.png b/docs/imgs/airflow/finding_failed_log.png

deleted file mode 100644

index 96552ba1e1983..0000000000000

Binary files a/docs/imgs/airflow/finding_failed_log.png and /dev/null differ

diff --git a/docs/imgs/airflow/paused_dag.png b/docs/imgs/airflow/paused_dag.png

deleted file mode 100644

index c314de5d38d75..0000000000000

Binary files a/docs/imgs/airflow/paused_dag.png and /dev/null differ

diff --git a/docs/imgs/airflow/successful_run.png b/docs/imgs/airflow/successful_run.png

deleted file mode 100644

index b997cc7210ff6..0000000000000

Binary files a/docs/imgs/airflow/successful_run.png and /dev/null differ

diff --git a/docs/imgs/airflow/trigger_dag.png b/docs/imgs/airflow/trigger_dag.png

deleted file mode 100644

index a44999c929d4e..0000000000000

Binary files a/docs/imgs/airflow/trigger_dag.png and /dev/null differ

diff --git a/docs/imgs/airflow/unpaused_dag.png b/docs/imgs/airflow/unpaused_dag.png

deleted file mode 100644

index 8462562f31d97..0000000000000

Binary files a/docs/imgs/airflow/unpaused_dag.png and /dev/null differ

diff --git a/docs/imgs/apache-ranger/datahub-platform-access-policy.png b/docs/imgs/apache-ranger/datahub-platform-access-policy.png

deleted file mode 100644

index 7e3ff6fd372a9..0000000000000

Binary files a/docs/imgs/apache-ranger/datahub-platform-access-policy.png and /dev/null differ

diff --git a/docs/imgs/apache-ranger/datahub-plugin.png b/docs/imgs/apache-ranger/datahub-plugin.png

deleted file mode 100644

index 5dd044c014657..0000000000000

Binary files a/docs/imgs/apache-ranger/datahub-plugin.png and /dev/null differ

diff --git a/docs/imgs/apis/postman-graphql.png b/docs/imgs/apis/postman-graphql.png

deleted file mode 100644

index 1cffd226fdf77..0000000000000

Binary files a/docs/imgs/apis/postman-graphql.png and /dev/null differ

diff --git a/docs/imgs/apis/tutorials/column-description-added.png b/docs/imgs/apis/tutorials/column-description-added.png

deleted file mode 100644

index ed8cbd3bf5622..0000000000000

Binary files a/docs/imgs/apis/tutorials/column-description-added.png and /dev/null differ

diff --git a/docs/imgs/apis/tutorials/column-level-lineage-added.png b/docs/imgs/apis/tutorials/column-level-lineage-added.png

deleted file mode 100644

index 6092436e0a6a8..0000000000000

Binary files a/docs/imgs/apis/tutorials/column-level-lineage-added.png and /dev/null differ

diff --git a/docs/imgs/apis/tutorials/custom-properties-added.png b/docs/imgs/apis/tutorials/custom-properties-added.png

deleted file mode 100644

index a7e85d875045c..0000000000000

Binary files a/docs/imgs/apis/tutorials/custom-properties-added.png and /dev/null differ

diff --git a/docs/imgs/apis/tutorials/datahub-main-ui.png b/docs/imgs/apis/tutorials/datahub-main-ui.png

deleted file mode 100644

index b058e2683a851..0000000000000

Binary files a/docs/imgs/apis/tutorials/datahub-main-ui.png and /dev/null differ

diff --git a/docs/imgs/apis/tutorials/dataset-created.png b/docs/imgs/apis/tutorials/dataset-created.png

deleted file mode 100644

index 086dd8b7c9b16..0000000000000

Binary files a/docs/imgs/apis/tutorials/dataset-created.png and /dev/null differ

diff --git a/docs/imgs/apis/tutorials/dataset-deleted.png b/docs/imgs/apis/tutorials/dataset-deleted.png

deleted file mode 100644

index d94ad7e85195f..0000000000000