Replies: 3 comments 26 replies

I just realized that I never responded to this 😅! Thanks so much for taking the time to actually draw and outline this!

I would say, though, that the 2nd figure shown here shows the same attention matrix as the one above. So if it is clear from the Of course, you are free to multiply the matrices as

I agree, but this is a publisher convention. Originally, I had more explanations in the text, but I was asked to use figure annotations instead. Not much I can do here, unfortunately.

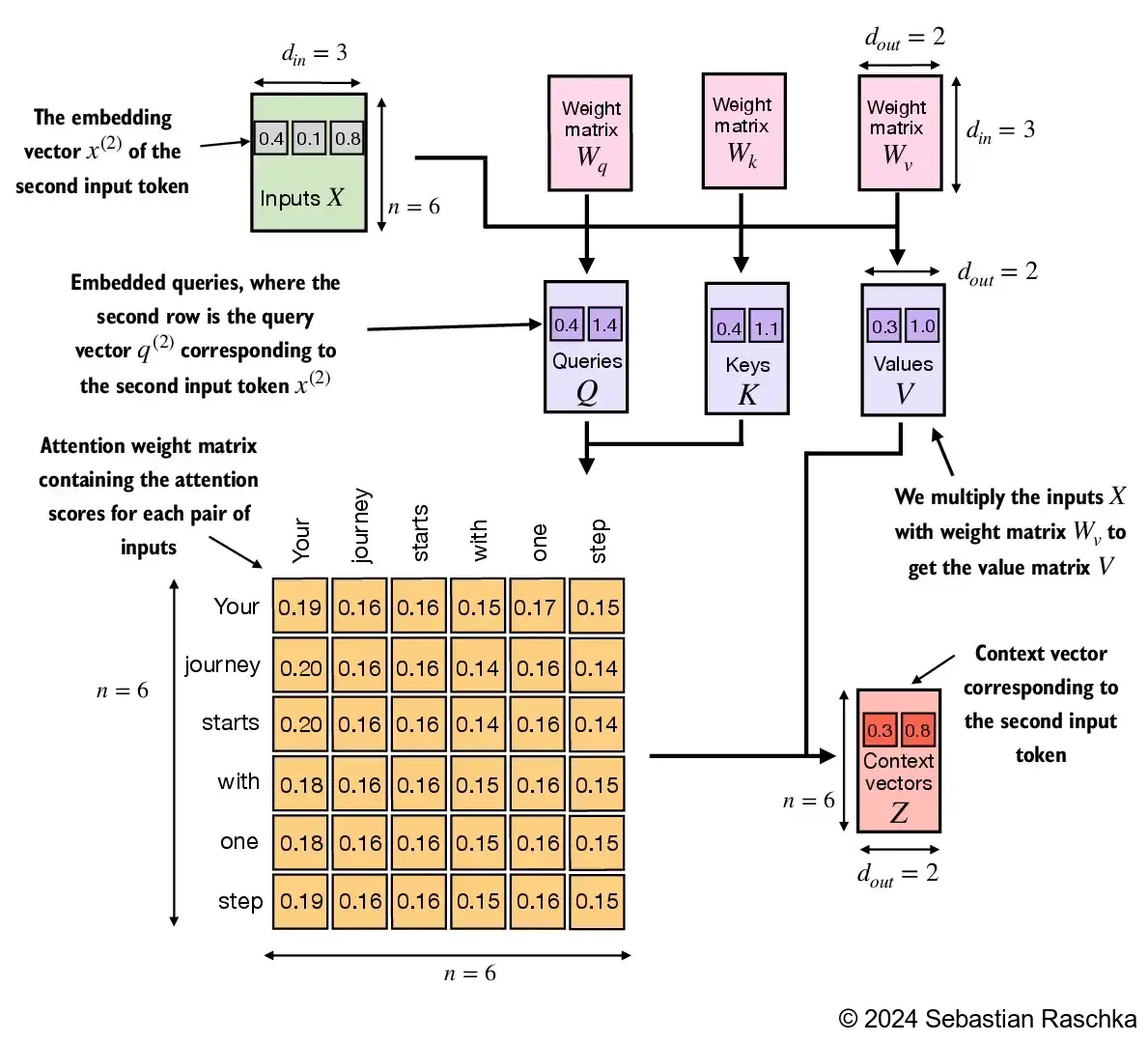

That's a good point, too! I originally rounded the values everywhere, but the editor suggested that the numbers in the figures have to be the same as in the code. So I then truncated the numbers everywhere instead of rounding them. But then yeah, it gets tricky in cases like this. The truncation is mentioned in the first figure where it is applied, i.e., the figure caption says "(Note that the numbers in the figure are truncated to one digit after the decimal point to reduce visual clutter.)" Maybe I should copy & paste that phrase to the other figures as well.
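To make the truncation effect concrete, here is a minimal sketch in plain Python. The weights are hypothetical values chosen for illustration (they are not taken from the book's figures), and `truncate` is a helper name made up for this example. It shows how a row that sums to 1.0 exactly can appear to sum to noticeably less once each entry is truncated to one decimal place.

```python
import math

# Hypothetical attention weights for one row (illustrative only,
# not the values from the book's figures). They sum to 1.0.
weights = [0.19, 0.29, 0.18, 0.34]


def truncate(x, digits=1):
    """Cut off (not round) a non-negative number after `digits` decimals."""
    factor = 10 ** digits
    return math.floor(x * factor) / factor


truncated = [truncate(w) for w in weights]
rounded = [round(w, 1) for w in weights]

print("exact:    ", weights, "sum =", round(sum(weights), 4))
print("truncated:", truncated, "sum =", round(sum(truncated), 4))
print("rounded:  ", rounded, "sum =", round(sum(rounded), 4))
```

Rounding happens to restore the row sum for these particular values, but that is not guaranteed in general; either way, the displayed one-decimal numbers are only an approximation of the values in the code.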

I just had a discussion with Grant from 3Blue1Brown, and I want to point out an important aspect that, in my opinion, is missing from the visualizations and might matter for other readers' understanding as well:

3.4.2 Implementing a compact SelfAttention class

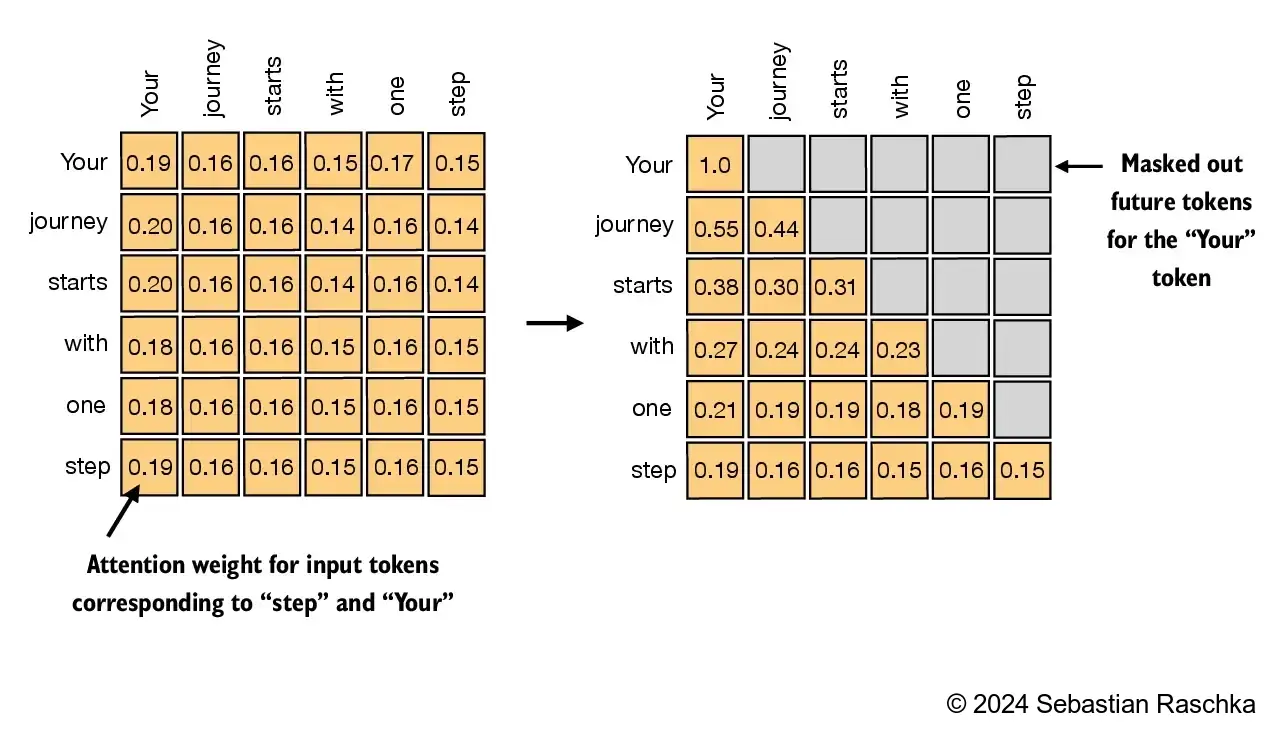

3.5 Hiding future words with causal attention

(P.S.: The normalized attention weights in the last graphic also don't add up row-wise to exactly 1.0 in most cases; maybe this could be fixed too.)

From the code, you can understand that the queries are multiplied by the keys:

I think it would be good in the last graphic to indicate with axes labels that the queries are the rows, whereas the keys are the columns - because sometimes you see visualizations where the attention weight table is transposed, like here.
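As a rough illustration of the row/column convention, here is a small self-contained sketch in plain Python (no PyTorch), using made-up toy values for `queries` and `keys`. It assumes the usual scaled dot-product form, where the scores are the equivalent of `queries @ keys.T` and softmax is applied along each row. Under that assumption, entry `[i][j]` is query `i` attending to key `j`, and each row sums to 1. In the transposed layout, it is the columns that sum to 1 instead.

```python
import math

# Hypothetical toy inputs: 3 tokens with 2-dimensional queries and keys.
queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
keys = [[0.5, 0.1], [0.2, 0.9], [0.7, 0.3]]


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


d_k = len(keys[0])

# scores[i][j] = dot(query i, key j): rows index queries, columns index keys.
# This is the plain-Python equivalent of `queries @ keys.T`.
scores = [
    [sum(q * k for q, k in zip(query, key)) for key in keys]
    for query in queries
]

# Scale by sqrt(d_k), then normalize each row (one row per query).
attn_weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]

# The transposed layout some visualizations use: rows are keys, columns are queries.
transposed = [list(col) for col in zip(*attn_weights)]

row_sums = [sum(row) for row in attn_weights]
col_sums_transposed = [
    sum(row[j] for row in transposed) for j in range(len(transposed[0]))
]

print("row sums (queries as rows):   ", [round(s, 6) for s in row_sums])
print("column sums (transposed view):", [round(s, 6) for s in col_sums_transposed])
```

Checking whether the rows or the columns sum to 1 is a quick way to tell which convention a given attention-weight table follows when the axes are not labeled.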

Quick draft:
