diff --git a/.readthedocs.yaml b/.readthedocs.yaml

new file mode 100644

index 00000000..ed48a523

--- /dev/null

+++ b/.readthedocs.yaml

@@ -0,0 +1,16 @@

+# .readthedocs.yaml

+# Read the Docs configuration file

+# See https://docs.readthedocs.io/en/stable/config-file/v2.html for details

+

+# Required

+version: 2

+

+# Set the version of Python and other tools you might need

+build:

+ os: ubuntu-22.04

+ tools:

+ python: "3.11"

+

+mkdocs:

+ configuration: mkdocs.yml

+ fail_on_warning: false

diff --git a/docs/api.md b/docs/api.md

index 8b137804..b9df4ded 100644

--- a/docs/api.md

+++ b/docs/api.md

@@ -3,11 +3,13 @@

REST APIs provide programmatic ways to submit new jobs and to download data from both [Michigan Imputation Server](https://imputationserver.sph.umich.edu) and the [TOPMed Imputation Server](https://imputation.biodatacatalyst.nhlbi.nih.gov). The API identifies users by authentication tokens, and responses are provided in JSON format.

## Authentication

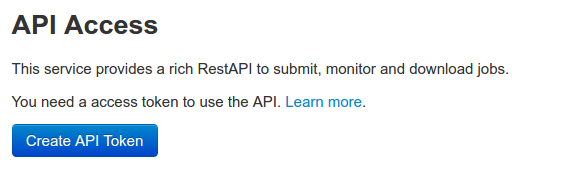

-Both Michigan and TOPMed Imputation Server use a token-based authentication mechanism. The token is required for all future interaction with the server. The token can be created and downloaded from your user profile (username -> Profile):

+The TOPMed Imputation Server uses token-based authentication for all automated scripts that use our APIs. The token must be sent with every API request (in the `X-Auth-Token` header, as shown in the examples below). The token can be created and downloaded from your [user profile](https://imputation.biodatacatalyst.nhlbi.nih.gov/#!pages/profile) (username -> Profile):

-_**Note:** the tokens from Michigan Imputation Server and TOPMed Imputation Server are unique to each server_

+!!! note

+ Tokens expire after 30 days. **Please keep your token secure** and never commit it to a public repository (such as GitHub). We reserve the right to revoke API credentials at any time if an automated script is causing problems.

+
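One way to follow the advice above is to keep the token out of the script entirely and read it from an environment variable. A minimal sketch, assuming a variable named `TOPMED_API_TOKEN` (a hypothetical name; any name works) and a hypothetical helper `load_token`:

```python
import os

# Hypothetical variable name: choose whatever suits your environment.
TOKEN_VAR = 'TOPMED_API_TOKEN'


def load_token(var=TOKEN_VAR):
    """Return the API token from an environment variable so it never appears in the script."""
    token = os.environ.get(var, '').strip()
    if not token:
        raise RuntimeError('Please set the {} environment variable to your API token'.format(var))
    return token
```

The header can then be built as in the examples, e.g. `headers = {'X-Auth-Token': load_token()}`. Because tokens expire after 30 days, a renewed token only needs to be updated in the environment, not in the script.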

## Job Submission

The API allows setting several imputation parameters.

@@ -15,156 +17,113 @@ The API allows setting several imputation parameters.

### TOPMed Imputation Server Job Submission

URL: https://imputation.biodatacatalyst.nhlbi.nih.gov/api/v2

-POST /jobs/submit/imputationserver@1.7.3

+POST /jobs/submit/imputationserver

The following parameters can be set:

-| Parameter | Values | Default Value | Required |
-| ----------- |:--------------------------------------------------| :-----|---|
-| job-name | (user specified) | | |
-| files | /path/to/file | | **x** |
-| mode | `qconly`<br>`phasing`<br>`imputation` | `imputation` | |
-| refpanel | `apps@topmed-r2@1.0.0` | - | **x** |
-| phasing | `eagle`<br>`no_phasing` | `eagle` | |
-| build | `hg19`<br>`hg38` | `hg19` | |
-| r2Filter | `0`<br>`0.001`<br>`0.1`<br>`0.2`<br>`0.3` | `0` | |

-

+| Parameter | Values | Default Value | Required |
+|---------------|---------------------------------------------------|---------------|----------|
+| job-name | (user specified) | | |
+| files | /path/to/file | | **x** |
+| mode | `qconly`<br>`phasing`<br>`imputation` | `imputation` | **x** |
+| refpanel | `apps@topmed-r2` | - | **x** |
+| phasing | `eagle`<br>`no_phasing` | `eagle` | |
+| build | `hg19`<br>`hg38` | `hg19` | |
+| r2Filter | `0`<br>`0.001`<br>`0.1`<br>`0.2`<br>`0.3` | `0` | |
+| aesEncryption | `no`<br>`yes` | `no` | |
+| meta | `no`<br>`yes` | `no` | |

+

+* The _meta_ option generates a meta-imputation file.

+* AES 256 encryption is stronger than the default option, but `.zip` files using AES 256 cannot be opened with common decompression programs. If you select this option, you will need a tool such as [7-zip](https://www.7-zip.org/download.html) to open your results.
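Uploads can be large, so a quick client-side check of the form fields against the values in the parameter table can catch typos before any data is sent. A minimal sketch: `ALLOWED` copies the enumerated values from the table, and `check_params` is a hypothetical helper, not part of the API. It only checks that `refpanel` is present and that enumerated fields use listed values; the server still performs its own validation.

```python
# Enumerated values accepted for each form field, copied from the parameter table above.
ALLOWED = {
    'mode': {'qconly', 'phasing', 'imputation'},
    'refpanel': {'apps@topmed-r2'},
    'phasing': {'eagle', 'no_phasing'},
    'build': {'hg19', 'hg38'},
    'r2Filter': {'0', '0.001', '0.1', '0.2', '0.3'},
    'aesEncryption': {'no', 'yes'},
    'meta': {'no', 'yes'},
}


def check_params(data):
    """Return a list of problems in a job-submission form dict (empty if none found)."""
    problems = []
    if 'refpanel' not in data:
        problems.append('refpanel is required')
    for key, value in data.items():
        allowed = ALLOWED.get(key)
        # Fields not in the table (e.g. job-name, population) are passed through unchecked.
        if allowed is not None and str(value) not in allowed:
            problems.append('{}={!r} is not one of {}'.format(key, value, sorted(allowed)))
    return problems
```

For example, call `check_params(data)` before submitting and stop if the returned list is not empty.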

### Examples

### Examples: curl

-#### Submit a single file using TOPMed

+#### Submit file(s) using TOPMed

-To submit a job please change `/path-to-file` to the actual path.

-

-```sh

-curl -H "X-Auth-Token: " -F "input-files=@/path-to-file" -F "input-refpanel=apps@topmed-r2@1.0.0" -F "input-phasing=eagle" https://imputation.biodatacatalyst.nhlbi.nih.gov/api/v2/jobs/submit/imputationserver@1.7.3

-```

-

-#### Submit multiple files using 1000 Genomes Phase 3

-

-Submits multiple vcf files and impute against 1000 Genomes Phase 3 reference panel.

-

-Command:

+To submit a job, replace the example paths (`/path-to/filename_chr1.vcf.gz` and `/path-to/filename_chr2.vcf.gz`) with the actual paths to your files. This example can be adapted to send one or more files (one per chromosome).

```sh

TOKEN="YOUR-API-TOKEN";

-curl https://imputationserver.sph.umich.edu/api/v2/jobs/submit/minimac4 \

- -H "X-Auth-Token: $TOKEN" \

- -F "files=@/path-to/file1.vcf.gz" \

- -F "files=@/path-to/file2.vcf.gz" \

- -F "refpanel=apps@1000g-phase-3-v5" \

- -F "population=eur"

+curl https://imputation.biodatacatalyst.nhlbi.nih.gov/api/v2/jobs/submit/imputationserver \

+ -X "POST" \

+ -H "X-Auth-Token: ${TOKEN}" \

+ -F "job-name=Documentation example (1000G - chr1 and 2)" \

+ -F "files=@/path-to/filename_chr1.vcf.gz" \

+ -F "files=@/path-to/filename_chr2.vcf.gz" \

+ -F "refpanel=apps@topmed-r2" \

+ -F "build=hg38" \

+ -F "phasing=eagle" \

+ -F "population=all" \

+ -F "meta=yes"

```

-Response:

-

-```json

-{

- "id":"job-20120504-155023",

- "message":"Your job was successfully added to the job queue.",

- "success":true

-}

-```

-

-

-#### Submit file from a HTTP(S)

-

-Submits files from https with HRC reference panel and quality control.

-

-Command:

-

-```sh

-TOKEN="YOUR-API-TOKEN";

-

-curl https://imputationserver.sph.umich.edu/api/v2/jobs/submit/minimac4 \

- -H "X-Auth-Token: $TOKEN" \

- -F "files=https://imputationserver.sph.umich.edu/static/downloads/hapmap300.chr1.recode.vcf.gz" \

- -F "files-source=http" \

- -F "refpanel=apps@hrc-r1.1" \

- -F "population=eur" \

- -F "mode=qconly"

-```

Response:

```json

{

- "id":"job-20120504-155023",

+ "id":"job-20160101-000001",

"message":"Your job was successfully added to the job queue.",

"success":true

}

```

-

### Examples: Python

-#### Submit single vcf file

+#### Submit one or more vcf files

-```python

-import requests

+```python

import json

-# imputation server url

-url = 'https://imputationserver.sph.umich.edu/api/v2'

-token = 'YOUR-API-TOKEN';

-

-# add token to header (see Authentication)

-headers = {'X-Auth-Token' : token }

-data = {

- 'refpanel': 'apps@1000g-phase-3-v5',

- 'population': 'eur'

-}

-

-# submit new job

-vcf = '/path/to/genome.vcf.gz';

-files = {'files' : open(vcf, 'rb')}

-r = requests.post(url + "/jobs/submit/minimac4", files=files, data=data, headers=headers)

-if r.status_code != 200:

- print(r.json()['message'])

- raise Exception('POST /jobs/submit/minimac4 {}'.format(r.status_code))

-

-# print response and job id

-print(r.json()['message'])

-print(r.json()['id'])

-```

-

-#### Submit multiple vcf files

-

-```python

import requests

-import json

# imputation server url

-url = 'https://imputationserver.sph.umich.edu/api/v2'

+base = 'https://imputation.biodatacatalyst.nhlbi.nih.gov/api/v2'

token = 'YOUR-API-TOKEN';

-# add token to header (see Authentication)

+# add token to header (see documentation for Authentication)

headers = {'X-Auth-Token' : token }

data = {

- 'refpanel': 'apps@1000g-phase-3-v5',

- 'population': 'eur'

+ 'job-name': 'Documentation example (1000G - chr1 and 2)',

+ 'refpanel': 'apps@topmed-r2',

+ 'population': 'all',

+ 'build': 'hg38',

+ 'phasing': 'eagle',

+ 'r2Filter': 0,

+ 'meta': 'yes',

}

-# submit new job

-vcf = '/path/to/file1.vcf.gz';

-vcf1 = '/path/to/file2.vcf.gz';

-files = [('files', open(vcf, 'rb')), ('files', open(vcf1, 'rb'))]

-r = requests.post(url + "/jobs/submit/minimac4", files=files, data=data, headers=headers)

-if r.status_code != 200:

- print(r.json()['message'])

- raise Exception('POST /jobs/submit/minimac4 {}'.format(r.status_code))

-

-# print message

-print(r.json()['message'])

-print(r.json()['id'])

+# submit new job. This demonstrates multiple files, one per chromosome. Edit to send one or more chromosomes, as needed.

+vcf1 = '/path/to/filename_chr1.vcf.gz'

+vcf2 = '/path/to/filename_chr2.vcf.gz'

+

+with open(vcf1, 'rb') as f1, open(vcf2, 'rb') as f2:

+ files = [

+ ('files', f1),

+ ('files', f2)

+ ]

+

+ endpoint = "/jobs/submit/imputationserver"

+ resp = requests.post(base + endpoint, files=files, data=data, headers=headers)

+

+output = resp.json()

+

+if resp.status_code != 200:

+ print(output['message'])

+ raise Exception('POST {} {}'.format(endpoint, resp.status_code))

+else:

+ # print message

+ print(output['message'])

+ print(output['id'])

```
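The example above hard-codes two open files. For any number of per-chromosome files, `contextlib.ExitStack` can open them all and guarantee they are closed even if the upload fails. A minimal sketch, assuming `post` behaves like `requests.post` (pass `requests.post` itself; it is a parameter only so the function can be tested without a network); `submit_job` is a hypothetical helper name:

```python
import contextlib

BASE = 'https://imputation.biodatacatalyst.nhlbi.nih.gov/api/v2'
ENDPOINT = '/jobs/submit/imputationserver'


def submit_job(paths, data, headers, post):
    """Upload any number of VCF files (e.g. one per chromosome) as a single job.

    `post` is expected to behave like requests.post.
    """
    with contextlib.ExitStack() as stack:
        # Every file is sent under the same 'files' form field, as in the examples above.
        files = [('files', stack.enter_context(open(p, 'rb'))) for p in paths]
        resp = post(BASE + ENDPOINT, files=files, data=data, headers=headers)
    output = resp.json()
    if resp.status_code != 200:
        raise RuntimeError('POST {} {}: {}'.format(ENDPOINT, resp.status_code, output.get('message')))
    return output['id']
```

Usage, reusing `data` and `headers` from the example above: `job_id = submit_job(sorted(glob.glob('/path/to/filename_chr*.vcf.gz')), data, headers, post=requests.post)`.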

## List all jobs

-All running jobs can be returned as JSON objects at once.

+Return a list of jobs for the user associated with the provided token.

+

### GET /jobs

### Examples: curl

@@ -174,101 +133,123 @@ Command:

```sh

TOKEN="YOUR-API-TOKEN";

-curl -H "X-Auth-Token: $TOKEN" https://imputationserver.sph.umich.edu/api/v2/jobs

+curl -H "X-Auth-Token: ${TOKEN}" https://imputation.biodatacatalyst.nhlbi.nih.gov/api/v2/jobs

```

Response:

```json

-[

- {

- "applicationId":"minimac",

- "executionTime":0,

- "id":"job-20160504-155023",

- "name":"job-20160504-155023",

- "positionInQueue":0,

- "running":false,

- "state":5

- },{

- "applicationId":"minimac",

- "executionTime":0,

- "id":"job-20160420-145809",

- "name":"job-20160420-145809",

- "positionInQueue":0,

- "running":false,

- "state":5

- },{

- "applicationId":"minimac",

- "executionTime":0,

- "id":"job-20160420-145756",

- "name":"job-20160420-145756",

- "positionInQueue":0,

- "running":false,

- "state":5

- }

-]

+{

+ "count": 1,

+ "page": 1,

+ "pages": [

+ 1

+ ],

+ "data": [

+ {

+ "app": null,

+ "application": "Genotype Imputation (Minimac4) 1.7.3",

+ "canceld": false,

+ "complete": true,

+ "currentTime": 1687898833855,

+ "endTime": 0,

+ "finishedOn": 1687898825867,

+ "id": "job-20230627-204701-307",

+ "name": "job-20230627-204701-307",

+ "positionInQueue": -1,

+ "priority": 0,

+ "progress": -1,

+ "setupEndTime": 1687898822002,

+ "setupRunning": false,

+ "setupStartTime": 1687898821563,

+ "startTime": 0,

+ "state": 5,

+ "submittedOn": 1687898821315,

+ "userAgent": "curl/7.87.0",

+ "workspaceSize": ""

+ }

+ ]

+}

```

### Example: Python

```python

-import requests

import json

+import requests

+

# imputation server url

-url = 'https://imputationserver.sph.umich.edu/api/v2'

+url = 'https://imputation.biodatacatalyst.nhlbi.nih.gov/api/v2'

token = 'YOUR-API-TOKEN';

# add token to header (see authentication)

headers = {'X-Auth-Token' : token }

-# get all jobs

-r = requests.get(url + "/jobs", headers=headers)

-if r.status_code != 200:

- raise Exception('GET /jobs/ {}'.format(r.status_code))

+# get all jobs for the user associated with this token

+endpoint = "/jobs"

+resp = requests.get(url + endpoint, headers=headers)

+if resp.status_code != 200:

+ raise Exception('GET {} {}'.format(endpoint, resp.status_code))

# print all jobs

-for job in r.json():

+for job in resp.json()['data']:

print('{} [{}]'.format(job['id'], job['state']))

```
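The `data` array in this response can be filtered by the `state` codes described under Monitor Job Status. A minimal sketch: `active_job_ids` is a hypothetical helper that treats codes 1, 2 and 3 (waiting, running, waiting to export results) as still in progress. Because the response appears to be paginated (`page`, `pages`), it only inspects the jobs in the page that was returned.

```python
# Codes 1-3 (waiting, running, waiting to export results) mean the job has not finished.
ACTIVE_STATES = {1, 2, 3}


def active_job_ids(jobs_response):
    """Return the IDs of jobs in a GET /jobs response that are still in progress."""
    return [job['id'] for job in jobs_response['data'] if job['state'] in ACTIVE_STATES]
```

For example, `active_job_ids(resp.json())` after the request above.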

## Monitor Job Status

### /jobs/{id}/status

+This endpoint returns basic information about a job, such as its execution status in the `state` field (1 = waiting, 2 = running, 3 = waiting to export results, 4 = success, 5 = failed, 6 = canceled). See `/jobs/{id}` for another endpoint that reports the progress of individual steps, similar to the logs in the UI.

### Example: curl

+Replace the example job ID (`job-20160101-000001`) with your own. This endpoint is commonly used to monitor the status of one specific submitted job. To avoid overwhelming the server, we ask that you rate-limit your automated scripts to check no more often than once per minute (rate limiting is not included in the example below).

+

Command:

```sh

TOKEN="YOUR-API-TOKEN";

-curl -H "X-Auth-Token: $TOKEN" https://imputationserver.sph.umich.edu/api/v2/jobs/job-20160504-155023/status

+curl -H "X-Auth-Token: $TOKEN" https://imputation.biodatacatalyst.nhlbi.nih.gov/api/v2/jobs/job-20160101-000001/status

```

Response:

```json

{

- "application":"Michigan Imputation Server (Minimac4) 1.1.4",

- "applicationId":"minimac4",

- "deletedOn":-1,

- "endTime":1462369824173,

- "executionTime":0,

- "id":"job-20160504-155023",

- "logs":"",

- "name":"job-20160504-155023",

- "outputParams":[],

- "positionInQueue":0,

- "running":false,

- "startTime":1462369824173,

- "state":5

- ,"steps":[]

+ "app": null,

+ "application": "Genotype Imputation (Minimac4) 1.7.3",

+ "applicationId": "imputationserver",

+ "canceld": false,

+ "complete": true,

+ "currentTime": 1687898968305,

+ "deletedOn": -1,

+ "endTime": 0,

+ "finishedOn": 1687898825867,

+ "id": "job-20160101-000001",

+ "logs": "",

+ "name": "job-20160101-000001",

+ "outputParams": [],

+ "positionInQueue": -1,

+ "priority": 0,

+ "progress": -1,

+ "running": false,

+ "setupEndTime": 1687898822002,

+ "setupRunning": false,

+ "setupStartTime": 1687898821563,

+ "startTime": 0,

+ "state": 5,

+ "steps": [],

+ "submittedOn": 1687898821315,

+ "workspaceSize": ""

}

```
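Because the curl example does not rate-limit, here is a minimal polling sketch in Python that checks the status at most once per minute. Assumptions: it uses only the standard library (`urllib.request`) instead of `requests`; `get_status` and `wait_for_job` are hypothetical helper names; and any `state` other than 1, 2 or 3 is treated as final. The `fetch` and `sleep` parameters exist only so the loop can be tested offline.

```python
import json
import time
import urllib.request

BASE = 'https://imputation.biodatacatalyst.nhlbi.nih.gov/api/v2'

# Codes from the description above; 1-3 mean the job is still in progress.
STATE_NAMES = {1: 'waiting', 2: 'running', 3: 'waiting to export results',
               4: 'success', 5: 'failed', 6: 'canceled'}
ACTIVE_STATES = {1, 2, 3}
MIN_INTERVAL = 60  # seconds: check no more often than once per minute


def get_status(job_id, token):
    """GET /jobs/{id}/status and return the decoded JSON body."""
    req = urllib.request.Request('{}/jobs/{}/status'.format(BASE, job_id),
                                 headers={'X-Auth-Token': token})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def wait_for_job(job_id, token, interval=MIN_INTERVAL, fetch=get_status, sleep=time.sleep):
    """Poll until the job leaves the active states, then return its final state code."""
    if interval < MIN_INTERVAL:
        raise ValueError('please poll no more often than once per minute')
    while True:
        state = fetch(job_id, token)['state']
        print('{}: {}'.format(job_id, STATE_NAMES.get(state, 'state {}'.format(state))))
        if state not in ACTIVE_STATES:
            return state
        sleep(interval)
```

For example, `final = wait_for_job('job-20160101-000001', token)` blocks until the job finishes, and `final == 4` indicates success.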

## Monitor Job Details

+This endpoint returns more detailed information about a job and its completed steps, similar to what is shown on the job details page of the website. It is generally less helpful for automated scripts, since the same information is easier to read in the website UI.

+

### /jobs/{id}

### Example: curl

@@ -276,5 +257,5 @@ Response:

```sh

TOKEN="YOUR-API-TOKEN";

-curl -H "X-Auth-Token: $TOKEN" https://imputationserver.sph.umich.edu/api/v2/jobs/job-20160504-155023/

+curl -H "X-Auth-Token: $TOKEN" https://imputation.biodatacatalyst.nhlbi.nih.gov/api/v2/jobs/job-20160101-000001/

```

diff --git a/docs/contact.md b/docs/contact.md

index 4582ed91..9bb1a928 100644

--- a/docs/contact.md

+++ b/docs/contact.md

@@ -2,23 +2,15 @@

The complete Imputation Server source code is available on [GitHub](https://github.com/genepi/imputationserver). Feel free to create issues and pull requests. Before contacting us, please check the [FAQ page](/faq).

-Please contact [Michigan Imputation Server](mailto:imputationserver@umich.edu) in case of other problems.

+Please contact the [helpdesk](mailto:imputationserver@umich.edu) in case of other questions or issues.

## TOPMed Imputation Server Team

[TOPMed Imputation Server](https://imputation.biodatacatalyst.nhlbi.nih.gov) provides free genotype imputation for the TOPMed reference panel. You can upload phased or unphased GWAS genotypes and receive phased and imputed genomes in return. An extensive QC is performed on all uploaded data sets.

+* [Helpdesk](mailto:imputationserver@umich.edu) (**use this address for all inquiries**)

* [Albert Smith](mailto:albertvs@umich.edu)

-* [Jacob Pleiness](mailto:pleiness@umich.edu)

-

-## Michigan Imputation Server Team

-

-[Michigan Imputation Server](https://imputationserver.sph.umich.edu) provides a free genotype imputation service using Minimac4, supporting imputation 1000 Genome and Haplotype Reference Consortium Panels . You can upload phased or unphased GWAS genotypes and receive phased and imputed genomes in return. For all uploaded data sets an extensive QC is performed.

-

-* [Christian Fuchsberger](mailto:cfuchsb@umich.edu)

-* [Lukas Forer](mailto:lukas.forer@i-med.ac.at)

-* [Sebastian Schönherr](mailto:sebastian.schoenherr@i-med.ac.at)

-* [Sayantan Das](mailto:sayantan@umich.edu)

+* [Andrew Boughton](mailto:abought@umich.edu)

* [Gonçalo Abecasis](mailto:goncalo@umich.edu)

diff --git a/docs/data-sensitivity.md b/docs/data-sensitivity.md

index d763a9a4..645d2f5d 100644

--- a/docs/data-sensitivity.md

+++ b/docs/data-sensitivity.md

@@ -1,13 +1,15 @@

# Data Security

-For TOPMed Imputation, data is transfered to a secure server hosted on Amazon Web Servies, a wide array of security measures are in force:

+For TOPMed Imputation, data is transferred to a secure server hosted on Amazon Web Services. As of May 2023, we have completed a rigorous security review and received a federal Authorization to Operate (ATO) from NIH/NHLBI. A wide array of security measures are in force:

-- The complete interaction with the server is secured with HTTPS.

+- All traffic to and from the server is secured with HTTPS.

- Input data is deleted from our servers as soon as it is no longer needed.

-- We only store the number of samples and markers analyzed, we don't ever "look" at your data in anyway.

-- All results are encrypted with a strong one-time password - thus, only you can read them.

-- After imputation is finished, the data uploader has 7 days to use an encrypted connection to get results back.

-- The complete source code is available in a [public Github repository](https://github.com/genepi/imputationserver/tree/qc-refactoring).

+- We only store the number of samples and markers analyzed. We don't ever "look" at your data in any way.

+- All results are encrypted with a strong one-time password. We do not retain this password: only you can read the results.

+- After imputation is finished, the user has 7 days to download the results, after which they are automatically deleted.

+- The complete source code is available in public GitHub repositories:

+ - [Imputation pipeline](https://github.com/statgen/imputationserver/)

+ - [Web application](https://github.com/statgen/cloudgene)

## Who has access?

@@ -20,9 +22,9 @@ To upload and download data, users must register with a unique e-mail address an

A wide array of security measures are in force on the imputation servers:

- All stored data is encrypted at rest using FIPS 140-2 validated cryptographic software as well as encrypted in transit.

-- Access controls follow principles of least privilege. All administrative access is secured via two-factor authentication using roll-based access controls and temporary credentials.

+- Access controls follow the principle of least privilege. All administrative access is secured via two-factor authentication using role-based access controls and temporary credentials.

- Network access is restricted and filtered via web application firewalls, network access control lists, and security groups. Public/private network segmentation also ensures that only the services that need to be public are exposed to the internet. All internal traffic and requests are logged and scanned for malicious or unusual activity.

-- Advanced DDOS protection is in place to assure consistent site availability.

+- Advanced DDoS protection is in place to assure consistent site availability.

- All administrative user activities, system activities, and network traffic are logged and scanned for anomalies and malicious activity. Administrative users are alerted to any findings.

diff --git a/docs/faq.md b/docs/faq.md

index 68075718..77f1d9d1 100644

--- a/docs/faq.md

+++ b/docs/faq.md

@@ -1,22 +1,31 @@

# Frequently Asked Questions

## I did not receive a password for my imputation job

-Michigan Imputation Server creates a random password for each imputation job. This password is not stored on server-side at any time. If you didn't receive a password, please check your Mail SPAM folder. Please note that we are not able to re-send you the password.

+The TOPMed Imputation Server creates a random password for each imputation job. This password is never stored on the server. If you didn't receive a password, please check your spam folder. We are not able to re-send the password; if you lose it, you will need to re-run your imputation job.

+

+## I would like to impute more than 25,000 samples

+Due to server resource requirements, the Imputation Server will not accept jobs that are very small or very large (< 20 or > 25,000 samples).

+

+This limit exists to preserve quality of service for a wide audience. A workaround is to break large jobs into multiple chunks of at most 25,000 samples each. After completion, the results can be re-merged using [hds-util](https://github.com/statgen/hds-util) to combine the chunks and calculate the corrected R2.
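When splitting, dividing the samples into near-equal chunks avoids a tiny leftover chunk that would fall below the 20-sample minimum: 50,010 samples split naively into 25,000 + 25,000 + 10 would leave an unusable last chunk, while three chunks of 16,670 satisfy both limits. A minimal sketch of that arithmetic only (`split_samples` is a hypothetical helper; re-merging is handled by hds-util). Each chunk's sample IDs could then be written to a text file and used with a tool such as `bcftools view -S` to subset the VCFs.

```python
import math


def split_samples(samples, max_size=25000, min_size=20):
    """Split sample IDs into near-equal chunks that respect the server's size limits."""
    n = len(samples)
    if n < min_size:
        raise ValueError('{} samples is below the minimum of {}'.format(n, min_size))
    # Use the fewest chunks that keep every chunk at or below max_size ...
    n_chunks = math.ceil(n / max_size)
    # ... and spread the samples evenly, giving the first `extra` chunks one extra sample.
    base, extra = divmod(n, n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        size = base + (1 if i < extra else 0)
        chunks.append(samples[start:start + size])
        start += size
    return chunks
```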

+

+If you have a use case that routinely requires smaller or larger jobs, please [contact us](/contact) with details.

+

+## I would like to impute WGS data, but the server says I have too many variants

+The Imputation Server is meant to fill in the gaps between sites on a genotyping array, not to impute WGS data. Due to server resource requirements, imputing samples with many variants (more than 20,000 in any given 10 Mb chunk) is not supported.

+

+If you have high-coverage WGS (e.g. 50x), you would get little to no benefit from imputing on the server.

## Unzip command is not working

-Please check the following points: (1) When selecting AES256 encryption, please use 7z to unzip your files (Debian: `sudo apt-get install p7zip-full`). For our default encryption all common programs should work. (2) If your password includes special characters (e.g. \\), please put single or double quotes around the password when extracting it from the command line (e.g. `7z x -p"PASSWORD" chr_22.zip`).

+Please check the following points: (1) When selecting AES256 encryption, please use [7z](https://www.7-zip.org/download.html) to unzip your files (Debian: `sudo apt-get install p7zip-full`). For the default encryption option, all common `.zip` decompression programs should work. (2) If your password includes special characters (e.g. \\), please put single or double quotes around the password when extracting it from the command line (e.g. `7z x -p"PASSWORD" chr_22.zip`).

## Extending expiration date or reset download counter

-Your data is available for 7 days. In case you need an extension, please let [us](/contact) know.

+Your data is available for 7 days. If you need an extension, please [let us know](/contact).

## How can I improve the download speed?

-[aria2](https://aria2.github.io/) tries to utilize your maximum download bandwidth. Please keep in mind to raise the k parameter significantly (-k, --min-split-size=SIZE). You will otherwise hit the Michigan Imputation Server download limit for each file (thanks to Anthony Marcketta for point this out).

+[aria2](https://aria2.github.io/) tries to utilize your maximum download bandwidth. Remember to raise the `k` parameter significantly (`-k`, `--min-split-size=SIZE`). Otherwise, you will hit the Imputation Server download limit for each file (thanks to Anthony Marcketta for pointing this out).

## Can I download all results at once?

We provide a `wget` command for all results. Please open the results tab; the last column in each row includes direct links to all files.

-## Can I set up Michigan Imputation Server locally?

-We are providing a single-node Docker image that can be used to impute from Hapmap2 and 1000G Phase3 locally. Click [here](/docker) to give it a try. For usage in production, we highly recommend setting up a Hadoop cluster.

-

-## Your web service looks great. Can I set up my own web service as well?

-All web service functionality is provided by [Cloudgene](http://www.cloudgene.io/). Please contact us, in case you want to set up your own service.

+## My company requires security review to use an external service

+Due to our small team size, it is difficult for us to complete detailed security questionnaires for every company or entity. However, as of May 2023, we have completed a rigorous external security review and received federal Authorization to Operate (ATO) from NHLBI/NIH. Please see our [security](/data-sensitivity) documentation for some common information, or [contact us](/contact) for specific questions.

diff --git a/docs/getting-started.md b/docs/getting-started.md

index df6e9aae..6baf0885 100644

--- a/docs/getting-started.md

+++ b/docs/getting-started.md

@@ -10,9 +10,9 @@ Please cite this paper if you use the Imputation Server in your GWAS study:

> Das S, Forer L, Schönherr S, Sidore C, Locke AE, Kwong A, Vrieze S, Chew EY, Levy S, McGue M, Schlessinger D, Stambolian D, Loh PR, Iacono WG, Swaroop A, Scott LJ, Cucca F, Kronenberg F, Boehnke M, Abecasis GR, Fuchsberger C. [Next-generation genotype imputation service and methods](https://www.ncbi.nlm.nih.gov/pubmed/27571263). Nature Genetics 48, 1284–1287 (2016).

-## Setup your first imputation job

+## Set up your first imputation job

-Please [login](https://imputation.biodatacatalyst.nhlbi.nih.gov/index.html#!pages/login) with your credentials and click on the **Run** tab to start a new imputation job. The submission dialog allows you to specify the properties of your imputation job.

+Please [log in](https://imputation.biodatacatalyst.nhlbi.nih.gov/index.html#!pages/login) with your credentials and click on the **Run** tab to start a new imputation job. The submission dialog allows you to specify the properties of your imputation job.

@@ -26,24 +26,15 @@ The TOPMed Imputation Server offers genotype imputation for the TOPMed reference

- TOPMed (Version r2 2020)

-#### Michigan Imputation Server

-

-The Michigan Imputation Server has several additional reference panels available. Please select one that fulfills your needs and supports the population of your input data:

+See the [reference panels documentation](/reference-panels) for details.

-- HRC (Version r1.1 2016)

-- HLA Imputation Panel: two-field (four-digit) and G-group resolution

-- HRC (Version r1 2015)

-- 1000 Genomes Phase 3 (Version 5)

-- 1000 Genomes Phase 1 (Version 3)

-- CAAPA - African American Panel

-- HapMap 2

-- TOPMed Freeze5 (in preparation)

+#### Michigan Imputation Server

-More details about all available reference panels can be found [here](/reference-panels/).

+The Michigan Imputation Server is a separate service with several additional reference panels available. Consult the relevant [documentation](https://imputationserver.readthedocs.io/en/latest/reference-panels/) for details.

### Upload VCF files from your computer

-When using the file upload, data is uploaded from your local file system to Michigan Imputation Server. By clicking on **Select Files** an open dialog appears where you can select your VCF files:

+When using the file upload, data is uploaded from your local file system to the TOPMed Imputation Server. Clicking **Select Files** opens a dialog where you can select your VCF files:

@@ -59,10 +50,10 @@ Please make sure that all files fulfill the [requirements](/prepare-your-data).

Since version 1.7.2 URL-based uploads (sftp and http) are no longer supported. Please use direct file uploads instead.

### Build

-Please select the build of your data. Currently the options **hg19** and **hg38** are supported. Michigan Imputation Server automatically updates the genome positions (liftOver) of your data. All reference panels except TOPMed are based on hg19 coordinates.

+Please select the build of your data. Currently, the options **hg19** and **hg38** are supported. The TOPMed Imputation Server automatically updates the genome positions of your data (liftOver). The TOPMed reference panel is based on hg38 coordinates.

### rsq Filter

-To minimize the file size, Michigan Imputation Server includes a r2 filter option, excluding all imputed SNPs with a r2-value (= imputation quality) smaller then the specified value.

+To minimize the file size, the Imputation Server includes an r2 filter option, which excludes all imputed SNPs with an r2 value (= imputation quality) smaller than the specified value.

### Phasing

@@ -74,20 +65,9 @@ If your uploaded data is *unphased*, Eagle v2.4 will be used for phasing. In cas

### Population

-Please select the population of your uploaded samples. This information is used to compare the allele frequencies between your data and the reference panel. Please note that not every reference panel supports all sub-populations.

+Please select whether to compare allele frequencies between your data and the reference panel. Please note that not every reference panel supports all sub-populations.

-| Population | Supported Reference Panels |

-| ----------- | ---------------------------|

-| **AFR** | all except TOPMed-r2 |

-| **AMR** | all except TOPMed-r2 |

-| **EUR** | all except TOPMed-r2 |

-| **Mixed** | all |

-| **AA** | CAAPA |

-| **ASN** | 1000 Genomes Phase 1 (Version 3) |

-| **EAS** | 1000 Genomes Phase 3 (Version 5) |

-| **SAS** | 1000 Genomes Phase 3 (Version 5) |

-

-In case your population is not listed or your samples are from different populations, please select **Mixed** to skip the allele frequency check. For mixed populations, no QC-Report will be created.

+If your samples come from different populations, please select **Skip** to skip the allele frequency check. For mixed populations, no QC report will be created.

### Mode

@@ -96,7 +76,7 @@ Please select if you want to run **Quality Control & Imputation**, **Quality Con

### AES 256 encryption

-All Imputation Server results are encrypted by default. Please tick this checkbox if you want to use AES 256 encryption instead of the default encryption method. Please note that AES encryption does not work with standard unzip programs. We recommend to use 7z instead.

+All Imputation Server results are returned as an encrypted `.zip` file by default. This option enables stronger AES 256 encryption instead of the default encryption method. Please note that AES encryption does not work with standard unzip programs. We recommend [7-zip](https://www.7-zip.org/download.html) instead.

## Start your imputation job

@@ -117,7 +97,7 @@ After Input Validation has finished, basic statistics can be viewed directly in

-If you encounter problems with your data please read this tutorial about [Data Preparation](/prepare-your-data) to ensure your data is in the correct format.

+If you encounter problems with your input data, please read this tutorial about [Data Preparation](/prepare-your-data) to ensure your data is in the correct format.

### Quality Control

@@ -130,7 +110,7 @@ In this step we check each variant and exclude it in case of:

5. allele mismatch between reference panel and uploaded data

6. SNP call rate < 90%

-All filtered variants are listed in a file called `statistics.txt` which can be downloaded by clicking on the provided link. More informations about our QC pipeline can be found [here](/pipeline).

+All filtered variants are listed in a file called `statistics.txt` which can be downloaded by clicking on the provided link. More information about our QC pipeline can be found [here](/pipeline).

@@ -138,17 +118,17 @@ If you selected a population, we compare the allele frequencies of the uploaded

### Pre-phasing and Imputation

-Imputation is achieved with Minimac4. The progress of all uploaded chromosomes is updated in real time and visualized with different colors.

+Imputation is performed using Minimac4. The progress of all uploaded chromosomes is updated in real time and visualized with different colors.

### Data Compression and Encryption

-If imputation was successful, we compress and encrypt your data and send you a random password via mail.

+If imputation was successful, we compress and encrypt your data and send you a random password via e-mail.

-This password is not stored on our server at any time. Therefore, if you lost the password, there is no way to resend it to you.

+**This password is not stored on our server** at any time. Therefore, if you lose the password, there is no way to resend it to you, and you will need to re-run your imputation job.

## Download results

@@ -157,7 +137,7 @@ The user is notified by email, as soon as the imputation job has finished. A zip

!!! important "All data is deleted automatically after 7 days"

- Be sure to download all needed data in this time period. We send you a reminder 48 hours before we delete your data. Once your job hast the state **retired**, we are not able to recover your data!

+ Be sure to download all needed data in this time period. We send you a reminder 48 hours before we delete your data. Once your job has the state **retired**, we are not able to recover your data!

### Download via a web browser

@@ -170,8 +150,7 @@ In order to download results via the commandline using `wget`or `aria2` you need

-A new dialog appears which provides you the private link. Click on the tab **wget command** to get a copy & paste ready command that can be used on Linux or MacOS to download the file in you terminal:

-

+A new dialog appears with your private link. Click on the **wget command** tab to get a ready-to-paste command that downloads the file from the command line on Linux or macOS.

### Download all results at once

diff --git a/docs/index.md b/docs/index.md

index 733d4b5e..13b4cd37 100644

--- a/docs/index.md

+++ b/docs/index.md

@@ -4,19 +4,10 @@

-# Michigan Imputation Server

-!!! note "ASHG2020 Workshop"

- Click [here](/workshops/ASHG2020) for additional resources and tutorials. For questions: [mis-ashg2020@umich.edu](mailto:mis-ashg2020@umich.edu). To sign-up for the dedicated Slack-channel: [Slack sign-up](https://join.slack.com/t/eurac-workspace/shared_invite/zt-iqlxpl01-PwAxoTvlcXpDZo04ZKCBZQ)

-

-

-[Michigan Imputation Server](https://imputationserver.sph.umich.edu) provides a free genotype imputation service using [Minimac4](http://genome.sph.umich.edu/wiki/Minimac4). You can upload phased or unphased GWAS genotypes and receive phased and imputed genomes in return. Our server offers imputation from 1000 Genomes (Phase 1 and 3), CAAPA, [HRC](http://www.haplotype-reference-consortium.org/) and the [TOPMed](http://nhlbiwgs.org/) reference panel. For all uploaded datasets an extensive QC is performed.

-

-

-

-Please cite this paper if you use Imputation Server in your publication:

+Please cite this paper if you use the TOPMed Imputation Server in your publication:

> Das S, Forer L, Schönherr S, Sidore C, Locke AE, Kwong A, Vrieze S, Chew EY, Levy S, McGue M, Schlessinger D, Stambolian D, Loh PR, Iacono WG, Swaroop A, Scott LJ, Cucca F, Kronenberg F, Boehnke M, Abecasis GR, Fuchsberger C. [Next-generation genotype imputation service and methods](https://www.ncbi.nlm.nih.gov/pubmed/27571263). Nature Genetics 48, 1284–1287 (2016).

-The complete source code is hosted on [GitHub](https://github.com/genepi/imputationserver/) using Travis CI for continuous integration.

+The complete source code is hosted on [GitHub](https://github.com/statgen/imputationserver/) using Travis CI for continuous integration.

diff --git a/docs/pipeline.md b/docs/pipeline.md

index f4c5059f..f23f0151 100644

--- a/docs/pipeline.md

+++ b/docs/pipeline.md

@@ -4,13 +4,13 @@ Our pipeline performs the following steps:

## Quality Control

-* Create chunks with a size of 20 Mb

-* For each 20Mb chunk we perform the following checks:

+* Create chunks with a size of 10 Mb

+* For each 10 Mb chunk we perform the following checks:

**On Chunk level:**

* Determine amount of valid variants: A variant is valid iff it is included in the reference panel. At least 3 variants must be included.

- * Determine amount of variants found in the reference panel: At least 50 % of the variants must be be included in the reference panel.

+ * Determine the number of variants found in the reference panel: At least 50% of the variants must be included in the reference panel.

* Determine sample call rate: At least 50 % of the variants must be called for each sample.

Chunk exclusion: if (#variants < 3 || overlap < 50% || sampleCallRate < 50%)

@@ -51,45 +51,15 @@ Our pipeline performs the following steps:

## Imputation

###

-* Execute for each chunk minimac in order to impute the phased data (we use a window of 500 kb):

-

-````sh

-./Minimac4 --refHaps HRC.r1-1.GRCh37.chr1.shapeit3.mac5.aa.genotypes.m3vcf.gz

---haps chunk_1_0000000001_0020000000.phased.vcf --start 1 --end 20000000

---window 500000 --prefix chunk_1_0000000001_0020000000 --cpus 1 --chr 20 --noPhoneHome

---format GT,DS,GP --allTypedSites --meta --minRatio 0.00001

-````

-If a map file is available (currently TOPMed only), the following cmd is executed:

+* For each chunk, run Minimac4 to impute the phased data (we use a window of 500 kb):

````sh

./Minimac4 --refHaps HRC.r1-1.GRCh38.chr1.shapeit3.mac5.aa.genotypes.m3vcf.gz

--haps chunk_1_0000000001_0020000000.phased.vcf --start 1 --end 20000000

---window 500000 --prefix chunk_1_0000000001_0020000000 --cpus 1 --chr 20 --noPhoneHome

+--window 500000 --prefix chunk_1_0000000001_0020000000 --cpus 4 --chr 20 --noPhoneHome

--format GT,DS,GP --allTypedSites --meta --minRatio 0.00001 --referenceEstimates --map B38_MAP_FILE.map

````

-## HLA Imputation Pipeline

-

-In addition to intergenic SNPs, HLA imputation outputs five different types of markers: (1) binary marker for classical HLA alleles; (2) binary marker for the presence/absence of a specific amino acid residue; (3) HLA intragenic SNPs, and (4) binary markers for insertion/deletions, as described in the typical output below. The goal is to minimize prior assumption on which types of variations will be causal and test all types of variations simultaneously in an unbiased fashion. However, the users are always free to restrict analyses to specific marker subsets.

-

-!!! note

- For binary encodings, A = Absent, T = Present.

-

-

-| Type | Format | Example |

-|----------|-------------|------|

-| Classical HLA alleles | HLA\_[GENE]\*[ALLELE]| HLA_A\*01:02 (two-field allele) <br> HLA_A\*02 (one-field allele) |

-| HLA amino acids | AA_[GENE]\_[AMINO ACID POSITION]\_[GENOMIC POSITION]\_[EXON]\_[RESIDUE] | AA_B_97_31324201_exon3_V (amino acid position 97 in HLA-B, genomic position 31324201 (GrCh37) in exon 3, residue = V (Val) ) |

-| HLA intragenic SNPs | SNPS_[GENE]\_[GENE POSITION]\_[GENOMIC POSITION]\_[EXON/INTRON] | SNPS_C_2666_31237183_intron6 (SNP at position 2666 of the gene body, genomic position 31237183 in intron 6)|

-| Insertions/deletions | INDEL_[TYPE]\_[GENE]\_[POSITION]| INDEL_AA_C_300x301_31237792 Indel between amino acids 300 and 301 in HLA-C, at genomic position 31237792) |

-

-

-We note that our current implementation of the reference panel is limited to the G-group resolution (DNA sequences that determine the exons 2 and 3 for class I and exon 2 for class II genes), and amino acid positions outside the binding groove were taken as its best approximation. When converting G-group alleles to the two-field resolution, we first approximated G-group alleles to their corresponding allele at the four-field resolution based on the ordered allele list in the distributed IPD-IMGT/HLA database (version 3.32.0). We explicitly include exonic information in the HLA-TAPAS output.

-

-

-For more information about HLA imputation and help, please visit [https://github.com/immunogenomics/HLA-TAPAS](https://github.com/immunogenomics/HLA-TAPAS).

-

-

## Compression and Encryption

* Merge all chunks of one chromosome into one single vcf.gz

@@ -97,10 +67,21 @@ For more information about HLA imputation and help, please visit [https://github

## Chromosome X Pipeline

-Additionally to the standard QC, the following per-sample checks are executed for chrX:

+In addition to the standard QC, the following per-sample checks are executed for chrX:

* Ploidy Check: Verifies if all variants in the nonPAR region are either haploid or diploid.

* Mixed Genotypes Check: Verifies if the amount of mixed genotypes (e.g. 1/.) is < 10 %.

-For phasing and imputation, chrX is split into three independent chunks (PAR1, nonPAR, PAR2). These splits are then automatically merged by Michigan Imputation Server and are returned as one complete chromosome X file. Only Eagle is supported.

+For phasing and imputation, chrX is split into three independent chunks (PAR1, nonPAR, PAR2). These splits are then automatically merged by the Imputation Server and are returned as one complete chromosome X file. Only Eagle is supported.

+

+### b37 coordinates

+

+| Region | Split (position range) |

+|--------|------------------------|

+| ChrX PAR1 Region | chr X1 (< 2699520) |

+| ChrX nonPAR Region | chr X2 (2699520 - 154931044) |

+| ChrX PAR2 Region | chr X3 (> 154931044) |

+

+### b38 coordinates

+

+| Region | Split (position range) |

+|--------|------------------------|

+| ChrX PAR1 Region | chr X1 (< 2781479) |

+| ChrX nonPAR Region | chr X2 (2781479 - 155701383) |

+| ChrX PAR2 Region | chr X3 (> 155701383) |

\ No newline at end of file
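A minimal Python sketch of two rules described in `docs/pipeline.md`: assigning a position to a 10 Mb QC chunk together with the chunk exclusion test, and splitting chrX into X1/X2/X3 using the PAR boundaries from the b37/b38 coordinate tables. `ChunkStats`, the function names, and the reading of the sample call-rate rule (any single sample below 50% excludes the chunk) are assumptions made for illustration; this is not the server's implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field

CHUNK_SIZE = 10_000_000  # 10 Mb chunks, per the updated QC section

# (PAR1 end, PAR2 start) taken from the b37/b38 coordinate tables.
PAR_BOUNDARIES = {
    "b37": (2_699_520, 154_931_044),
    "b38": (2_781_479, 155_701_383),
}


@dataclass
class ChunkStats:
    """Hypothetical per-chunk QC summary; field names are illustrative."""
    valid_variants: int  # variants that are also in the reference panel
    overlap: float  # fraction of uploaded variants found in the panel (0-1)
    sample_call_rates: list[float] = field(default_factory=list)  # per sample (0-1)


def chunk_bounds(position: int, chunk_size: int = CHUNK_SIZE) -> tuple[int, int]:
    """1-based, inclusive [start, end] of the chunk containing `position`."""
    start = (position - 1) // chunk_size * chunk_size + 1
    return start, start + chunk_size - 1


def exclude_chunk(stats: ChunkStats) -> bool:
    """Chunk exclusion: #variants < 3 || overlap < 50% || sampleCallRate < 50%.

    Assumption: the call-rate rule fails if any single sample is below 50%.
    """
    low_call_rate = any(rate < 0.5 for rate in stats.sample_call_rates)
    return stats.valid_variants < 3 or stats.overlap < 0.5 or low_call_rate


def chrx_region(position: int, build: str) -> str:
    """Assign a chrX position to X1 (PAR1), X2 (nonPAR) or X3 (PAR2)."""
    par1_end, par2_start = PAR_BOUNDARIES[build]
    if position < par1_end:
        return "X1"
    if position > par2_start:
        return "X3"
    return "X2"  # both boundaries belong to the nonPAR row of the tables
```

For example, `chunk_bounds(15_000_000)` gives `(10000001, 20000000)`, and `chrx_region(2_699_520, "b37")` gives `"X2"`.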

diff --git a/docs/prepare-your-data.md b/docs/prepare-your-data.md

index fb1fb0d7..5a9a390e 100644

--- a/docs/prepare-your-data.md

+++ b/docs/prepare-your-data.md

@@ -1,14 +1,17 @@

# Data preparation

-Michigan Imputation Server accepts VCF files compressed with [bgzip](http://samtools.sourceforge.net/tabix.shtml). Please make sure the following requirements are met:

+TOPMed Imputation Server accepts VCF files compressed with [bgzip](http://samtools.sourceforge.net/tabix.shtml). Please make sure the following requirements are met:

- Create a separate vcf.gz file for each chromosome.

- Variations must be sorted by genomic position.

- GRCh37 or GRCh38 coordinates are required.

+ - If your input data is GRCh37/hg19, please ensure chromosomes are encoded without a prefix (e.g. 20).

+ - If your input data is GRCh38/hg38, please ensure chromosomes are encoded with the 'chr' prefix (e.g. chr20).

- VCF files need to be version 4.2 (or lower)

+- Due to server resource requirements, each job is limited to a maximum of 25,000 samples per chromosome (and a minimum of 20 samples). Please see the FAQ for details.

!!! note

- Several \*.vcf.gz files can be uploaded at once.

+ Multiple \*.vcf.gz files (one per chromosome) can be uploaded as part of a single job.
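A minimal Python sketch, assuming one bgzipped VCF per chromosome, of how several of the requirements in `docs/prepare-your-data.md` could be checked locally before upload: VCF version 4.2 or lower, positions sorted, a single chromosome per file, chromosome naming matching the build, and 20 to 25,000 samples. The function names and the "GRCh37"/"GRCh38" labels are hypothetical; this is not the server's validation code, and the server's own QC remains authoritative.

```python
from __future__ import annotations

import gzip
from typing import Iterable

MIN_SAMPLES, MAX_SAMPLES = 20, 25_000  # per-chromosome limits listed above


def check_vcf_lines(lines: Iterable[str], build: str) -> list[str]:
    """Return a list of problems; an empty list means these checks passed.

    `build` is "GRCh37" or "GRCh38" (labels chosen for this sketch).
    """
    problems: list[str] = []
    chroms: set[str] = set()
    last_pos = 0
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line.startswith("##fileformat=VCFv"):
            version = tuple(int(p) for p in line.split("VCFv", 1)[1].split("."))
            if version > (4, 2):
                problems.append(f"{line[2:]} is newer than VCFv4.2")
        elif line.startswith("#CHROM"):
            # 8 fixed columns plus FORMAT precede the sample columns.
            n_samples = len(line.split("\t")) - 9
            if not MIN_SAMPLES <= n_samples <= MAX_SAMPLES:
                problems.append(f"{n_samples} samples (allowed {MIN_SAMPLES}-{MAX_SAMPLES})")
        elif line and not line.startswith("#"):
            chrom, pos = line.split("\t", 2)[:2]
            chroms.add(chrom)
            if int(pos) < last_pos:
                problems.append(f"unsorted: {chrom}:{pos} after position {last_pos}")
            last_pos = int(pos)
    if len(chroms) > 1:
        problems.append(f"expected one chromosome per file, found {sorted(chroms)}")
    needs_prefix = build == "GRCh38"  # GRCh38: 'chr20'; GRCh37: '20'
    for chrom in sorted(chroms):
        if chrom.startswith("chr") != needs_prefix:
            problems.append(f"chromosome name '{chrom}' does not match {build} encoding")
    return problems


def check_vcf_file(path: str, build: str) -> list[str]:
    """Run the checks on a bgzipped VCF (bgzip output is gzip-compatible)."""
    with gzip.open(path, "rt") as handle:
        return check_vcf_lines(handle, build)
```

For example, `check_vcf_file("chr20.vcf.gz", "GRCh38")` returns an empty list when the file passes these checks; the file name is a placeholder.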

diff --git a/docs/reference-panels.md b/docs/reference-panels.md

index eb96c528..1f5f488b 100644

--- a/docs/reference-panels.md

+++ b/docs/reference-panels.md

@@ -14,4 +14,4 @@ The TOPMed panel consists of 194,512 haplotypes

| Imputation Server: | [https://imputation.biodatacatalyst.nhlbi.nih.gov](https://imputation.biodatacatalyst.nhlbi.nih.gov) |

| Website: | [https://www.nhlbiwgs.org/](https://www.nhlbiwgs.org/) |

-**Additional [reference panels](https://imputationserver.readthedocs.io/en/latest/reference-panels/) are available from the [Michigan Imputation Server](https://imputationserver.sph.umich.edu).**

\ No newline at end of file

+**Additional [reference panels](https://imputationserver.readthedocs.io/en/latest/reference-panels/) are available from the [Michigan Imputation Server](https://imputationserver.sph.umich.edu), which is a separate service.**

\ No newline at end of file

diff --git a/mkdocs.yml b/mkdocs.yml

index c7919fcc..749747cb 100644

--- a/mkdocs.yml

+++ b/mkdocs.yml

@@ -1,4 +1,4 @@

-site_name: Michigan Imputation Server

+site_name: TOPMed Imputation Server

theme: readthedocs

pages:

@@ -11,8 +11,6 @@ pages:

- FAQ: faq.md

- Developer Documentation:

- API: api.md

- - Docker: docker.md

- - Create Reference Panels: create-reference-panels.md

- Workshops:

- ASHG2022:

- Overview: workshops/ASHG2022.md

@@ -35,18 +33,18 @@ pages:

-repo_name: genepi/imputationserver

-repo_url: https://github.com/genepi/imputationserver

-edit_uri: edit/master/docs

+repo_name: statgen/imputationserver

+repo_url: https://github.com/statgen/imputationserver

+edit_uri: edit/release/docs

extra:

social:

- type: github-alt

- link: https://github.com/genepi/imputationserver

+ link: https://github.com/statgen/imputationserver

- type: twitter

link: https://twitter.com/umimpute

markdown_extensions:

- toc:

- permalink:

- - admonition:

+ permalink: True

+ - admonition: {}