This is the code accompanying the paper "Exploring both Individuality and Cooperation for Air-Ground Spatial Crowdsourcing by Multi-Agent Deep Reinforcement Learning", to appear in ICDE 2023.

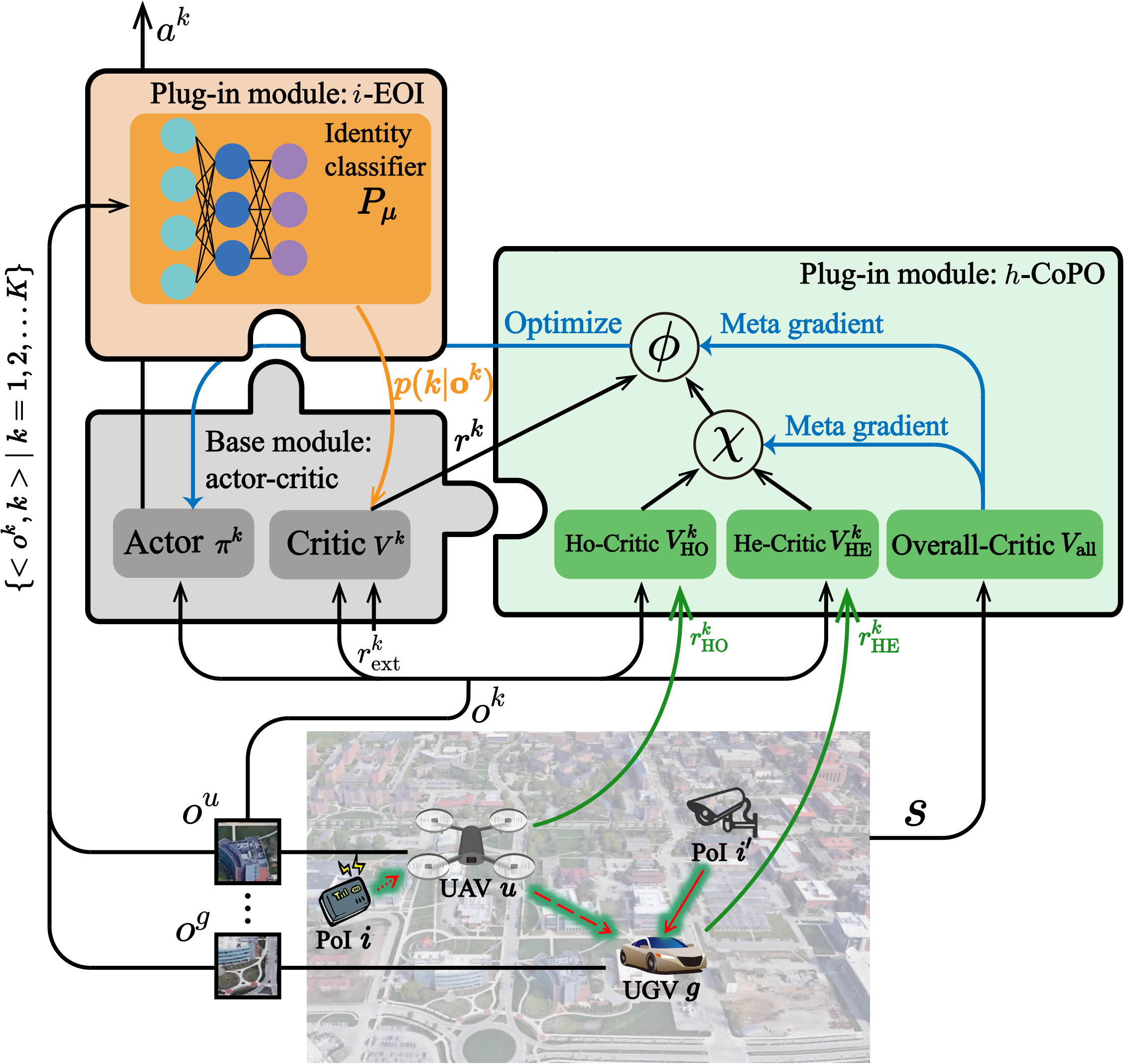

Spatial crowdsourcing (SC) has proven to be a promising paradigm for employing human workers to collect data from diverse Points of Interest (PoIs) in a given area. Instead of relying on human participants, we propose a novel air-ground SC scenario that takes full advantage of unmanned vehicles (UVs), including unmanned aerial vehicles (UAVs) with controllable high mobility and unmanned ground vehicles (UGVs) with abundant sensing resources. The objective is to maximize the amount of collected data and the geographical fairness among all PoIs while minimizing data loss and energy consumption, integrated into a single metric called “efficiency”. We explicitly explore both the individuality and the cooperation of UAVs and UGVs by proposing a multi-agent deep reinforcement learning (MADRL) framework called “h/i-MADRL”. Compatible with all multi-agent actor-critic methods, h/i-MADRL adds two novel plug-in modules: (a) h-CoPO, which models the cooperation preference among heterogeneous UAVs and UGVs; and (b) i-EOI, which extracts each UV’s individuality and encourages a better spatial division of work by adding an intrinsic reward. Extensive experiments on two real-world datasets from the Purdue and NCSU campuses confirm that h/i-MADRL explores both individuality and cooperation simultaneously, achieving better efficiency than five baselines.
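To make the two plug-in modules more concrete, here is a minimal Python sketch of the generic reward-shaping ideas they build on, taken from the EOI and CoPO works linked at the end of this README: an EOI-style intrinsic reward (the probability that an identity classifier assigns an observation to the agent that produced it) and a CoPO-style local coordination factor (LCF) that mixes an agent's own reward with its neighbors' mean reward through an angle. This is not the paper's h-CoPO or i-EOI formulation; the function names, the intrinsic weight `beta`, and the degree-valued `lcf_deg` are illustrative assumptions.

```python
import math
from typing import Sequence


def eoi_intrinsic_reward(identity_probs: Sequence[float], agent_idx: int) -> float:
    """EOI-style intrinsic reward (assumed form): the classifier's probability
    that the current observation was produced by agent `agent_idx`."""
    return float(identity_probs[agent_idx])


def lcf_mixed_reward(own_reward: float, neighbor_rewards: Sequence[float], lcf_deg: float) -> float:
    """CoPO-style mixing (assumed form): cos(phi) * own + sin(phi) * mean(neighbors).
    phi = 0 degrees is purely selfish, phi = 90 degrees is purely altruistic."""
    phi = math.radians(lcf_deg)
    neighbor_mean = sum(neighbor_rewards) / len(neighbor_rewards) if neighbor_rewards else 0.0
    return math.cos(phi) * own_reward + math.sin(phi) * neighbor_mean


def shaped_reward(ext_reward, neighbor_rewards, identity_probs, agent_idx, lcf_deg=0.0, beta=0.1):
    """Hypothetical combination: LCF-mixed extrinsic reward plus a weighted intrinsic bonus."""
    cooperative = lcf_mixed_reward(ext_reward, neighbor_rewards, lcf_deg)
    return cooperative + beta * eoi_intrinsic_reward(identity_probs, agent_idx)


if __name__ == "__main__":
    # Agent 1 of 2 (e.g. a UGV) with one neighbor (e.g. a UAV), LCF of 45 degrees.
    print(shaped_reward(1.0, [0.5], identity_probs=[0.2, 0.8], agent_idx=1, lcf_deg=45.0))
```

Keeping the two terms in separate functions mirrors the "plug-in" framing: either term can be dropped independently, much like the `--use_eoi` and `--use_hcopo` switches used for the ablation study.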

Overview of h/i-MADRL:

Here we give an example installation with CUDA 11.4.


Clone the repo:

```bash
git clone https://github.com/BIT-MCS/hi-MADRL.git
cd hi-MADRL
```

Create a conda environment:

```bash
conda create --name hi-MADRL python=3.7
conda activate hi-MADRL
```
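After activating the environment, a quick check like the following confirms that the active interpreter is the Python 3.7 the environment was created with. It is a minimal sketch; the helper name `python_matches` is hypothetical.

```python
import sys


def python_matches(expected=(3, 7)) -> bool:
    """Return True if the running interpreter's (major, minor) version equals `expected`."""
    return tuple(sys.version_info[:2]) == tuple(expected)


if __name__ == "__main__":
    print("Python {}; matches 3.7: {}".format(sys.version.split()[0], python_matches()))
```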

Install the dependent packages, starting with PyTorch:

```bash
pip install torch==1.9.0+cu111 -f https://download.pytorch.org/whl/torch_stable.html
```
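To confirm that the CUDA build of PyTorch was installed and can see the GPU, a sketch like the following can be run. It only relies on `torch.__version__`, `torch.version.cuda`, and `torch.cuda.is_available()`; the helper name and the report keys are illustrative, and it degrades gracefully if `torch` is not installed yet.

```python
import importlib.util


def torch_install_report(expected_version="1.9.0+cu111"):
    """Summarize the installed PyTorch build; fields are None/False if torch is absent."""
    if importlib.util.find_spec("torch") is None:
        return {"installed": False, "version": None, "cuda_build": None,
                "cuda_available": False, "matches": False}
    import torch
    return {
        "installed": True,
        "version": torch.__version__,                  # e.g. "1.9.0+cu111" for the wheel above
        "cuda_build": torch.version.cuda,              # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),   # False if no usable GPU/driver
        "matches": torch.__version__ == expected_version,
    }


if __name__ == "__main__":
    print(torch_install_report())
```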

To use osmnx to generate the road map, some additional dependencies are required.

For other required packages, run the code and check which ones have not been installed yet. Most of them can be installed with `pip install <package>`.
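Rather than discovering missing packages one crash at a time, a short script can list which modules are not importable yet. This is a sketch: `torch`, `osmnx`, and `tensorboard` are mentioned in this README, but the list is an assumption and should be extended with the modules the code actually imports.

```python
import importlib.util
from typing import Iterable, List

# Modules mentioned in this README; extend with the other imports used by the code
# (this list is an assumption, not exhaustive).
CANDIDATE_MODULES = ["torch", "osmnx", "tensorboard"]


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found on the current Python path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(CANDIDATE_MODULES)
    if missing:
        # Note: a module name is not always identical to its pip package name.
        print("pip install " + " ".join(missing))
    else:
        print("All listed modules are importable.")
```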

To train h/i-MADRL, use:

```bash
python main_PPO_vecenv.py --dataset <DATASET_STR> --use_eoi --use_hcopo
```

where `<DATASET_STR>` can be `purdue` or `NCSU`. The default hyperparameters of h/i-MADRL are used, and the default simulation settings are summarized in Table 2.

Add `--output_dir <OUTPUT_DIR>` to specify where outputs are saved (by default, outputs are saved in `../runs/debug`).

For the ablation study, remove `--use_eoi`, `--use_hcopo`, or both.
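The four training variants (full h/i-MADRL and the three ablations) differ only in the two flags, so their command lines can be generated programmatically. A minimal sketch using only the flags documented above; the variant labels and the per-variant output directory layout are hypothetical choices, and the commands are printed rather than executed. It avoids `shlex.join` because that function requires Python 3.8, while the environment above uses 3.7.

```python
import itertools
import shlex
from typing import Dict, List


def ablation_commands(dataset: str, output_root: str) -> Dict[str, List[str]]:
    """Build one main_PPO_vecenv.py command per combination of --use_eoi / --use_hcopo."""
    commands = {}
    for use_eoi, use_hcopo in itertools.product([True, False], repeat=2):
        # Hypothetical labels: "eoi+hcopo", "eoi", "hcopo", "none".
        enabled = [name for name, on in (("eoi", use_eoi), ("hcopo", use_hcopo)) if on]
        label = "+".join(enabled) or "none"
        cmd = ["python", "main_PPO_vecenv.py", "--dataset", dataset,
               "--output_dir", "{}/{}_{}".format(output_root, dataset, label)]
        if use_eoi:
            cmd.append("--use_eoi")
        if use_hcopo:
            cmd.append("--use_hcopo")
        commands[label] = cmd
    return commands


if __name__ == "__main__":
    for label, cmd in ablation_commands("purdue", "../runs/ablation").items():
        # Pass `cmd` to subprocess.run(cmd, check=True) to actually launch a run.
        print("{:>10}: {}".format(label, " ".join(shlex.quote(part) for part in cmd)))
```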

The output of training includes:

- `tensorboard`: TensorBoard logs
- `model`: the saved best model
- `train_saved_trajs`: the saved best trajectories for UAVs and UGVs
- `train_output.txt`: records the performance in terms of 5 metrics, for example:

```
best trajs have been changed in ts=200.
best_train_reward: 0.238
efficiency: 2.029
collect_data_ratio: 0.550
loss_ratio: 0.011
fairness: 0.577
energy_consumption_ratio: 0.155
```
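For comparing runs, the `name: value` pairs in `train_output.txt` can be collected into a dictionary. This is a sketch whose regular expression is inferred from the sample record above (the exact file layout is an assumption); if a file holds several records, later values overwrite earlier ones for the same key.

```python
import re
from typing import Dict

# Matches "name: number" pairs such as "efficiency: 2.029"; "ts=200." is not matched.
METRIC_RE = re.compile(r"([A-Za-z_]+):\s*(-?\d+(?:\.\d+)?)")


def parse_metrics(text: str) -> Dict[str, float]:
    """Extract every `name: value` pair from a train_output.txt record into a dict."""
    return {name: float(value) for name, value in METRIC_RE.findall(text)}


if __name__ == "__main__":
    sample = ("best trajs have been changed in ts=200. best_train_reward: 0.238 "
              "efficiency: 2.029 collect_data_ratio: 0.550 loss_ratio: 0.011 "
              "fairness: 0.577 energy_consumption_ratio: 0.155")
    metrics = parse_metrics(sample)
    print(metrics["efficiency"], metrics["fairness"])
```

To read a real run, pass `open(path).read()` for the run's `train_output.txt`.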

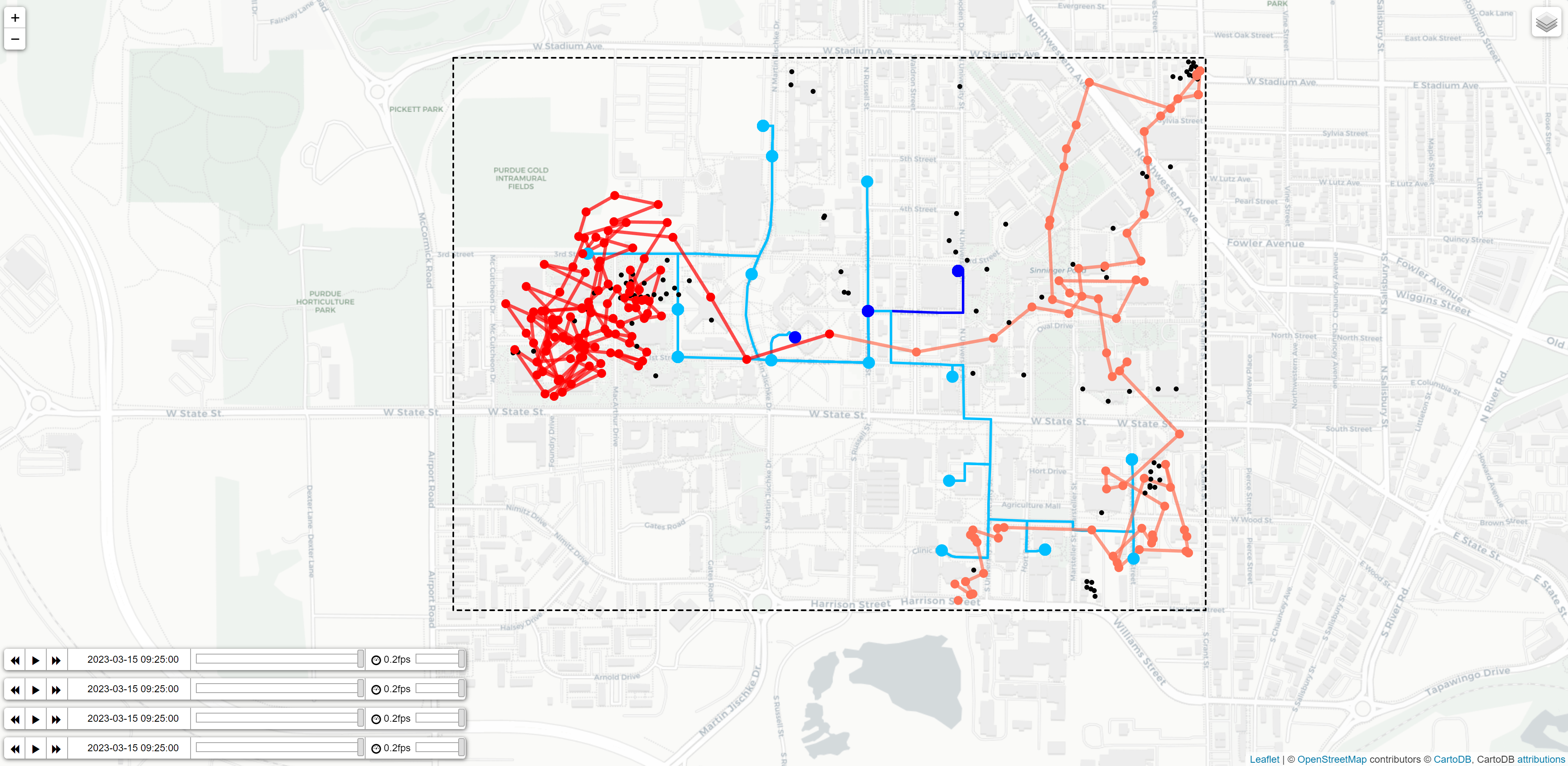

To generate visualized trajectories, use:

```bash
python tools/post/vis_gif.py --output_dir <OUTPUT_DIR> --group_save_dir <OUTPUT_DIR>
```

You can also use our pretrained output:

```bash
python tools/post/vis_gif.py --output_dir runs/pretrained_output_purdue --group_save_dir runs/pretrained_output_purdue
```

Then an `.html` file showing the visualized trajectories is generated. You can drag the control panel in the lower-left corner to see how the UAVs and UGVs move.

- https://github.com/XinJingHao/PPO-Continuous-Pytorch

- https://github.com/decisionforce/CoPO

- https://github.com/jiechuanjiang/EOI_on_SMAC

This work was sponsored by the National Natural Science Foundation of China (No. U21A20519 and 62022017).

Corresponding Author: Jianxin Zhao.

If you have any questions, please email [email protected].

If you are interested in our work, please cite our paper (citation coming soon).