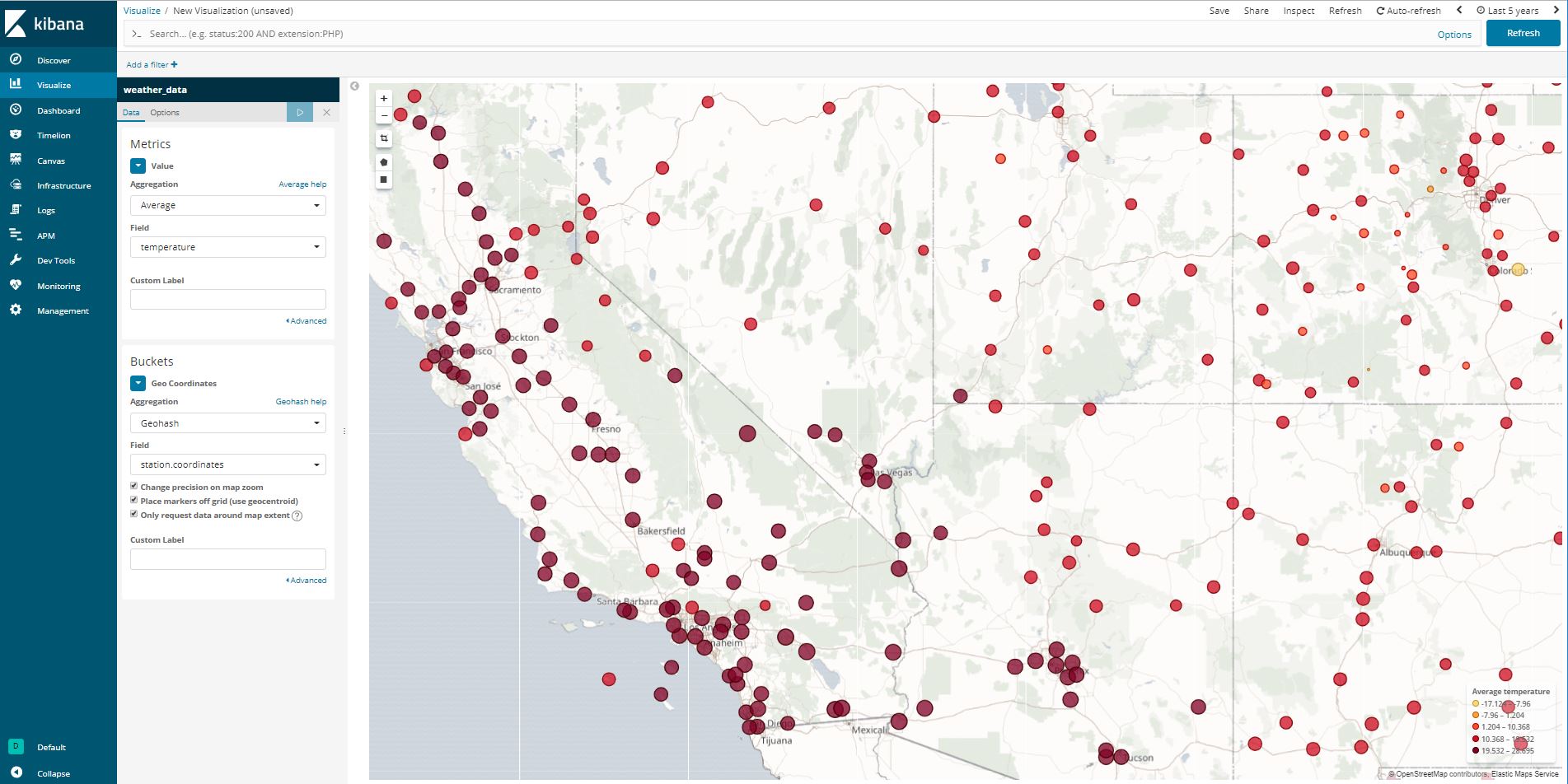

This project is a sample project for Apache Flink. It parses the Quality Controlled Local Climatological Data (QCLCD) of March 2015, uses Apache Flink to calculate the maximum daily temperature from the measurement stream, and writes the results to Elasticsearch and to a PostgreSQL database.
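The core computation, finding the maximum temperature per day, can be illustrated without Flink. The following is a minimal plain-Java sketch, not the project's Flink job. It assumes a hypothetical `Measurement` type with a station identifier, a day and a temperature, and the station IDs in `main` are made up. In a Flink job the same aggregation would typically be expressed as a keyed stream with a daily window.

```java
import java.time.LocalDate;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class MaxDailyTemperature {

    // Hypothetical measurement type: station identifier, day of measurement, temperature.
    public static final class Measurement {
        final String station;
        final LocalDate day;
        final double temperature;

        public Measurement(String station, LocalDate day, double temperature) {
            this.station = station;
            this.day = day;
            this.temperature = temperature;
        }
    }

    // Groups measurements by (station, day) and keeps the highest temperature per group.
    // The key is "station|yyyy-MM-dd"; the TreeMap keeps keys sorted for readable output.
    public static Map<String, Double> maxPerStationAndDay(List<Measurement> measurements) {
        Map<String, Double> result = new TreeMap<>();
        for (Measurement m : measurements) {
            String key = m.station + "|" + m.day;
            // merge() stores the value if the key is new, otherwise keeps the larger one.
            result.merge(key, m.temperature, Math::max);
        }
        return result;
    }

    public static void main(String[] args) {
        // Made-up sample measurements for one hypothetical station.
        List<Measurement> input = new ArrayList<>();
        input.add(new Measurement("03011", LocalDate.of(2015, 3, 1), 12.0));
        input.add(new Measurement("03011", LocalDate.of(2015, 3, 1), 17.5));
        input.add(new Measurement("03011", LocalDate.of(2015, 3, 2), 9.0));
        System.out.println(maxPerStationAndDay(input));
        // prints {03011|2015-03-01=17.5, 03011|2015-03-02=9.0}
    }
}
```

Unlike this batch-style loop, a streaming job cannot see the whole month at once; it has to decide when a day is complete, which is one reason the input needs to be ordered by measurement time.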

The dataset is described as follows:

> Quality Controlled Local Climatological Data (QCLCD) consist of hourly, daily, and monthly summaries for approximately 1,600 U.S. locations. Daily Summary forms are not available for all stations. Data are available beginning January 1, 2005 and continue to the present. Please note, there may be a 48-hour lag in the availability of the most recent data.

The data is available at:

The records in the QCLCD dataset are not sorted by timestamp. The dataset therefore needs to be prepared first, so that all records are sorted in ascending order of measurement time.

I have written a small application that sorts the original CSV data by measurement time:

The result is a sorted CSV file, which can be used to run the examples.
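The preparation step can be sketched in a few lines of Java. This is not the author's sorting application; it is a minimal in-memory sketch that assumes the first line is a header, that fields contain no quoted commas, and that the date (`yyyyMMdd`) and time (`HHmm`) sit in two separate columns whose indices are passed in. The column names and rows in `main` are hypothetical.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

public class SortByMeasurementTime {

    // Assumed timestamp layout: date "yyyyMMdd" followed by time "HHmm".
    private static final DateTimeFormatter FORMAT = DateTimeFormatter.ofPattern("yyyyMMddHHmm");

    // Parses the measurement timestamp of one CSV row.
    // Assumes plain comma-separated fields without quoted commas.
    static LocalDateTime timestampOf(String row, int dateColumn, int timeColumn) {
        String[] fields = row.split(",", -1);
        return LocalDateTime.parse(fields[dateColumn].trim() + fields[timeColumn].trim(), FORMAT);
    }

    // Returns the header followed by all data rows, sorted ascending by measurement time.
    // List.sort is stable, so rows with equal timestamps keep their original order.
    public static List<String> sortByMeasurementTime(List<String> lines, int dateColumn, int timeColumn) {
        List<String> result = new ArrayList<>();
        if (lines.isEmpty()) {
            return result;
        }
        result.add(lines.get(0)); // keep the header row first
        List<String> rows = new ArrayList<>(lines.subList(1, lines.size()));
        rows.sort(Comparator.comparing(row -> timestampOf(row, dateColumn, timeColumn)));
        result.addAll(rows);
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical header and rows; column 1 is the date, column 2 the time.
        List<String> lines = List.of(
                "WBAN,Date,Time,DryBulbCelsius",
                "03011,20150301,1253,-2.0",
                "03011,20150301,0053,-5.0",
                "03013,20150301,0153,1.0");
        sortByMeasurementTime(lines, 1, 2).forEach(System.out::println);
        // prints the header, then the rows at 0053, 0153 and 1253
    }
}
```

Loading a single month into memory keeps the sketch simple; a much larger file would call for an external sort instead.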

I have written several blog posts on Apache Flink:

- June 10, 2016 - Stream Data Processing with Apache Flink

- July 03, 2016 - Building Applications with Apache Flink (Part 1): Dataset, Data Preparation and Building a Model

- July 03, 2016 - Building Applications with Apache Flink (Part 2): Writing a custom SourceFunction for the CSV Data

- July 03, 2016 - Building Applications with Apache Flink (Part 3): Stream Processing with the DataStream API

- July 03, 2016 - Building Applications with Apache Flink (Part 4): Writing and Using a custom PostgreSQL SinkFunction

- July 10, 2016 - Building Applications with Apache Flink (Part 5): Complex Event Processing with Apache Flink