
TensorFlow Serving via Docker

This directory contains Dockerfiles to make it easy to get up and running with TensorFlow Serving via Docker.

General installation instructions are on the Docker site, but we give some quick links here:

TensorFlow currently maintains the following Dockerfiles:

- Dockerfile.devel, which is a minimal VM with all of the dependencies needed to build TensorFlow Serving.

Run:

username@machine_name:~$ docker build --pull -t $USER/tensorflow-serving-devel -f tensorflow_serving/tools/docker/Dockerfile.devel .

username@machine_name:~$ docker run --name=test_container -it $USER/tensorflow-serving-devel

root@476b31eb9213:

| Name, shorthand | Description |
|---|---|
| --tag, -t | Name and optionally a tag in the ‘name:tag’ format |
| --file, -f | Name of the Dockerfile (Default is ‘PATH/Dockerfile’) |
| --pull | Always attempt to pull a newer version of the image |

Note: All bazel build commands below use the standard -c opt flag. To further optimize the build, refer to the instructions here.

In the running container, we clone, configure and build TensorFlow Serving code.

git clone -b r1.6 --recurse-submodules https://github.com/tensorflow/serving

cd serving/

git clone --recursive https://github.com/tensorflow/tensorflow.git

cd tensorflow

./configure

cd ..

Next we can either install a TensorFlow ModelServer with apt-get using the instructions here, or build a ModelServer binary using:

bazel build -c opt tensorflow_serving/model_servers:tensorflow_model_server

The rest of this tutorial assumes you compiled the ModelServer locally, in which case the command to run it is bazel-bin/tensorflow_serving/model_servers/tensorflow_model_server. If, however, you installed the ModelServer using apt-get, simply replace that command with tensorflow_model_server.
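Since the command differs depending on how the ModelServer was installed, a small helper can pick whichever one is available. This is only a sketch: `find_model_server` is a hypothetical name, not part of TensorFlow Serving, and it assumes you run it from the serving/ directory so that the relative bazel-bin path resolves.

```shell
# Hypothetical helper: print the ModelServer command to use.
# Prefers the locally built binary, then falls back to one found on PATH
# (for example, one installed with apt-get).
find_model_server() {
  local built="bazel-bin/tensorflow_serving/model_servers/tensorflow_model_server"
  if [ -x "$built" ]; then
    echo "$built"
  elif command -v tensorflow_model_server >/dev/null 2>&1; then
    command -v tensorflow_model_server
  else
    echo "no tensorflow_model_server binary found" >&2
    return 1
  fi
}
```

The printed path can then stand in for the explicit binary path in the commands that follow.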

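Detach from the container without stopping it by pressing Ctrl+P followed by Ctrl+Q, then copy your model in from the host and re-attach:

root@476b31eb9213: **Ctrl+P Ctrl+Q**

username@machine_name: sudo docker cp ./your_model/ 476b31eb9213:/serving

username@machine_name: sudo docker attach 476b31eb9213

Here 476b31eb9213:/serving is the folder inside the container where your model is placed; you can also rename the model directory there.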
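The copy step can also address the container by the name given to docker run earlier (test_container) instead of its ID, since docker cp accepts either. The sketch below wraps that in a hypothetical `copy_model` function; the `DOCKER` variable is an assumption introduced here so the command can be swapped for `sudo docker`, or for `echo` to preview it.

```shell
# Hypothetical helper: copy a model directory from the host into a container.
# $1 = model directory on the host
# $2 = container name or ID (defaults to test_container, the name used above)
# $3 = destination folder inside the container (defaults to /serving)
copy_model() {
  local model_dir="$1"
  local container="${2:-test_container}"
  local dest="${3:-/serving}"
  if [ ! -d "$model_dir" ]; then
    echo "model directory not found: $model_dir" >&2
    return 1
  fi
  # DOCKER is left unquoted on purpose so a value like "sudo docker" splits into words.
  ${DOCKER:-docker} cp "$model_dir" "$container:$dest"
}
```

Setting DOCKER=echo before calling `copy_model ./your_model` prints the docker cp arguments without running anything, which is a cheap way to check the paths first.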

File structure of your_model:

    your_model
    └── 1   => the version of your model; this directory is a must
        ├── saved_model.pb
        └── variables
            ├── variables.data-00000-of-00001
            └── variables.index
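Before copying a model into the container, it can help to confirm that it follows this layout. The following is a minimal sketch, assuming version directories are named with plain integers as in the tree above; `check_model_dir` is a hypothetical helper, not a TensorFlow Serving tool.

```shell
# Hypothetical helper: succeed only if the model directory contains at least
# one numeric version directory holding saved_model.pb and a variables/ folder.
check_model_dir() {
  local model_dir="$1"
  local version_dir version
  for version_dir in "$model_dir"/*/; do
    # If the glob matched nothing it stays literal, so skip non-directories.
    [ -d "$version_dir" ] || continue
    version=$(basename "$version_dir")
    # Skip directories whose names are not plain integers such as 1 or 2.
    case "$version" in
      ''|*[!0-9]*) continue ;;
    esac
    if [ -f "$version_dir/saved_model.pb" ] && [ -d "$version_dir/variables" ]; then
      echo "found version $version"
      return 0
    fi
  done
  echo "no valid version directory under $model_dir" >&2
  return 1
}
```

For the layout shown above, `check_model_dir ./your_model` prints `found version 1`. A model exported without the version directory fails the check.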

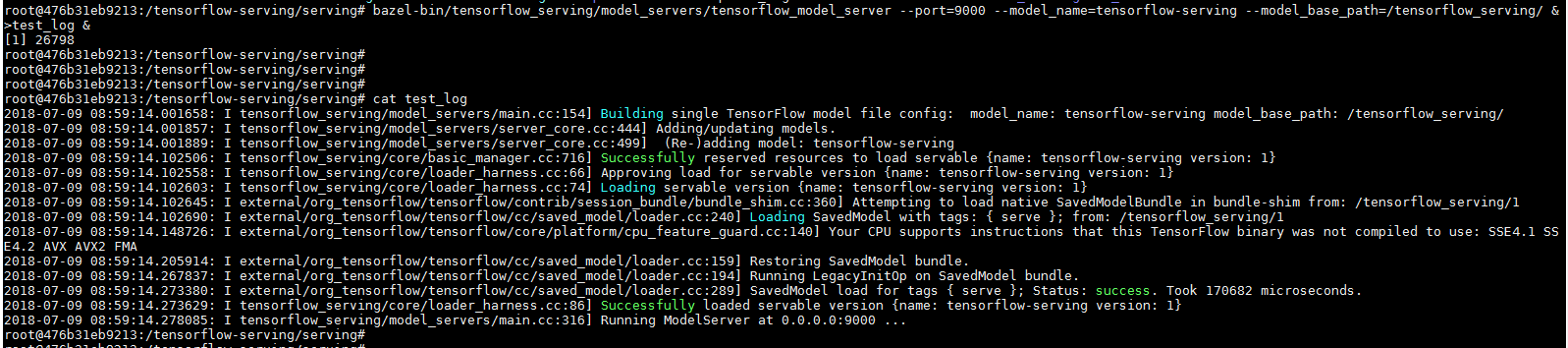

bazel-bin/tensorflow_serving/model_servers/tensorflow_model_server --port=9000 --model_name=tensorflow-serving --model_base_path=/tensorflow_serving/ &>test_log &

[1] 26798

**model_base_path** is the absolute path of your exported model inside the container.
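Because the server is started in the background, a bad path or model name only shows up in the log file. A quick way to see whether the process is still alive is sketched below; `check_server` is a hypothetical helper, and the default log name test_log simply mirrors the redirect used in the command above.

```shell
# Hypothetical helper: report whether a background server is still running.
# $1 = process ID printed by the shell when the job started (26798 above)
# $2 = log file the server output was redirected to (defaults to test_log)
check_server() {
  local pid="$1"
  local log_file="${2:-test_log}"
  # kill -0 sends no signal; it only tests that the process exists
  # and that the current user is allowed to signal it.
  if kill -0 "$pid" 2>/dev/null; then
    echo "server process $pid is running"
    return 0
  fi
  echo "server process $pid has exited; last log lines:" >&2
  if [ -f "$log_file" ]; then
    tail -n 20 "$log_file" >&2
  fi
  return 1
}
```

Right after starting the server, `check_server $!` uses the PID of the most recent background job.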

Then check the server log file:

cat test_log

To query the server from a client:

- pip install grpcio grpcio-tools

- Leave the container and call the served model from your client code.