In this repository you can find algorithms & data structures in the pure JavaScript language.

The goal is to systematize and consolidate knowledge of algorithms.

1.1 Bubble sort

1.2 Quicksort

1.3 Mergesort

1.4 Selection sort

1.5 Heapsort

1.6 Shellsort

1.7 Insertion sort

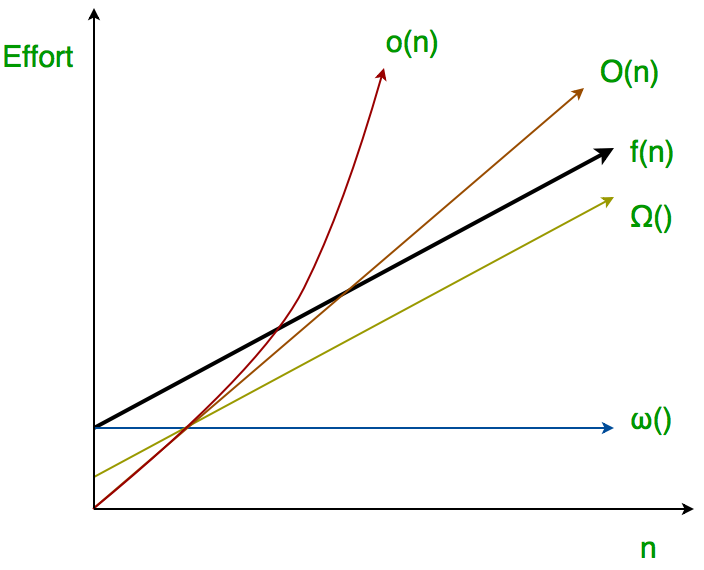

- Description of the kinds of bounds on asymptotic growth rates

- Common orders of growth

- Computational complexity of algorithms

- Pascal's triangle

- Single number

- Happy number

- Valid Palindrome

- Valid Anagram

- Path Sum

- Add One Row to Tree

- Largest Perimeter Triangle

- Delete Node in a Linked List

- Check if the Sentence Is Pangram

- Integer to Roman

- Contains Duplicate II

- Quick sort with built-in methods

- Pure quick sort

- Classic quick sort (Hoare partition scheme)

- Optimized classic quick sort (Hoare partition scheme)

- Binary search

- Largest & smallest element in an array

- Second largest & smallest element in an array

- Breadth First Search (BFS)

- Depth First Search (DFS)

- Dijkstra

- Bellman Ford's algorithm

Computational complexity is a complexity that expresses the dependence of the algorithm's workload on the input data. The amount of work includes time and space (the amount of memory) computational complexity. This area attempts to answer the central question of algorithm design: "how will the execution time and the amount of memory occupied change depending on the size of the input?" The main classes of complexity are:

Class P - problems for which there are fast solutions - sorting, array searching, matrix multiplication, and others. Algorithms of class P are called polynomial - the running time of which does not depend too much on the size of the input data (that is, the time does not exceed the polynomial of the data size).

Class NP - In the theory of algorithms class NP (from non-deterministic polynomial) is a set of decidability problems (where you can answer is solvable or not), whose solution can be checked on the Turing machine in time, not exceeding the value of a polynomial of the size of the input data, in the presence of some additional information (the so-called solution certificate). Any problem of class NP can be solved by brute force (kind of like bruteforcing, exponential complexity). Roughly speaking, these are complex problems that take a very long time to solve(factorial, exponential) and for which there is no optimally fast algorithm. Examples: Hamiltonian path problem, the traveling salesman problem.

Time complexity - computation complexity, that is defined from the input data determining the number of operations and equal to the algorithm's running time with the given input data. The time complexity of an algorithm is usually expressed using asymptotic analysis.

Not to be confused with runtime which describes how much time it takes to execute a program on a specific computing machine, while the time complexity is not tied to the actual execution time and the current computer on which the algorithm will be executed.

Space complexity - computation complexity, that describe amount of memory space required to solve an instance of the computational problem as a function of characteristics of the input. In other words, it is the memory required by an algorithm until it executes completely. Similar to time complexity, space complexity is often expressed asymptotically in big O notation.

Asymptotic analysis is a complexity analysis for input data that tends to infinity. It is expressed in notations (O(big O), Ω(omega), Θ(theta)). In most cases, big O notation is used, which defines the worst case (upper limit from function growth) and does not take into account constants.

Let f and g be functions from positive integers to positive integers. Then:

| Designation | Bound | Efficiency compared to the real complexity of the algorithm |

|---|---|---|

| Θ; f(n) = Θ(g(n)), if there exists a positive integer n0 and a positive constants c1 > 0 and c2 > 0, such that c1 * g(n) ≤ f(n) ≤ c2 * g(n) ∀ n>n0 | Lower and upper bounds, accurate estimate | Equal |

| О; f(n) = О(g(n)), if there exists a positive integer n0 and a positive constant c > 0, such that f(n)≤cg(n) ∀ n≥n0 | Upper bound, but this bound might or might not be a supremum | Less or equal |

| o; f(n) = o(g(n)), if there exists a positive constant c > 0, such that f(n)≤cg(n) ∀ but perhaps finitely many n | Upper bound, not an accurate estimate | Less |

| Ω; f(n) = Ω(g(n)), if there exists a positive integer n0 and a positive constant c > 0, such that cg(n)≤f(n) ∀ n>n0 | Lower bound, but this bound might or might not be a infimum | Greater or equal |

| ω, f(n) = ω(g(n)), if there exists a positive constant c > 0, such that cg(n)≤f(n) ∀ but perhaps finitely many n | Lower bound, not an accurate estimate | Greater |

"Ο(f(n))" implies that as the amount of input data n increases, the running time of the algorithm will increase no faster than some constant multiplied by f(n).

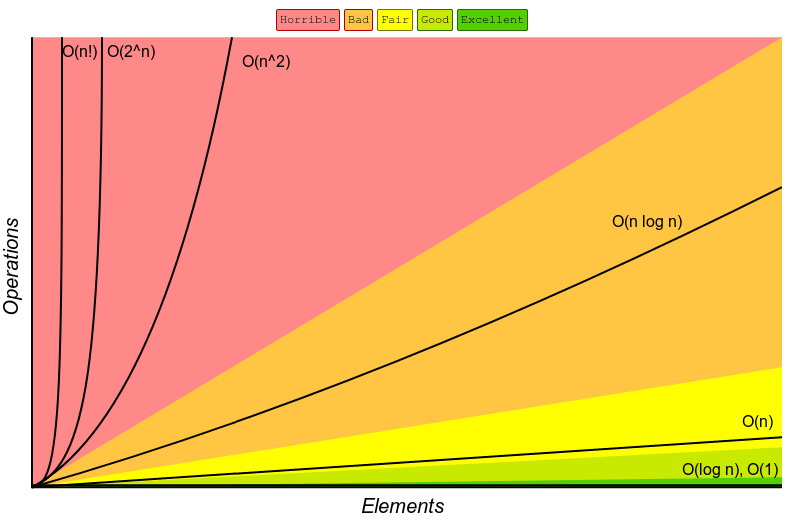

The order of growth of an algorithm is a rough estimate of the time/memory required to execute a computer program as the size of the input data changes.

Growth order ignores the constant factor needed for fixed operations and focuses on operations that increase in proportion to the size of the input data.

| Function | Type of Growth | Example |

|---|---|---|

| 1 | constant | Finding the median value in a sorted array of number |

| n | linear | Finding the smallest or largest item in an unsorted array |

| log n | logarithmic | Binary search |

| n log n | linearithmic | Fast Fourier transform |

| n^2 | quadratic | Bubble sort |

| n^3 | cubic | Naive multiplication of two n * n matrices |

| 2^poly(n) | exponential | Solving matrix chain multiplication via brute-force search |

| n! | factorial | Solving the traveling salesman problem via brute-force search |

Reference - Big-O Cheat Sheet

| Algorithm | Time complexity | Space complexity | ||

| * | Best | Average | Worst | Worst |

| Quicksort | Ω(n log(n)) | Θ(n log(n)) | O(n^2) | O(n), optimized O(log(n)) |

| Mergesort | Ω(n log(n)) | Θ(n log(n)) | O(n log(n)) | O(n) |

| Timsort | Ω(n) | Θ(n log(n)) | O(n log(n)) | O(n) |

| Heapsort | Ω(n log(n)) | Θ(n log(n)) | O(n log(n)) | O(1) |

| Bubble Sort | Ω(n) | Θ(n^2) | O(n^2) | O(1) |

| Insertion Sort | Ω(n) | Θ(n^2) | O(n^2) | O(1) |

| Selection Sort | Ω(n^2) | Θ(n^2) | O(n^2) | O(1) |

| Tree Sort | Ω(n log(n)) | Θ(n log(n)) | O(n^2) | O(n) |

| Shell Sort | Ω(n log(n)) | depends on gap sequence, in general: Θ(n(log(n))^2) | depends on gap sequence: O(n(log(n))^2) or O(n^2) | O(1) |

| Bucket Sort | Ω(n+k) | Θ(n+k) | O(n^2) | O(n) |

| Radix Sort | Ω(nk) | Θ(nk) | O(nk) | O(n+k) |

| Counting Sort | Ω(n+k) | Θ(n+k) | O(n+k) | O(k) |

| Counting Sort | Ω(n) | Θ(n log(n)) | O(n log(n)) | O(n) |