LLM Final Project - Building a Knowledge Graph enriched with Large Language Models and Linked to DBpedia

This project builds a knowledge graph enriched with large language models and linked to DBpedia. The knowledge graph is stored in Neo4j and built with the LangChain library. It is linked to DBpedia using the SPARQLWrapper library. The project is implemented in Python.

The project requires the following:

- Python 3.12

- Docker

- Docker Compose

- Kaggle Dataset

To set up the project, follow these steps:

- Clone the repository

      git clone https://github.com/theFellandes/LLM-Final-Project

- Change the directory to the repository

      cd LLM-Final-Project

- Run the following command to start the Neo4j database

      docker-compose up -d

- Create a venv environment

      python -m venv venv

- Activate the venv environment on Linux

      source venv/bin/activate

- Activate the venv environment on Windows

      venv\Scripts\activate.bat

- Install the required Python packages

      pip install -r requirements.txt

- Download the Kaggle dataset either manually or using the /scrapper/kagglehub.py script. Downloading the dataset by hand is advised because scrapper/data_scrapper.py prints out a large number of objects after downloading.

      python scrapper/data_scrapper.py

- If you download the Kaggle dataset manually, move the dataset located under .cache to the project root folder

To create the knowledge graph, run the following commands:

python graph_generator.py -> This script reads the data from Kagglehub and generates the knowledge graph

python graph_generator_user_rating.py -> This script also reads the data from Kagglehub, but it starts from the user rating data

These scripts take a long time to run. Alternatively, you can use the following command to insert the data into the Neo4j database manually:

    CALL apoc.periodic.iterate(
      'LOAD CSV WITH HEADERS FROM "file:///18/book100k-200k.csv" AS row RETURN row',
      '
      CALL apoc.do.when(
        row.Id IS NULL OR row.Authors IS NULL,
        "RETURN null",
        "
        MERGE (b:Book {id: toInteger(row.Id)})
        SET b.name = row.Name, b.language = row.Language, b.publisher = row.Publisher, b.publishMonth = row.PublishMonth, b.rating = row.Rating, b.ISBN = row.ISBN
        MERGE (a:Author {name: row.Authors})
        MERGE (b)-[:WRITTEN_BY]->(a)
        ", {row: row})
      YIELD value RETURN value
      ',
      {batchSize: 500, parallel: true, retries: 5}
    ) YIELD batches, failedBatches, total, timeTaken, committedOperations
    RETURN batches, failedBatches, total, timeTaken, committedOperations;

To use this script, you need to move .cache into the Docker volume. You can do this by running the following command:

    docker cp .cache neo4j:/var/lib/neo4j/import

Or on Windows, you can simply drag and drop .cache into the neo4j/import folder.

After moving the .cache file to the Docker volume, you can run the following command to insert the user rating data into the Neo4j database:

    CALL apoc.periodic.iterate(
      'LOAD CSV WITH HEADERS FROM "file:///18/user_rating_0_to_1000.csv" AS row RETURN row',
      '
      CALL apoc.do.when(
        row.ID IS NULL OR row.Name IS NULL OR row.Rating IS NULL,
        "RETURN null",
        "
        MERGE (b:Book {id: toInteger(row.ID)})
        ON CREATE SET b.name = row.Name
        MERGE (u:User {id: toInteger(row.ID)})
        ON CREATE SET u.name = \"user_\" + toString(row.ID),
                      u.created_at = timestamp()
        MERGE (u)-[r:REVIEWED_BY]->(b)
        ON CREATE SET r.rating = row.Rating,
                      r.review = row.Rating,
                      r.created_at = timestamp()
        ", {row: row})
      YIELD value RETURN value
      ',
      {batchSize: 500, parallel: true, retries: 5}
    ) YIELD batches, failedBatches, total, timeTaken, committedOperations
    RETURN batches, failedBatches, total, timeTaken, committedOperations;

To inspect the knowledge graph generated by the script, open your browser and go to http://localhost:7474/. The default username is neo4j and the password is your_password.

In the enrichment part, the following scripts can be used:

python internal/graph/dbpedia/dbpedia_integration2.py -> This script enriches the knowledge graph with genres

python internal/graph/dbpedia/dbpedia_awards.py -> This script enriches the knowledge graph with awards

python internal/graph/dbpedia/dbpedia_description.py -> This script enriches the knowledge graph with descriptions

After the enrichment is done, the following scripts can be used to run the sentiment analysis and the similarity analysis:

python internal/graph/llm/bart_sentiment_analysis.py -> This script performs sentiment analysis using BART

python internal/graph/llm/llm_sentiment_analysis.py -> This script performs sentiment analysis using multiple LLMs

python internal/graph/llm/llm_similarity_langchain.py -> This script performs similarity analysis using multiple LLMs

The project consists of the following files and directories:

graph_generator.py - The main script that reads the csv file and creates the knowledge graph from it.

graph_generator_user_rating.py - The script that reads the csv file and creates the knowledge graph, starting from the user rating data.

docker_reader.py - The class that reads the docker-compose.yml file and gets the username, password and connection string values.

legacy - The directory that contains the trial and error scripts.

internal - The directory that contains the internal modules of the project.

internal/db/neo4j_handler.py - The class for performing Neo4j operations.

internal/graph/graph_llm.py - The class for enriching the knowledge graph using an LLM. It is not used at the moment.

internal/graph/knowledge_graph_builder.py - The class for enriching the knowledge graph using an LLM. It is not used at the moment.

internal/reader/yaml_reader.py - Parent class for docker_reader.py. This class reads general yaml files.

internal/graph/dbpedia/dbpedia_integration2.py - Standalone script for creating genres using DBpedia.

internal/graph/dbpedia/dbpedia_awards.py - Standalone script for creating awards using DBpedia.

internal/graph/dbpedia/dbpedia_description.py - Standalone script for creating descriptions using DBpedia.

internal/graph/llm/bart_sentiment_analysis.py - Standalone script for sentiment analysis using BART.

internal/graph/llm/llm_sentiment_analysis.py - Standalone script for sentiment analysis using multiple LLMs.

internal/graph/llm/llm_similarity_langchain.py - Standalone script for similarity analysis using multiple LLMs. (WORK IN PROGRESS)

requirements.txt - The file that contains the required python packages.

Dockerfile - The file that contains the docker configuration for the neo4j database.

docker-compose.yml - The file that contains the docker compose configuration for the neo4j database.

scrapper - The folder that contains the scripts for fetching and manipulating the data.

.env - The file that contains the environment variables for the docker compose.

.gitignore - The file that contains the files and directories that are ignored by git.

README.md - The file that contains the information about the project.

deliverables - The folder that contains the presentation given for this project and the report written for the project.
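The role described above for docker_reader.py (getting the username, password and connection string from docker-compose.yml) can be sketched as follows. This is a minimal illustration and not the project's actual class. It assumes the compose file has already been parsed into a dictionary (for example with a YAML library), that the service is named neo4j, and that the credentials are given through the NEO4J_AUTH variable in the user/password form used by the official Neo4j Docker image. The function name and the bolt://localhost:7687 connection string are assumptions as well.

```python
def read_neo4j_settings(compose: dict, service: str = "neo4j") -> dict:
    """Extract Neo4j credentials from an already-parsed docker-compose mapping.

    Hypothetical helper: the service name and the NEO4J_AUTH convention
    (user/password) are assumptions, not taken from the project code.
    """
    env = compose["services"][service].get("environment", {})
    # Compose allows `environment` as a list of "KEY=VALUE" strings or a mapping.
    if isinstance(env, list):
        env = dict(item.split("=", 1) for item in env)
    user, _, password = env["NEO4J_AUTH"].partition("/")
    return {
        "username": user,
        "password": password,
        # Assumed default Bolt port published on the host.
        "uri": "bolt://localhost:7687",
    }


# Example input shaped like the result of parsing a docker-compose.yml file.
example = {
    "services": {
        "neo4j": {"environment": ["NEO4J_AUTH=neo4j/your_password"]}
    }
}
settings = read_neo4j_settings(example)
print(settings["username"], settings["uri"])
```

Keeping the parsing separate from the file reading means the same function works whether the environment section is written as a list or as a mapping.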

The hardest part of the project was creating the knowledge graph, because storing the data in Neo4j took too much time. Several methods were tested in this data processing stage. One method was to run the csv through a Python script and then insert it into Neo4j. This was time-consuming, but it was the most accurate: the synchronous approach ensured that the connection to the Neo4j server was never lost. Another method was to use Neo4j's own import tool. This was faster but less accurate, because the connection between the Neo4j server and the import tool was not as synchronized as in the previous method, which caused some data loss. With the asynchronous method using the APOC library, the data loss was huge. A third method was to apply concurrency in Python. This ran into connection pooling issues with the local Neo4j server: there were so many race condition and connection errors that I abandoned this method.

The methods that were tried can be found under the legacy folder. pooling&graphql contains the concurrency script as well as the code for connecting to DBpedia.
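The synchronous Python approach described above can be sketched as a loop that sends one batch at a time. This is an illustration under stated assumptions, not the code in the legacy folder: the column names Id, Name and Authors are taken from the APOC script in this README, while BATCH_QUERY, the function names and the run_query callback are hypothetical.

```python
import csv
import io
from itertools import islice

# Assumed Cypher for one batch. It mirrors the Book/Author MERGE pattern of the
# APOC script but receives the rows as a parameter instead of using LOAD CSV.
BATCH_QUERY = """
UNWIND $rows AS row
MERGE (b:Book {id: toInteger(row.Id)})
SET b.name = row.Name
MERGE (a:Author {name: row.Authors})
MERGE (b)-[:WRITTEN_BY]->(a)
"""


def valid_rows(reader):
    """Yield only rows that have both an Id and an Authors value."""
    for row in reader:
        if row.get("Id") and row.get("Authors"):
            yield row


def batched(rows, size=500):
    """Group an iterable of rows into lists of at most `size` items."""
    iterator = iter(rows)
    while batch := list(islice(iterator, size)):
        yield batch


def load_books(csv_file, run_query, size=500):
    """Send each batch to `run_query(query, rows=batch)`, one at a time."""
    sent = 0
    for batch in batched(valid_rows(csv.DictReader(csv_file)), size):
        run_query(BATCH_QUERY, rows=batch)
        sent += len(batch)
    return sent


# Demonstration with an in-memory CSV and a stand-in for the database call.
sample = io.StringIO("Id,Name,Authors\n1,Book A,Author X\n2,Book B,\n3,Book C,Author Y\n")
calls = []
total = load_books(sample, lambda query, rows: calls.append(rows), size=2)
print(total, len(calls))
```

With the official neo4j Python driver, run_query could be a thin wrapper around session.run(query, rows=rows). Because only one batch is in flight at a time, no two batches compete for the same Author node, at the cost of a longer total runtime.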

The sentiment analysis was the easiest part because the LangChain library was very easy to use. The only problem was that processing the data took too much time. The similarity analysis was not implemented due to time constraints and issues that could not be solved within the time frame.
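Since processing time was the main problem, one possible mitigation is to classify each distinct review text only once. The sketch below is an assumption about how that could look, not the project's implementation: classify_reviews and the stand-in classifier are hypothetical, and the result format (a dictionary whose "labels" list is sorted by descending score) follows the zero-shot classification pipeline of the Hugging Face transformers library. The example review strings are illustrative.

```python
def classify_reviews(texts, classifier, labels=("positive", "negative", "neutral")):
    """Label each text, calling the classifier once per distinct text."""
    cache = {}
    results = []
    for text in texts:
        if text not in cache:
            output = classifier(text, candidate_labels=list(labels))
            # Zero-shot pipelines return labels sorted by descending score.
            cache[text] = output["labels"][0]
        results.append(cache[text])
    return results


def fake_classifier(text, candidate_labels):
    """Stand-in used for the demonstration; not a real model."""
    fake_classifier.calls += 1
    best = "positive" if "liked" in text else "negative"
    rest = [label for label in candidate_labels if label != best]
    return {"sequence": text, "labels": [best] + rest, "scores": []}


fake_classifier.calls = 0
reviews = ["really liked it", "did not like it", "really liked it"]
sentiments = classify_reviews(reviews, fake_classifier)
print(sentiments, fake_classifier.calls)
```

With transformers installed, the classifier argument could be pipeline("zero-shot-classification", model="facebook/bart-large-mnli"). Whether bart_sentiment_analysis.py uses this particular model is not stated in this README.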

Enriching the knowledge graph with DBpedia was also one of the hardest parts, because querying it is very different from writing SQL queries. It took a lot of time to understand the query language and how to use it. The other problem was that the DBpedia data was not as accurate as the data in the Kaggle dataset, which caused some data loss in the process.
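To show how such a query differs from SQL, here is a minimal sketch of a genre lookup with SPARQLWrapper. It is not taken from dbpedia_integration2.py: the function names are hypothetical, and matching books by their English rdfs:label and reading dbo:literaryGenre are assumptions about which DBpedia properties are useful here.

```python
def build_genre_query(title: str, limit: int = 10) -> str:
    """Build a SPARQL query for the genres of a book with the given English label."""
    # Escape backslashes and double quotes so the title is a valid string literal.
    escaped = title.replace("\\", "\\\\").replace('"', '\\"')
    return f"""
PREFIX dbo: <http://dbpedia.org/ontology/>
PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>
SELECT DISTINCT ?genre WHERE {{
  ?book a dbo:Book ;
        rdfs:label "{escaped}"@en ;
        dbo:literaryGenre ?genre .
}}
LIMIT {int(limit)}
"""


def fetch_genres(title: str) -> list:
    """Run the query against the public DBpedia endpoint (needs SPARQLWrapper)."""
    from SPARQLWrapper import SPARQLWrapper, JSON  # imported lazily

    client = SPARQLWrapper("https://dbpedia.org/sparql")
    client.setQuery(build_genre_query(title))
    client.setReturnFormat(JSON)
    bindings = client.query().convert()["results"]["bindings"]
    return [binding["genre"]["value"] for binding in bindings]


query = build_genre_query('The "Hobbit"')
print(query)
```

Instead of joining tables, the query describes a graph pattern: every line inside WHERE is a triple that the ?book node must satisfy. An exact label match is strict, so a book whose DBpedia label differs from the Kaggle title returns nothing.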

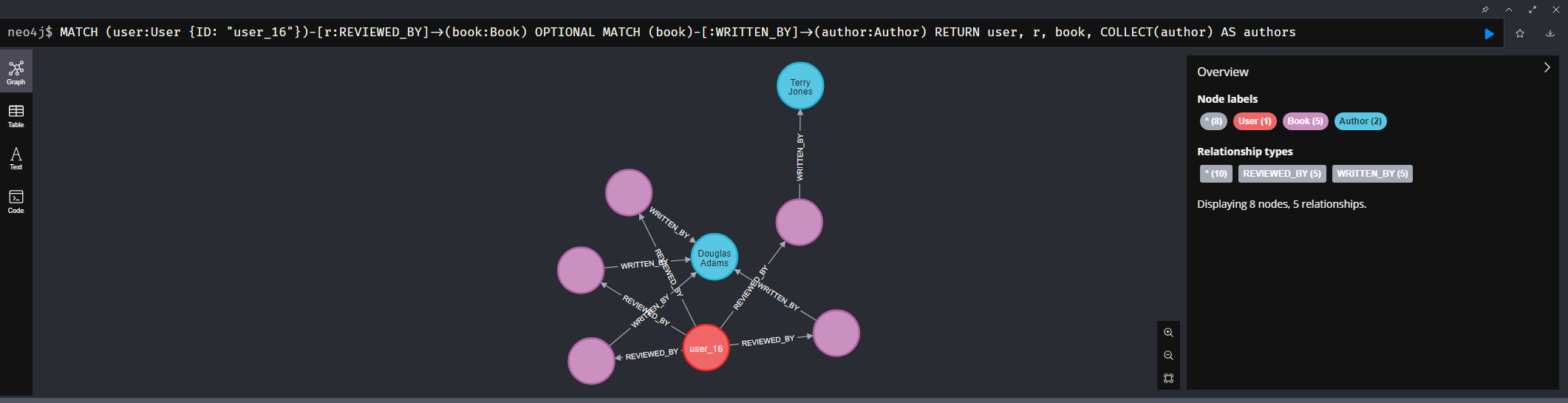

This graph is the first version of the knowledge graph. It contains only the data and nothing else. The next version contains the data enriched with the large language models and SPARQL.

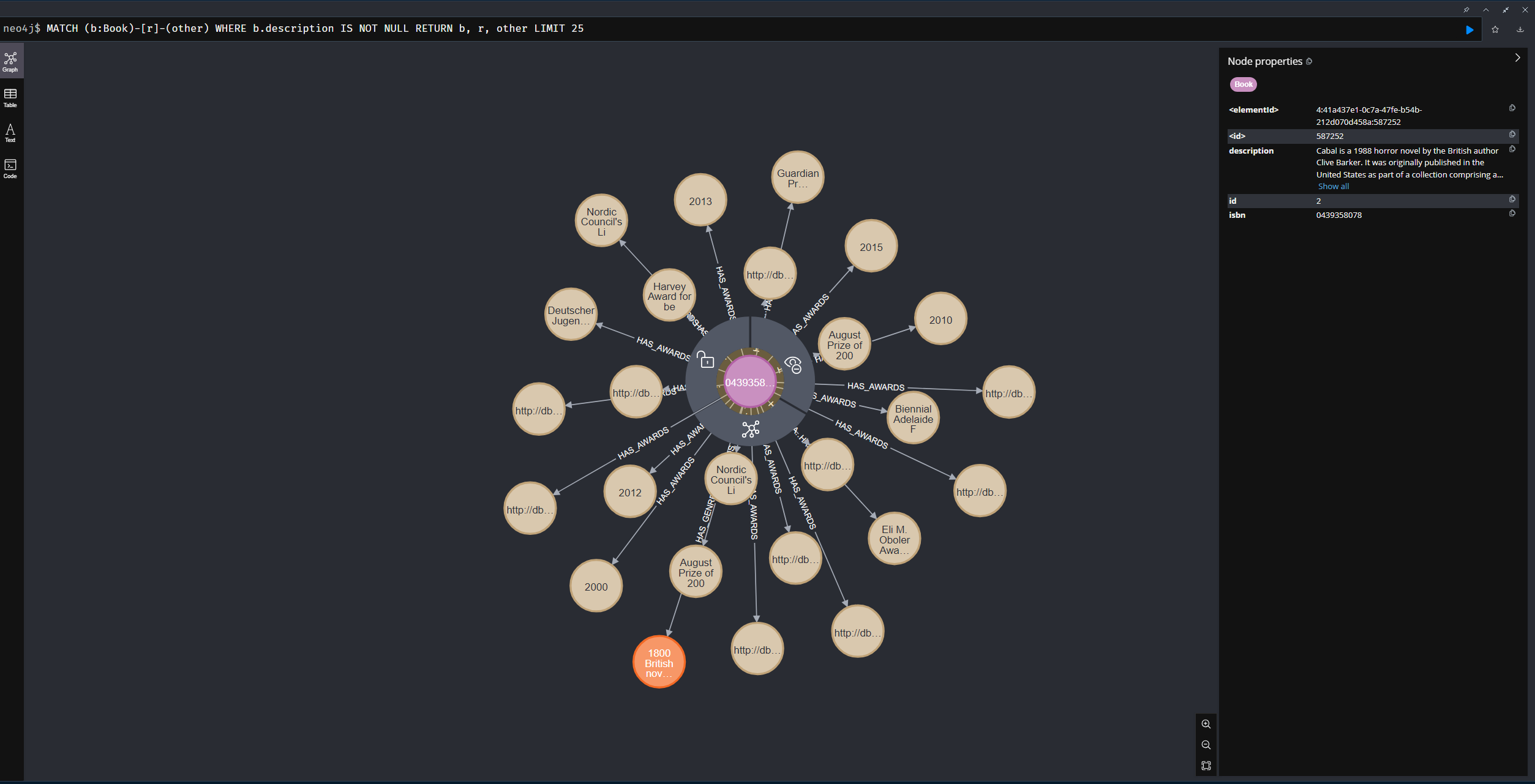

This graph is the second version of the knowledge graph. It was filled using APOC, and DBpedia data was added to enrich the graph with genres and awards.

In version 2, these are the labels.

Also in version 2, descriptions were added.

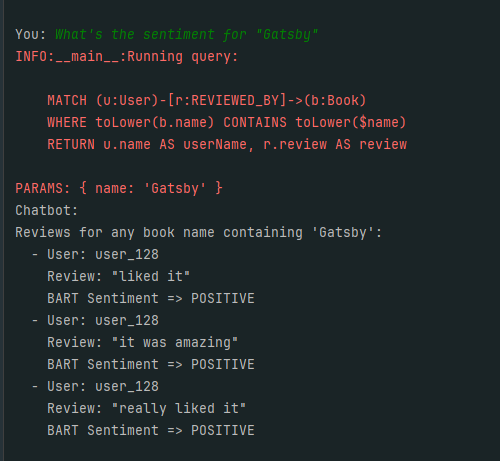

In version 3, the sentiment analysis was performed on the graph.

Also in this version, the sentiment analysis was performed using OpenAI and BART.

Generating the similarity analysis took the following time:

Therefore, the similarity analysis was performed on 1000 random books. It was done using OpenAI.
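The sampling step and a similarity comparison can be sketched as below. The README only states that 1000 random books and OpenAI were used, so everything else here is an assumption: the books are assumed to be represented by embedding vectors, cosine similarity is assumed as the measure, and all function names are hypothetical.

```python
import math
import random


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if one is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def most_similar(embeddings, book_id, top_k=3):
    """Return the `top_k` (book_id, score) pairs closest to the given book."""
    target = embeddings[book_id]
    scores = [
        (other, cosine_similarity(target, vector))
        for other, vector in embeddings.items()
        if other != book_id
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:top_k]


def sample_books(book_ids, k=1000, seed=None):
    """Pick up to `k` distinct book ids at random."""
    rng = random.Random(seed)
    ids = list(book_ids)
    return rng.sample(ids, min(k, len(ids)))


# Toy vectors standing in for embeddings returned by a model.
toy = {"a": [1.0, 0.0], "b": [0.9, 0.1], "c": [0.0, 1.0]}
print(most_similar(toy, "a", top_k=2))
```

Comparing every pair of books grows quadratically with the number of books, which is one reason a random sample keeps the runtime manageable.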

I would like to thank the following:

- My Family -> For supporting me during the project and putting up with me during its stressful times.

- Aydın Burak Kuşçu -> For trying the steps and giving feedback on missing content in the README.md file.

- Bahram Jannesarr -> For providing the dataset on Kaggle.