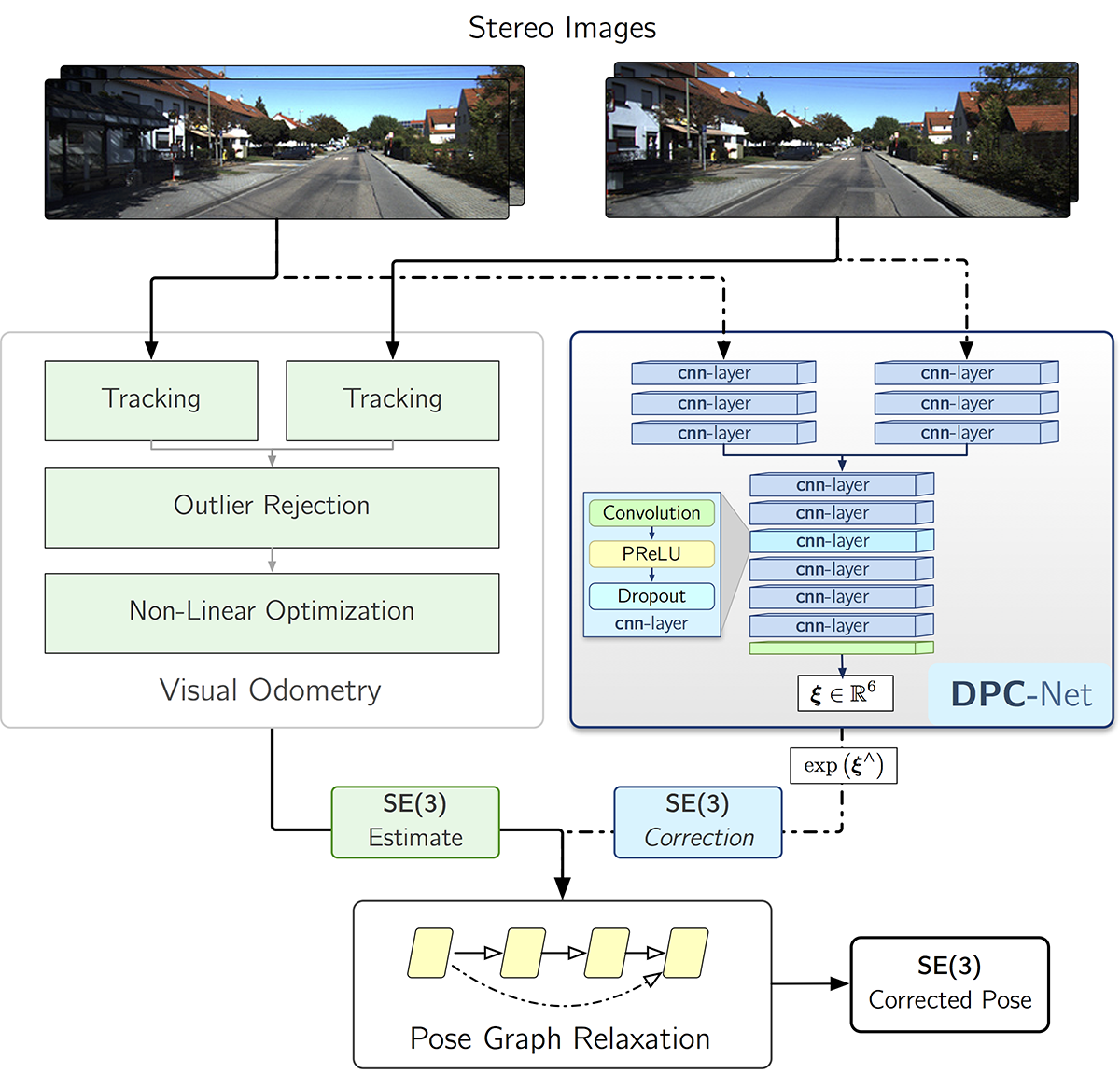

Code for Deep Pose Corrections. DPC-Net learns SE(3) corrections to classical geometric and probabilistic visual localization pipelines (e.g., visual odometry).
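As a rough illustration of what an SE(3) correction is, the sketch below (plain NumPy, not code from this repository) maps a 6-vector ξ to a 4×4 rigid-body transform with the SE(3) exponential map and left-multiplies it onto an estimated pose. The ordering of ξ (translation first, then rotation), the left-multiplication convention, and the names `se3_exp` and `apply_correction` are assumptions made for this example.

```python
import numpy as np


def skew(v):
    """Return the 3x3 skew-symmetric matrix of a 3-vector."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])


def se3_exp(xi):
    """Map xi = [rho (translation), phi (rotation)] to a 4x4 SE(3) matrix.

    The [rho, phi] ordering is an assumption for this sketch.
    """
    xi = np.asarray(xi, dtype=float)
    rho, phi = xi[:3], xi[3:]
    theta = np.linalg.norm(phi)
    Phi = skew(phi)
    if theta < 1e-8:
        # First-order approximations near the identity avoid dividing by ~0.
        R = np.eye(3) + Phi
        J = np.eye(3) + 0.5 * Phi
    else:
        a = np.sin(theta) / theta
        b = (1.0 - np.cos(theta)) / theta**2
        c = (theta - np.sin(theta)) / theta**3
        R = np.eye(3) + a * Phi + b * Phi @ Phi   # Rodrigues' formula
        J = np.eye(3) + b * Phi + c * Phi @ Phi   # left Jacobian of SO(3)
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = J @ rho
    return T


def apply_correction(xi, T_est):
    """Left-multiply an estimated 4x4 pose by the correction exp(xi) (assumed convention)."""
    return se3_exp(xi) @ T_est
```

A Lie group library such as liegroups provides this exponential map directly; the hand-written version only makes the math explicit.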

- Ensure that PyTorch is installed on your machine. We perform all training and testing on a GTX Titan X (Maxwell) with 12 GiB of memory.

- Install pyslam and liegroups. We use pyslam's `TrajectoryMetrics` class to store computed trajectories and to compute pose graph relaxations.

- Clone DPC-Net: `git clone https://github.com/utiasSTARS/dpc-net`

- Download the pre-trained models and stats for our sample estimator (based on libviso2) from <ftp://128.100.201.179/2017-dpc-net>.

- Open `test_dpc_net.py` and edit the appropriate variables (mostly paths).

- Run `test_dpc_net.py --seqs 00 --corr pose`.

Note that this code does not include the pose graph relaxation; as a result, the statistics it outputs are based on a simple correction model in which the in-between poses are left untouched.
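To make the idea of a simple correction model concrete, here is one hedged reading of it, not taken from `test_dpc_net.py`: frame-to-frame transforms are chained into absolute poses, a correction is applied only at the steps that have one, and every other step keeps its original estimate. The function name `chain_poses`, the dict-of-corrections input, and the composition convention T_{0,i+1} = T_{0,i} T_{i,i+1} are assumptions for illustration.

```python
import numpy as np


def chain_poses(rel_poses, corrections=None):
    """Chain 4x4 relative transforms T_{i,i+1} into absolute poses T_{0,i}.

    corrections: hypothetical dict mapping a step index i to a 4x4 correction
    that is left-multiplied onto T_{i,i+1}; steps without an entry keep
    their original estimate.
    """
    corrections = corrections or {}
    poses = [np.eye(4)]  # the first pose defines the reference frame
    for i, T_rel in enumerate(rel_poses):
        C = corrections.get(i, np.eye(4))
        poses.append(poses[-1] @ (C @ T_rel))
    return poses
```

Because nothing here redistributes a correction over neighbouring steps, its full effect lands on a single step; spreading it consistently across the trajectory is roughly what the omitted pose graph relaxation is meant to handle.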

To train DPC-Net, you need two things:

- A list of frame-to-frame corrections to a localization pipeline (typically computed from some form of ground truth).

- A set of images (mono or stereo, depending on whether the correction is SO(3) or SE(3), respectively) from which the model can learn corrections.
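A minimal sketch of how frame-to-frame correction targets could be computed from ground truth, assuming both the estimator and the ground truth provide absolute 4×4 poses and that a correction C is left-multiplied so that C · T_est = T_gt for each relative motion. The helper names are hypothetical and this is not the repository's `create_kitti_training_data.py`. If the model regresses corrections as 6-vectors, each C would then be mapped through the SE(3) logarithm.

```python
import numpy as np


def relative_pose(T_a, T_b):
    """Relative transform between absolute 4x4 poses: T_a^{-1} T_b."""
    return np.linalg.inv(T_a) @ T_b


def correction_targets(poses_gt, poses_est):
    """Return, for each consecutive pair of frames, the 4x4 correction C
    such that C @ T_est_rel equals T_gt_rel (assumed left-multiplied convention)."""
    if len(poses_gt) != len(poses_est):
        raise ValueError("ground-truth and estimated trajectories differ in length")
    targets = []
    for i in range(len(poses_gt) - 1):
        T_gt_rel = relative_pose(poses_gt[i], poses_gt[i + 1])
        T_est_rel = relative_pose(poses_est[i], poses_est[i + 1])
        targets.append(T_gt_rel @ np.linalg.inv(T_est_rel))
    return targets
```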

To train DPC-Net on the KITTI odometry benchmark, you can use the scripts `train_dpc_net.py` and `create_kitti_training_data.py` as starting points. If you use our framework, you will need to save your estimator's poses in a `TrajectoryMetrics` object.

If you use this code in your research, please cite:

    @article{2018_Peretroukhin_DPC,
      author = {Valentin Peretroukhin and Jonathan Kelly},
      doi = {10.1109/LRA.2017.2778765},
      journal = {{IEEE} Robotics and Automation Letters},
      link = {https://arxiv.org/abs/1709.03128},
      title = {{DPC-Net}: Deep Pose Correction for Visual Localization},
      year = {2018}
    }