Docker

Virtualization and deployment in software development

Authors: András Szabolcs Nagy, Zoltán Szatmári

Deploying multi-component applications is not trivial, since we have to take care of shared data, communication and the right environments. Repeating this deployment for each release, and even more so for each test execution, requires a systematic approach that ensures a consistent environment and comparable executions. Integration testing in particular requires frequent deployment in a reproducible way.

In this course, we will learn:

- what the main deployment techniques are,

- what operating-system-level (container-based) virtualization is,

- which OS-level virtualization technologies are currently available,

- how recent technologies can be used in software development,

- the Docker technology.

During this lab we focus on multi-component software deployments. The components may be deployed on the same or on different physical machines, virtual machines or container environments, and communication between them must be ensured.

There are three main approaches:

- Installing the components on the same or different hosts directly.

- Packaging the components into application container images and running the containers on one or multiple hosts.

- Packaging the components into virtual machine images and running these virtual machines.

During the deployment and testing phases, a reproducible environment is very important. We should ensure that the components run in the same environment (software stack) in development, in production and during the tests. Deploying a consistent environment is easier said than done: even if we use configuration management tools like Chef and Puppet, there are always OS updates and other things that change between hosts and environments.

When components are installed directly on the hosts, consistency and reproducibility cannot be guaranteed. Using a systematic method for building container images or virtual machine images provides stable, well-defined environments and reproducible executions.

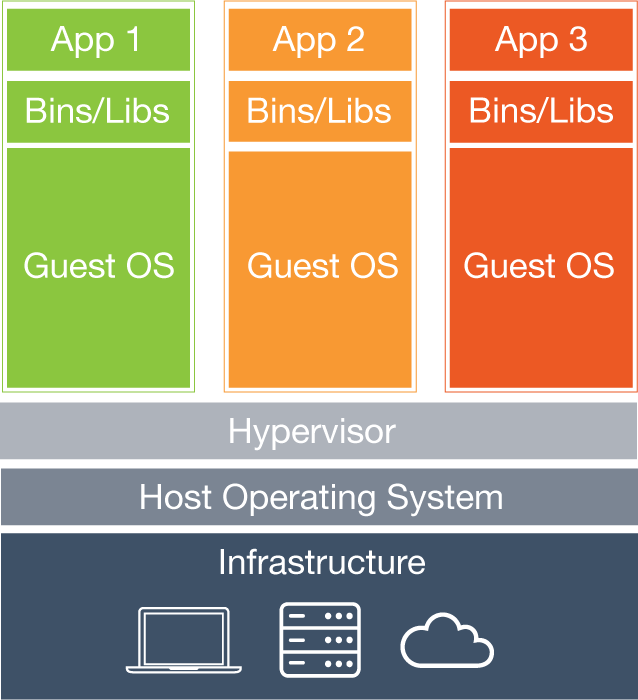

Using a virtualization method and building an image provides consistent environments. Virtualization methods can be categorized by how they present hardware to a guest operating system and emulate the guest operating environment. There are two main types of virtualization:

- Emulation and paravirtualization are based on some type of hypervisor, which either translates guest OS kernel code to software instructions or executes it directly on the bare-metal hardware. A virtual machine provides a computing environment with dedicated resources; its requests for CPU, memory, hard disk, network and other hardware resources are managed by a virtualization layer. A virtual machine has its own OS and full software stack. Popular examples include VMware Player, Oracle VirtualBox and DOSBox, as well as hypervisors (operating systems for running virtual machines) such as VMware ESXi and Hyper-V.

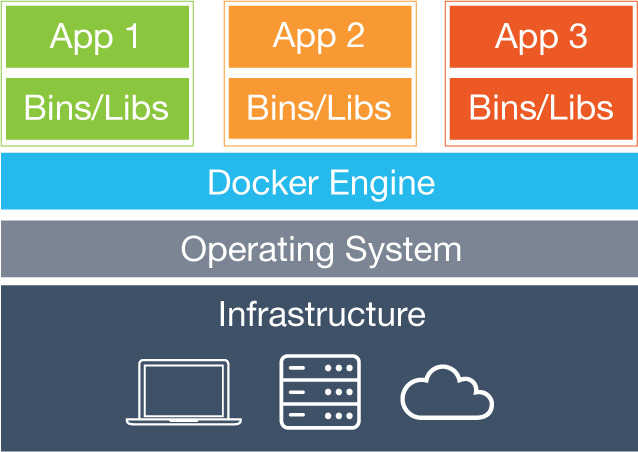

- Container-based virtualization, also known as operating-system-level virtualization, enables multiple isolated executions within a single operating system kernel. Because there is no guest kernel and no hardware emulation, it has very low overhead and allows high density, while featuring dynamic resource management. The isolated virtual execution environment provided by this type of virtualization is called a container and can be viewed as a well-defined group of processes. Containers use the same kernel as the host operating system, as shown in the figure. Applications can be installed and run in the same way as on a full operating system.

Starting from a base image (virtual machine or container image) the environment can be built up and saved as a new image in a consistent way.

There are many technologies that provide automatic virtual machine image construction (e.g. Vagrant, Packer) on different platforms (e.g. Amazon AWS, VMware, Xen). For container-based solutions, Docker is the de facto standard. During this lab we will rely on these lightweight Docker-based container solutions.

Advantages of container-based virtualization:

- Has minimal overhead.

- Uses less storage.

- As a consequence, scales well if dozens or hundreds of guests are needed.

- Updates and modifications to the host are instantly applied to the guests as well.

Disadvantages:

- The guest OS cannot differ from the host OS at the kernel level, not even in kernel version.

- A given OS-level virtualization technology is heavily OS-dependent.

Docker is an open-source OS-level virtualization technology, primarily for Linux systems, written in the Go programming language. Docker provides the ability to package and run an application in a loosely isolated environment called a container. It provides tooling and a platform to manage the lifecycle of your containers:

- Encapsulate your applications (and supporting components) into Docker containers.

- Distribute and ship those containers to your teams for further development and testing.

- Deploy those applications to your production environment, whether it is in a local data center or the cloud.

The core concepts of Docker are images and containers. In the following we highlight the most important parts of the Docker overview.

A Docker image is a read-only template with instructions for creating a Docker container. For example, an image might contain an Ubuntu operating system with an Apache web server and your web application installed. You can build or update images from scratch or download and use images created by others. An image may be based on, or may extend, one or more other images. A Docker image is described in a text file called a Dockerfile, which has a simple, well-defined syntax.

A Docker container is a runnable instance of a Docker image. You can run, start, stop, move, or delete a container using Docker API or CLI commands. When you run a container, you can provide configuration metadata such as networking information or environment variables.

An image is defined in a Dockerfile. Every image starts from a base image, e.g. from ubuntu, a base Ubuntu image. The Docker image is built from the base image using a simple, descriptive set of steps we call instructions, which are stored in a Dockerfile (e.g. RUN a command, ADD a file, ENTRYPOINT configures a container that will run as an executable).
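As a minimal sketch of such a Dockerfile, the shell snippet below writes one into a scratch directory. Everything specific in it is an assumption made for illustration and is not part of the lab material: the `hello-image` directory, the `greet.sh` script and its path inside the image.

```shell
# Create a scratch build context (the directory name is hypothetical).
mkdir -p hello-image

# A tiny script that the image will run (hypothetical example payload).
cat > hello-image/greet.sh <<'EOF'
#!/bin/sh
echo "Hello from a container, $1"
EOF
chmod +x hello-image/greet.sh

# The Dockerfile: one instruction per line, processed top to bottom at build time.
cat > hello-image/Dockerfile <<'EOF'
# Start from the base Ubuntu image
FROM ubuntu
# RUN executes a command while the image is being built
RUN mkdir -p /opt/app
# ADD copies a file from the build context into the image
ADD greet.sh /opt/app/greet.sh
# ENTRYPOINT makes the container behave like an executable
ENTRYPOINT ["/opt/app/greet.sh"]
# CMD supplies the default argument passed to the ENTRYPOINT
CMD ["world"]
EOF
```

On a machine with Docker installed, `docker build -t hello-image hello-image` should build the image and `docker run --rm hello-image` should run the script. Any arguments given after the image name replace the default from CMD.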

Images come to life with the docker run command, which creates a container by adding a read-write layer on top of the image. This combination of read-only layers topped with a read-write layer is known as a union file system. Changes exist only within an individual container instance. When a container is deleted, any changes are lost unless steps are taken to preserve them.
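The behaviour of the read-write layer can be observed with a few commands. The sketch below wraps them in a shell function so that nothing runs until you call it on a host with a running Docker daemon. The function name `layer_demo`, the container name `scratchpad` and the file `/marker.txt` are hypothetical choices.

```shell
# Demonstrates the container's read-write layer; requires a running Docker daemon.
layer_demo() {
    # Create a container from the ubuntu image and write a file inside it.
    docker run --name scratchpad ubuntu touch /marker.txt
    # List what the read-write layer changed relative to the image
    # (added entries are prefixed with A, changed ones with C).
    docker diff scratchpad
    # Deleting the container discards its read-write layer, and the file with it.
    docker rm scratchpad
    # A new container from the same image starts from the unchanged read-only
    # layers, so ls reports that /marker.txt does not exist.
    docker run --rm ubuntu ls /marker.txt
}
```

Calling `layer_demo` ends with a failing `ls`, which is the point of the exercise: the change lived only in the deleted container, not in the image.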

Instead of copying the tutorials available on the internet, we have collected them here and indicate which sections to read. To prepare for the lab, we advise you to read the following additional material:

- Docker overview

- Working with Docker Containers

- Build your own image

- Dockerfile reference (Read the Usage, Format, FROM, RUN, ADD, CMD, ENTRYPOINT sections)

- Docker run reference (Read at least the Detached vs. foreground, CMD (default command or options), ENV (environment variables), VOLUME (shared filesystems) and EXPOSE (incoming ports) sections)

- Also you may want to check the Best practices when writing a Dockerfile.

Installing Docker takes a few commands, but there is an official script that takes care of everything (it also upgrades Docker if it is already installed). It is recommended to download and execute it right away. You can check whether you have the latest Docker with the docker version command (17.09 as of October 2017).

Docker is also available for Windows and for Mac. It uses Hyper-V on Windows or xhyve on Mac to run a lightweight Linux virtual machine called Docker VM, which is specifically designed to run Docker. This adds overhead compared to running Docker natively on Linux, but the client integrates seamlessly into the host system. For more details, look at the documentation.

- Docker runs as a daemon in the background and uses the Linux kernel capabilities.

- Every command starts with the `docker` keyword.

- An image is a blueprint for starting a container.

- Multiple containers can run from the same image at the same time.

- `docker images` lists the available images.

- `docker ps [-a]` lists the containers and their status.

- You can `pull` images from the Docker Hub repository, e.g. `docker pull ubuntu` will download an image called ubuntu.

- `docker run [image-name]` will `create` and `start` a new container (also pulls the image if not present).

- A container will preserve the changes made to it if the container is stopped and started again.

- Images can be preconfigured and shared via a text file called Dockerfile.

- Images are built on top of each other, called layers.

- Each image and container has a unique hexadecimal ID.
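The commands in the summary above can be tried in one short session. The following is a sketch, again wrapped in a function so that it only runs when called on a host with a running Docker daemon. The function name `basics_demo`, the container name `sleeper` and the file `/tmp/note` are hypothetical.

```shell
# Walks through the basic image and container lifecycle commands.
basics_demo() {
    docker pull ubuntu                             # download the ubuntu image from Docker Hub
    docker images                                  # the image now appears in the local list
    docker run -d --name sleeper ubuntu sleep 300  # create and start a container in the background
    docker ps                                      # lists running containers only
    docker exec sleeper touch /tmp/note            # change something inside the running container
    docker stop -t 1 sleeper                       # stop it, waiting at most 1 second before killing
    docker ps -a                                   # -a also lists stopped containers
    docker start sleeper                           # start the same container again
    docker exec sleeper ls /tmp/note               # the file is still there after stop and start
    docker rm -f sleeper                           # clean up: force-remove the container
}
```

The file created with `docker exec` survives the stop and start because both operate on the same container. It would be gone only after `docker rm`.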

Docker images can be stored (push command) in Docker registries, from where they can be pulled (pull command) to the hosts.

Docker Hub provides a free registry service where you can share your images with others. It is worth exploring which images are publicly available. For example, there are images for a simple Ubuntu with only Java installed, or even complete LAMP servers. You can also check their Dockerfiles online and learn from them. Private images can be stored in a private Docker registry.

docker run --name db -e MYSQL_ROOT_PASSWORD=1234 -e MYSQL_DATABASE=wordpressdb -d mysql

docker run --name ws -e WORDPRESS_DB_PASSWORD=1234 -e WORDPRESS_DB_NAME=wordpressdb --link db:mysql -p 127.0.0.2:80:80 -d wordpress

docker inspect db

docker run --name dbadmin -e MYSQL_ROOT_PASSWORD=1234 -e PMA_HOST=<INSERT THE IP ADDRESS HERE> --link db -p 127.0.0.3:80:80 -d phpmyadmin/phpmyadmin

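The docker inspect db command prints the details of the db container, including the IP address to substitute for the placeholder in the last command. You can reach your WordPress website by typing the IP address 127.0.0.2 into your browser. Similarly, phpMyAdmin is reachable at 127.0.0.3.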

Starting a multi-component system requires multiple docker run executions with the proper parameters. This should not be done manually every time, because the Docker Compose tool can take it over. Docker Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a Compose file to configure your application’s services. Then, using a single command, you create and start all the services from your configuration.

Using the docker-compose.yml file you can define the services (containers to start). Each service is based on a Docker image or is built from a Dockerfile. Additionally you can define the previously mentioned parameters (like ports, volumes, environment variables) using that YAML configuration file and finally you can fire up all the services with just one command: docker-compose up.
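As a sketch, the db and ws containers from the docker run example earlier on this page could be described in a Compose file like the one written by the snippet below. The directory name `wordpress-stack` is hypothetical. The `WORDPRESS_DB_HOST` and `WORDPRESS_DB_USER` settings are assumptions added here to replace the `--link` option; they are not taken from the original commands.

```shell
# Create a project directory for the Compose file (the name is hypothetical).
mkdir -p wordpress-stack

cat > wordpress-stack/docker-compose.yml <<'EOF'
version: "3"
services:
  # Database service, equivalent to the "db" container
  db:
    image: mysql
    environment:
      MYSQL_ROOT_PASSWORD: "1234"
      MYSQL_DATABASE: wordpressdb
  # Web service, equivalent to the "ws" container
  ws:
    image: wordpress
    # Start the database container before this one
    depends_on:
      - db
    environment:
      # Assumption: services reach each other by service name on the Compose network
      WORDPRESS_DB_HOST: db
      # Assumption: connect as the MySQL root user, whose password is set above
      WORDPRESS_DB_USER: root
      WORDPRESS_DB_PASSWORD: "1234"
      WORDPRESS_DB_NAME: wordpressdb
    ports:
      - "127.0.0.2:80:80"
EOF
```

Running `docker-compose up -d` in that directory should start both services in the background, and `docker-compose down` stops and removes them again.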

For more details, please read the following guides:

- Get started with Docker Compose

- Compose file version 3 reference (image, environment, volumes, volume_driver, ports sections)

The following is taken from this post. It is worth reading at least the titles.

In short, the microservice architectural style is an approach to develop a single application as a suite of small services, each running in its own process and communicating with lightweight mechanisms, often an HTTP API. These services are built around business capabilities and independently deployable by fully automated deployment machinery. There is a bare minimum of centralized management of these services, which may be written in different programming languages and use different data storage technologies.

Advantages over a monolithic architecture

- Flexibility: services (components) are independently deployable, replaceable and upgradeable.

- Services organized around business capability. Consequently the teams are cross-functional, including the full range of skills required for the development (e.g. user-experience, database, and project management).

- Services are more like products rather than projects.

- Better fault tolerance if services are designed to tolerate the failure of other services.

Limitations, best practices

- If the components do not compose cleanly, then all you are doing is shifting complexity from inside a component to the connections between components.

- Remote calls are more expensive than in-process calls, and thus remote APIs need to be coarser-grained, which is often more awkward to use.

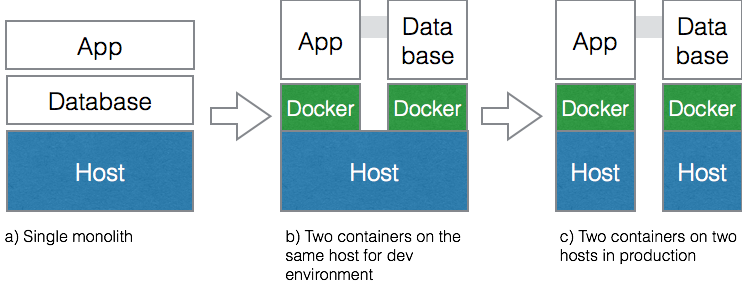

- You shouldn't start with a microservices architecture. Instead begin with a monolith, keep it modular, and split it into microservices once the monolith becomes a problem.

Fun quote from Melvin Conway, 1967: "Any organization that designs a system (defined broadly) will produce a design whose structure is a copy of the organization's communication structure."

Docker-like frameworks make a microservices architecture easy to deploy and maintain, and are useful in many areas, for example:

- Development environment: developers may only need to execute a docker command to get a full development environment of a project, or to get a test database up and running on their machine.

- Test environment for integration tests.

- Production environment: after the integration tests, the images can be deployed in the production environment with the same process.

In previous laboratories, you created a distributed version of the running example using Akka and Thrift. In this laboratory, you can choose either of these solutions to start with. The aim of the laboratory is to make that code deployable with Docker, that is, to write a proper Dockerfile. You may find this Docker cheat sheet useful.

The solution that students submit after the session has to meet the following criteria:

- Provide Dockerfile(s) that can be used to build Docker images for your components.

- Use as many publicly available components as you can and just add your services to the images (e.g. don't install the JVM, but use an image with a preinstalled JVM).

- Your solution should use at least two components (containers) and meet the Microservice Architecture principles.

- Comment your Dockerfile(s).

- Provide documentation on how to build and run your Docker image(s).

- Service definitions are also described using docker-compose. This exercise should be at least started, if not completed.

You can additionally make the configuration parameterizable with any input (string, text file) or make it usable by running it in detached mode for extra points.

Spoon is similar to Docker but is native to Windows. It is very different in the details (as Unix and Windows kernels are different) and is not open-source, although it also has free services, such as an image registry (similar to Docker Hub). If you are interested, check this out.

VMware ThinApp is also an OS level virtualization technology for Windows but it's a fully commercial product and has no free solution/service.

Microsoft also has its own technology called Microsoft App-V.

Just to name a few: FreeBSD jail, LXC (Docker used to build on this technology), Linux-VServer, OpenVZ, Solaris Containers.

Vagrant offers services very similar to those you have learnt in Docker, but it works with platform-level virtualization (by default with VirtualBox, but it can work with VMware too with commercial plugins).

- What is the difference between the platform and OS level virtualization?

- In what language is Docker written?

- What is the difference between a container and an image?

- List 3 Docker commands and explain what they do.

- What is a Dockerfile?

- What is the purpose of the ADD and EXPOSE instructions?

- What is Docker Hub?

- What is a layer?

- What are microservices?

- Where can you use Docker effectively?